
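5. Persistent storage

In k8s, the replication controller guarantees that pods keep running, but it cannot guarantee the data inside them. Whenever a replacement pod is started, the data in the previous pod is lost when its container is deleted!

PV and PVC concepts:

PersistentVolume (PV for short): a description of a piece of storage, added by an administrator. It is a cluster-wide resource that records the storage type, the storage size, the access modes and so on. Its lifecycle is independent of any Pod: for example, destroying a Pod that uses it has no effect on the PV.

PersistentVolumeClaim (PVC for short): a namespaced resource that describes a request for a PV. The request includes the storage size, the access modes and so on.

#1. Install nfs-utils on all nodes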

[root@k8s-master ~]# yum install -y nfs-utils

#2. Configure the NFS server on the master

[root@k8s-master ~]# yum install -y rpcbind

[root@k8s-master ~]# vim /etc/exports

[root@k8s-master ~]# systemctl restart nfs

[root@k8s-master ~]# cat /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

[root@k8s-master ~]#
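The export line above only admits clients in 10.0.0.0/24, so a node whose address falls outside that range will not be allowed to mount /data. A minimal sketch for checking an address against the export range in plain shell; `ip_to_int` and `in_cidr` are hypothetical helper names introduced here, not part of nfs-utils:

```shell
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  # Split on dots inside a subshell so the IFS change does not leak out.
  (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  )
}

# Hypothetical helper: succeed if address $1 lies inside CIDR block $2.
in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The node addresses used in this walkthrough are inside the exported range.
in_cidr 10.0.0.12 10.0.0.0/24 && echo "10.0.0.12 may mount /data"
```

The check is only a model of the client match in /etc/exports; the NFS server itself remains the authority on who may mount.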

Create the export directory

[root@k8s-master volume]# mkdir -p /data/k8s

#3. Check the NFS export from the nodes

[root@k8s-node1 ~]# showmount -e 10.0.0.11

Export list for 10.0.0.11:

/data 10.0.0.0/24

[root@k8s-node2 ~]# showmount -e 10.0.0.11

Export list for 10.0.0.11:

/data 10.0.0.0/24

[root@k8s-node2 ~]#

# Start the NFS services

On all nodes: systemctl start nfs

On the master node:

systemctl start rpcbind

#4. Create the PVs

##4.1 Prepare the yml

[root@k8s-master volume]# cat test-py.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: test
  labels:
    type: test
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: "/data/k8s"
    server: 10.0.0.11
    readOnly: false

[root@k8s-master volume]# cat test-py2.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: test2
  labels:
    type: test
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: "/data/k8s"
    server: 10.0.0.11
    readOnly: false

##4.2 Create the PVs

[root@k8s-master volume]# kubectl create -f test-py.yml

persistentvolume "test" created

[root@k8s-master volume]# kubectl create -f test-py2.yml

persistentvolume "test2" created

##4.3 Check the PVs

[root@k8s-master volume]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

test 10Gi RWX Recycle Available 2m

test2 5Gi RWX Recycle Available 1m

[root@k8s-master volume]#

#5. Create the PVC

##5.1 Prepare the yml

[root@k8s-master volume]# cat test-pvc.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

##5.2 Create the PVC

[root@k8s-master volume]# kubectl create -f test-pvc.yml

persistentvolumeclaim "nfs" created

[root@k8s-master volume]#

##5.3 Check: the claim is bound to PV test2, i.e. a PV with enough capacity for the request was chosen

[root@k8s-master volume]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

nfs Bound test2 5Gi RWX 30s

[root@k8s-master volume]#
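The 1Gi claim landed on the 5Gi PV test2 rather than the 10Gi PV test, which is consistent with the binder preferring the smallest volume that still covers the request. A rough sketch of that capacity rule in shell; `pick_pv` is a hypothetical helper, and it deliberately ignores access modes, labels and storage classes, which the real binder also considers:

```shell
# Hypothetical model of capacity matching: print the name of the smallest PV
# (passed as name:sizeGi arguments) whose capacity covers the request in Gi.
pick_pv() {
  request=$1
  shift
  best_name=""
  best_size=0
  for pv in "$@"; do
    name=${pv%%:*}
    size=${pv##*:}
    if [ "$size" -ge "$request" ]; then
      # Keep this PV if it is the first match or smaller than the best so far.
      if [ -z "$best_name" ] || [ "$size" -lt "$best_size" ]; then
        best_name=$name
        best_size=$size
      fi
    fi
  done
  [ -n "$best_name" ] && echo "$best_name"
}

# The 1Gi claim from test-pvc.yml against the two PVs created above.
pick_pv 1 test:10 test2:5
```

With the values from this walkthrough the sketch prints test2, matching the VOLUME column of kubectl get pvc.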

#1. Delete the resources created earlier

[root@k8s-master volume]# kubectl get all

NAME REFERENCE TARGET CURRENT MINPODS MAXPODS AGE

hpa/myweb ReplicationController/myweb 10% 0% 1 8 1h

NAME DESIRED CURRENT READY AGE

rc/myweb 1 1 1 1h

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 23h

NAME READY STATUS RESTARTS AGE

po/myweb-9khsv 1/1 Running 0 1h

[root@k8s-master volume]# kubectl delete hpa myweb

horizontalpodautoscaler "myweb" deleted

[root@k8s-master volume]# kubectl delete rc myweb

replicationcontroller "myweb" deleted

[root@k8s-master volume]# kubectl get all

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 23h

[root@k8s-master volume]#

#2. Make a fresh copy of the tomcat-mysql experiment and create the Pods

Because a DNS service is available in the cluster, tomcat-rc can use the svc name directly: name: MYSQL_SERVICE_HOST value: 'mysql'.

[root@k8s-master k8s]# cp -r tomcat_demo tomcat_demo2

[root@k8s-master k8s]# cd tomcat_demo2/

[root@k8s-master tomcat_demo2]# ll

total 16

-rw-r--r-- 1 root root 416 Aug 29 13:42 mysql-rc.yml

-rw-r--r-- 1 root root 145 Aug 29 13:42 mysql-svc.yml

-rw-r--r-- 1 root root 483 Aug 29 13:42 tomcat-rc.yml

-rw-r--r-- 1 root root 162 Aug 29 13:42 tomcat-svc.yml

[root@k8s-master tomcat_demo2]# vim tomcat-rc.yml

[root@k8s-master tomcat_demo2]# kubectl create -f .

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "myweb" created

[root@k8s-master tomcat_demo2]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 7s

rc/myweb 1 1 1 6s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 23h

svc/mysql 10.254.81.140 <none> 3306/TCP 7s

svc/myweb 10.254.206.74 <nodes> 8080:30008/TCP 6s

NAME READY STATUS RESTARTS AGE

po/mysql-nq9r3 1/1 Running 0 7s

po/myweb-n8h93 1/1 Running 0 6s

[root@k8s-master tomcat_demo2]#

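# Without persistence, deleting the Pod loses the data, because the pod is recreated from scratch

# In this project only the database holds data, so only mysql is made persistent

#3. Create the PV and PVC

##3.1 Prepare the yml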

[root@k8s-master tomcat_demo2]# mkdir -p /data/mysql

[root@k8s-master tomcat_demo2]# cat mysql-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql
  labels:
    type: mysql
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: "/data/mysql"
    server: 10.0.0.11
    readOnly: false

[root@k8s-master tomcat_demo2]

[root@k8s-master tomcat_demo2]# cat mysql-pvc.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mysql
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 9Gi

##3.2 Create the PV and PVC

[root@k8s-master tomcat_demo2]# kubectl create -f mysql-pv.yml

persistentvolume "mysql" created

[root@k8s-master tomcat_demo2]# kubectl create -f mysql-pvc.yml

persistentvolumeclaim "mysql" created

Make sure the mysql PVC is bound to the mysql PV

[root@k8s-master tomcat_demo2]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

mysql 10Gi RWX Recycle Bound default/mysql 26m

test2 5Gi RWX Recycle Bound default/nfs 46m

[root@k8s-master tomcat_demo2]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

mysql Bound mysql 10Gi RWX 6m

nfs Bound test2 5Gi RWX 42m

[root@k8s-master tomcat_demo2]#

##3.3 Modify the MySQL rc.yml file

[root@k8s-master tomcat_demo2]# cp mysql-rc.yml mysql-rc-pvc.yml

[root@k8s-master tomcat_demo2]# cat mysql-rc-pvc.yml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: 10.0.0.11:5000/mysql:5.7
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: mysql

[root@k8s-master tomcat_demo2]#

##3.4 Apply

[root@k8s-master tomcat_demo2]# kubectl apply -f mysql-rc-pvc.yml

replicationcontroller "mysql" configured

##3.5 Delete the existing pod; the automatically recreated mysql pod will use the PVC

[root@k8s-master tomcat_demo2]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/mysql 1 1 1 15m

rc/myweb 1 1 1 15m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 23h

svc/mysql 10.254.81.140 <none> 3306/TCP 15m

svc/myweb 10.254.206.74 <nodes> 8080:30008/TCP 15m

NAME READY STATUS RESTARTS AGE

po/mysql-nq9r3 1/1 Running 0 15m

po/myweb-n8h93 1/1 Running 0 15m

[root@k8s-master tomcat_demo2]# kubectl delete pod mysql-nq9r3

pod "mysql-nq9r3" deleted

[root@k8s-master tomcat_demo2]#

[root@k8s-master tomcat_demo2]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 11m mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 36m myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/mysql 10.254.81.140 <none> 3306/TCP 36m app=mysql

svc/myweb 10.254.206.74 <nodes> 8080:30008/TCP 36m app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-tr6bc 1/1 Running 0 5m 172.16.48.3 k8s-node2

po/myweb-n8h93 1/1 Running 0 36m 172.16.79.2 k8s-node1

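##3.6 On the master, check whether /data/mysql has content

## Note: if you see no data here, there are a couple of likely causes

1. Your mysql PVC is not bound to the mysql PV

2. The NFS services are not running properly: the master must run nfs and rpcbind, the nodes must run nfs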

[root@k8s-master tomcat_demo2]# ll /data/mysql

total 188484

-rw-r----- 1 polkitd ssh_keys 56 Aug 29 14:14 auto.cnf

-rw------- 1 polkitd ssh_keys 1675 Aug 29 14:14 ca-key.pem

-rw-r--r-- 1 polkitd ssh_keys 1107 Aug 29 14:14 ca.pem

-rw-r--r-- 1 polkitd ssh_keys 1107 Aug 29 14:14 client-cert.pem

-rw------- 1 polkitd ssh_keys 1679 Aug 29 14:14 client-key.pem

drwxr-x--- 2 polkitd ssh_keys 58 Aug 29 14:14 HPE_APP

-rw-r----- 1 polkitd ssh_keys 699 Aug 29 14:20 ib_buffer_pool

-rw-r----- 1 polkitd ssh_keys 79691776 Aug 29 14:20 ibdata1

-rw-r----- 1 polkitd ssh_keys 50331648 Aug 29 14:20 ib_logfile0

-rw-r----- 1 polkitd ssh_keys 50331648 Aug 29 14:14 ib_logfile1

-rw-r----- 1 polkitd ssh_keys 12582912 Aug 29 14:20 ibtmp1

drwxr-x--- 2 polkitd ssh_keys 4096 Aug 29 14:14 mysql

drwxr-x--- 2 polkitd ssh_keys 8192 Aug 29 14:14 performance_schema

-rw------- 1 polkitd ssh_keys 1675 Aug 29 14:14 private_key.pem

-rw-r--r-- 1 polkitd ssh_keys 451 Aug 29 14:14 public_key.pem

-rw-r--r-- 1 polkitd ssh_keys 1107 Aug 29 14:14 server-cert.pem

-rw------- 1 polkitd ssh_keys 1675 Aug 29 14:14 server-key.pem

drwxr-x--- 2 polkitd ssh_keys 8192 Aug 29 14:14 sys

[root@k8s-master tomcat_demo2]#

##3.7 MySQL is running on node2, so check the mount on node2

[root@k8s-node2 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

10.0.0.11:/data/mysql 98G 12G 87G 12% /var/lib/kubelet/pods/4539b360-ca24-11e9-90d0-000c29e15b21/volumes/kubernetes.io~nfs/mysql

overlay 98G 3.4G 95G 4% /var/lib/docker/overlay2/5e84e1093fab4dce4ce314f8ce6cfbfb35e3af6428be837a2c40f617ab627561/merged

shm 64M 0 64M 0% /var/lib/docker/containers/3324fee122006e54388c132976752343824fb7a91a438a0c5344e5fcdc0e917e/shm

overlay 98G 3.4G 95G 4% /var/lib/docker/overlay2/0be4a6ee94804a6d0b8ff5f90874a11ec25ef02bdd6e878f901d5c671be75530/merged

[root@k8s-node2 ~]#
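The NFS mount point in the df output encodes which pod owns the mount: the path contains the pod UID, the volume plugin, and a final component that here matches the PV name mysql. A small sketch that splits such a path with shell parameter expansion; `parse_kubelet_mount` is a hypothetical helper, and the path layout is assumed from the single example above:

```shell
# Hypothetical helper: split a kubelet volume mount path into its parts.
# Assumed layout: /var/lib/kubelet/pods/<pod-uid>/volumes/<plugin>/<volume>
parse_kubelet_mount() {
  path=$1
  rest=${path#/var/lib/kubelet/pods/}      # drop the fixed prefix
  pod_uid=${rest%%/*}                      # first component is the pod UID
  plugin_and_name=${rest#*/volumes/}       # drop everything up to "volumes/"
  plugin=${plugin_and_name%%/*}            # e.g. kubernetes.io~nfs
  volume=${plugin_and_name#*/}             # last component
  echo "pod_uid=$pod_uid plugin=$plugin volume=$volume"
}

parse_kubelet_mount "/var/lib/kubelet/pods/4539b360-ca24-11e9-90d0-000c29e15b21/volumes/kubernetes.io~nfs/mysql"
```

The printed pod UID can then be compared with the UID of the mysql pod to confirm that the mount belongs to it.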

##3.8 Test: delete the Pod, and the newly added data is still there

[root@k8s-master tomcat_demo2]# kubectl delete pod mysql-wz4ds

pod "mysql-wz4ds" deleted

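NFS is awkward to scale and manage, so production environments more often use glusterfs

Glusterfs is an open-source distributed file system with strong scale-out capability. It can support several PB of storage and thousands of clients, joined over the network into one parallel network file system.

It offers scalability, high performance and high availability.

#1. Install glusterfs

On all nodes:

yum install centos-release-gluster -y

yum install glusterfs-server -y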

systemctl start glusterd.service

systemctl enable glusterd.service

mkdir -p /gfs/test1

mkdir -p /gfs/test2

#2. Add the nodes to the storage pool from the master

detach removes a node

probe adds a node

[root@k8s-master tomcat_demo2]# gluster pool list

UUID Hostname State

e3966f16-295f-4dd4-99db-0facec4ad990 localhost Connected

[root@k8s-master tomcat_demo2]# gluster peer probe k8s-node1

peer probe: success.

[root@k8s-master tomcat_demo2]# gluster peer probe k8s-node2

peer probe: success.

[root@k8s-master tomcat_demo2]# gluster pool list

UUID Hostname State

04afc91b-5c3d-4a07-a86f-e59288ae7ed2 k8s-node1 Connected

234ac958-4da1-4ae1-ab72-df825026465d k8s-node2 Connected

e3966f16-295f-4dd4-99db-0facec4ad990 localhost Connected

[root@k8s-master tomcat_demo2]#

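#3. glusterfs volume management

##3.1 Create a distributed-replicated volume (the most widely used and most stable type)

Because this is a distributed-replicated volume with replica 2, at least 4 directories (bricks) are needed, so two each on master and node1 are used

force is needed because /gfs/test1 lives on the root partition; bricks are safer on a separate partition, so creation fails without force. Here I simply forced it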

[root@k8s-master tomcat_demo2]#gluster volume create qiangge replica 2 k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2 force
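Gluster forms replica sets from consecutive bricks in the order they are listed, so the brick order in the create command decides where the copies end up. A short sketch that prints the grouping for a given replica count; `replica_sets` is a hypothetical helper that only models the grouping and does not talk to gluster:

```shell
# Hypothetical helper: group bricks into replica sets, taking them
# in command-line order, $1 bricks per set.
replica_sets() {
  replica=$1
  shift
  set_no=1
  line=""
  count=0
  for brick in "$@"; do
    line="$line $brick"
    count=$((count + 1))
    if [ "$count" -eq "$replica" ]; then
      echo "set $set_no:$line"
      set_no=$((set_no + 1))
      line=""
      count=0
    fi
  done
}

# Brick order used in the create command above.
replica_sets 2 k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2
```

With this order both copies of a file sit on the same host (test1 and test2 of one node), which matches the tree output later in this walkthrough. Alternating hosts in the brick list, for example k8s-master:/gfs/test1 k8s-node1:/gfs/test1, would spread each replica pair across two machines.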

##3.2 Start the volume

[root@k8s-master tomcat_demo2]# gluster volume start qiangge

volume start: qiangge: success

##3.3 View the volume

[root@k8s-master tomcat_demo2]# gluster volume info qiangge

Volume Name: qiangge

Type: Distributed-Replicate

Volume ID: 4ab2f3fb-ffcb-4213-8455-2f9ddf7b443a

Status: Started

Snapshot Count: 0

Number of Bricks: 2 x 2 = 4

Transport-type: tcp

Bricks:

Brick1: k8s-master:/gfs/test1

Brick2: k8s-master:/gfs/test2

Brick3: k8s-node1:/gfs/test1

Brick4: k8s-node1:/gfs/test2

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@k8s-master tomcat_demo2]#

##3.4 Mount the volume

mount -t glusterfs

10.0.0.11: any node in the pool

/qiangge: the volume to mount

/mnt: the mount point

[root@k8s-master tomcat_demo2]# mount -t glusterfs 10.0.0.11:/qiangge /mnt

The capacity is 98G

[root@k8s-master tomcat_demo2]# df -h

10.0.0.11:/qiangge 98G 12G 87G 12% /mnt

##3.5 Expand the distributed-replicated volume

[root@k8s-master tomcat_demo2]# gluster volume add-brick qiangge k8s-node2:/gfs/test1 k8s-node2:/gfs/test2 force

volume add-brick: success

##3.6 View the volume after expansion

[root@k8s-master tomcat_demo2]# gluster volume info qiangge

Volume Name: qiangge

Type: Distributed-Replicate

Volume ID: 4ab2f3fb-ffcb-4213-8455-2f9ddf7b443a

Status: Started

Snapshot Count: 0

Number of Bricks: 3 x 2 = 6

Transport-type: tcp

Bricks:

Brick1: k8s-master:/gfs/test1

Brick2: k8s-master:/gfs/test2

Brick3: k8s-node1:/gfs/test1

Brick4: k8s-node1:/gfs/test2

Brick5: k8s-node2:/gfs/test1

Brick6: k8s-node2:/gfs/test2

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@k8s-master tomcat_demo2]#

The capacity has grown to 147G

[root@k8s-master tomcat_demo2]# df -h

10.0.0.11:/qiangge 147G 14G 134G 10% /mnt

##3.7 Write data into the qiangge volume

[root@k8s-master tomcat_demo2]# cd /mnt/

[root@k8s-master mnt]# cp /opt/k8s/jenkins-k8s/xiaoniaofeifei.zip .

[root@k8s-master mnt]# ll

total 89

-rw-r--r-- 1 root root 91014 Aug 29 15:02 xiaoniaofeifei.zip

[root@k8s-master mnt]# unzip xiaoniaofeifei.zip

Archive: xiaoniaofeifei.zip

inflating: sound1.mp3

creating: img/

inflating: img/bg1.jpg

inflating: img/bg2.jpg

inflating: img/number1.png

inflating: img/number2.png

inflating: img/s1.png

inflating: img/s2.png

inflating: 21.js

inflating: 2000.png

inflating: icon.png

inflating: index.html

# View on the master node

[root@k8s-master mnt]# tree /gfs/

/gfs/
├── test1
│   ├── 2000.png
│   ├── 21.js
│   ├── icon.png
│   ├── img
│   │   ├── number1.png
│   │   ├── number2.png
│   │   └── s1.png
│   ├── index.html
│   ├── sound1.mp3
│   └── xiaoniaofeifei.zip
└── test2
    ├── 2000.png
    ├── 21.js
    ├── icon.png
    ├── img
    │   ├── number1.png
    │   ├── number2.png
    │   └── s1.png
    ├── index.html
    ├── sound1.mp3
    └── xiaoniaofeifei.zip

4 directories, 18 files

[root@k8s-master mnt]# ^C

[root@k8s-master mnt]#

# View on node1

[root@k8s-node1 ~]# tree /gfs/

/gfs/
├── test1
│   └── img
│       ├── bg1.jpg
│       └── s2.png
└── test2
    └── img
        ├── bg1.jpg
        └── s2.png

4 directories, 4 files

[root@k8s-node1 ~]#

# View on node2

[root@k8s-node2 ~]# tree /gfs/

/gfs/
├── test1
│   └── img
│       └── bg2.jpg
└── test2
    └── img
        └── bg2.jpg

4 directories, 2 files

[root@k8s-node2 ~]#

#1. Create the endpoint

[root@k8s-master glusterfs]# vi glusterfs-ep.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs
  namespace: default
subsets:
  - addresses:
      - ip: 10.0.0.11
      - ip: 10.0.0.12
      - ip: 10.0.0.13
    ports:
      - port: 49152
        protocol: TCP

[root@k8s-master glusterfs]# kubectl create -f glusterfs-ep.yaml

endpoints "glusterfs" created

[root@k8s-master glusterfs]# kubectl get ep

NAME ENDPOINTS AGE

glusterfs 10.0.0.11:49152,10.0.0.12:49152,10.0.0.13:49152 12s

kubernetes 10.0.0.11:6443 1d

mysql 172.16.48.3:3306 1h

myweb 172.16.79.2:8080 1h

[root@k8s-master glusterfs]#

#2. Create the service

The service and the endpoint are associated by name, so the two names must be identical

[root@k8s-master glusterfs]# cat glusterfs-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: glusterfs
  namespace: default
spec:
  ports:
    - port: 49152
      protocol: TCP
      targetPort: 49152
  sessionAffinity: None
  type: ClusterIP

[root@k8s-master glusterfs]#

[root@k8s-master glusterfs]# kubectl create -f glusterfs-svc.yaml

service "glusterfs" created

[root@k8s-master glusterfs]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

glusterfs 10.254.192.201 <none> 49152/TCP 9s

kubernetes 10.254.0.1 <none> 443/TCP 1d

mysql 10.254.81.140 <none> 3306/TCP 1h

myweb 10.254.206.74 <nodes> 8080:30008/TCP 1h

[root@k8s-master glusterfs]#

#3. Check that the service and the endpoint are associated

[root@k8s-master glusterfs]# kubectl describe svc glusterfs

Name: glusterfs

Namespace: default

Labels: <none>

Selector: <none>

Type: ClusterIP

IP: 10.254.192.201

Port: <unset> 49152/TCP

Endpoints: 10.0.0.11:49152,10.0.0.12:49152,10.0.0.13:49152

Session Affinity: None

No events.

[root@k8s-master glusterfs]#

#4. Create a gluster-type PV

path: "qiangge" is the name of the volume that was created when glusterfs was set up

[root@k8s-master glusterfs]# cat gluster-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster
  labels:
    type: glusterfs
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteMany
  glusterfs:
    endpoints: "glusterfs"
    path: "qiangge"
    readOnly: false

[root@k8s-master glusterfs]#

[root@k8s-master glusterfs]# kubectl create -f gluster-pv.yml

persistentvolume "gluster" created

[root@k8s-master glusterfs]# kubectl get pv

NAME CAPACITY ACCESSMODES RECLAIMPOLICY STATUS CLAIM REASON AGE

gluster 50Gi RWX Retain Available 10s

mysql 10Gi RWX Recycle Bound default/mysql 1h

test2 5Gi RWX Recycle Bound default/nfs 1h

[root@k8s-master glusterfs]#

#5. Create the PVC

[root@k8s-master glusterfs]# cat gluster-pvc.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: gluster
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 50Gi

[root@k8s-master glusterfs]# kubectl create -f gluster-pvc.yml

persistentvolumeclaim "gluster" created

[root@k8s-master glusterfs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

gluster Bound gluster 50Gi RWX 7s

mysql Bound mysql 10Gi RWX 1h

nfs Bound test2 5Gi RWX 1h

[root@k8s-master glusterfs]#

#6. Create the pod

[root@k8s-master glusterfs]# cat nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
          hostPort: 80
      volumeMounts:
        - name: nfs-vol2
          mountPath: /usr/share/nginx/html
  volumes:
    - name: nfs-vol2
      persistentVolumeClaim:
        claimName: gluster

[root@k8s-master glusterfs]#

[root@k8s-master glusterfs]# kubectl create -f nginx_pod.yaml

pod "nginx" created

# Check: the pod is running on node2

[root@k8s-master glusterfs]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 1h mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 1h myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/glusterfs 10.254.192.201 <none> 49152/TCP 26m <none>

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/mysql 10.254.81.140 <none> 3306/TCP 1h app=mysql

svc/myweb 10.254.206.74 <nodes> 8080:30008/TCP 1h app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-snhd1 1/1 Running 1 1h 172.16.48.3 k8s-node2

po/myweb-n8h93 1/1 Running 0 1h 172.16.79.2 k8s-node1

po/nginx 1/1 Running 0 1m 172.16.48.2 k8s-node2

GitLab needs at least 2 GB of memory, otherwise it becomes so slow that pages return 502!

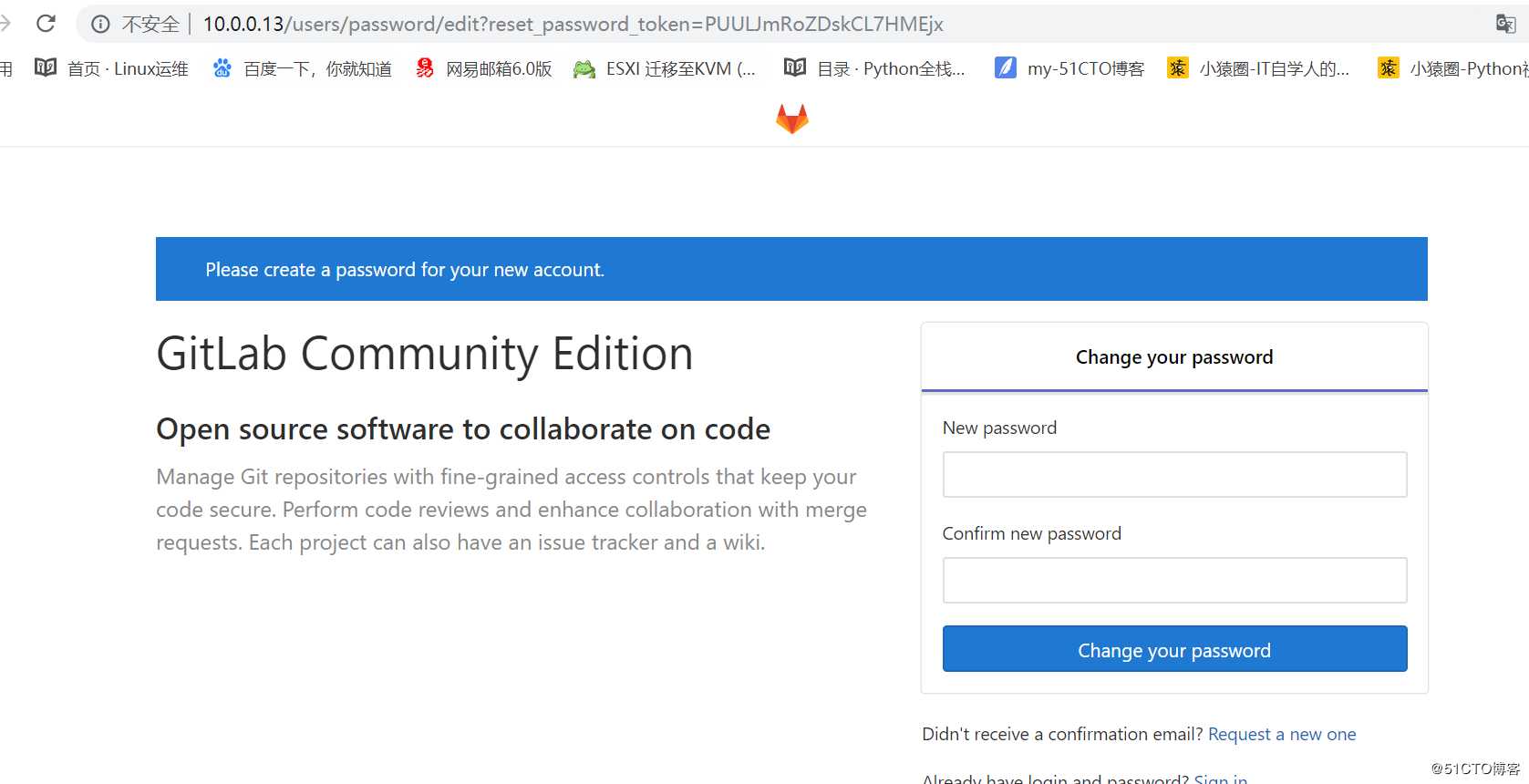

#1. Install gitlab on node2

[root@k8s-node2 jenkins-k8s]#wget https://mirror.tuna.tsinghua.edu.cn/gitlab-ce/yum/el7/gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm

[root@k8s-node2 jenkins-k8s]#yum localinstall gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm -y

Configure gitlab

[root@k8s-node2 jenkins-k8s]# vim /etc/gitlab/gitlab.rb

Line 13: external_url 'http://10.0.0.13'

Line 1535: prometheus_monitoring['enable'] = false

Start gitlab

[root@k8s-node2 jenkins-k8s]# gitlab-ctl reconfigure

Access gitlab

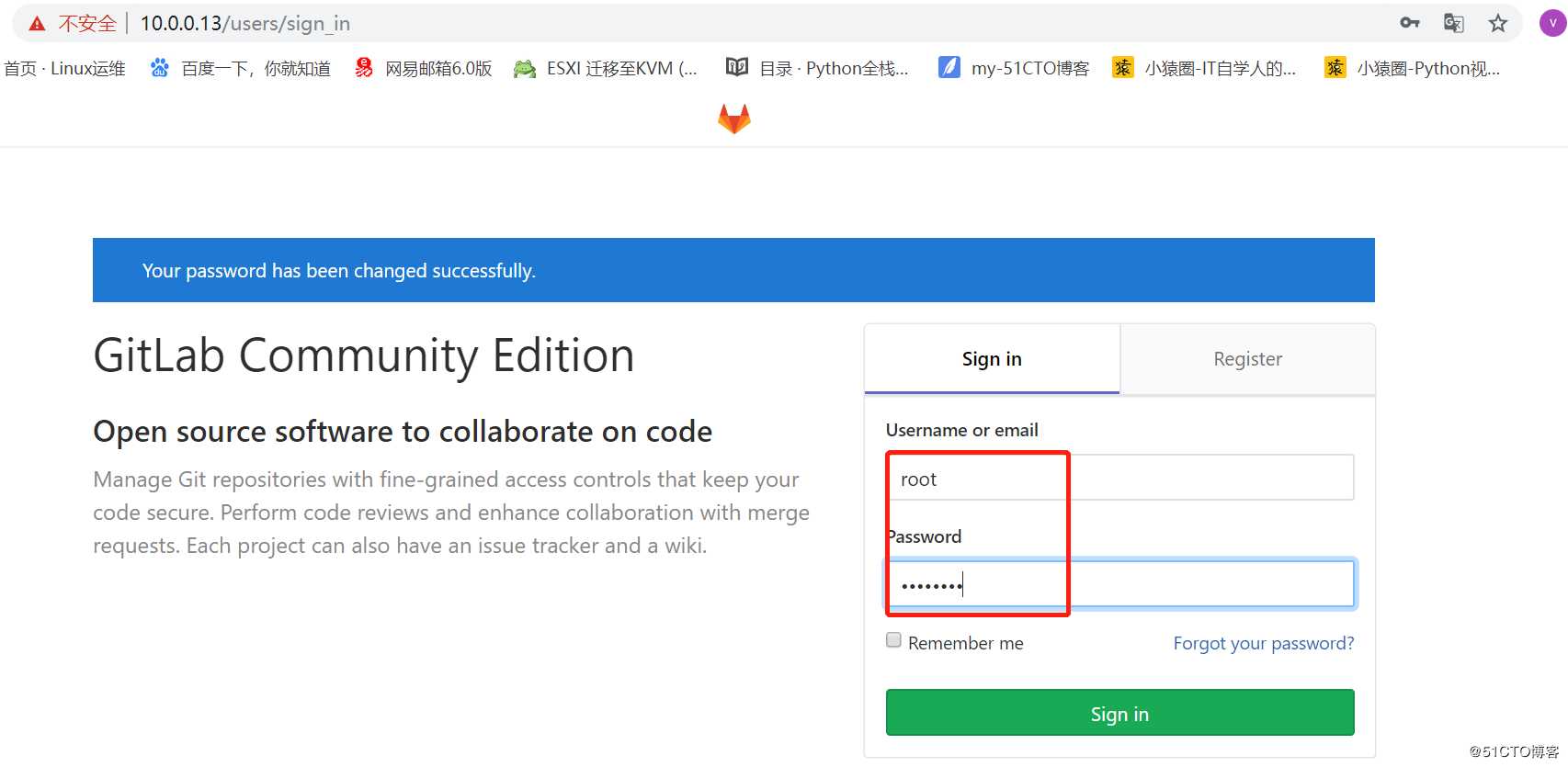

http://10.0.0.13

The password is 12345678

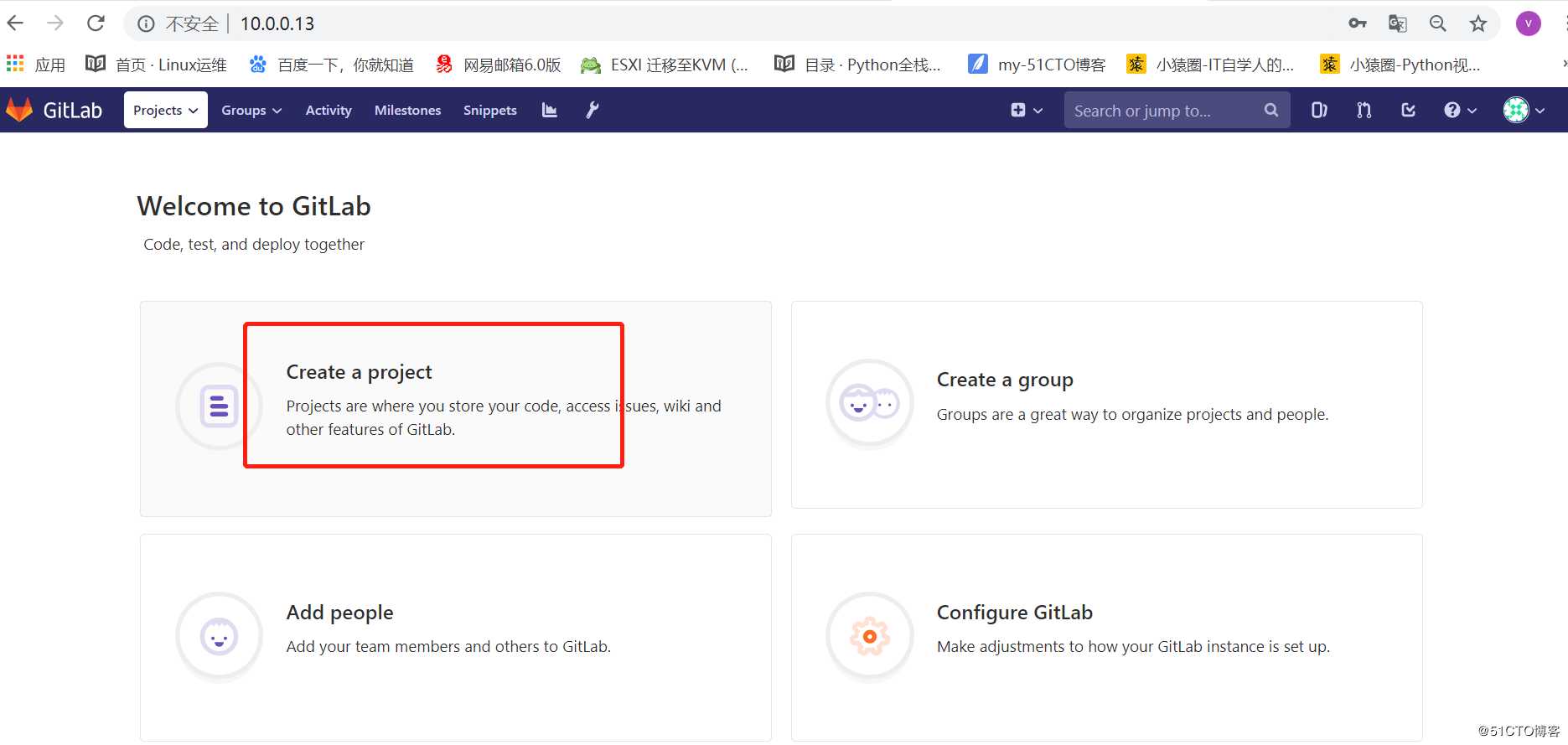

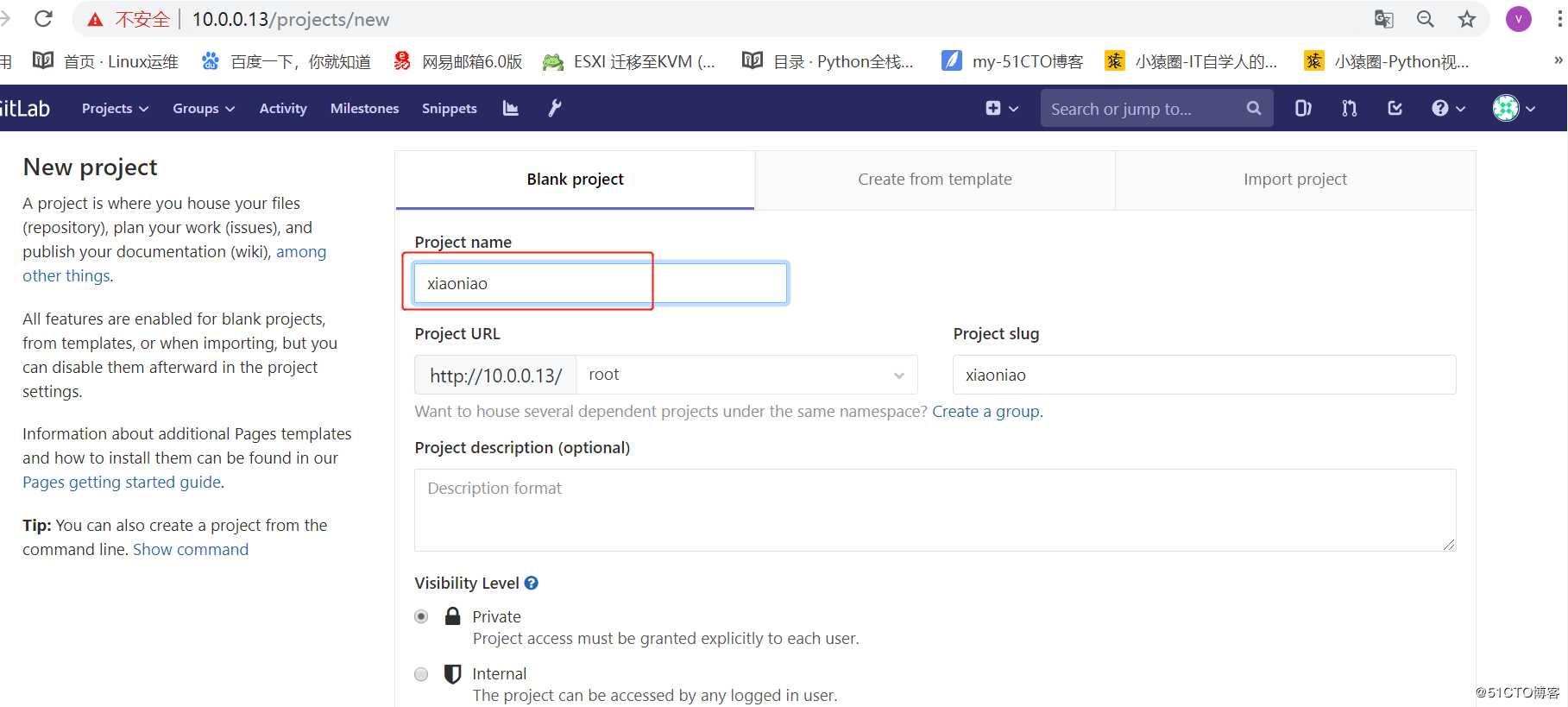

#2. Create a repository in gitlab and upload the code

##2.1 Prepare the files

[root@k8s-node2 jenkins-k8s]# mkdir /opt/xiaoniao

[root@k8s-node2 jenkins-k8s]# cd /opt/xiaoniao/

[root@k8s-node2 xiaoniao]# cp /opt/jenkins-k8s/xiaoniaofeifei.zip .

[root@k8s-node2 xiaoniao]# unzip xiaoniaofeifei.zip

Archive: xiaoniaofeifei.zip

inflating: sound1.mp3

creating: img/

inflating: img/bg1.jpg

inflating: img/bg2.jpg

inflating: img/number1.png

inflating: img/number2.png

inflating: img/s1.png

inflating: img/s2.png

inflating: 21.js

inflating: 2000.png

inflating: icon.png

inflating: index.html

[root@k8s-node2 xiaoniao]# ll

total 232

-rw-r--r-- 1 root root 15329 Aug 2 2014 2000.png

-rw-r--r-- 1 root root 51562 Aug 2 2014 21.js

-rw-r--r-- 1 root root 254 Aug 2 2014 icon.png

drwxr-xr-x 2 root root 102 Aug 8 2014 img

-rw-r--r-- 1 root root 3049 Aug 2 2014 index.html

-rw-r--r-- 1 root root 63008 Aug 2 2014 sound1.mp3

-rw-r--r-- 1 root root 91014 Aug 29 16:54 xiaoniaofeifei.zip

[root@k8s-node2 xiaoniao]# rm -rf xiaoniaofeifei.zip

[root@k8s-node2 xiaoniao]#

##2.2 Create the repository in gitlab and push the code

[root@k8s-node2 xiaoniao]# git config --global user.name "Administrator"

[root@k8s-node2 xiaoniao]# git config --global user.email admin@example.com

[root@k8s-node2 xiaoniao]# git init

Initialized empty Git repository in /opt/xiaoniao/.git/

[root@k8s-node2 xiaoniao]# git remote add origin http://10.0.0.13/root/xiaoniao.git

[root@k8s-node2 xiaoniao]# git push -u origin master

Username for 'http://10.0.0.13': ^C

[root@k8s-node2 xiaoniao]# git add .

[root@k8s-node2 xiaoniao]# git commit -m "Initial commit"

[master (root-commit) 0212ea4] Initial commit

11 files changed, 184 insertions(+)

create mode 100644 2000.png

create mode 100644 21.js

create mode 100644 icon.png

create mode 100644 img/bg1.jpg

create mode 100644 img/bg2.jpg

create mode 100644 img/number1.png

create mode 100644 img/number2.png

create mode 100644 img/s1.png

create mode 100644 img/s2.png

create mode 100644 index.html

create mode 100644 sound1.mp3

[root@k8s-node2 xiaoniao]# git push -u origin master

Username for 'http://10.0.0.13': root

Password for 'http://root@10.0.0.13': 12345678

Counting objects: 14, done.

Compressing objects: 100% (14/14), done.

Writing objects: 100% (14/14), 88.15 KiB | 0 bytes/s, done.

Total 14 (delta 0), reused 0 (delta 0)

To http://10.0.0.13/root/xiaoniao.git

* [new branch] master -> master

Branch master set up to track remote branch master from origin.

[root@k8s-node2 xiaoniao]#

#1. Install jenkins on node1

##1.1 Install jenkins

[root@k8s-node1 jenkins-k8s]# rpm -ivh jdk-8u102-linux-x64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:jdk1.8.0_102-2000:1.8.0_102-fcs ################################# [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@k8s-node1 jenkins-k8s]# mkdir -p /app

[root@k8s-node1 jenkins-k8s]# tar xf apache-tomcat-8.0.27.tar.gz -C /app

[root@k8s-node1 jenkins-k8s]# rm -rf /app/apache-tomcat-8.0.27/webapps/*

[root@k8s-node1 jenkins-k8s]# mv jenkins.war /app/apache-tomcat-8.0.27/webapps/ROOT.war

[root@k8s-node1 jenkins-k8s]#

##1.2 Unpack the jenkins data archive

[root@k8s-node1 jenkins-k8s]# tar xf jenkin-data.tar.gz -C /root

[root@k8s-node1 jenkins-k8s]# ls /root/.jenkins/

config.xml jenkins.install.UpgradeWizard.state nodes updates

hudson.model.UpdateCenter.xml jenkins.model.JenkinsLocationConfiguration.xml plugins userContent

hudson.plugins.git.GitTool.xml jenkins.telemetry.Correlator.xml queue.xml users

identity.key.enc jobs secret.key workflow-libs

jenkins.install.InstallUtil.installingPlugins logs secret.key.not-so-secret

jenkins.install.InstallUtil.lastExecVersion nodeMonitors.xml secrets

[root@k8s-node1 jenkins-k8s]#

##1.3 Start tomcat

[root@k8s-node1 jenkins-k8s]# /app/apache-tomcat-8.0.27/bin/startup.sh

Using CATALINA_BASE: /app/apache-tomcat-8.0.27

Using CATALINA_HOME: /app/apache-tomcat-8.0.27

Using CATALINA_TMPDIR: /app/apache-tomcat-8.0.27/temp

Using JRE_HOME: /usr

Using CLASSPATH: /app/apache-tomcat-8.0.27/bin/bootstrap.jar:/app/apache-tomcat-8.0.27/bin/tomcat-juli.jar

Tomcat started.

[root@k8s-node1 jenkins-k8s]# netstat -antlp|grep 8080

tcp 0 0 10.0.0.12:48266 10.0.0.11:8080 ESTABLISHED 124115/kubelet

tcp 0 0 10.0.0.12:49970 10.0.0.11:8080 ESTABLISHED 124115/kubelet

tcp 0 0 10.0.0.12:42178 10.0.0.11:8080 ESTABLISHED 26169/kube-proxy

tcp 0 0 10.0.0.12:42182 10.0.0.11:8080 ESTABLISHED 26169/kube-proxy

tcp 0 0 10.0.0.12:44416 10.0.0.11:8080 ESTABLISHED 124115/kubelet

tcp 0 0 10.0.0.12:48388 10.0.0.11:8080 ESTABLISHED 124115/kubelet

tcp6 0 0 :::8080 :::* LISTEN 60694/java

[root@k8s-node1 jenkins-k8s]#

admin/123456

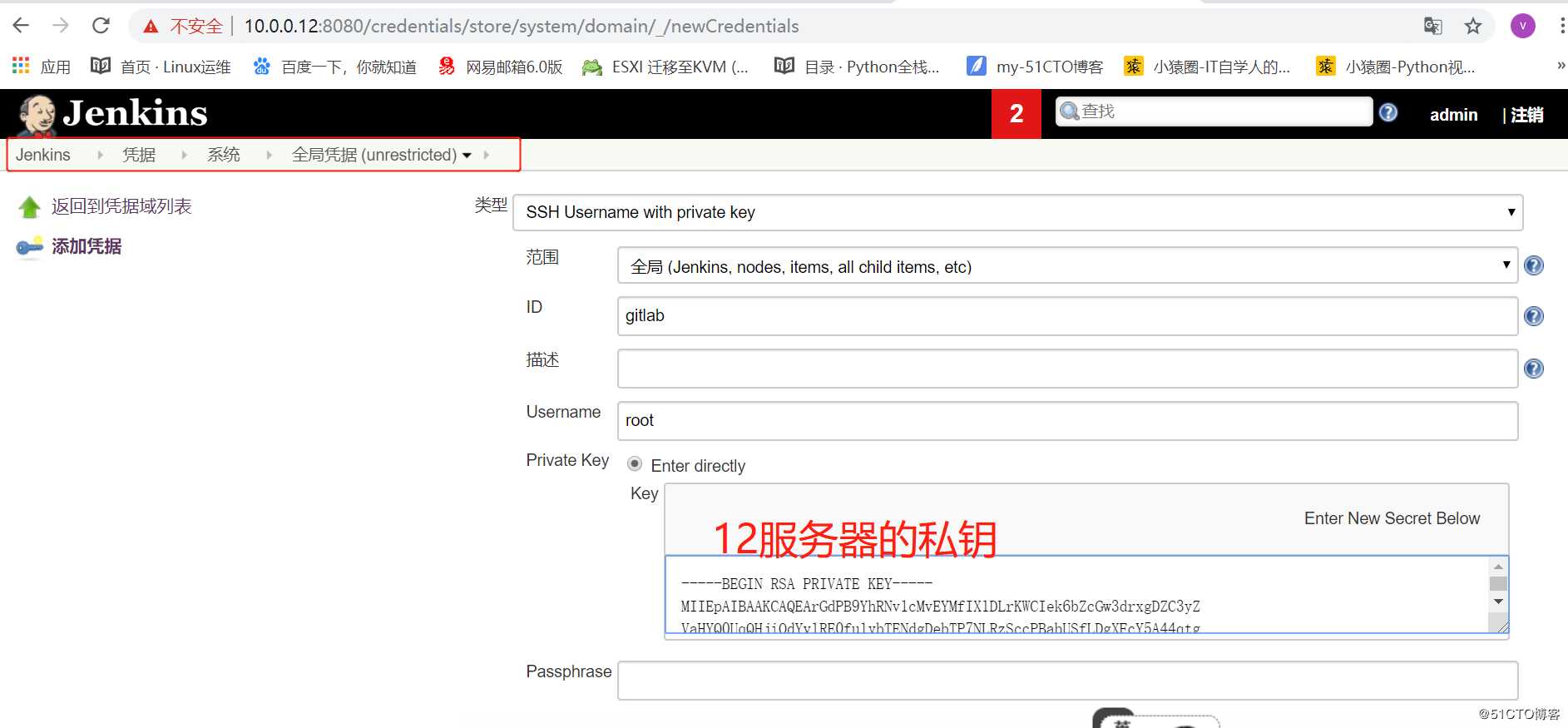

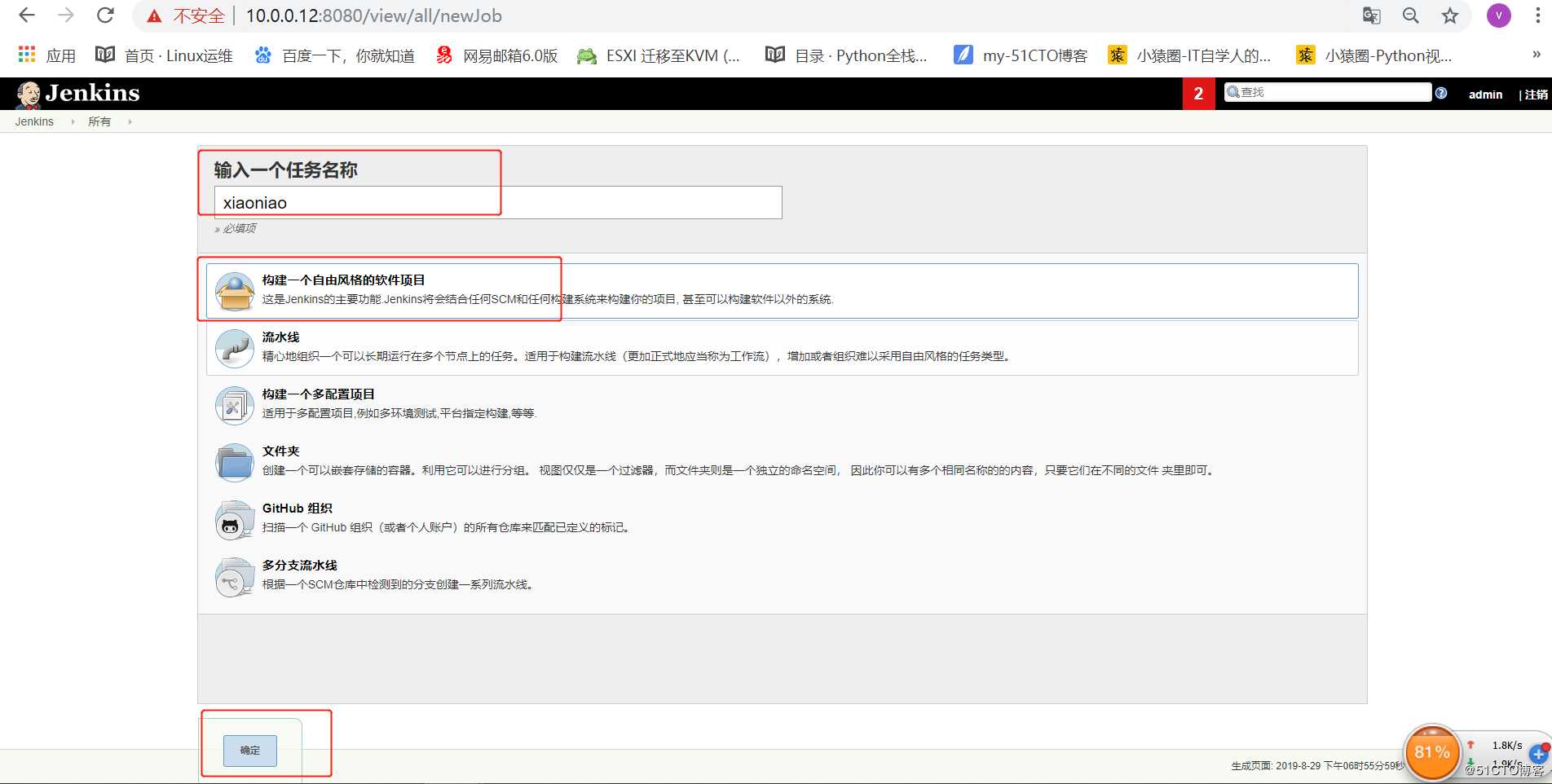

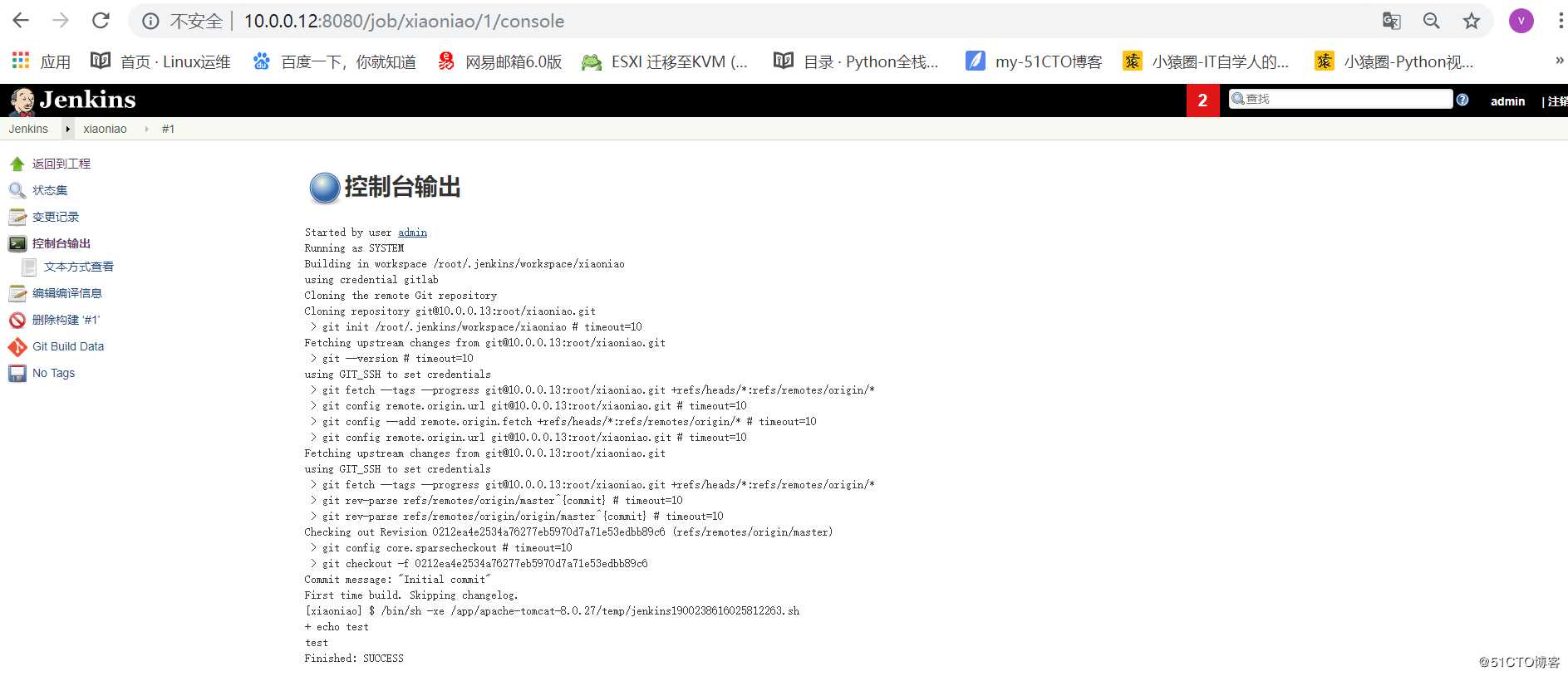

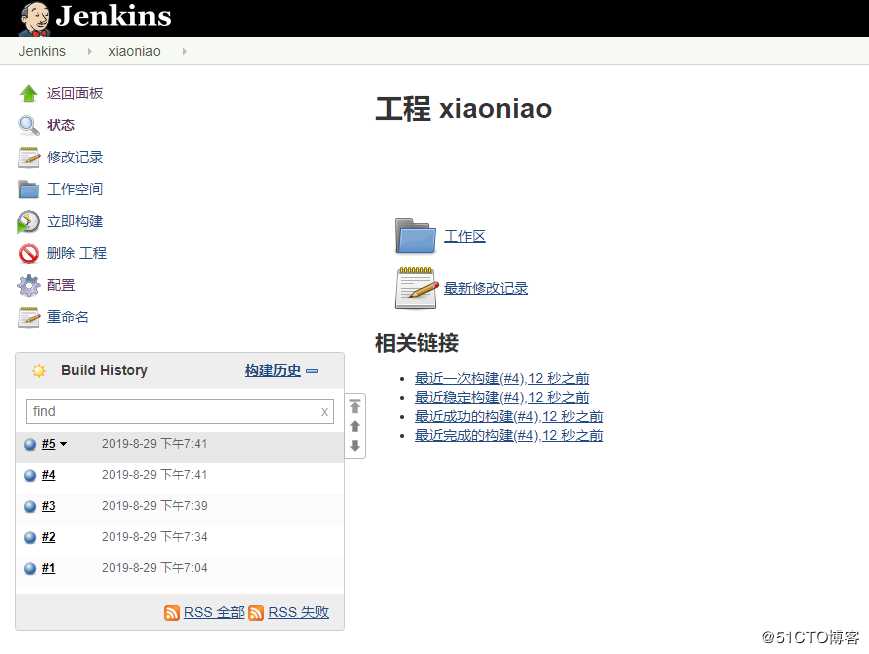

Create a build project in jenkins

#1. Generate a key pair on node1

[root@k8s-node1 jenkins-k8s]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:BpstuSGo4XaoPG7u1uzVw07fmkuEm3yDdgQEKsnEtDQ root@k8s-node1

The key's randomart image is:

+---[RSA 2048]----+

|oE ... |

|+.+ . . |

| = . .. |

| .. *o |

|. . . *.So |

|.o. .+=* |

|.+o. ..@ = |

|++.o. + * + |

|B=o. . =o. |

+----[SHA256]-----+

[root@k8s-node1 jenkins-k8s]#

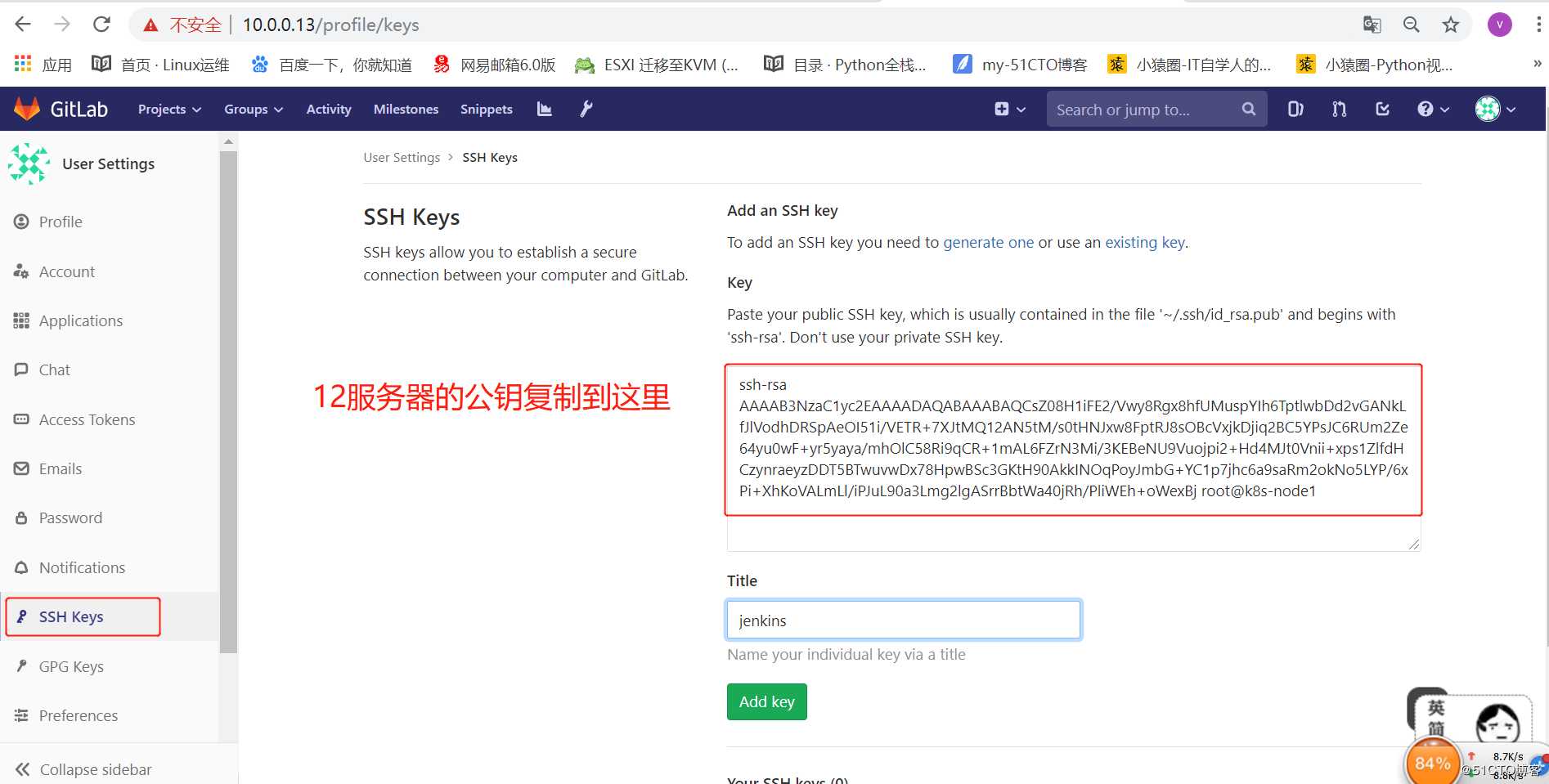

#2. Copy the public key into gitlab

[root@k8s-node1 ~]# cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCsZ08H1iFE2/Vwy8Rgx8hfUMuspYIh6TptlwbDd2vGANkLfJlVodhDRSpAeOI51i/VETR+7XJtMQ12AN5tM/s0tHNJxw8FptRJ8sOBcVxjkDjiq2BC5YPsJC6RUm2Ze64yu0wF+yr5yaya/mhOlC58Ri9qCR+1mAL6FZrN3Mi/3KEBeNU9Vuojpi2+Hd4MJt0Vnii+xps1ZlfdHCzynraeyzDDT5BTwuvwDx78HpwBSc3GKtH90AkkINOqPoyJmbG+YC1p7jhc6a9saRm2okNo5LYP/6xPi+XhKoVALmLl/iPJuL90a3Lmg2lgASrrBbtWa40jRh/PliWEh+oWexBj root@k8s-node1

[root@k8s-node1 ~]#

[root@k8s-node1 ~]# ll /root/.jenkins/workspace/xiaoniao/

total 140

-rw-r--r-- 1 root root 15329 Aug 29 19:04 2000.png

-rw-r--r-- 1 root root 51562 Aug 29 19:04 21.js

-rw-r--r-- 1 root root 254 Aug 29 19:04 icon.png

drwxr-xr-x 2 root root 102 Aug 29 19:04 img

-rw-r--r-- 1 root root 3049 Aug 29 19:04 index.html

-rw-r--r-- 1 root root 63008 Aug 29 19:04 sound1.mp3

[root@k8s-node1 ~]#

#1. Build the docker image and test it

[root@k8s-node2 xiaoniao]# pwd

/opt/xiaoniao

[root@k8s-node2 xiaoniao]# ll

total 144

-rw-r--r-- 1 root root 15329 Aug 2 2014 2000.png

-rw-r--r-- 1 root root 51562 Aug 2 2014 21.js

-rw-r--r-- 1 root root 59 Aug 29 19:13 dockerfile

-rw-r--r-- 1 root root 254 Aug 2 2014 icon.png

drwxr-xr-x 2 root root 102 Aug 8 2014 img

-rw-r--r-- 1 root root 3049 Aug 2 2014 index.html

-rw-r--r-- 1 root root 63008 Aug 2 2014 sound1.mp3

[root@k8s-node2 xiaoniao]# cat dockerfile

FROM 10.0.0.11:5000/nginx:1.13

ADD . /usr/share/nginx/html

[root@k8s-node2 xiaoniao]# vi .dockerignore

# Do not copy the dockerfile itself into the image

[root@k8s-node2 xiaoniao]# cat .dockerignore

dockerfile

[root@k8s-node2 xiaoniao]#

# Build the image

[root@k8s-node2 xiaoniao]# docker build -t xiaoniao:v1 .

Sending build context to Docker daemon 328.2 kB

Step 1/2 : FROM 10.0.0.11:5000/nginx:1.13

---> ae513a47849c

Step 2/2 : ADD . /usr/share/nginx/html

---> 7ac78e054c58

Removing intermediate container 3fe76618255c

Successfully built 7ac78e054c58

[root@k8s-node2 xiaoniao]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

xiaoniao v1 7ac78e054c58 42 seconds ago 109 MB

# Create a container

[root@k8s-node2 xiaoniao]# docker run -d -p 88:80 xiaoniao:v1

ab6195eb8e19a0505ad8a4c41a9ea116fa962fd0a971bd4fb3fd37614e0c667b

[root@k8s-node2 xiaoniao]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ab6195eb8e19 xiaoniao:v1 "nginx -g 'daemon ..." 5 seconds ago Up 4 seconds 0.0.0.0:88->80/tcp competent_easley

[root@k8s-node2 xiaoniao]#

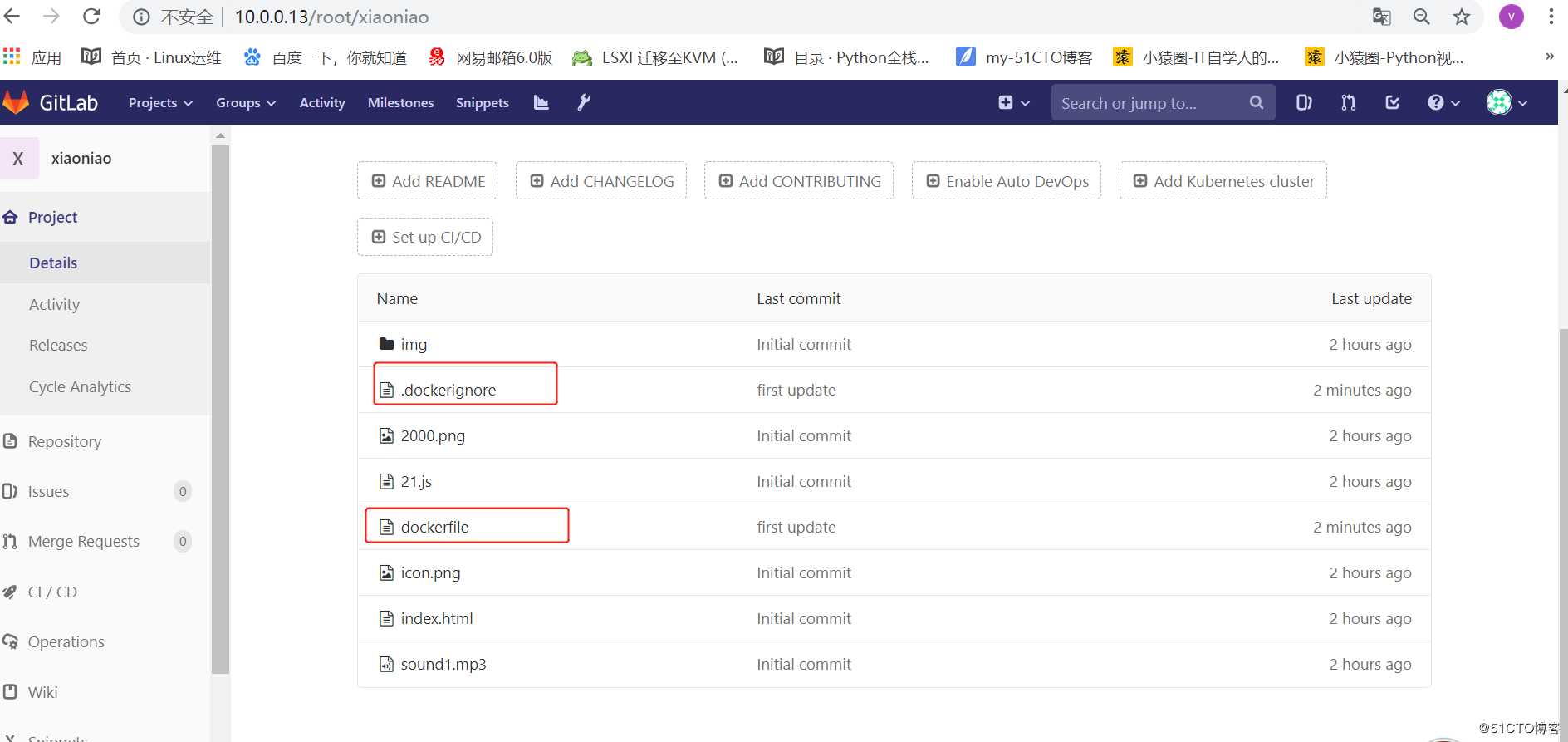

# Push the newly added files to gitlab

[root@k8s-node2 xiaoniao]# git add .

[root@k8s-node2 xiaoniao]# git commit -m "first update"

[master 747d882] first update

2 files changed, 3 insertions(+)

create mode 100644 .dockerignore

create mode 100644 dockerfile

[root@k8s-node2 xiaoniao]# git push -u origin master

Username for 'http://10.0.0.13': root

Password for 'http://root@10.0.0.13':

Counting objects: 5, done.

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 377 bytes | 0 bytes/s, done.

Total 4 (delta 1), reused 0 (delta 0)

To http://10.0.0.13/root/xiaoniao.git

0212ea4..747d882 master -> master

Branch master set up to track remote branch master from origin.

[root@k8s-node2 xiaoniao]#

# Check the workspace contents on node1

[root@k8s-node1 ~]# ll /root/.jenkins/workspace/xiaoniao/

total 144

-rw-r--r-- 1 root root 15329 Aug 29 19:04 2000.png

-rw-r--r-- 1 root root 51562 Aug 29 19:04 21.js

-rw-r--r-- 1 root root 59 Aug 29 19:34 dockerfile

-rw-r--r-- 1 root root 254 Aug 29 19:04 icon.png

drwxr-xr-x 2 root root 102 Aug 29 19:04 img

-rw-r--r-- 1 root root 3049 Aug 29 19:04 index.html

-rw-r--r-- 1 root root 63008 Aug 29 19:04 sound1.mp3

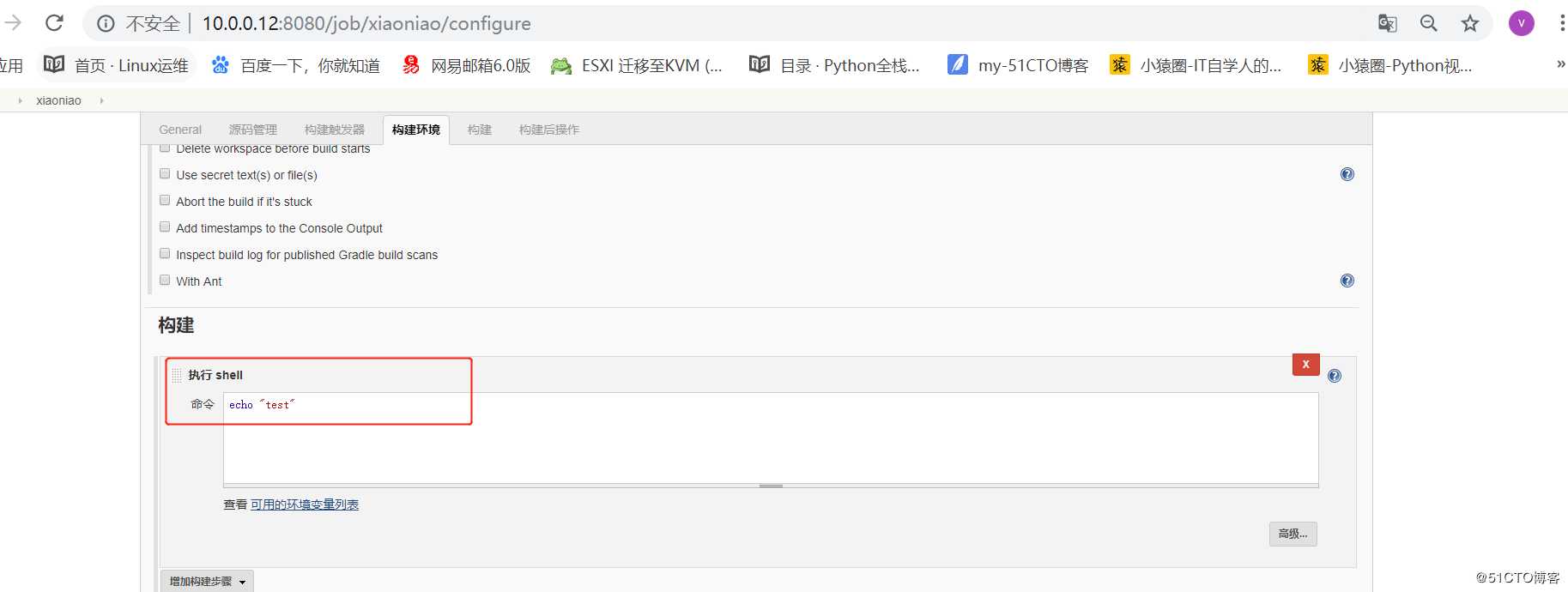

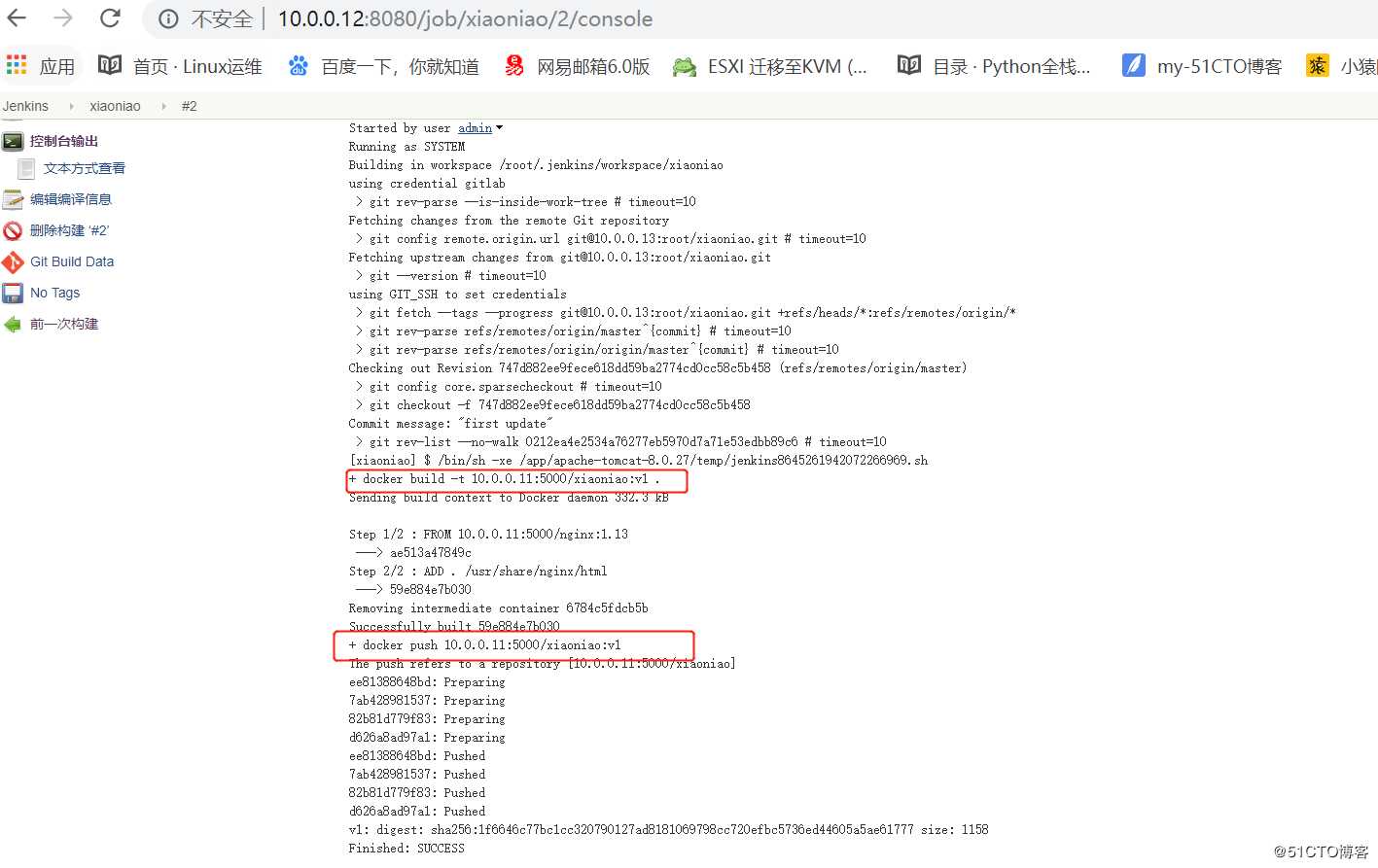

[root@k8s-node1 ~]#

Use the jenkins environment variable

BUILD_ID

Change the build script to

docker build -t 10.0.0.11:5000/xiaoniao:v$BUILD_ID .

docker push 10.0.0.11:5000/xiaoniao:v$BUILD_ID

# Check the versions in the private registry

[root@k8s-master ~]# ls /opt/myregistry/docker/registry/v2/repositories/xiaoniao/_manifests/tags/

v1 v3 v4 v5
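Because the tags are built from BUILD_ID, the newest image is the one with the highest number, and a plain alphabetical listing would put v10 before v3. A small sketch that picks the newest tag numerically; `latest_tag` is a hypothetical helper that reads tag names of the form v<number> on standard input:

```shell
# Hypothetical helper: read tags such as v1 v3 v10 (space or newline
# separated) on stdin and print the one with the highest build number.
latest_tag() {
  tr ' ' '\n' |
    sed -n 's/^v\([0-9][0-9]*\)$/\1/p' |   # keep only the numeric part
    sort -n |
    tail -n 1 |
    sed 's/^/v/'                            # put the "v" prefix back
}

echo "v1 v3 v4 v5" | latest_tag
```

On the master the input could come from listing the tags directory shown above, for example ls piped into latest_tag.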

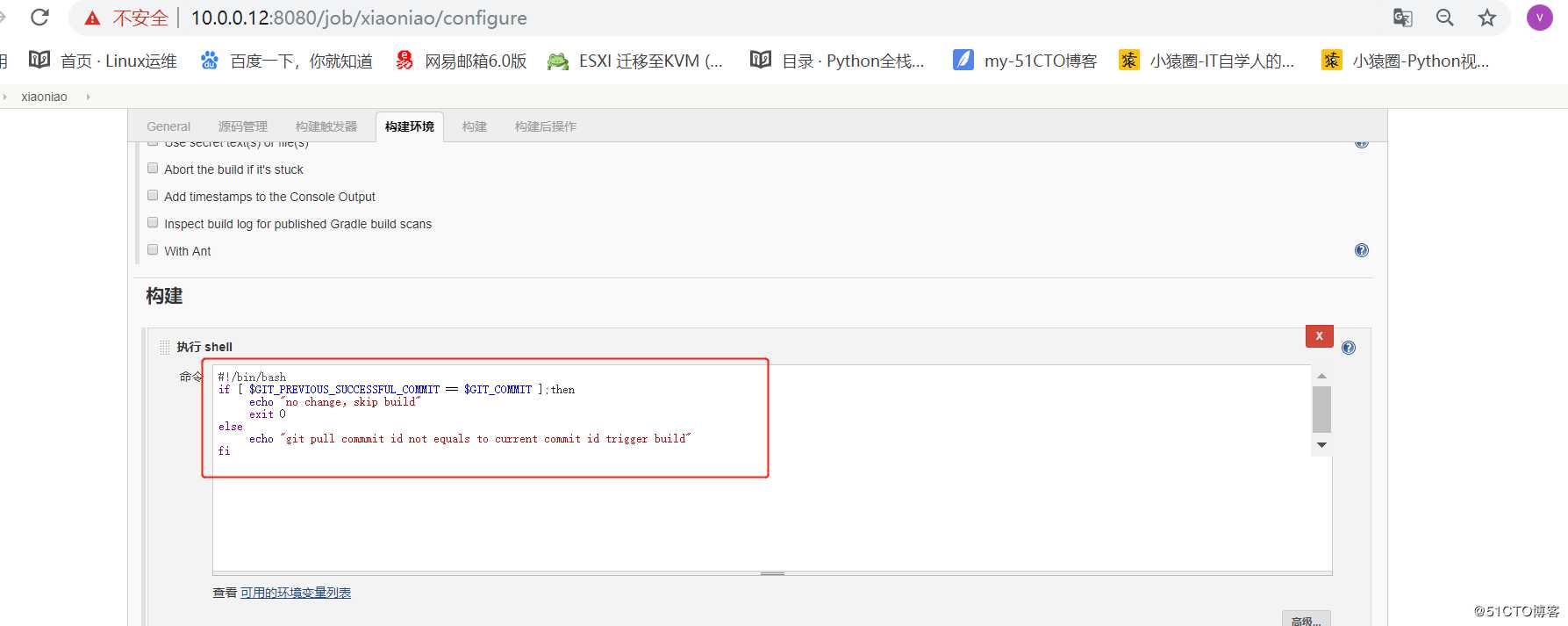

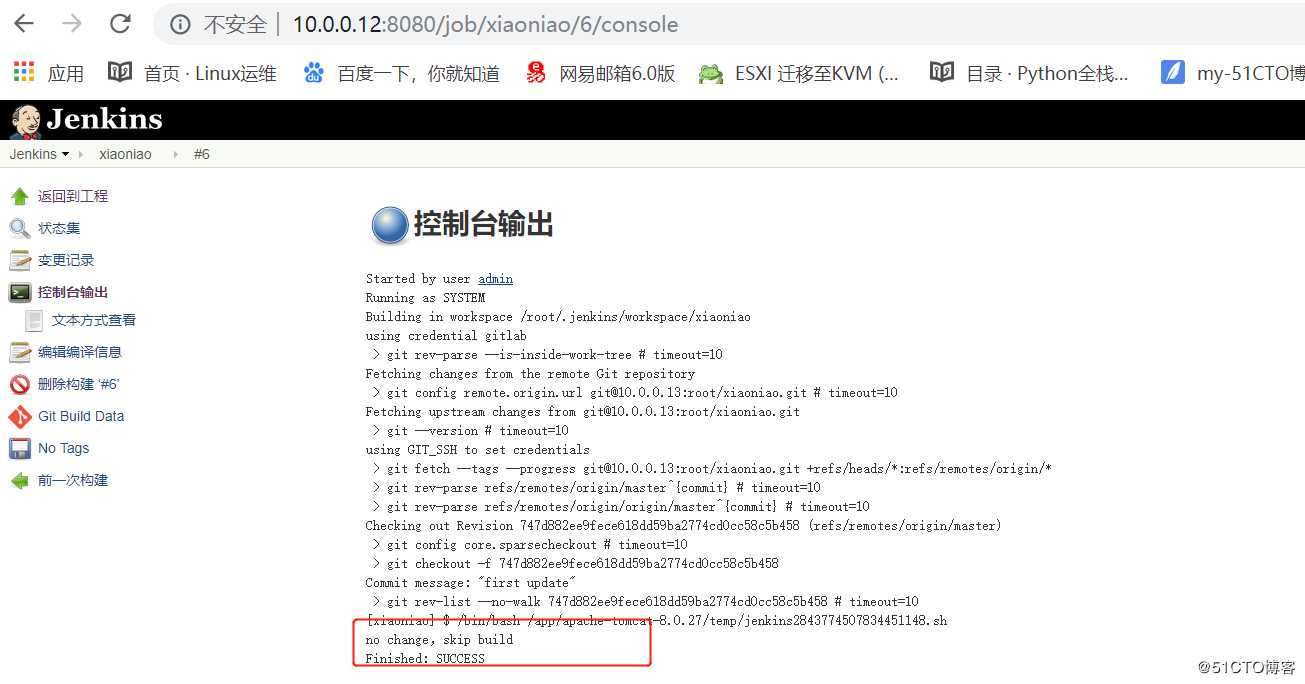

[root@k8s-master ~]#

# Guard against an accidental "Build Now": if nothing has changed, do not push a new version

#!/bin/bash
if [ "$GIT_PREVIOUS_SUCCESSFUL_COMMIT" = "$GIT_COMMIT" ]; then
  echo "no change, skip build"
  exit 0
else
  echo "git pull commit id not equal to current commit id, trigger build"
fi
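One edge case worth handling in this guard is the very first build, when GIT_PREVIOUS_SUCCESSFUL_COMMIT is still empty and the comparison has nothing to compare against. A minimal sketch that wraps the decision in a function; `should_build` is a hypothetical name, while the two variables are the ones the script above already reads:

```shell
# Hypothetical helper: succeed (exit 0) when a build is needed,
# fail (exit 1) when the commit equals the last successfully built one.
should_build() {
  previous=${1:-}
  current=${2:-}
  # An empty previous commit means nothing was built yet, so always build.
  if [ -n "$previous" ] && [ "$previous" = "$current" ]; then
    return 1
  fi
  return 0
}

if should_build "${GIT_PREVIOUS_SUCCESSFUL_COMMIT:-}" "${GIT_COMMIT:-}"; then
  echo "commit changed, trigger build"
else
  echo "no change, skip build"
fi
```

Quoting both variables keeps the test well-formed even when one of them is unset.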

[root@k8s-master ~]# ls /opt/myregistry/docker/registry/v2/repositories/xiaoniao/_manifests/tags/

v1 v3 v4 v5

[root@k8s-master ~]#

#1. Deploy the project to the k8s cluster

#1.1 Create the deployment

[root@k8s-master ~]# kubectl run xiaoniao --image=10.0.0.11:5000/xiaoniao:v1 --replicas=2 --record

deployment "xiaoniao" created

# --record saves the command so that it appears in the rollout history

[root@k8s-master ~]# kubectl rollout history deployment

deployments "xiaoniao"

REVISION CHANGE-CAUSE

1 kubectl run xiaoniao --image=10.0.0.11:5000/xiaoniao:v1 --replicas=2 --record

[root@k8s-master ~]#

#1.2 Check: two replicas were created

[root@k8s-master ~]# kubectl get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/xiaoniao 2 2 2 2 2m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d

NAME DESIRED CURRENT READY AGE

rs/xiaoniao-562012648 2 2 2 2m

NAME READY STATUS RESTARTS AGE

po/xiaoniao-562012648-jwk09 1/1 Running 0 2m

po/xiaoniao-562012648-px0fb 1/1 Running 0 2m

[root@k8s-master ~]#

#1.3 Expose a port for external access

[root@k8s-master ~]# kubectl expose deployment xiaoniao --port=80 --type=NodePort

service "xiaoniao" exposed

[root@k8s-master ~]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 1d

xiaoniao 10.254.10.198 <nodes> 80:32169/TCP 10s

[root@k8s-master ~]#

#1.4 Access it

[root@k8s-master ~]# curl 10.0.0.12:32169|grep '小鸟'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3049 100 3049 0 0 1315k 0 --:--:-- --:--:-- --:--:-- 2977k

<title>小鸟飞飞飞-文章库小游戏</title>

"tTitle": "小鸟飞飞飞-文章库小游戏",

document.title = "我玩小鸟飞飞飞过了"+t+"关!你能超过洒家我吗?";

[root@k8s-master ~]#

#2. k8s version upgrade and rollback

##2.1 Update the version

[root@k8s-master ~]# kubectl set image deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v3

deployment "xiaoniao" image updated

[root@k8s-master ~]# kubectl set image deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v6

deployment "xiaoniao" image updated

##2.2 View the revision history

[root@k8s-master ~]# kubectl rollout history deployment xiaoniao

deployments "xiaoniao"

REVISION CHANGE-CAUSE

1 kubectl run xiaoniao --image=10.0.0.11:5000/xiaoniao:v1 --replicas=2 --record

2 kubectl set image deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v3

3 kubectl set image deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v6

[root@k8s-master ~]#

##2.3 Roll back:

[root@k8s-master ~]# kubectl rollout undo deploy xiaoniao

deployment "xiaoniao" rolled back
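Without further arguments, rollout undo returns to the previous revision. kubectl also accepts --to-revision to jump to a specific entry of the history listing. A small sketch that only prints the command to run, so it can be reviewed first; `rollback_cmd` is a hypothetical helper:

```shell
# Hypothetical helper: print the rollback command for a deployment.
# $1 = deployment name, $2 = optional revision number from "rollout history".
rollback_cmd() {
  deploy=$1
  revision=${2:-}
  if [ -n "$revision" ]; then
    echo "kubectl rollout undo deployment/$deploy --to-revision=$revision"
  else
    echo "kubectl rollout undo deployment/$deploy"
  fi
}

# Revision 2 in the history above is the v3 image.
rollback_cmd xiaoniao 2
```

Printing instead of executing is a deliberate choice for this sketch; piping the output to sh would run it.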

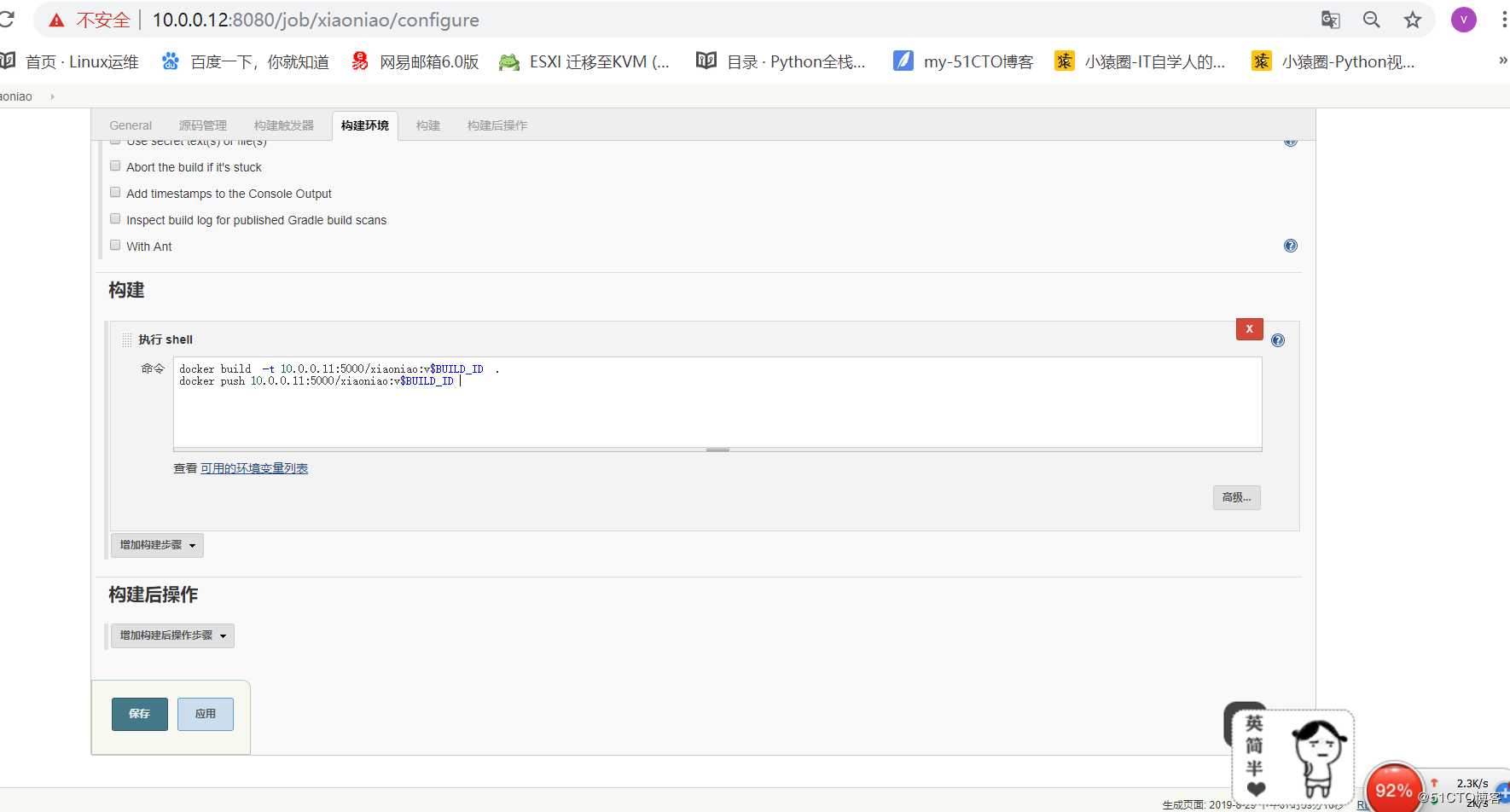

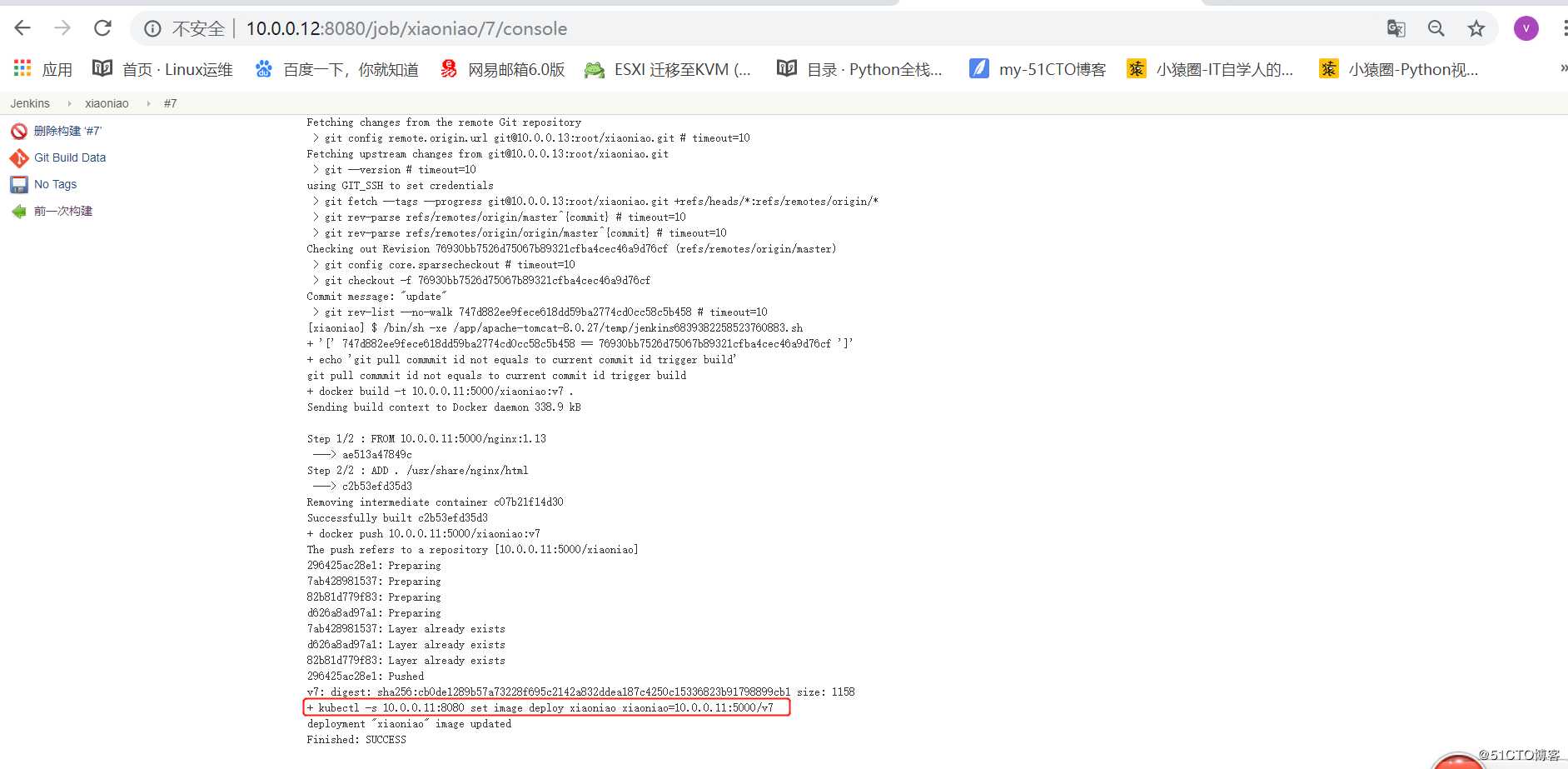

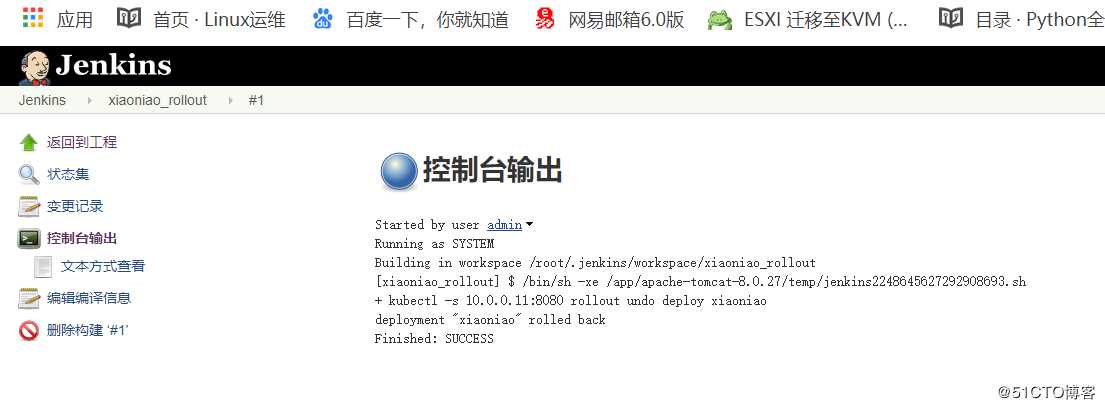

#3. One-click k8s version upgrades with jenkins

Change the shell script of the build step to

if [ "$GIT_PREVIOUS_SUCCESSFUL_COMMIT" = "$GIT_COMMIT" ]; then
  echo "no change, skip build"
  exit 0
else
  echo "git pull commit id not equal to current commit id, trigger build"
  docker build -t 10.0.0.11:5000/xiaoniao:v$BUILD_ID .
  docker push 10.0.0.11:5000/xiaoniao:v$BUILD_ID
  kubectl -s 10.0.0.11:8080 set image deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v$BUILD_ID
fi
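The three commands in the build step depend on each other: pushing only makes sense after a successful build, and the deployment should only be updated after a successful push. A sketch that makes this explicit by chaining the steps and then waiting for the rollout; the `deploy` function and the DOCKER and KUBECTL override variables are assumptions added here so the script can be dry-run with echo:

```shell
# Overridable command names (assumption): set DOCKER=echo KUBECTL=echo
# to print the commands instead of running them.
DOCKER=${DOCKER:-docker}
KUBECTL=${KUBECTL:-kubectl}

# Hypothetical helper: build, push and roll out image version v<build id>.
deploy() {
  build_id=$1
  image="10.0.0.11:5000/xiaoniao:v$build_id"
  # Each step runs only if the previous one succeeded.
  $DOCKER build -t "$image" . &&
    $DOCKER push "$image" &&
    $KUBECTL -s 10.0.0.11:8080 set image deploy xiaoniao "xiaoniao=$image" &&
    $KUBECTL -s 10.0.0.11:8080 rollout status deployment/xiaoniao
}
```

In the jenkins build step this would be called as deploy "$BUILD_ID". The final rollout status call makes the job wait until the new pods are available, so a failed rollout shows up as a failed build.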

# Update the content in git to test

[root@k8s-node2 xiaoniao]# echo "jiu yao jie shu le">over.html

[root@k8s-node2 xiaoniao]# git add .

[root@k8s-node2 xiaoniao]# git commit -m "update"

[master 76930bb] update

1 file changed, 1 insertion(+)

create mode 100644 over.html

[root@k8s-node2 xiaoniao]# git push -u origin master

Username for 'http://10.0.0.13': root

Password for 'http://root@10.0.0.13':

Counting objects: 4, done.

Compressing objects: 100% (2/2), done.

Writing objects: 100% (3/3), 282 bytes | 0 bytes/s, done.

Total 3 (delta 1), reused 0 (delta 0)

To http://10.0.0.13/root/xiaoniao.git

747d882..76930bb master -> master

Branch master set up to track remote branch master from origin.

[root@k8s-node2 xiaoniao]#

[root@k8s-master ~]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/xiaoniao 2 2 2 2 23m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 1d <none>

svc/xiaoniao 10.254.10.198 <nodes> 80:32169/TCP 19m run=xiaoniao

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/xiaoniao-1161273840 2 2 2 59s xiaoniao 10.0.0.11:5000/xiaoniao:v9 pod-template-hash=1161273840,run=xiaoniao

rs/xiaoniao-562012648 0 0 0 23m xiaoniao 10.0.0.11:5000/xiaoniao:v1 pod-template-hash=562012648,run=xiaoniao

rs/xiaoniao-711827946 0 0 0 16m xiaoniao 10.0.0.11:5000/xiaoniao:v3 pod-template-hash=711827946,run=xiaoniao

rs/xiaoniao-783916636 0 0 0 8m xiaoniao 10.0.0.11:5000/v7 pod-template-hash=783916636,run=xiaoniao

rs/xiaoniao-936550893 0 0 0 16m xiaoniao 10.0.0.11:5000/xiaoniao:v6 pod-template-hash=936550893,run=xiaoniao

NAME READY STATUS RESTARTS AGE IP NODE

po/xiaoniao-1161273840-28277 1/1 Running 0 58s 172.16.79.2 k8s-node1

po/xiaoniao-1161273840-r2ff1 1/1 Running 0 58s 172.16.48.3 k8s-node2

Rollback


Original article: https://blog.51cto.com/10983441/2433777