Tags: style blog http io color os ar usage for

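On today's computers, overall system performance is limited mainly by disk I/O speed. Memory, the CPU, and even the motherboard bus are already very fast by comparison.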

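The dd command does something simple: it reads raw data from a source and writes it, block by block, to a destination. Because it just moves data, with no benchmarking software in between, it shows how fast the disk actually reads and writes under Linux. A dd test is generally considered to come closest to real-world disk performance.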

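Usage: dd if=<source> of=<target> bs=<block size> count=<number of blocks> conv=fdatasync

if: where to read data from. This is usually the /dev/zero device, which returns an endless stream of zero bytes.

of: the file the data is written to.

bs: block size, i.e. how many bytes are read and written at a time.

count: how many blocks of size bs to copy in total.

conv=fdatasync: Linux caches disk reads and writes in memory very aggressively to improve performance. This option makes dd physically flush the written data to disk before it exits, so the reported time includes the real disk writes and not just the copy into the cache.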

Example:

dd if=/dev/zero of=testfile bs=1M count=512 conv=fdatasync

On my virtual machine, the result was:

    [root@localhost ~]# dd if=/dev/zero of=testfile bs=1M count=512 conv=fdatasync
    512+0 records in
    512+0 records out
    536870912 bytes (537 MB) copied, 19.6677 s, 27.3 MB/s
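The figure dd reports is simply the bytes copied divided by the elapsed time. One detail trips people up: GNU dd reads the suffix in bs=1M as 1024*1024 bytes, but it reports sizes and rates in decimal MB (1,000,000 bytes). The sketch below recomputes the numbers from the run above so you can check them. The function name dd_throughput is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: recompute dd's throughput from bs, count and elapsed time.
# GNU dd: bs=1M means 1048576 bytes, but "MB/s" in its report means 10^6 bytes/s.
dd_throughput() {
    bs_bytes=$1   # block size in bytes, e.g. 1048576 for bs=1M
    count=$2      # number of blocks, e.g. 512
    seconds=$3    # elapsed time reported by dd, e.g. 19.6677
    awk -v b="$bs_bytes" -v c="$count" -v s="$seconds" \
        'BEGIN { total = b * c; printf "%d bytes, %.1f MB/s\n", total, total / s / 1e6 }'
}

dd_throughput 1048576 512 19.6677   # prints: 536870912 bytes, 27.3 MB/s
```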

It is best to run the command several times and average the results. Before each run, clear the cache (as root) with the following command:

sync; echo 3 > /proc/sys/vm/drop_caches
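The repeat-and-average procedure can be scripted. The sketch below assumes GNU coreutils dd, root privileges (required for drop_caches), and room for a 512 MiB file in the current directory. The function names and the file name testfile are illustrative only. It parses the rate from dd's last summary line. Some newer dd versions write that line as "... (537 MB, 512 MiB) copied, ...", so the rate is taken from the last comma-separated field, and kB/s and GB/s are normalized to MB/s.

```shell
#!/bin/sh
# Sketch: repeat the dd write test with a cold cache and average the throughput.
# Assumes GNU dd and root privileges; names are illustrative, not a standard tool.

# Read dd's stderr output on stdin and print the rate in MB/s, e.g. "27.3".
dd_rate() {
    awk -F', ' '/copied/ {
        split($NF, a, " ")          # last field looks like "27.3 MB/s"
        v = a[1]; u = a[2]
        if (u == "kB/s") v /= 1000  # dd uses decimal units
        else if (u == "GB/s") v *= 1000
        printf "%.1f\n", v
    }'
}

# Run the test N times (default 3), dropping the page cache before each run.
bench_dd() {
    runs=${1:-3}
    i=1
    while [ "$i" -le "$runs" ]; do
        sync
        echo 3 > /proc/sys/vm/drop_caches
        dd if=/dev/zero of=testfile bs=1M count=512 conv=fdatasync 2>&1 | dd_rate
        i=$((i + 1))
    done | awk '{ sum += $1; n++ } END { if (n) printf "average: %.1f MB/s over %d runs\n", sum / n, n }'
    rm -f testfile
}
```

Run it as root with, for example, `bench_dd 5`.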

Testing with dd is often not very rigorous or scientific, because CPU load and background services can affect the results.

hdparm is a tool for getting, setting, and testing disk parameters. It must be run with administrator (root) privileges.

Usage: hdparm -t <disk to test>

Example:

# hdparm -t /dev/sda

Result:

    [root@localhost ~]# hdparm -t /dev/sda
    /dev/sda:
     Timing buffered disk reads: 444 MB in 3.01 seconds = 147.35 MB/sec
    [root@localhost ~]# hdparm -t /dev/sda
    /dev/sda:
     Timing buffered disk reads: 808 MB in 3.00 seconds = 269.21 MB/sec

The two runs differ considerably, so run the test several times and take the average.
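Averaging several hdparm runs can be automated. This is a minimal sketch that assumes root privileges and assumes hdparm prints each result as "... = <value> MB/sec", as in the output above. It does not handle other units, and the function names are hypothetical. For the two runs shown above, the average is (147.35 + 269.21) / 2 = 208.28 MB/sec.

```shell
#!/bin/sh
# Sketch: average the "Timing buffered disk reads" figure over several hdparm runs.
# Assumes output of the form "... = 147.35 MB/sec"; names are illustrative.

# Read hdparm output on stdin and print the mean MB/sec of all timing lines.
hdparm_avg() {
    awk '/Timing buffered disk reads/ { sum += $(NF-1); n++ }
         END { if (n) printf "%.2f\n", sum / n }'
}

# Run "hdparm -t" on a disk (default /dev/sda) N times (default 3), then average.
bench_hdparm() {
    disk=${1:-/dev/sda}
    runs=${2:-3}
    i=1
    while [ "$i" -le "$runs" ]; do
        hdparm -t "$disk"
        i=$((i + 1))
    done | hdparm_avg
}
```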

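Both of these methods give only very basic results. A professional disk benchmark needs more than raw read and write throughput. It breaks results down by I/O size (4k/16k/32k), distinguishes sequential from random access, and, for mechanical hard disks, also measures the speed difference between inner and outer tracks, among other things.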
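dd can cover a small part of this: sequential writes at different request sizes. The sketch below sweeps the 4k/16k/32k sizes mentioned above and keeps the total written at 512 MiB, so count = 512 × 1024 / bs_kb. It assumes GNU dd. The file name testfile and the function names are illustrative. It does not test random I/O, which needs a dedicated tool.

```shell
#!/bin/sh
# Sketch: sequential-write sweep over several block sizes with dd.
# Sequential writes only; assumes GNU dd, names are illustrative.

# Number of blocks of bs_kb KiB needed to write total_mb MiB.
dd_count() {
    total_mb=$1
    bs_kb=$2
    echo $((total_mb * 1024 / bs_kb))
}

# Write 512 MiB at each block size and print dd's summary line for each run.
dd_sweep() {
    for bs_kb in 4 16 32; do
        echo "bs=${bs_kb}K:"
        dd if=/dev/zero of=testfile bs="${bs_kb}K" count="$(dd_count 512 "$bs_kb")" \
            conv=fdatasync 2>&1 | tail -n 1
    done
    rm -f testfile
}
```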

bonnie++ can be installed with yum. It is not in the default Linux yum repositories, so installing the repoforge repository is recommended:

yum install -y bonnie++

Usage: bonnie++ -u <user name> -s <size of the test file>

Example:

bonnie++ -u root -s 2g

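By default, bonnie++ writes a 4 GB file, split into 4 parts, and runs sequential and random read/write operations to test system I/O thoroughly. The file is large, so the test takes quite a while.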

Result:

    [root@localhost ~]# bonnie++ -u root -s 2g
    Using uid:0, gid:0.
    Writing a byte at a time...done
    Writing intelligently...done
    Rewriting...done
    Reading a byte at a time...done
    Reading intelligently...done
    start 'em...done...done...done...done...done...
    Create files in sequential order...done.
    Stat files in sequential order...done.
    Delete files in sequential order...done.
    Create files in random order...done.
    Stat files in random order...done.
    Delete files in random order...done.
    Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
    Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
    Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
    localhost.locald 2G   287  99 31885  20 59035  15  2795  99 514292 64  9491 421
    Latency             42230us    2804ms     284ms    8198us    5820us    4819us
    Version  1.96       ------Sequential Create------ --------Random Create--------
    localhost.localdoma -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
                  files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                     16 20946  92 +++++ +++ +++++ +++ 23169  94 +++++ +++ +++++ +++
    Latency              2539us     853us     993us    1675us     284us    1234us
    1.96,1.96,localhost.localdomain,1,1414376948,2G,,287,99,31885,20,59035,15,2795,99,514292,64,9491,421,16,,,,,20946,92,+++++,+++,+++++,+++,23169,94,+++++,+++,+++++,+++,42230us,2804ms,284ms,8198us,5820us,4819us,2539us,853us,993us,1675us,284us,1234us

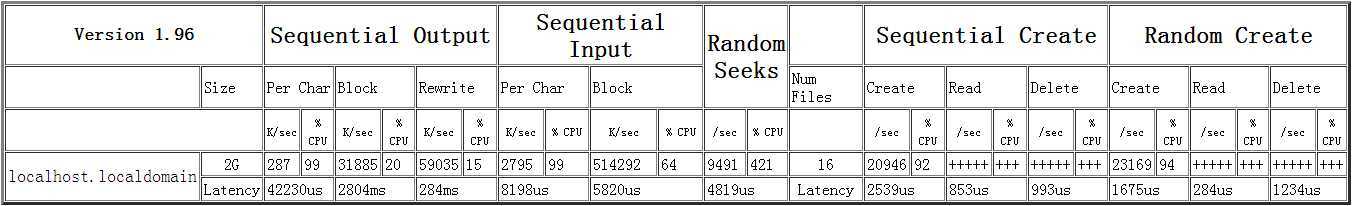

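This output is rather messy. Fortunately, bonnie++ also includes a tool that converts the results into an HTML table. Feed it the last line, which is in CSV format: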

echo 1.96,1.96,localhost.localdomain,1,1414376948,2G,,287,99,31885,20,59035,15,2795,99,514292,64,9491,421,16,,,,,20946,92,+++++,+++,+++++,+++,23169,94,+++++,+++,+++++,+++,42230us,2804ms,284ms,8198us,5820us,4819us,2539us,853us,993us,1675us,284us,1234us | bon_csv2html >> bon_result.html

bon_result.html is displayed as follows:

This is much easier to read. A brief explanation:

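Under Sequential Output (i.e. writes), Per Chr means writing one character at a time with putc(). Every byte costs a separate library call, which keeps the CPU busy, hence the 99% CPU usage. The write speed is only 287 K/sec, about 0.3 MB/s, which is very slow.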

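Block under Sequential Output means writing whole blocks at a time. CPU usage drops markedly (to 20%) and the speed rises to 31885 K/sec, about 31 MB/s, roughly the same as the dd test above.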

Under Sequential Input (i.e. reads), Per Chr means reading the file one character at a time with getc(). The speed is 2795 K/sec (about 2.7 MB/s), with 99% CPU usage.

Block under Sequential Input means reading the file block by block. The speed is 514292 K/sec (about 500 MB/s), with 64% CPU usage.

Random Seeks measures random seeking. About 9,500 seeks per second (9491/sec) is reasonably good.
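The K/sec figures explained above can be pulled straight out of the CSV summary line and converted to MB/s (1 MB = 1024 K). This is a sketch under one assumption: the field positions (8, 10, 12, 14, 16, 18) were read off the version 1.96 CSV line shown above and may differ in other bonnie++ versions. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: summarize bonnie++ 1.96 CSV output in MB/s.
# Field positions are taken from the 1.96 line above; other versions may differ.
bonnie_summary() {
    awk -F, '{
        printf "%-16s %8.1f MB/s\n", "per-char write:", $8 / 1024
        printf "%-16s %8.1f MB/s\n", "block write:", $10 / 1024
        printf "%-16s %8.1f MB/s\n", "rewrite:", $12 / 1024
        printf "%-16s %8.1f MB/s\n", "per-char read:", $14 / 1024
        printf "%-16s %8.1f MB/s\n", "block read:", $16 / 1024
        printf "%-16s %8d /sec\n", "random seeks:", $18
    }'
}
```

Pipe the last line of the bonnie++ output into it, for example `tail -n 1 result.txt | bonnie_summary`, where result.txt is a hypothetical file holding the saved output.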

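Sequential Create: how many files per second can be created, read (stat), and deleted when the files are processed in sequential order. Here, files were created at 20946 per second.

Random Create: the same operations with the files processed in random order. Here, files were created at 23169 per second. The files column (16) gives the number of test files in multiples of 1024.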

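Some results show only a row of + signs. This means bonnie++ considered the value unreliable and did not print it, usually because the operation finished too quickly to be timed accurately. Such an operation is generally not a system bottleneck, so there is no need to worry.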

iozone provides more comprehensive and precise information, which makes it one of the most widely used tools for system performance testing.

iozone is somewhat more complex to use. Only the most commonly used parameters are covered here:

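-l: the minimum number of processes, used for concurrency testing. Set it to 1 if you do not want to test multiple processes.

-u: the maximum number of processes, used for concurrency testing. Set it to 1 if you do not want to test multiple processes.

-r: the record size, i.e. the basic unit of each read or write, such as 16k. This value usually depends on the application being tested. When testing a database, for example, set it to the database's block size.

-s: the size of the file to read and write. Make this value fairly large (typically twice the size of RAM). iozone does not bypass the underlying caches, so with a small value the test may complete entirely in memory.

-F: the name(s) of the temporary test file(s), one per process.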
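The "twice the RAM" rule of thumb for -s can be computed from /proc/meminfo, which reports MemTotal in kB. This is a minimal sketch: the helper name is hypothetical, and it relies on iozone accepting an "m" (megabyte) suffix for -s. With a single process, as in the example that follows, this is simply the size of the one test file.

```shell
#!/bin/sh
# Sketch: print an iozone -s value equal to twice the physical RAM, in MB.
# Reads MemTotal (kB) from /proc/meminfo by default; helper name is hypothetical.
iozone_size_mb() {
    meminfo=${1:-/proc/meminfo}
    awk '/^MemTotal:/ { printf "%dm\n", $2 * 2 / 1024 }' "$meminfo"
}

# Usage (single process, 16k records):
# iozone -l 1 -u 1 -r 16k -s "$(iozone_size_mb)" -F tempfile
```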

Example:

iozone -l 1 -u 1 -r 16k -s 2g -F tempfile

Result:

    Children see throughput for 1 initial writers = 31884.46 kB/sec
    Parent sees throughput for 1 initial writers = 30305.05 kB/sec
    Min throughput per process = 31884.46 kB/sec
    Max throughput per process = 31884.46 kB/sec
    Avg throughput per process = 31884.46 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 rewriters = 102810.49 kB/sec
    Parent sees throughput for 1 rewriters = 95660.98 kB/sec
    Min throughput per process = 102810.49 kB/sec
    Max throughput per process = 102810.49 kB/sec
    Avg throughput per process = 102810.49 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 readers = 450193.59 kB/sec
    Parent sees throughput for 1 readers = 450076.28 kB/sec
    Min throughput per process = 450193.59 kB/sec
    Max throughput per process = 450193.59 kB/sec
    Avg throughput per process = 450193.59 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 re-readers = 451833.53 kB/sec
    Parent sees throughput for 1 re-readers = 451756.47 kB/sec
    Min throughput per process = 451833.53 kB/sec
    Max throughput per process = 451833.53 kB/sec
    Avg throughput per process = 451833.53 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 reverse readers = 61854.02 kB/sec
    Parent sees throughput for 1 reverse readers = 61851.88 kB/sec
    Min throughput per process = 61854.02 kB/sec
    Max throughput per process = 61854.02 kB/sec
    Avg throughput per process = 61854.02 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 stride readers = 43441.66 kB/sec
    Parent sees throughput for 1 stride readers = 43439.83 kB/sec
    Min throughput per process = 43441.66 kB/sec
    Max throughput per process = 43441.66 kB/sec
    Avg throughput per process = 43441.66 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 random readers = 47707.72 kB/sec
    Parent sees throughput for 1 random readers = 47705.00 kB/sec
    Min throughput per process = 47707.72 kB/sec
    Max throughput per process = 47707.72 kB/sec
    Avg throughput per process = 47707.72 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 mixed workload = 50807.69 kB/sec
    Parent sees throughput for 1 mixed workload = 50806.24 kB/sec
    Min throughput per process = 50807.69 kB/sec
    Max throughput per process = 50807.69 kB/sec
    Avg throughput per process = 50807.69 kB/sec
    Min xfer = 2097152.00 kB

    Children see throughput for 1 random writers = 45131.93 kB/sec
    Parent sees throughput for 1 random writers = 43955.32 kB/sec
    Min throughput per process = 45131.93 kB/sec
    Max throughput per process = 45131.93 kB/sec
    Avg throughput per process = 45131.93 kB/sec
    Min xfer = 2097152.00 kB
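Only the "Children see throughput" line of each group is needed to compare access patterns, and it is easier to read in MB/s. The sketch below extracts those lines and divides the kB/sec figure by 1024. It assumes the line format shown in this output, and the function name is hypothetical. For example, the initial-writers figure of 31884.46 kB/sec becomes about 31.1 MB/s, in line with the dd and bonnie++ block-write results.

```shell
#!/bin/sh
# Sketch: condense iozone output to one MB/s line per test type.
# Assumes lines like "Children see throughput for 1 initial writers = 31884.46 kB/sec".
iozone_children() {
    awk -F'=' '/Children see throughput for/ {
        label = $1
        # Drop the "Children see throughput for <N>" prefix and trailing blanks.
        sub(/^[ \t]*Children see throughput for[ \t]+[0-9]+[ \t]+/, "", label)
        sub(/[ \t]+$/, "", label)
        split($2, v, " ")            # v[1] is the kB/sec value
        printf "%s: %.1f MB/s\n", label, v[1] / 1024
    }'
}
```

Pipe the saved iozone output into it, for example `iozone_children < iozone.log`, where iozone.log is a hypothetical file name.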

The results above show the disk's read and write performance under each access pattern.

P.S. The output actually continued beyond this point, but my virtual machine's disk filled up and it crashed.


Original article: http://www.cnblogs.com/lurenjiashuo/p/4054460.html