Tags: form str analysis gre cond specific more image util

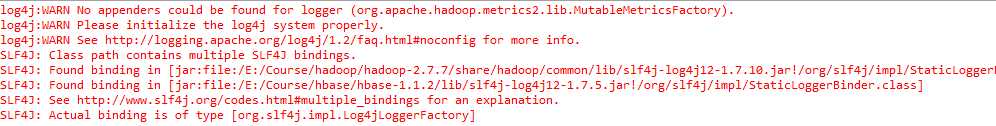

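I have recently been learning Hadoop and HBase for big data. My virtual machine was too slow to work in, so I wanted to use Eclipse on Windows to connect to Hadoop and the related services running on Linux. With the Hadoop plugin the connection worked and I could browse the files on Hadoop, but as soon as HBase was involved the program would hang, sitting at the screen in the screenshot above indefinitely.

I tried editing the hosts files on the virtual machines and similar fixes, but none of them helped. Eventually a classmate pointed out that the problem might not be my HBase configuration at all and might be in my code. That turned out to be the case: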

    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://192.168.43.18:9000");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

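This was my original code. With its second statement, which only sets `hbase.rootdir`, the client could not connect to HBase. I am still analyzing exactly why; a likely explanation is that the HBase client locates the cluster through ZooKeeper, and with no quorum configured it falls back to the default of localhost, where nothing is listening on the Windows machine. Changing the code as follows fixed it: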

    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.zookeeper.quorum", "192.168.43.18");
        configuration.set("hbase.zookeeper.property.clientPort", "2181");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

It ran and the add operation succeeded!!! (Finally.)
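Since the fix points the client at ZooKeeper on 192.168.43.18:2181, it can help to confirm from the Windows side that this port is reachable before blaming the code or the HBase setup. The following is only a minimal sketch using the standard library; the class name `ZkPortCheck`, the method `isReachable`, and the 3-second timeout are my own choices for illustration, not part of HBase. It only tests that a TCP connection can be opened, not that ZooKeeper or HBase is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class ZkPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    public static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unresolvable host: all count as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        String host = "192.168.43.18"; // ZooKeeper host used in this post
        int port = 2181;               // hbase.zookeeper.property.clientPort used in this post
        System.out.println(host + ":" + port + " reachable: "
                + isReachable(host, port, 3000));
    }
}
```

If this prints `false`, the hang is more likely a network, firewall, or service problem on the Linux side than a problem in the `init()` method.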

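Note: the screenshot above also shows an error. It is reported because Hadoop has not been told where to write its log output (no log4j configuration is present).

To fix it, right-click the project's src directory in Eclipse, choose New -> Other -> File, create a file named log4j.properties, and add the following content: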

    # Licensed to the Apache Software Foundation (ASF) under one
    # or more contributor license agreements. See the NOTICE file
    # distributed with this work for additional information
    # regarding copyright ownership. The ASF licenses this file
    # to you under the Apache License, Version 2.0 (the
    # "License"); you may not use this file except in compliance
    # with the License. You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # Define some default values that can be overridden by system properties
    hbase.root.logger=INFO,console
    hbase.security.logger=INFO,console
    hbase.log.dir=.
    hbase.log.file=hbase.log

    # Define the root logger to the system property "hbase.root.logger".
    log4j.rootLogger=${hbase.root.logger}

    # Logging Threshold
    log4j.threshold=ALL

    #
    # Daily Rolling File Appender
    #
    log4j.appender.DRFA=org.apache.log4j.DailyRollingFileAppender
    log4j.appender.DRFA.File=${hbase.log.dir}/${hbase.log.file}

    # Rollover at midnight
    log4j.appender.DRFA.DatePattern=.yyyy-MM-dd

    # 30-day backup
    #log4j.appender.DRFA.MaxBackupIndex=30
    log4j.appender.DRFA.layout=org.apache.log4j.PatternLayout

    # Pattern format: Date LogLevel LoggerName LogMessage
    log4j.appender.DRFA.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %m%n

    # Rolling File Appender properties
    hbase.log.maxfilesize=256MB
    hbase.log.maxbackupindex=20

    # Rolling File Appender
    log4j.appender.RFA=org.apache.log4j.RollingFileAppender
    log4j.appender.RFA.File=${hbase.log.dir}/${hbase.log.file}
    log4j.appender.RFA.MaxFileSize=${hbase.log.maxfilesize}
    log4j.appender.RFA.MaxBackupIndex=${hbase.log.maxbackupindex}
    log4j.appender.RFA.layout=org.apache.log4j.PatternLayout
    log4j.appender.RFA.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %m%n

    #
    # Security audit appender
    #
    hbase.security.log.file=SecurityAuth.audit
    hbase.security.log.maxfilesize=256MB
    hbase.security.log.maxbackupindex=20
    log4j.appender.RFAS=org.apache.log4j.RollingFileAppender
    log4j.appender.RFAS.File=${hbase.log.dir}/${hbase.security.log.file}
    log4j.appender.RFAS.MaxFileSize=${hbase.security.log.maxfilesize}
    log4j.appender.RFAS.MaxBackupIndex=${hbase.security.log.maxbackupindex}
    log4j.appender.RFAS.layout=org.apache.log4j.PatternLayout
    log4j.appender.RFAS.layout.ConversionPattern=%d{ISO8601} %p %c: %m%n
    log4j.category.SecurityLogger=${hbase.security.logger}
    log4j.additivity.SecurityLogger=false
    #log4j.logger.SecurityLogger.org.apache.hadoop.hbase.security.access.AccessController=TRACE
    #log4j.logger.SecurityLogger.org.apache.hadoop.hbase.security.visibility.VisibilityController=TRACE

    #
    # Null Appender
    #
    log4j.appender.NullAppender=org.apache.log4j.varia.NullAppender

    #
    # console
    # Add "console" to rootlogger above if you want to use this
    #
    log4j.appender.console=org.apache.log4j.ConsoleAppender
    log4j.appender.console.target=System.err
    log4j.appender.console.layout=org.apache.log4j.PatternLayout
    log4j.appender.console.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %m%n

    # Custom Logging levels
    log4j.logger.org.apache.zookeeper=INFO
    #log4j.logger.org.apache.hadoop.fs.FSNamesystem=DEBUG
    log4j.logger.org.apache.hadoop.hbase=INFO
    # Make these two classes INFO-level. Make them DEBUG to see more zk debug.
    log4j.logger.org.apache.hadoop.hbase.zookeeper.ZKUtil=INFO
    log4j.logger.org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher=INFO
    #log4j.logger.org.apache.hadoop.dfs=DEBUG
    # Set this class to log INFO only otherwise its OTT
    # Enable this to get detailed connection error/retry logging.
    # log4j.logger.org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation=TRACE

    # Uncomment this line to enable tracing on _every_ RPC call (this can be a lot of output)
    #log4j.logger.org.apache.hadoop.ipc.HBaseServer.trace=DEBUG

    # Uncomment the below if you want to remove logging of client region caching
    # and scan of hbase:meta messages
    # log4j.logger.org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation=INFO
    # log4j.logger.org.apache.hadoop.hbase.client.MetaScanner=INFO
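Placing log4j.properties in src works because Eclipse copies it to the build output, and log4j 1.x looks for a file with that name on the classpath by default. If the warning persists, one quick way to check whether the file is actually visible at runtime is a sketch like the one below. The class name `Log4jConfigCheck` and the method `find` are hypothetical helpers of mine and use only the standard library.

```java
import java.net.URL;

public class Log4jConfigCheck {

    /** Returns the location of the named resource on the classpath, or null if it is absent. */
    public static URL find(String resourceName) {
        return Log4jConfigCheck.class.getClassLoader().getResource(resourceName);
    }

    public static void main(String[] args) {
        URL location = find("log4j.properties");
        if (location == null) {
            System.out.println("log4j.properties is NOT on the classpath");
        } else {
            System.out.println("log4j.properties found at " + location);
        }
    }
}
```

Run it from the same Eclipse project as the HBase client so that both use the same classpath.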


Original article: https://www.cnblogs.com/huan-ch/p/11689016.html