
Implementation environment:

| OS | SELinux | firewalld | NICs |
|---|---|---|---|
| CentOS7 | disabled | disabled | eth0, eth1 |

https://www.kernel.org/doc/Documentation/networking/bonding.txt

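The Linux bonding driver provides a method for aggregating multiple network interfaces into a single logical "bonded" interface. The behavior of the bonded interface depends on the mode. Generally speaking, modes provide either hot standby or load balancing services. In addition, link integrity monitoring can be performed.

There are seven modes (0-6). The default is balance-rr (round-robin).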

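Mode 0 (balance-rr), round-robin policy: packets are transmitted in sequential order from the first available slave through the last. This mode provides load balancing and fault tolerance.

Mode 1 (active-backup), active-backup policy: only one slave in the bond is active. Another slave becomes active if, and only if, the active slave fails. The bond's MAC address is externally visible on only one port (network adapter) to avoid confusing the switch.

Mode 2 (balance-xor), XOR policy for fault tolerance and load balancing: the transmitting slave is chosen by a hash of the packet's addresses, by default the source MAC address XORed with the destination MAC address, modulo the number of slaves. Traffic to a given peer therefore always leaves through the same slave.

Mode 3 (broadcast), broadcast policy: everything is transmitted on all slave interfaces. This mode provides fault tolerance.

Mode 4 (802.3ad), IEEE 802.3ad dynamic link aggregation: creates aggregation groups that share the same speed and duplex settings, and transmits and receives on all slaves in the active aggregator. Requires a switch that supports 802.3ad.

Mode 5 (balance-tlb), transmit load balancing (TLB) policy for fault tolerance and load balancing: outgoing traffic is distributed according to the current load on each slave. Incoming traffic is received by the current slave. If the receiving slave fails, another slave takes over the MAC address of the failed slave.

Mode 6 (balance-alb), adaptive load balancing (ALB) policy for fault tolerance and load balancing: includes transmit and receive load balancing for IPv4 traffic. Receive load balancing is achieved through ARP negotiation.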
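To make the balance-xor selection more concrete, here is a minimal sketch of an XOR hash over MAC addresses. It assumes a simplified hash that looks only at the last octet of each MAC address. The function name `xor_slave_index` and the destination MACs are hypothetical, and the hash the driver really applies depends on the kernel version and on the `xmit_hash_policy` option.

```bash
#!/usr/bin/env bash
# Simplified illustration of balance-xor slave selection (an assumption,
# not the driver's exact code): XOR the last octet of the source and
# destination MAC addresses, then take the result modulo the slave count.
xor_slave_index() {
    local src_mac=$1 dst_mac=$2 slave_count=$3
    local src_octet=${src_mac##*:}   # last octet of the source MAC
    local dst_octet=${dst_mac##*:}   # last octet of the destination MAC
    echo $(( (16#$src_octet ^ 16#$dst_octet) % slave_count ))
}

# Two hypothetical peers that differ in the last octet; each pair of MACs
# always maps to the same slave index.
xor_slave_index 00:0c:29:08:2a:73 00:0c:29:aa:bb:10 2
xor_slave_index 00:0c:29:08:2a:73 00:0c:29:aa:bb:11 2
```

Because the index depends only on the address pair, a single conversation is never spread over several slaves; balancing only appears across many peers.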

Check the status of the added eth0 and eth1 NICs

[root@CentOS7 ~]# nmcli dev status

DEVICE TYPE STATE CONNECTION

eth0 ethernet disconnected --

eth1 ethernet disconnected --

1) Add a bonding interface in active-backup mode

[root@CentOS7 ~]# nmcli con add type bond con-name bond01 ifname bond0 mode active-backup

Connection 'bond01' (ca0305ce-110c-4411-a48e-5952a2c72716) successfully added.

2) Add the slave interfaces

[root@CentOS7 ~]# nmcli con add type bond-slave con-name bond01-slave0 ifname eth0 master bond0

Connection 'bond01-slave0' (5dd5a90c-9a2f-4f1d-8fcc-c7f4b333e3d2) successfully added.

[root@CentOS7 ~]# nmcli con add type bond-slave con-name bond01-slave1 ifname eth1 master bond0

Connection 'bond01-slave1' (a8989d38-cc0b-4a4e-942d-3a2e1eb8f95b) successfully added.

3) Bring up the slave interfaces

[root@CentOS7 ~]# nmcli con up bond01-slave0

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/14)

[root@CentOS7 ~]# nmcli con up bond01-slave1

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/15)

4) Bring up the bond interface

[root@CentOS7 ~]# nmcli con up bond01

Connection successfully activated (master waiting for slaves) (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/16)

5) Check the bond status

[root@CentOS7 ~]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth0

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth0

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:0c:29:08:2a:73

Slave queue ID: 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:0c:29:08:2a:7d

Slave queue ID: 0

6) Test

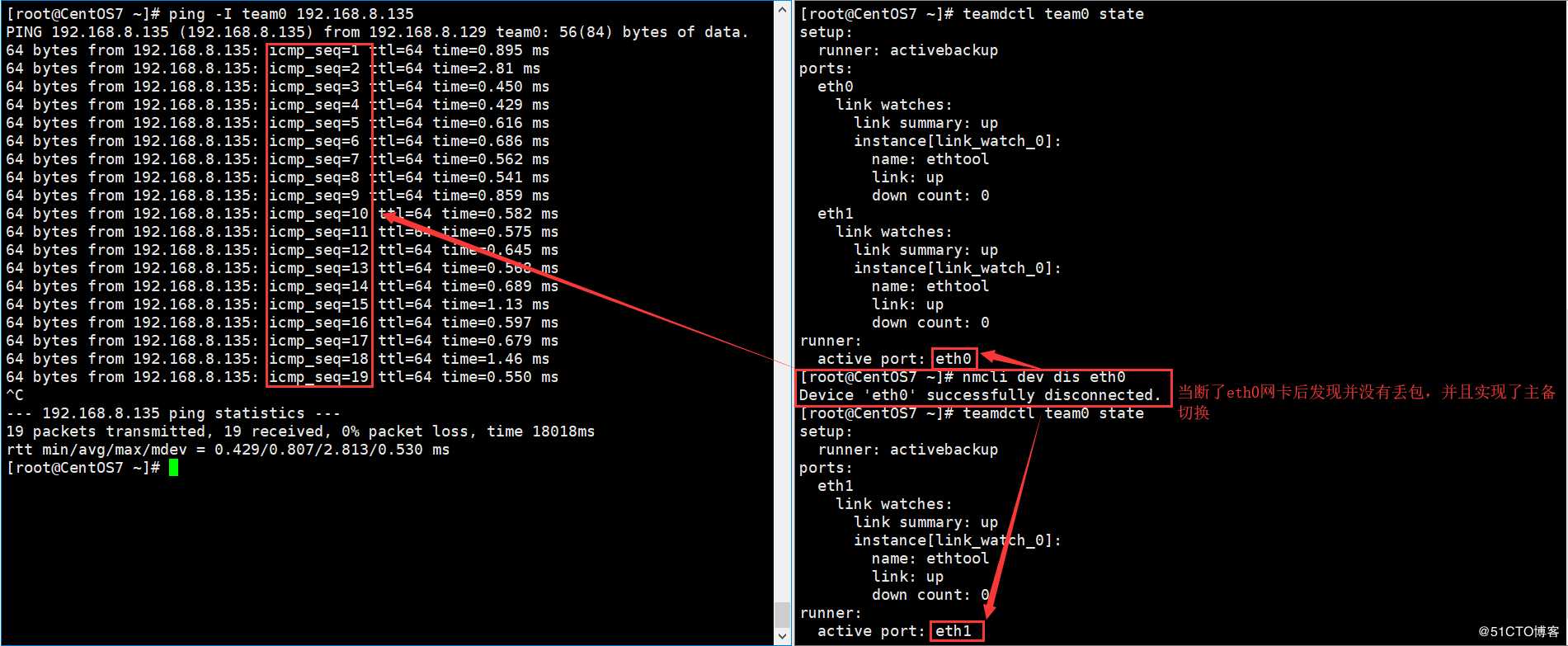

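From another Linux host, ping the IP address of this machine's bond0 interface, then deliberately take down the eth0 NIC and see whether an active-backup failover occurs.

Check the IP address of the local bond0 interface

[root@CentOS7 ~]# ip ad show dev bond0|sed -rn '3s#.* (.*)/24.*#\1#p'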

192.168.8.129
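The sed expression above relies on the address sitting on the third line of the output and on a /24 prefix. A less position-dependent variant is sketched below. It assumes the one-line format printed by `ip -o -4 addr show`, in which the fourth field is `address/prefix`. The helper name `first_ipv4` is hypothetical, and the sample line is constructed for illustration rather than captured from the host.

```bash
#!/usr/bin/env bash
# Read `ip -o -4 addr show dev <if>` style lines on stdin and print the
# first IPv4 address without its prefix length.
first_ipv4() {
    awk '$3 == "inet" { split($4, parts, "/"); print parts[1]; exit }'
}

# Illustrative sample in the one-line (-o) format; on a real host one
# would run:  ip -o -4 addr show dev bond0 | first_ipv4
sample='3: bond0    inet 192.168.8.129/24 brd 192.168.8.255 scope global dynamic bond0\       valid_lft 1700sec preferred_lft 1700sec'
printf '%s\n' "$sample" | first_ipv4
```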

[root@CentOS6 ~]# ping 192.168.8.129

PING 192.168.8.129 (192.168.8.129) 56(84) bytes of data.

64 bytes from 192.168.8.129: icmp_seq=1 ttl=64 time=0.600 ms

64 bytes from 192.168.8.129: icmp_seq=2 ttl=64 time=0.712 ms

64 bytes from 192.168.8.129: icmp_seq=3 ttl=64 time=2.20 ms

64 bytes from 192.168.8.129: icmp_seq=4 ttl=64 time=0.986 ms

64 bytes from 192.168.8.129: icmp_seq=7 ttl=64 time=0.432 ms

64 bytes from 192.168.8.129: icmp_seq=8 ttl=64 time=0.700 ms

64 bytes from 192.168.8.129: icmp_seq=9 ttl=64 time=0.571 ms

^C

--- 192.168.8.129 ping statistics ---

9 packets transmitted, 7 received, 22% packet loss, time 8679ms

rtt min/avg/max/mdev = 0.432/0.887/2.209/0.562 ms

While the other host was pinging, eth0 was disconnected and two packets in the middle were lost (icmp_seq 5 and 6).
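The lost probes show up as a gap in the icmp_seq numbers. As a rough sketch, the gap can be listed automatically. The helper name `missing_seqs` is hypothetical, and the code assumes the `icmp_seq=N` reply format printed by the Linux ping shown here.

```bash
#!/usr/bin/env bash
# Print the icmp_seq numbers that are missing from ping output on stdin.
missing_seqs() {
    grep -o 'icmp_seq=[0-9]*' | cut -d= -f2 | awk '
        NR > 1 { for (s = prev + 1; s < $1; s++) print s }
        { prev = $1 }'
}

# Reply lines copied from the ping run above.
missing_seqs <<'EOF'
64 bytes from 192.168.8.129: icmp_seq=4 ttl=64 time=0.986 ms
64 bytes from 192.168.8.129: icmp_seq=7 ttl=64 time=0.432 ms
64 bytes from 192.168.8.129: icmp_seq=8 ttl=64 time=0.700 ms
EOF
```

On a live test the same function could be fed from a ping log file, which gives a quick estimate of how long the failover took when combined with the ping interval.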

[root@CentOS7 ~]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eth1

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth0

MII Status: down

Speed: Unknown

Duplex: Unknown

Link Failure Count: 4

Permanent HW addr: 00:0c:29:08:2a:73

Slave queue ID: 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 2

Permanent HW addr: 00:0c:29:08:2a:7d

Slave queue ID: 0

The currently active slave is now eth1, which shows that the failover succeeded.
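Reading the status file by eye works for a one-off test, but the same check can be scripted. The following is a minimal sketch that pulls the active slave and the per-slave MII status out of the bonding status text. The function names are hypothetical, and the embedded text is an abbreviated copy of the output above.

```bash
#!/usr/bin/env bash
# Print the "Currently Active Slave" value from bonding status text on stdin.
active_slave() {
    awk -F': ' '/^Currently Active Slave:/ { print $2; exit }'
}

# Print "<slave> <MII status>" for every slave section in the status text.
slave_status() {
    awk -F': ' '
        /^Slave Interface:/ { slave = $2 }
        /^MII Status:/ && slave != "" { print slave, $2; slave = "" }'
}

# Abbreviated copy of the post-failover status shown above; on a real host
# one would feed /proc/net/bonding/bond0 into these functions instead.
status='Currently Active Slave: eth1
MII Status: up
Slave Interface: eth0
MII Status: down
Slave Interface: eth1
MII Status: up'

printf '%s\n' "$status" | active_slave
printf '%s\n' "$status" | slave_status
```

The bond-level "MII Status" line appears before any "Slave Interface" line, so `slave_status` skips it and reports only the per-slave entries.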

Configuration files generated automatically by the nmcli commands

[root@CentOS7 ~]# cd /etc/sysconfig/network-scripts/

[root@CentOS7 network-scripts]# ls ifcfg-bond*

ifcfg-bond01 ifcfg-bond-slave-eth0 ifcfg-bond-slave-eth1

[root@CentOS7 network-scripts]# cat ifcfg-bond01

BONDING_OPTS=mode=active-backup

TYPE=Bond

BONDING_MASTER=yes

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=dhcp

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=bond01

UUID=e5369ad8-2b8b-4cc1-aca2-67562282a637

DEVICE=bond0

ONBOOT=yes

[root@CentOS7 network-scripts]# cat ifcfg-bond-slave-eth0

TYPE=Ethernet

NAME=bond01-slave0

UUID=f6ed385e-e1ae-487d-b36a-43b13ac3f84f

DEVICE=eth0

ONBOOT=yes

MASTER_UUID=e5369ad8-2b8b-4cc1-aca2-67562282a637

MASTER=bond0

SLAVE=yes
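To confirm that a slave profile points at the intended bond, the key=value lines can be read directly. This is only a sketch: `ifcfg_get` is a hypothetical helper, it does not handle quoted values, and the temporary file holds a few lines copied from the listing above.

```bash
#!/usr/bin/env bash
# Print the value of KEY from a key=value ifcfg-style file.
ifcfg_get() {
    local file=$1 key=$2
    sed -n "s/^${key}=//p" "$file" | head -n 1
}

# Temporary file holding a few lines copied from ifcfg-bond-slave-eth0.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
TYPE=Ethernet
NAME=bond01-slave0
DEVICE=eth0
MASTER=bond0
SLAVE=yes
EOF

echo "device=$(ifcfg_get "$tmp" DEVICE) master=$(ifcfg_get "$tmp" MASTER)"
rm -f "$tmp"
```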

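The configuration file for bond01-slave1 is essentially the same as ifcfg-bond-slave-eth0.

Network teaming (team) runners:

broadcast: data is transmitted over all ports

activebackup: one port or link is used while the others are kept as backups

roundrobin: data is transmitted over all ports in turn

loadbalance: active Tx load balancing with a BPF-based Tx port selector

lacp: implements the 802.3ad Link Aggregation Control Protocol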
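The runner is selected through a small JSON document passed to nmcli. As a sketch, the JSON can be generated and validated against the runner names listed above, spelled the way teamd expects them (`activebackup` and `roundrobin`, without hyphens). The function name `team_config` is hypothetical.

```bash
#!/usr/bin/env bash
# Build the JSON for the team "config" property for a given runner.
# Only the runner names listed above are accepted.
team_config() {
    local runner=$1
    case $runner in
        broadcast|activebackup|roundrobin|loadbalance|lacp)
            printf '{"runner":{"name":"%s"}}\n' "$runner" ;;
        *)
            echo "unknown runner: $runner" >&2
            return 1 ;;
    esac
}

team_config activebackup
```

The result could then be passed on the command line, for example as `config "$(team_config activebackup)"`.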

1) Check the status of the added eth0 and eth1 NICs

[root@CentOS7 ~]# nmcli dev status

DEVICE TYPE STATE CONNECTION

eth0 ethernet disconnected --

eth1 ethernet disconnected --

2) Add a team interface named team0 in activebackup mode

[root@CentOS7 ~]# nmcli con add type team ifname team0 con-name team0 config '{"runner":{"name":"activebackup"}}'

Connection 'team0' (28b4e208-339f-4eb2-ae0f-6b07621e7685) successfully added.

3) Add the slave connections to the team named team0

[root@CentOS7 ~]# nmcli con add type team-slave ifname eth0 con-name team0-slave0 master team0

Connection 'team0-slave0' (3c1b3008-ebeb-4e2d-9790-30111f1e1271) successfully added.

[root@CentOS7 ~]# nmcli con add type team-slave ifname eth1 con-name team0-slave1 master team0

4) Bring up the team and its slave connections

[root@CentOS7 ~]# nmcli con up team0

[root@CentOS7 ~]# nmcli con up team0-slave0

[root@CentOS7 ~]# nmcli con up team0-slave1

[root@CentOS7 ~]# nmcli dev status

DEVICE TYPE STATE CONNECTION

team0 team connected team0

eth0 ethernet connected team0-slave0

eth1 ethernet connected team0-slave1

5) Check the team status

[root@CentOS7 ~]# teamdctl team0 state

setup:

runner: activebackup

ports:

eth0

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

eth1

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

runner:

active port: eth0

6) Test
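A failover check analogous to the bonding test can be scripted around the active port reported by teamdctl. The following is a minimal sketch. The function names are hypothetical, and the `state_after` text is a hypothetical post-failover state, not output captured from this host.

```bash
#!/usr/bin/env bash
# Print the active port reported in `teamdctl <team> state` text on stdin.
team_active_port() {
    awk -F': ' '/active port:/ { print $2; exit }'
}

# Succeed only if the active port differs from the port that was unplugged.
failover_ok() {
    local failed_port=$1 active
    active=$(team_active_port)
    [ -n "$active" ] && [ "$active" != "$failed_port" ]
}

# Hypothetical, abbreviated state after eth0 was taken down; on a real
# host one would pipe in the output of:  teamdctl team0 state
state_after='runner:
  active port: eth1'

if printf '%s\n' "$state_after" | failover_ok eth0; then
    echo "failover to $(printf '%s\n' "$state_after" | team_active_port)"
fi
```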

Configuration files generated automatically by the nmcli commands

[root@CentOS7 ~]# cd /etc/sysconfig/network-scripts/

[root@CentOS7 network-scripts]# ls ifcfg-team0*

[root@CentOS7 network-scripts]# grep -v "^IPV6" ifcfg-team0

TEAM_CONFIG="{\"runner\":{\"name\":\"activebackup\"}}"

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=dhcp

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

NAME=team0

UUID=28b4e208-339f-4eb2-ae0f-6b07621e7685

DEVICE=team0

ONBOOT=yes

DEVICETYPE=Team

[root@CentOS7 network-scripts]# cat ifcfg-team0-slave0

NAME=team0-slave0

UUID=3c1b3008-ebeb-4e2d-9790-30111f1e1271

DEVICE=eth0

ONBOOT=yes

TEAM_MASTER=team0

DEVICETYPE=TeamPort

[root@CentOS7 network-scripts]# cat ifcfg-team0-slave1

NAME=team0-slave1

DEVICE=eth1

ONBOOT=yes

TEAM_MASTER=team0
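The runner stored in a generated team profile can be read back from the escaped TEAM_CONFIG line. The sketch below sources the file in a subshell so the shell itself undoes the escaping, then uses a simple pattern rather than a real JSON parser. `team_runner_from_ifcfg` is a hypothetical helper, it assumes an absolute path to a trusted file, and the pattern only covers the simple single-runner layout shown above.

```bash
#!/usr/bin/env bash
# Print the runner name stored in the TEAM_CONFIG line of an ifcfg file.
team_runner_from_ifcfg() {
    local file=$1
    # Source in a subshell so the file's variables do not leak into ours.
    ( . "$file" && printf '%s\n' "$TEAM_CONFIG" ) |
        sed -n 's/.*"runner"[^}]*"name" *: *"\([^"]*\)".*/\1/p'
}

# Temporary file holding lines copied from ifcfg-team0 above.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
TEAM_CONFIG="{\"runner\":{\"name\":\"activebackup\"}}"
NAME=team0
DEVICE=team0
DEVICETYPE=Team
EOF

team_runner_from_ifcfg "$tmp"
rm -f "$tmp"
```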

Original article: https://blog.51cto.com/hexiaoshuai/2445637