The PHP documentation is pretty clear on how to get a backtrace in PHP, but some of the explanations are confusing and seem focused on mod_php, instead of PHP-FPM. So here‘s the steps you can take to enable core dumps in PHP-FPM pools.

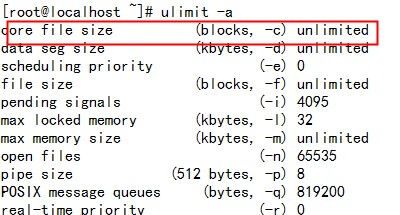

Enable core dumps on Linux

Chances are, your current Linux config doesn‘t support core dumps yet. You can enable them and set the location where the kernel will dump the core files.

$ echo ‘/tmp/coredump-%e.%p‘ > /proc/sys/kernel/core_pattern

You can use many different kinds of core dump variables for the filename, such as;

%% a single % character

%c core file size soft resource limit of crashing process (since

Linux 2.6.24)

%d dump mode—same as value returned by prctl(2) PR_GET_DUMPABLE

(since Linux 3.7)

%e executable filename (without path prefix)

%E pathname of executable, with slashes (‘/‘) replaced by

exclamation marks (‘!‘) (since Linux 3.0).

%g (numeric) real GID of dumped process

%h hostname (same as nodename returned by uname(2))

%p PID of dumped process, as seen in the PID namespace in which

the process resides

%P PID of dumped process, as seen in the initial PID namespace

(since Linux 3.12)

%s number of signal causing dump

%t time of dump, expressed as seconds since the Epoch,

1970-01-01 00:00:00 +0000 (UTC)

%u (numeric) real UID of dumped process

The example above will use the executable name (%e) and the pidfile (%p) in the filename. It‘ll dump in /tmp, as that will be writable to any kind of user.

Now that your kernel knows where to save the core dumps, it‘s time to change PHP-FPM.

Enable PHP-FPM core dumps per pool

To enable a core dump on a SIGSEGV, you can enable the rlimit_core option per PHP-FPM pool. Open your pool configuration and add the following.

rlimit_core = unlimited

Restart your PHP-FPM daemon (service php-fpm restart) to activate the config. Next time a SIGSEGV happens, your PHP-FPM logs will show you some more information.

WARNING: [pool poolname] child 20076 exited on signal 11 (SIGSEGV - core dumped) after 8.775895 seconds from start

You can find the core-dump in /tmp/coredump*.

$ ls /tmp/coredump* -rw------- 1 user group 220M /tmp/coredump-php-fpm.2393

The filename shows the program (php-fpm) and the PID (2393).

Reading the core dumps

This is one part that the PHP docs are pretty clear about, so just a copy paste with modified/updated paths.

First, you need gdb installed (yum install gdb) to get the backtraces. You then start the gdb binary like gdb $program-path $coredump-path. Since our program is php-fpm, which resides in /usr/sbin/php-fpm, we call the gdb binary like this.

$ gdb /usr/sbin/php-fpm /tmp/coredump-php-fpm.2393 (gdb loading all symbols ... ) ... Reading symbols from /usr/lib64/php/modules/memcache.so...(no debugging symbols found)...done. Loaded symbols for /usr/lib64/php/modules/memcache.so ... (gdb) bt #0 0x00007f8a8b6d7c37 in mmc_value_handler_multi () from /usr/lib64/php/modules/memcache.so #1 0x00007f8a8b6db9ad in mmc_unpack_value () from /usr/lib64/php/modules/memcache.so #2 0x00007f8a8b6e0637 in ?? () from /usr/lib64/php/modules/memcache.so #3 0x00007f8a8b6dd55b in mmc_pool_select () from /usr/lib64/php/modules/memcache.so #4 0x00007f8a8b6ddcc8 in mmc_pool_run () from /usr/lib64/php/modules/memcache.so #5 0x00007f8a8b6d7e92 in ?? () from /usr/lib64/php/modules/memcache.so #6 0x00007f8a8ac335cf in nr_php_curl_setopt () at /home/hudson/slave-workspace/workspace/PHP_Release_Agent/label/centos5-64-nrcamp/agent/php_curl.c:202 #7 0x0000000002b14fe0 in ?? () #8 0x0000000000000000 in ?? ()

The bt command will show you the PHP backtrace on the moment of the core dump. To exit gdb, just type quit.

There are other good-to-know gdb commands too, for instance -- from your gdb shell (which you started above), type the following:

$ gdb /usr/sbin/php-fpm /tmp/coredump-php-fpm.2393 (gdb) print (char *)(executor_globals.function_state_ptr->function)->common.function_name There is no member named function_state_ptr. (gdb) print (char *)executor_globals.active_op_array->function_name $1 = 0x7f97a47a6670 "_drupal_error_handler_real" (gdb) print (char *)executor_globals.active_op_array->filename $2 = 0x7f97a47a4458 "/var/www/vhosts/site.tld/htdocs/includes/errors.inc"

In summary:

- first print: can tell you which function call in PHP‘s user-land caused the error

- second print: can tell you which method got called that caused the error

- third print: can tell you which PHP file called that method/function that caused the segfault

Not all data is always available, but it can help narrow down your search.

You can dive even further into each line of the stacktrace. The number in front of each line is a frame number. You can access them like this. In this example, I‘ll explore frame 11.

(gdb) frame 11

#11 0x00007f97c3ee4f43 in zend_call_function (fci=fci@entry=0x7fff44686280, fci_cache=<optimized out>, fci_cache@entry=0x0) at /usr/src/debug/php-5.6.16/Zend/zend_execute_API.c:829

829 zend_execute(EG(active_op_array) TSRMLS_CC);

(gdb) info frame

Stack level 11, frame at 0x7fff44686280:

rip = 0x7f97c3ee4f43 in zend_call_function (/usr/src/debug/php-5.6.16/Zend/zend_execute_API.c:829); saved rip 0x7f97c3ee513f

called by frame at 0x7fff446862e0, caller of frame at 0x7fff44686140

...

(gdb) info locals

i =

execute_data = {opline = 0x0, function_state = {function = 0x7f97c5709f40, arguments = 0x7f97c3c3a1c0}, op_array = 0x0, object = 0x0, symbol_table = 0x7f97a2b14880, ...

args = {{gp_offset = 16, fp_offset = 48, overflow_arg_area = 0x7fff44686520, reg_save_area = 0x7fff44686430}}

usr_copy = {{gp_offset = 40, fp_offset = 48, overflow_arg_area = 0x7fff44686520, reg_save_area = 0x7fff44686430}}

params = 0x7f97c6494518

error_filename = 0x7f97a6152318 "/var/www/vhosts/drupal.hotelgiftbox.com/htdocs/sites/all/modules/contrib/mailmime/mailmime.inc"

(gdb) info args

fci = 0x7fff44686280

fci_cache = <optimized out>

The last line, info args, tells you which arguments were passed to this call. The info locals tells you which local variables were in use. You can inspect both, so for the info args we can look at the ‘fci‘ or ‘fci_cache‘ variables, for info locals we can look at ‘execute_data‘, ‘i‘ etc.

First, check which data type they are.

(gdb) ptype i

type = unsigned int

(gdb) ptype execute_data

type = struct _zend_execute_data {

struct _zend_op *opline;

zend_function_state function_state;

zend_op_array *op_array;

zval *object;

HashTable *symbol_table;

struct _zend_execute_data *prev_execute_data;

zval *old_error_reporting;

zend_bool nested;

zval **original_return_value;

zend_class_entry *current_scope;

zend_class_entry *current_called_scope;

zval *current_this;

struct _zend_op *fast_ret;

zval *delayed_exception;

call_slot *call_slots;

call_slot *call;

}

This tells us that ‘i‘ is an unsigned integer and that ‘execute_data‘ is a struct. To see the value of each variables, print them.

(gdb) print i $1 = 1

These commands get you a long way: you can investigate each frame, show the arguments and local values, and inspect the value of each variable.

It‘s time consuming tedious work, but it can get you to the fix.

I‘m an independent software developer

I‘m an independent software developer  & Linux sysadmin

& Linux sysadmin  , a general web geek &

, a general web geek &  .

. If you‘re stuck with a technical problem, I‘m

If you‘re stuck with a technical problem, I‘m

Comments

Guy Paddock Sunday, December 14, 2014 at 20:32 -

So… I think you and I are troubleshooting a similar issue with PHP-FPM, Memcache, and New Relic, judging from the date of your post and the fact that your post was exactly what I needed to get a coredump that looks eerily like mine.

Mattias Geniar Sunday, December 14, 2014 at 20:34 -

Haha, that’s very possible – this was indeed the result of a New Relic backtrace combined with Memcached. :-)

Guy Paddock Sunday, December 14, 2014 at 20:51 -

Were you able to find a workaround, or are you waiting on NR support like I am?

Mattias Geniar Sunday, December 14, 2014 at 20:54 -

It hasn’t occured since, actually. Since finding the core-dumps, I installed a NR update (somewhere around end of october 2014) for the PHP module. I’m waiting for new core dumps since then.

If it happens again, I’ll open a support case, but for now I’m unclear what the actual reason was. The release notes of the PHP module don’t show any fix for a SEGFAULT, but I’m just hoping this got quietly fixed: https://docs.newrelic.com/docs/release-notes/agent-release-notes/php-release-notes

If you do hear from them, please let me know? I’d be interested!

Guy Paddock Sunday, December 14, 2014 at 20:59 -

Hmm, well, we’re using 4.15.0.74 (“zanabazar”) and only seeing it on one out of several sites using Couchbase / Memcache on the same server.

There’s definitely something that triggers the behavior, but we haven’t figured out what it is.

Guy Paddock Monday, December 15, 2014 at 02:23 -

While waiting for New Relic support, I traced this issue to an issue filed against libmemcached 1.0.16 relating to a “double-free” of memory that appears to cause this type of problem:

https://bugs.launchpad.net/bugs/1126601

The fix appears to be in 1.0.17, but the Atomic Repo doesn’t have anything past 1.0.16.

In the mean time, I’ve rolled this package:

https://github.com/GuyPaddock/express-tech/tree/master/centos/libmemcached-1.0.18

Pat Monday, July 27, 2015 at 09:20 -

Thanks!!!! You saved me. I had a low-level issue that had my head scratching and your tutorial solved it.