
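I've watched quite a few videos about Kubernetes online. I'm not using it in practice yet, but it's time to write up what I've learned. My father often told me: whatever you learn will be useful someday! I believe that one day I'll use the technology I'm learning now. Enough chatter, let's get started.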

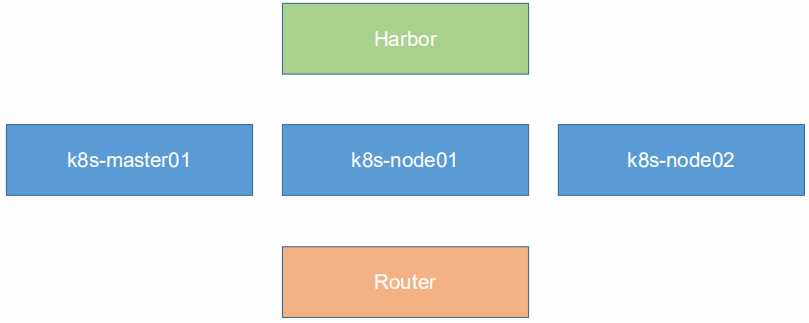

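1. k8s-master01: the master server (a single point of failure)

2. k8s-node01, k8s-node02: two worker nodes

3. Harbor: a private image registry (see my article "A brief record of setting up a private Harbor registry")

4. Router: a soft router. It is needed because kubeadm is hosted on Google Cloud, which cannot be reached from mainland China (see "K8S cluster setup: installing the soft router").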

The following preparation steps are performed on all three K8S nodes.

hostnamectl set-hostname k8s-master01 #on the master only

hostnamectl set-hostname k8s-node01 #on node01 only

hostnamectl set-hostname k8s-node02 #on node02 only

Edit /etc/hosts (vi /etc/hosts) and add the following entries:

192.168.66.10 k8s-master01

192.168.66.20 k8s-node01

192.168.66.21 k8s-node02
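Editing /etc/hosts by hand on three machines is easy to get wrong. Below is a minimal non-interactive alternative. It assumes bash and the three addresses listed above, and the function name `add_cluster_hosts` is hypothetical. The function appends an entry only when the hostname is not already mapped, so running it twice does no harm. It also accepts an optional file path, which lets you try it on a scratch copy first.

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file
# (default /etc/hosts), skipping any hostname that is already mapped.
add_cluster_hosts() {
  local hosts_file=${1:-/etc/hosts} entry name
  for entry in "192.168.66.10 k8s-master01" \
               "192.168.66.20 k8s-node01" \
               "192.168.66.21 k8s-node02"; do
    name=${entry#* }   # hostname part of "IP hostname"
    # awk exits 0 only if a non-comment line already lists this exact hostname
    if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 } END { exit !f }' "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_cluster_hosts` as root on each node, or `add_cluster_hosts /tmp/hosts.test` to preview the result.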

#Install dependency packages

yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

#Replace firewalld with iptables and flush all rules

systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

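#Turn swap off now and comment out the swap entries in /etc/fstab (by default kubelet refuses to start while swap is on)

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

#Switch SELinux to permissive now and disable it permanently

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config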
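You can confirm that swap will stay off after the reboot later in this guide by checking /etc/fstab for entries that are still active. The small sketch below does that; the function name is hypothetical. It prints every uncommented /etc/fstab line whose filesystem type (the third field) is swap, so empty output means the sed above did its job. At runtime, `cat /proc/swaps` should list no devices, and `getenforce` should print Permissive now and Disabled after a reboot.

```shell
# Hypothetical check: print fstab entries that would still enable swap at boot.
# Commented-out lines (such as those produced by the sed above) are ignored.
active_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

Running `active_swap_entries` with no argument checks the real /etc/fstab; no output is the expected result.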

#Write the kernel parameters for Kubernetes to kubernetes.conf

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -f /etc/sysctl.d/kubernetes.conf
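A misspelled key is easy to miss. Also, on most current kernels the net.bridge.* keys only exist once the br_netfilter module is loaded (`modprobe br_netfilter`). The hedged sketch below helps spot either problem. The hypothetical function maps each key in the file to its path under /proc/sys (dots become slashes) and prints the keys that have no such entry on the running kernel.

```shell
# Hypothetical check: list keys from a sysctl drop-in file that have no
# matching entry under /proc/sys (misspelled key or kernel module not loaded).
missing_sysctl_keys() {
  local conf=${1:-/etc/sysctl.d/kubernetes.conf} key
  # keep "key=value" lines only: skip blanks and comments, then drop the value
  grep -E '^[[:space:]]*[a-z]' "$conf" | cut -d= -f1 | tr -d ' \t' |
    while read -r key; do
      # net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
      [ -e "/proc/sys/$(echo "$key" | tr . /)" ] || echo "$key"
    done
}
```

If the only keys reported are net.bridge.*, load br_netfilter and apply the file again.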

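#Set the system time zone to Asia/Shanghai

timedatectl set-timezone Asia/Shanghai

#Keep the hardware clock (RTC) in UTC

timedatectl set-local-rtc 0

#Restart the services that depend on the system time

systemctl restart rsyslog

systemctl restart crond

#Stop and disable postfix, which the cluster does not need

systemctl stop postfix && systemctl disable postfix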

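mkdir /var/log/journal #directory for persistent logs

mkdir /etc/systemd/journald.conf.d

#The drop-in file name is arbitrary, but journald only reads files ending in .conf

cat > /etc/systemd/journald.conf.d/99-k8s.conf <<EOF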

[Journal]

#Persist logs to disk

Storage=persistent

#Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

#Use at most 10G of disk space

SystemMaxUse=10G

#Limit each journal file to 200M

SystemMaxFileSize=200M

#Keep logs for 2 weeks

MaxRetentionSec=2week

#Do not forward logs to syslog

ForwardToSyslog=no

EOF

systemctl restart systemd-journald

The stock 3.10.x kernel that ships with CentOS 7.x has some bugs that make Docker and Kubernetes unstable, so upgrade to the long-term kernel from ELRepo:

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

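#After installing, check that the new kernel's menuentry in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install again

yum --enablerepo=elrepo-kernel install -y kernel-lt

#Boot from the new kernel by default (adjust the title to the kernel-lt version actually installed)

grub2-set-default 'CentOS Linux (4.4.189-1.el7.elrepo.x86_64) 7 (Core)'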
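The title passed to grub2-set-default must match the installed kernel exactly. The 4.4.189 version shown is simply whatever kernel-lt version was current when this was written. The sketch below lists the candidate titles. It assumes the standard grub2 layout, where each boot entry starts with `menuentry '<title>'`, and the function name is hypothetical.

```shell
# Hypothetical helper: print the titles of grub2 boot entries for ELRepo kernels.
# The title is the text between the first pair of single quotes on a menuentry line.
elrepo_kernel_entries() {
  awk -F"'" '/^menuentry / && /elrepo/ { print $2 }' "${1:-/boot/grub2/grub.cfg}"
}

# Example (as root): make the first ELRepo entry the default boot kernel
# grub2-set-default "$(elrepo_kernel_entries | head -n 1)"
```

After the reboot, `uname -r` should report the new kernel version.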

#Reboot the machine

reboot

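Next, configure Docker (it must already be installed): use the systemd cgroup driver and cap JSON log files at 100 MB.

mkdir -p /etc/docker

vim /etc/docker/daemon.json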

{

"exec-opts":["native.cgroupdriver=systemd"],

"log-driver":"json-file",

"log-opts":

{

"max-size":"100m"

}

}

mkdir -p /etc/systemd/system/docker.service.d

systemctl daemon-reload && systemctl restart docker && systemctl enable docker
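The same daemon.json can be written without an interactive editor. The minimal sketch below assumes bash; the function name and its directory argument are hypothetical. When jq (installed with the dependency packages earlier) is available, the function validates the file with it, because a JSON syntax error in daemon.json stops the Docker daemon from starting. After restarting Docker, `docker info` should report `Cgroup Driver: systemd`. kubeadm's preflight checks warn when Docker still uses cgroupfs.

```shell
# Hypothetical helper: write the Docker daemon configuration used in this guide.
write_daemon_json() {
  local dir=${1:-/etc/docker}
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  }
}
EOF
  # "jq empty" parses the file and prints nothing; it fails on invalid JSON
  if command -v jq >/dev/null 2>&1; then
    jq empty "$dir/daemon.json"
  fi
}
```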

Install kubeadm, kubelet and kubectl from the Aliyun mirror of the Kubernetes yum repository: https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.53322f70MCb4ok

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1

systemctl enable kubelet.service
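kubelet, kubeadm and kubectl should be installed at the same version. Mixing versions, for example by letting yum pull in the newest kubelet, can lead to version-skew problems. Below is a trivial sketch of a hypothetical helper that builds the package list from one version string. `yum list kubeadm --showduplicates` shows which versions the mirror actually offers.

```shell
# Hypothetical helper: print "kubelet-V kubeadm-V kubectl-V" for one version V.
k8s_packages() {
  local version=${1:?usage: k8s_packages VERSION}
  local pkg out=""
  for pkg in kubelet kubeadm kubectl; do
    out="$out $pkg-$version"
  done
  echo "${out# }"   # drop the leading space
}

# Example (as root): yum install -y $(k8s_packages 1.15.1)
```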

If yum reports that the socat dependency is missing, install it first. My article "Installing and Using RabbitMQ on Linux" walks through installing socat.

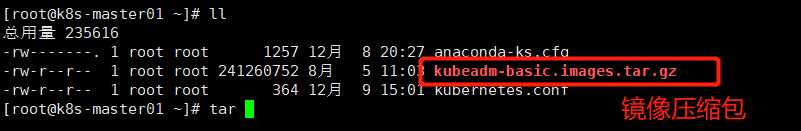

So the images have to be imported manually. I use a set that someone else pulled from outside the firewall: https://pan.baidu.com/share/init?surl=yrbtLGXqXaXmauScaif-3A, extraction code: r6m5

tar -xvf kubeadm-basic.images.tar.gz

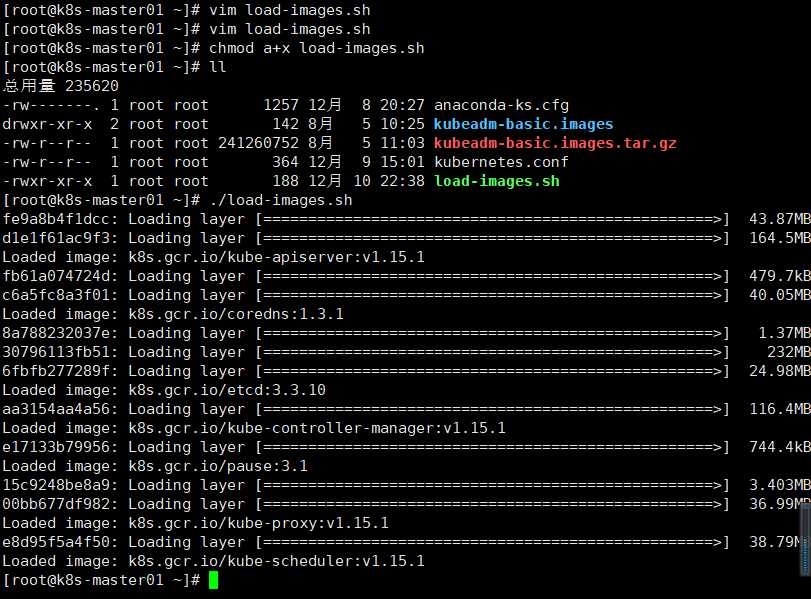

Save the following script as load-images.sh:

#!/bin/bash

#Load every image archive from the extracted directory into Docker

cd /root/kubeadm-basic.images || exit 1

for i in *; do

    docker load -i "$i"

done

chmod a+x load-images.sh

scp -r kubeadm-basic.images load-images.sh root@k8s-node01:/root/

scp -r kubeadm-basic.images load-images.sh root@k8s-node02:/root/

./load-images.sh #run it from root's home directory on the master and on both worker nodes
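Before running kubeadm init, it is worth checking that every image kubeadm expects is actually loaded on each node. The sketch below assumes two lists. The expected list comes from `kubeadm config images list --kubernetes-version v1.15.1 > expected.txt`, and the present list comes from `docker images --format '{{.Repository}}:{{.Tag}}' > present.txt`. The function name is hypothetical. It prints each expected image that is missing and, like grep, exits with status 1 when nothing is missing.

```shell
# Hypothetical check: print lines of the expected-image list (file $1) that do
# not appear verbatim in the list of images present on this node (file $2).
missing_images() {
  # -v: invert, -x: whole-line match, -F: fixed strings, -f: patterns from file
  grep -vxF -f "$2" "$1"
}

# Example: missing_images expected.txt present.txt
```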

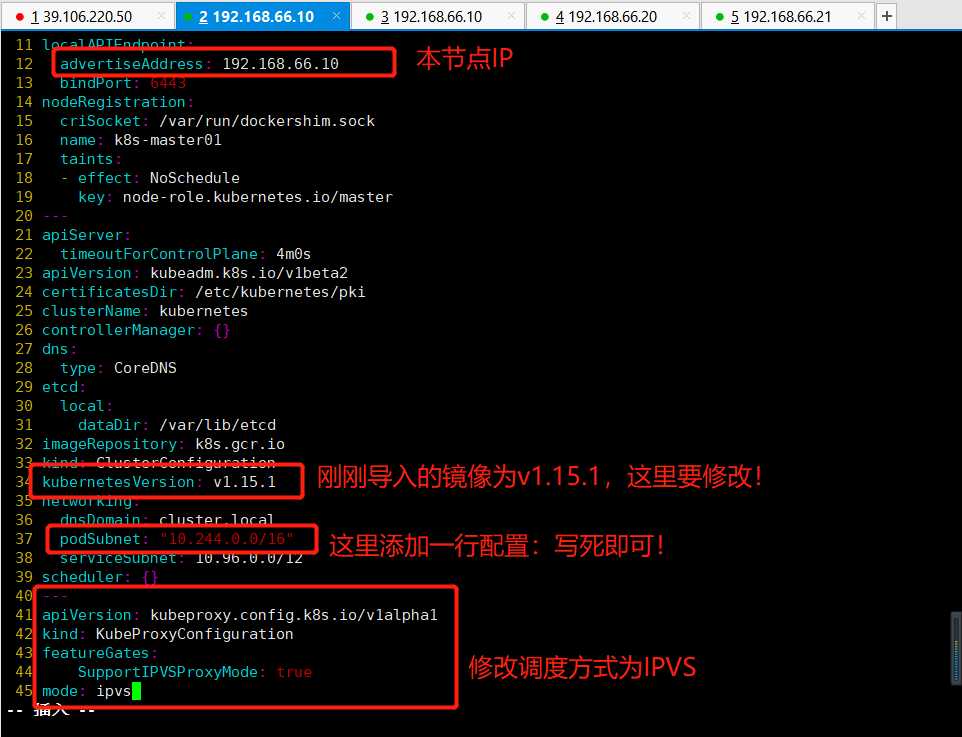

kubeadm config print init-defaults > kubeadm-config.yaml #on the master: write the default init configuration to kubeadm-config.yaml
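The generated file then needs a few edits, presumably what the following screenshots show. The hedged sketch below makes the same kind of edits with sed and awk. It assumes these are the usual ones for this setup:

- advertiseAddress set to the master's address, 192.168.66.10
- kubernetesVersion set to v1.15.1, to match the installed packages and the loaded images
- a podSubnet of 10.244.0.0/16, which is the network flannel's default kube-flannel.yml expects

The function name is hypothetical. The podSubnet line is inserted with the two-space indentation used in the generated networking section.

```shell
# Hypothetical helper: apply the assumed edits to a file produced by
# "kubeadm config print init-defaults". Safe to run more than once.
customize_kubeadm_config() {
  local cfg=${1:-kubeadm-config.yaml}
  # API server address: the master's IP from /etc/hosts
  sed -i 's/^\([[:space:]]*advertiseAddress:\).*/\1 192.168.66.10/' "$cfg"
  # Match the installed kubeadm/kubelet and the imported images
  sed -i 's/^kubernetesVersion:.*/kubernetesVersion: v1.15.1/' "$cfg"
  # Pod network for flannel, inserted just before serviceSubnet
  if ! grep -q 'podSubnet:' "$cfg"; then
    awk '/^[ \t]*serviceSubnet:/ { print "  podSubnet: 10.244.0.0/16" } { print }' \
      "$cfg" > "$cfg.tmp" && mv "$cfg.tmp" "$cfg"
  fi
}
```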


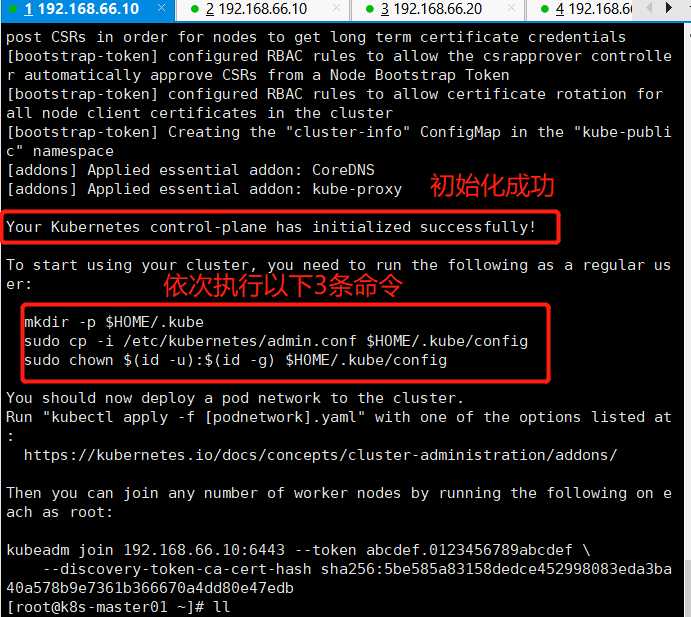

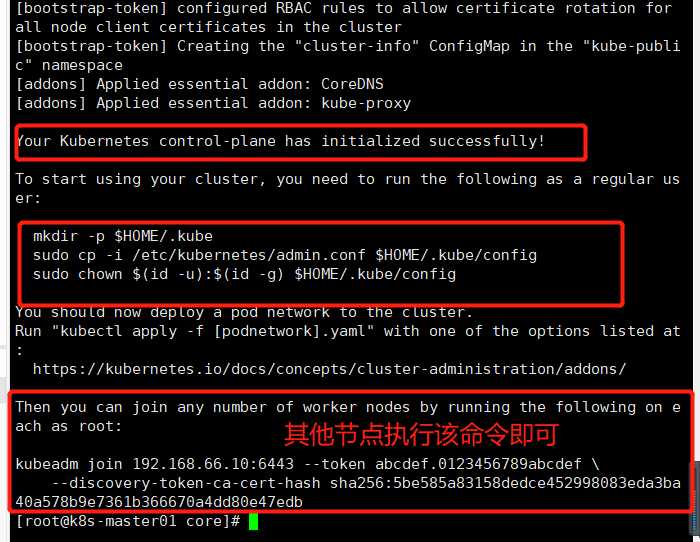

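kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log #initialize the master and keep the output in kubeadm-init.log

(In kubeadm 1.15, --experimental-upload-certs is deprecated in favor of --upload-certs but still works.)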

When init finishes, run the three commands it prints, in order:

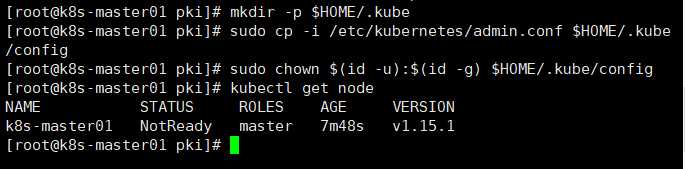

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

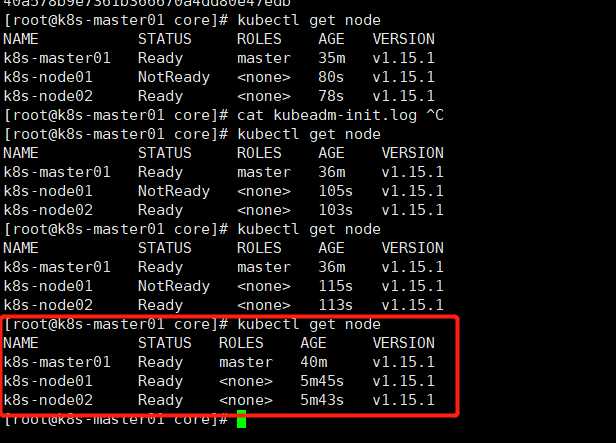

As the previous step shows, the node status is NotReady because no flat pod network has been deployed yet. Deploy one now with flannel:

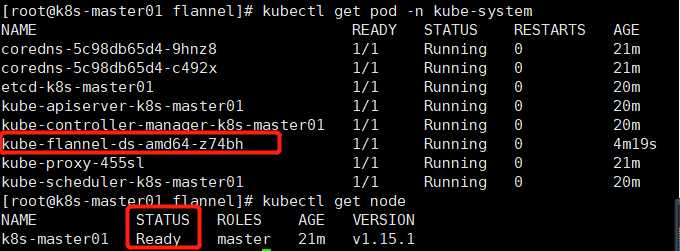

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl create -f kube-flannel.yml

kubectl get pod -n kube-system

kubectl get node #check the node status again
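Nodes only turn Ready once the flannel pods are running, which can take a minute. Instead of re-running `kubectl get node` by hand, you can poll it. The sketch below uses a hypothetical function that reads `kubectl get nodes --no-headers` output and succeeds only when every node's STATUS column is Ready. kubectl also has a built-in alternative: `kubectl wait --for=condition=Ready node --all --timeout=300s`.

```shell
# Hypothetical check: read "kubectl get nodes --no-headers" output on stdin and
# succeed only if there is at least one node and every STATUS (column 2) is Ready.
# "Ready,SchedulingDisabled" (a cordoned node) also counts as Ready here.
all_nodes_ready() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Example: wait until every node reports Ready
# until kubectl get nodes --no-headers | all_nodes_ready; do sleep 5; done
```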

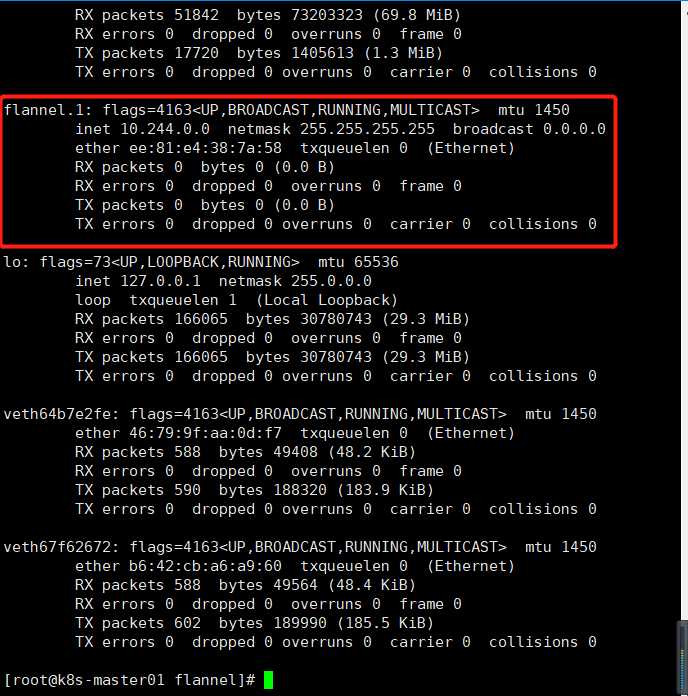

ifconfig also shows the new flannel network interface.

The join command was printed in the log when the master was initialized. Run it on k8s-node01 and k8s-node02:

kubeadm join 192.168.66.10:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:5be585a83158dedce452998083eda3ba40a578b9e7361b366670a4dd80e47edb
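You can also pull the command out of kubeadm-init.log instead of copying it by hand. The sketch below assumes the log wraps the command over several lines with trailing backslashes. Because init ran with --experimental-upload-certs, the log may also contain a control-plane join command (one with --control-plane), and the hypothetical function skips it. Bootstrap tokens expire after 24 hours by default. After that, run `kubeadm token create --print-join-command` on the master to get a fresh command.

```shell
# Hypothetical helper: print the worker join command recorded in a kubeadm init log.
# Continuation lines (ending in a backslash) are joined into one line, join commands
# containing --control-plane are skipped, and the last remaining one is printed.
worker_join_command() {
  awk '
    /kubeadm join/ { grab = 1; cmd = "" }
    grab {
      line = $0
      if (cmd == "") sub(/^.*kubeadm join/, "kubeadm join", line)  # drop text before the command
      sub(/^[ \t]+/, "", line)                                    # drop indentation
      more = (line ~ /\\$/)                                       # trailing backslash: continues
      sub(/[ \t]*\\$/, "", line)                                  # remove the backslash
      cmd = (cmd == "" ? line : cmd " " line)
      if (!more) {
        grab = 0
        if (cmd !~ /--control-plane/) found = cmd
      }
    }
    END { if (found != "") print found; else exit 1 }
  ' "${1:-kubeadm-init.log}"
}
```

On the master, `worker_join_command` prints the command, ready to paste on each worker.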

Then check on the master: kubectl get node

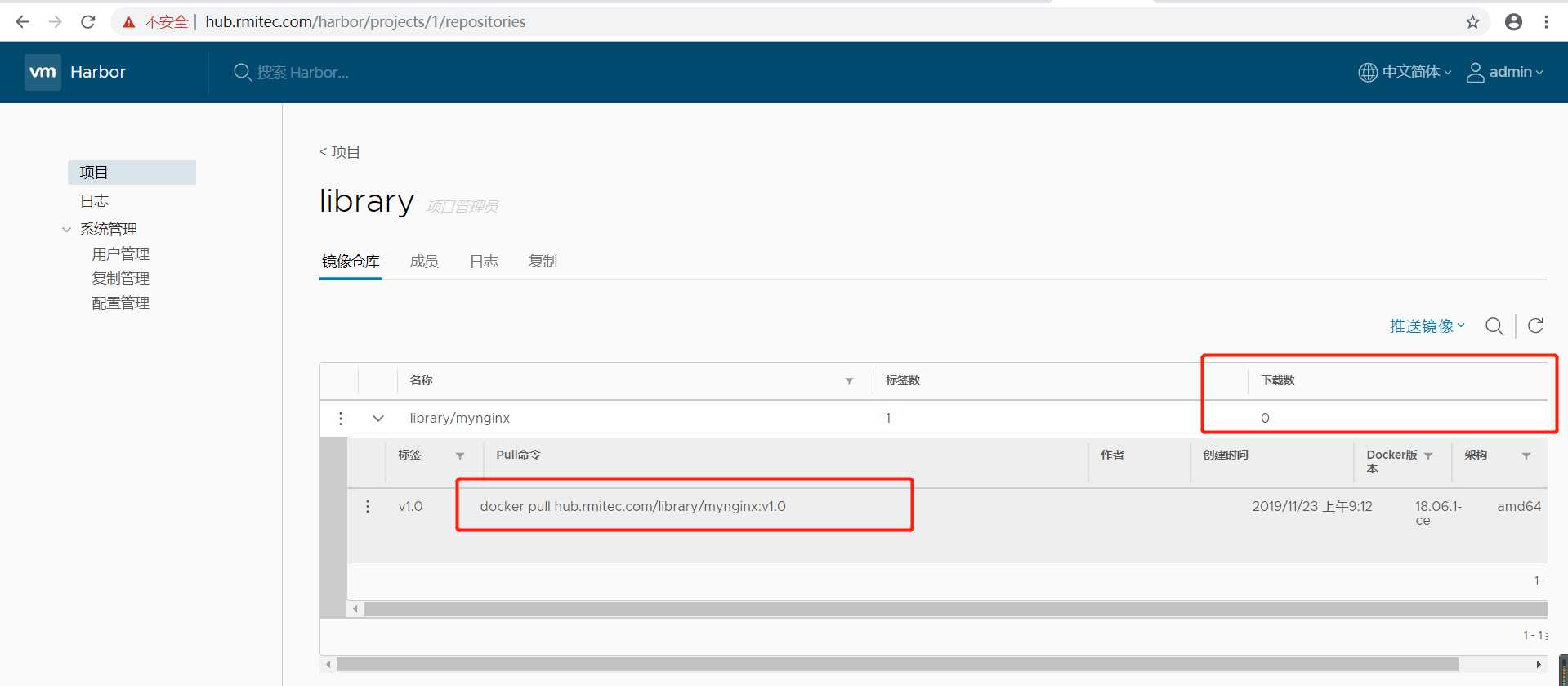

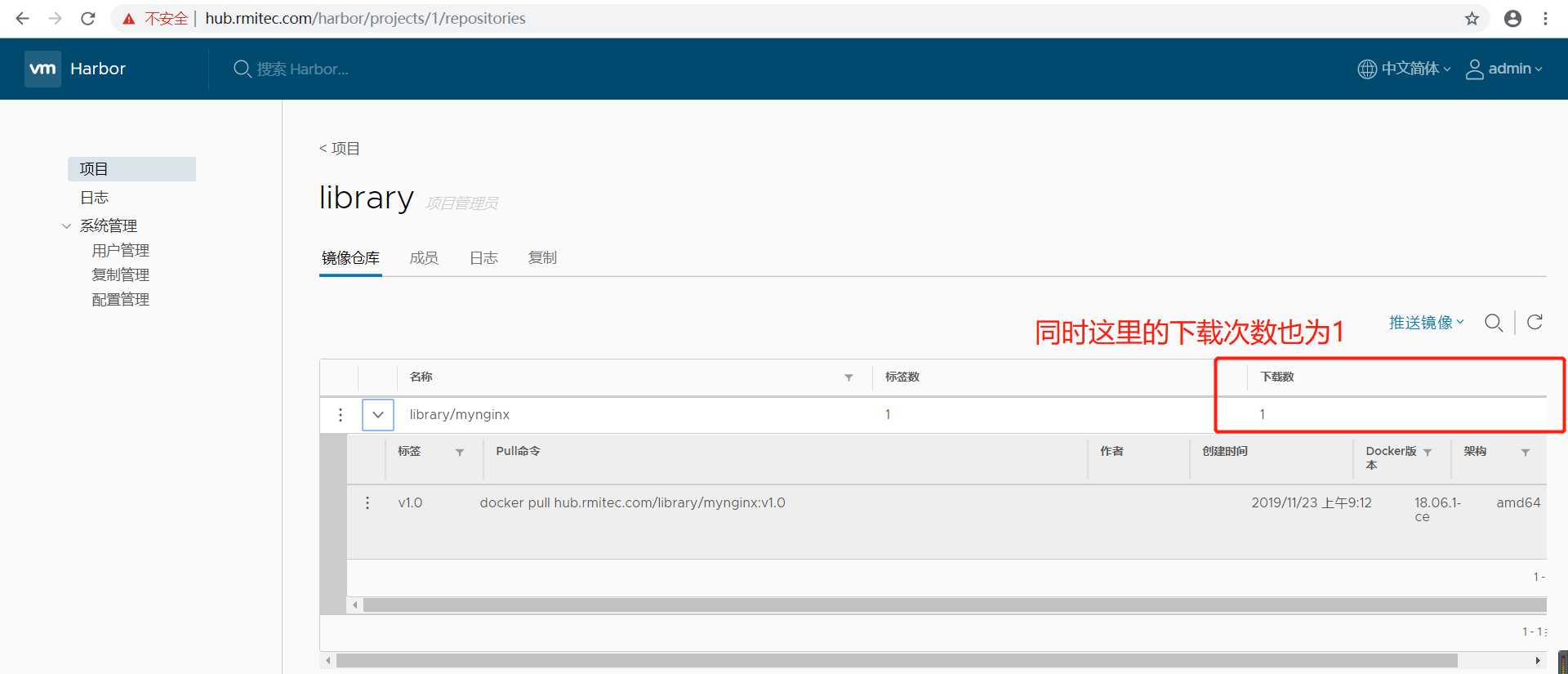

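To set up the private Harbor registry, see my article "A brief record of setting up a private Harbor registry". Then, on every node, add Harbor's domain to insecure-registries in /etc/docker/daemon.json: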

{

"exec-opts":["native.cgroupdriver=systemd"],

"insecure-registries": ["hub.rmitec.com"],

"log-driver":"json-file",

"log-opts":

{

"max-size":"100m"

}

}

Also add the Harbor domain to /etc/hosts on each node (192.168.66.15 is the Harbor server):

192.168.66.10 k8s-master01

192.168.66.20 k8s-node01

192.168.66.21 k8s-node02

192.168.66.15 hub.rmitec.com

systemctl restart docker
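The image used below, hub.rmitec.com/library/mynginx:v1.0, has to be pushed to Harbor first. The sketch below shows that step. It assumes a local image named mynginx:v1.0 (hypothetical) and that `docker login hub.rmitec.com` has already succeeded. The function name and the DRY_RUN switch are also hypothetical: with DRY_RUN=1, the function only prints the docker commands.

```shell
# Hypothetical helper: tag a local image for Harbor and push it.
# Assumes "docker login" to the registry has already succeeded.
# With DRY_RUN=1 the docker commands are printed instead of executed.
push_to_harbor() {
  local image=${1:?usage: push_to_harbor IMAGE:TAG [REGISTRY] [PROJECT]}
  local registry=${2:-hub.rmitec.com}
  local project=${3:-library}
  local target="$registry/$project/$image"
  local run=""
  if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi
  $run docker tag "$image" "$target" || return
  $run docker push "$target"
}

# Example: push_to_harbor mynginx:v1.0   (pushes hub.rmitec.com/library/mynginx:v1.0)
```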

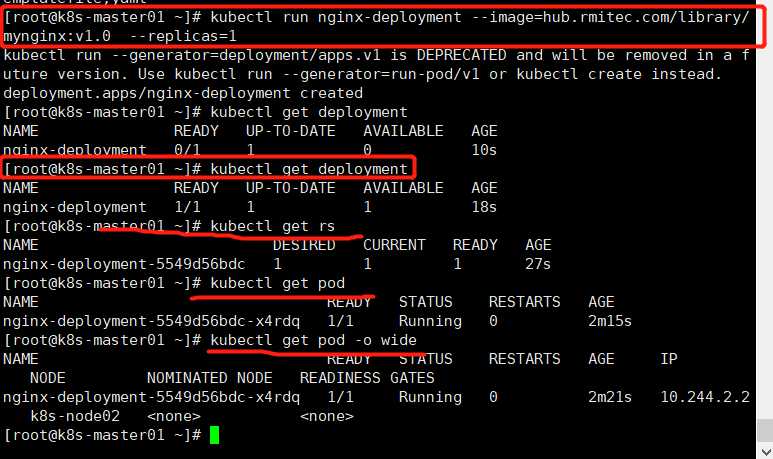

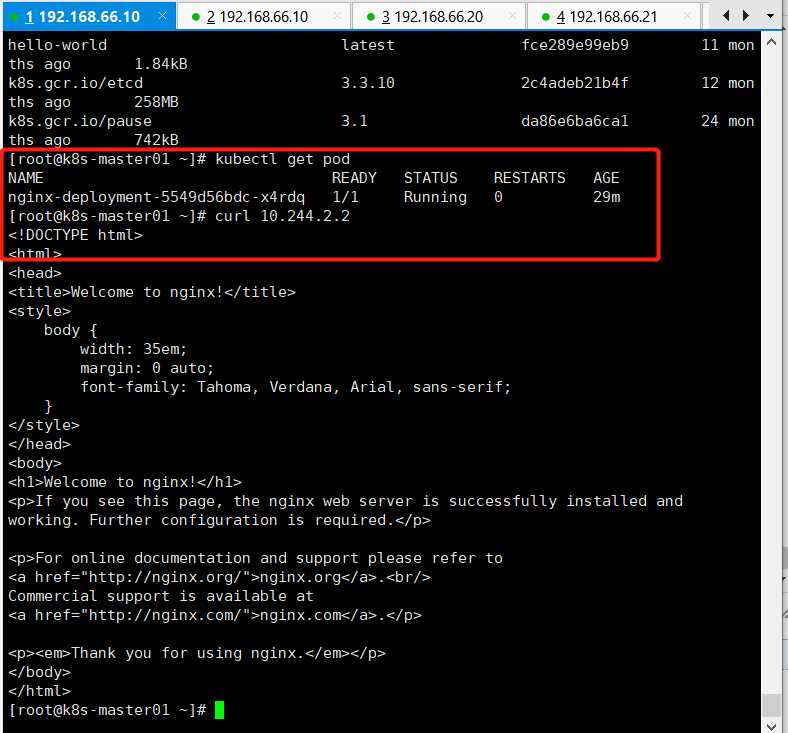

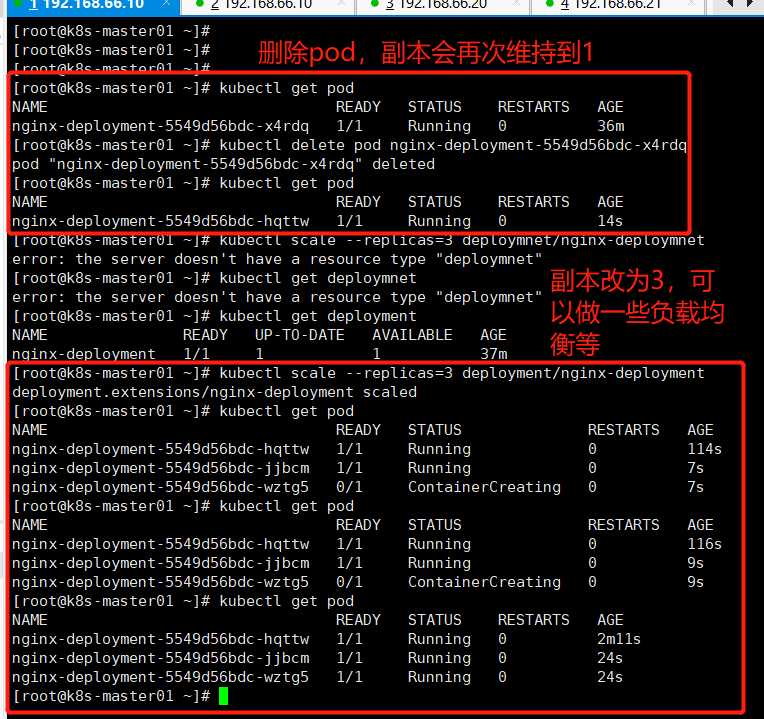

kubectl run nginx-deployment --image=hub.rmitec.com/library/mynginx:v1.0 --replicas=1 #run nginx from the private Harbor registry with 1 replica

kubectl get deployment

kubectl get rs

kubectl get pod

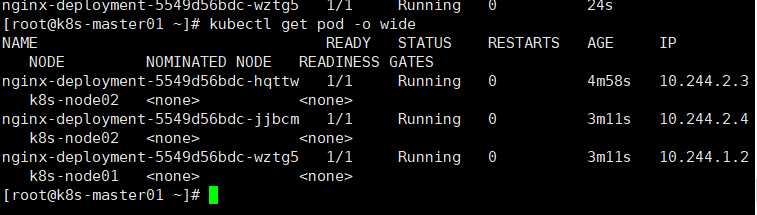

kubectl get pod -o wide

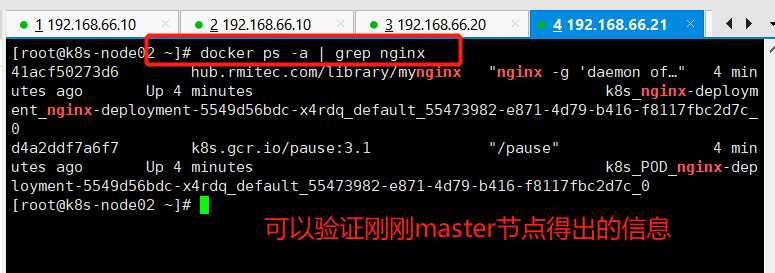

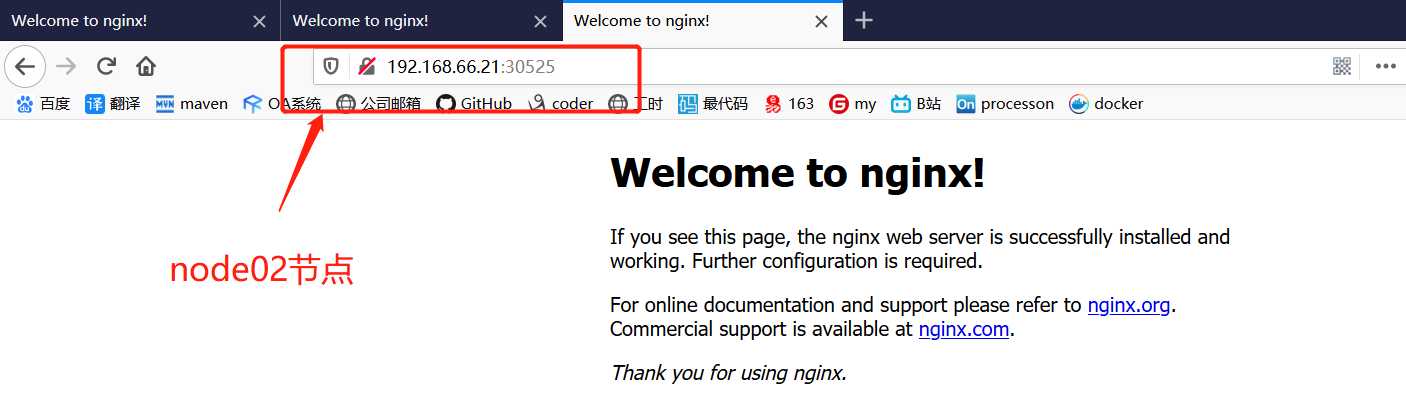

Note: the nginx pod is running on node02, so you can check it there.

Note: node02 is now running two nginx pods and node01 is running one.
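Three nginx pods means the deployment was scaled up, for example with `kubectl scale deployment nginx-deployment --replicas=3`. You can reproduce the per-node counts in the note without reading the wide output by eye. The sketch below assumes the node names come from `kubectl get pod -o custom-columns=NODE:.spec.nodeName --no-headers` and uses a hypothetical function to tally them.

```shell
# Hypothetical helper: read one node name per line on stdin and print
# "<node> <pod count>" for each node, sorted by node name.
pods_per_node() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# Example:
# kubectl get pod -o custom-columns=NODE:.spec.nodeName --no-headers | pods_per_node
```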

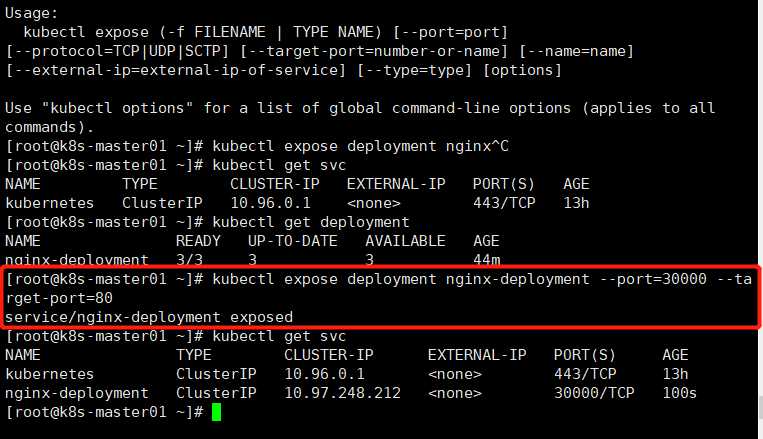

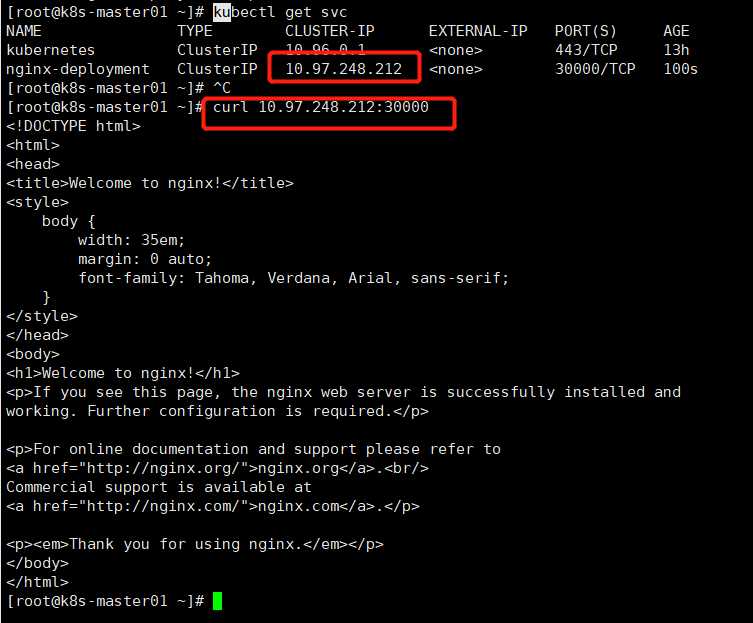

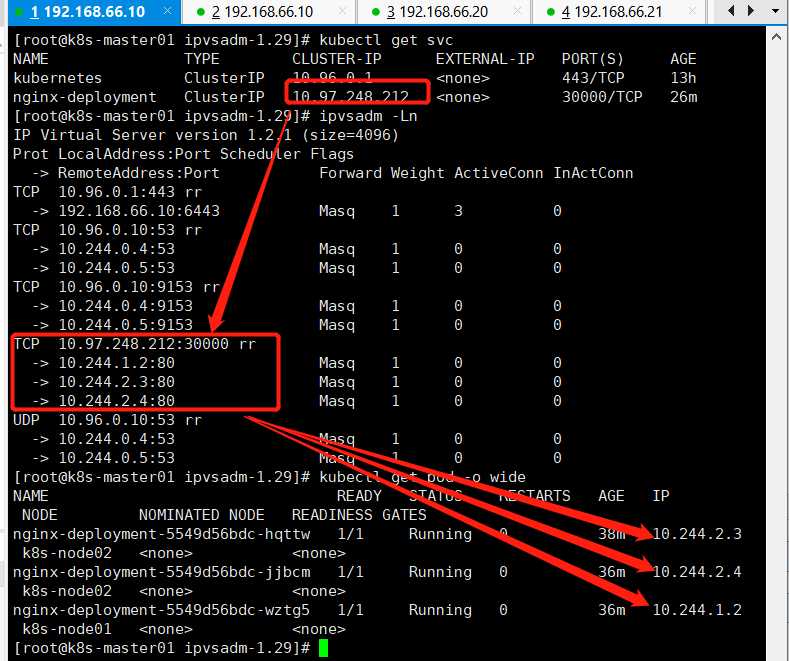

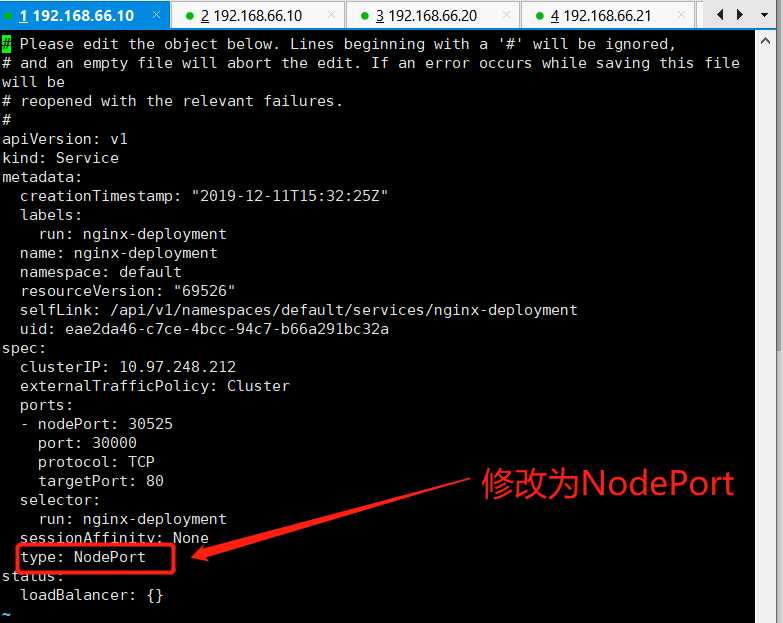

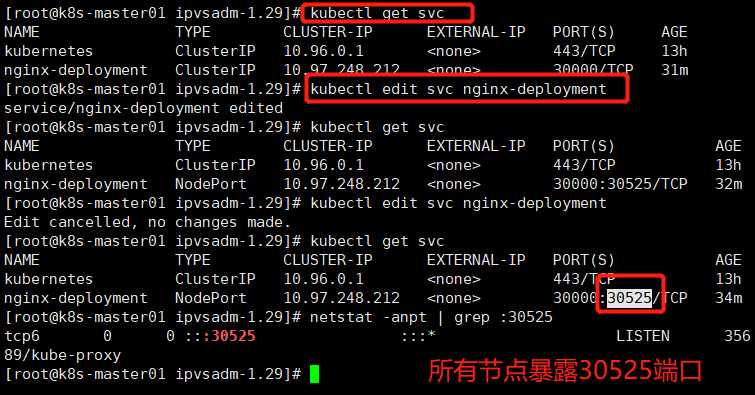

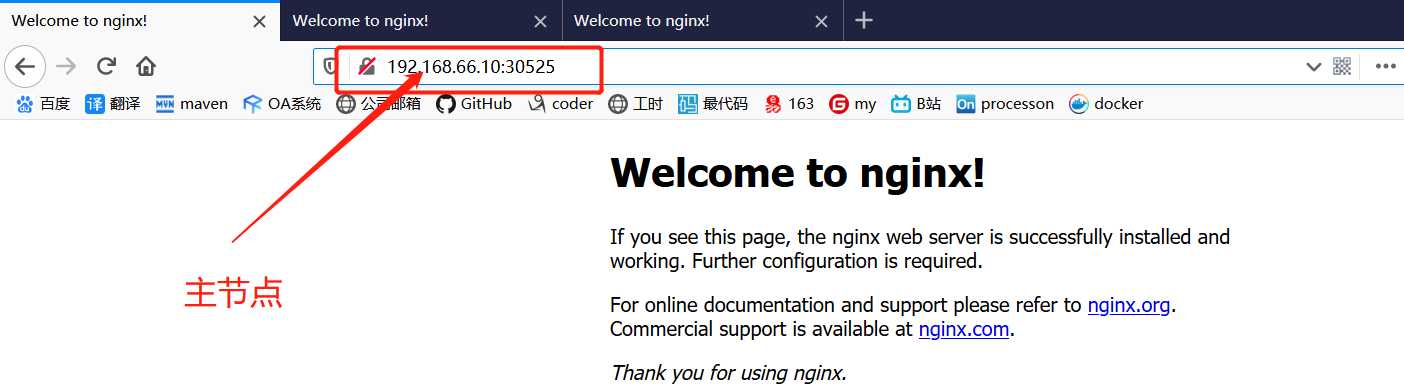

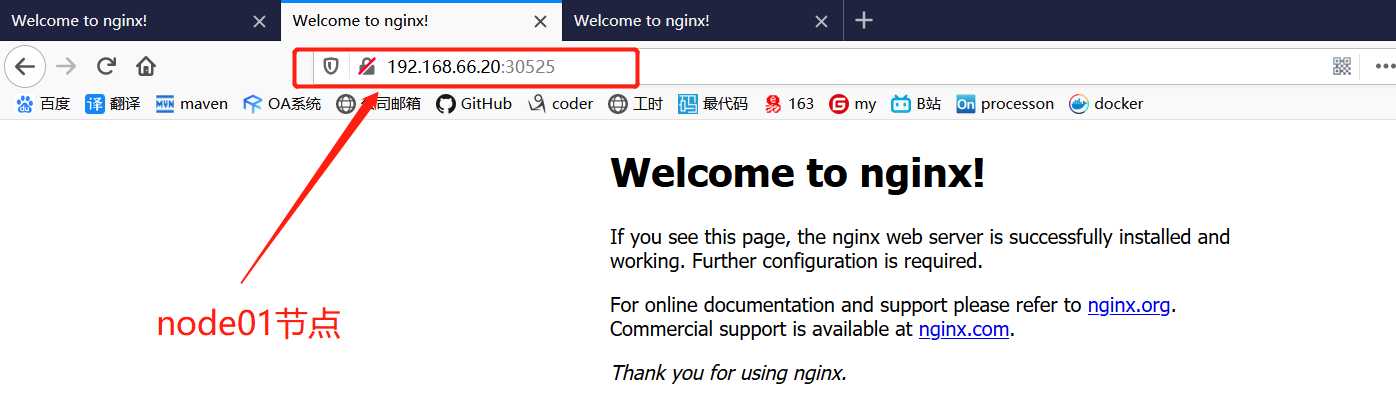

kubectl get svc

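kubectl expose deployment nginx-deployment --port=30000 --target-port=80 #create a Service for the deployment: service port 30000 forwards to container port 80

kubectl edit svc nginx-deployment #change spec.type from ClusterIP to NodePort so nginx can be reached from outside the cluster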
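Once the Service type is NodePort, the PORT(S) column of `kubectl get svc` shows the service port and the node port as `30000:<nodePort>/TCP`. nginx is then reachable on that node port at any node's IP. The small sketch below uses a hypothetical function that extracts the node port from that column (first port only).

Two kubectl alternatives are worth knowing:

- `kubectl patch svc nginx-deployment -p '{"spec":{"type":"NodePort"}}'` changes the type without opening an editor.
- `kubectl get svc nginx-deployment -o jsonpath='{.spec.ports[0].nodePort}'` reads the node port directly.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as
# "30000:31234/TCP". Fails for ClusterIP values like "30000/TCP".
node_port_from_ports() {
  case $1 in
    *:*) ;;
    *) return 1 ;;
  esac
  local p=${1#*:}      # "31234/TCP" (plus any further ports)
  echo "${p%%/*}"      # "31234"
}

# Example (PORT(S) is column 5 of "kubectl get svc"):
# port=$(node_port_from_ports "$(kubectl get svc nginx-deployment --no-headers | awk '{print $5}')")
# curl "http://192.168.66.20:$port"
```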

From a CentOS 7 image to a Kubernetes cluster (installed with kubeadm)


Original article: https://www.cnblogs.com/rmxd/p/12026660.html