
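    import json

    import pandas as pd
    import requests
    import xlwt
    from bs4 import BeautifulSoup


    # Fetch a page and return its text, or None if the request fails
    def catchhtml(url):
        kv = {
            'User-Agent': (
                'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
                '(KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'
            )
        }
        try:
            data = requests.get(url, headers=kv, timeout=10)
            # Check the status code; raises on 4xx/5xx responses
            data.raise_for_status()
            data.encoding = data.apparent_encoding
            # Return the page content
            return data.text
        except requests.RequestException:
            print(f'Failed to fetch {url}')
            return None


    # Collect album MIDs from the search results
    def getalbum(times, singer):
        all_albummid = []
        for num in range(1, times):
            # Target URL for page `num` of the album search (5 albums per page)
            url = f'https://c.y.qq.com/soso/fcgi-bin/client_search_cp?&remoteplace=txt.yqq.album&t=8&p={num}&n=5&w={singer}&format=json'
            html = catchhtml(url)
            if html is None:
                continue
            try:
                # Parse the JSON data and locate the album list
                js = json.loads(html)
                albumlist = js['data']['album']['list']
            except (ValueError, KeyError, TypeError):
                # Skip pages that are not valid JSON or lack the expected keys
                continue
            for song in albumlist:
                all_albummid.append(song['albumMID'])
        return all_albummid


    # Collect the song names inside each album
    def getsong(albumlist):
        songs = []
        for i in albumlist:
            html = catchhtml(f'https://y.qq.com/n/yqq/album/{i}.html')
            if html is None:
                continue
            # Parse the HTML page with bs4
            soup = BeautifulSoup(html, "html.parser")
            # Walk the <a> tag inside each span with the song-name class
            for span in soup.find_all("span", class_="songlist__songname_txt"):
                if span.a is not None:
                    # Append the stripped link text to the list
                    songs.append(span.a.get_text(strip=True))
        return songs


    # Save the data to a file
    def write_file(data, saveurl):
        # Create a Workbook, which is equivalent to creating an Excel file
        book = xlwt.Workbook(encoding='utf-8')
        # Create a sheet named Sheet1; cell_overwrite_ok allows overwriting cells
        sheet1 = book.add_sheet('Sheet1', cell_overwrite_ok=True)
        # Put the column header in the first cell of the first row
        sheet1.write(0, 0, 'Song name')
        # r is the row index; data starts on the second row
        for r, temp in enumerate(data, start=1):
            sheet1.write(r, 0, temp)
        # Save the file to the given saveurl
        book.save(saveurl)
        print(f'File written successfully to {saveurl}')


    # Read the file back
    def read_file(singer):
        try:
            data = pd.read_excel(f"D:/{singer}'s_songs.xls", names=['song_name'])
            print('File read successfully')
            # Return the data stored in the file
            return data
        except FileNotFoundError:
            print('The file does not exist or the file name is wrong')
            return None


    # Main function
    def main():
        # Page limit: pages 1 to times - 1 are requested
        times = 20
        # Singer name used as the search keyword (Eason Chan)
        singer = '陈奕迅'
        # List of album MIDs
        albumlist = getalbum(times, singer)
        # List of songs
        songs = getsong(albumlist)
        # Where the Excel file is stored
        saveurl = f"D:\\{singer}'s_songs.xls"
        # Write the file
        write_file(songs, saveurl)
        # Read the file
        read_file(singer)


    if __name__ == '__main__':
        main()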

The output of the run (screenshot) is shown below:

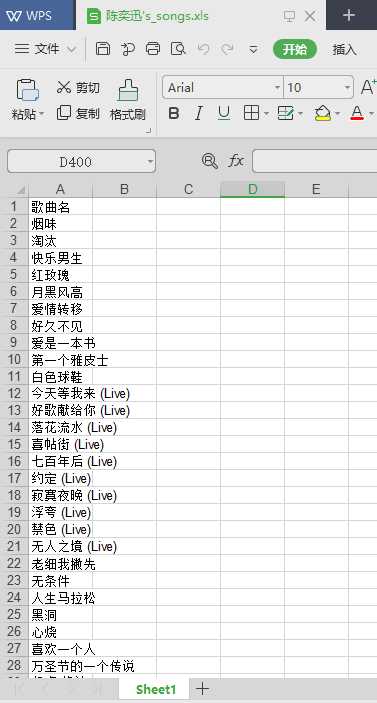

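The resulting Excel file lists the song names collected from the albums of Eason Chan (陈奕迅) in a single column, more than 700 rows in total. A partial screenshot is shown below: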

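    import requests


    # Fetch a page and return its text, or None if the request fails
    def catchhtml(url):
        kv = {
            'User-Agent': (
                'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
                '(KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'
            )
        }
        try:
            data = requests.get(url, headers=kv, timeout=10)
            # Check the status code; raises on 4xx/5xx responses
            data.raise_for_status()
            data.encoding = data.apparent_encoding
            # Return the page content
            return data.text
        except requests.RequestException:
            print(f'Failed to fetch {url}')
            return None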
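Since the fetch function makes only one attempt per URL, a transient network error loses that page. The following is a minimal sketch of a retry wrapper, not part of the original script: `fetch_with_retry`, `attempts`, and `delay` are hypothetical names, and the wrapper assumes the fetch function signals failure by returning `None`.

```python
import time


def fetch_with_retry(fetch, url, attempts=3, delay=1.0):
    """Call fetch(url) up to `attempts` times and return the first non-None result.

    `fetch` is assumed to return None on failure (an assumption of this sketch).
    Returns None if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < attempts:
            # Wait a little longer after each failed attempt.
            time.sleep(delay * attempt)
    return None


if __name__ == "__main__":
    # Stand-in fetcher (hypothetical) that fails twice and then succeeds.
    calls = []

    def flaky_fetch(url):
        calls.append(url)
        return "<html>ok</html>" if len(calls) >= 3 else None

    print(fetch_with_retry(flaky_fetch, "https://example.com", delay=0))
```

Passing the fetch function as an argument keeps the wrapper independent of `requests`, so it can be tried out with a stand-in function before it is pointed at a real site.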

2. Cleaning and processing the data

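    import json

    from bs4 import BeautifulSoup


    # Collect album MIDs from the search results
    def getalbum(times, singer):
        all_albummid = []
        for num in range(1, times):
            # Target URL for page `num` of the album search (5 albums per page)
            url = f'https://c.y.qq.com/soso/fcgi-bin/client_search_cp?&remoteplace=txt.yqq.album&t=8&p={num}&n=5&w={singer}&format=json'
            html = catchhtml(url)
            if html is None:
                continue
            try:
                # Parse the JSON data and locate the album list
                js = json.loads(html)
                albumlist = js['data']['album']['list']
            except (ValueError, KeyError, TypeError):
                # Skip pages that are not valid JSON or lack the expected keys
                continue
            for song in albumlist:
                all_albummid.append(song['albumMID'])
        return all_albummid


    # Collect the song names inside each album
    def getsong(albumlist):
        songs = []
        for i in albumlist:
            html = catchhtml(f'https://y.qq.com/n/yqq/album/{i}.html')
            if html is None:
                continue
            # Parse the HTML page with bs4
            soup = BeautifulSoup(html, "html.parser")
            # Walk the <a> tag inside each span with the song-name class
            for span in soup.find_all("span", class_="songlist__songname_txt"):
                if span.a is not None:
                    # Append the stripped link text to the list
                    songs.append(span.a.get_text(strip=True))
        return songs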
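The search response is indexed as `data` → `album` → `list`, and each entry carries an `albumMID`. The sketch below shows one way to walk that structure defensively and to drop repeated values while keeping their order. It is an illustration only: `extract_album_mids` and `dedupe_keep_order` are hypothetical helpers, and the sample payload is made up to match the key names used in the script rather than captured from the real API.

```python
import json


def extract_album_mids(raw_json):
    """Return the albumMID values from a search response, or [] if the shape is unexpected."""
    try:
        node = json.loads(raw_json)
    except (TypeError, ValueError):
        # Not JSON at all, for example an error page or a failed fetch.
        return []
    # Walk data -> album -> list, stopping as soon as a level is missing.
    for key in ("data", "album", "list"):
        if not isinstance(node, dict):
            return []
        node = node.get(key)
    if not isinstance(node, list):
        return []
    return [item["albumMID"] for item in node
            if isinstance(item, dict) and "albumMID" in item]


def dedupe_keep_order(items):
    """Remove duplicates while preserving first-seen order."""
    return list(dict.fromkeys(items))


if __name__ == "__main__":
    # Made-up payload that mirrors the keys used in the script.
    sample = '{"data": {"album": {"list": [{"albumMID": "a1"}, {"albumMID": "a2"}, {"albumMID": "a1"}]}}}'
    print(dedupe_keep_order(extract_album_mids(sample)))
```

The same `dedupe_keep_order` helper could be applied to the song list, since the same song name can appear on more than one album.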

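    import xlwt


    # Save the data to a file
    def write_file(data, saveurl):
        # Create a Workbook, which is equivalent to creating an Excel file
        book = xlwt.Workbook(encoding='utf-8')
        # Create a sheet named Sheet1; cell_overwrite_ok allows overwriting cells
        sheet1 = book.add_sheet('Sheet1', cell_overwrite_ok=True)
        # Put the column header in the first cell of the first row
        sheet1.write(0, 0, 'Song name')
        # r is the row index; data starts on the second row
        for r, temp in enumerate(data, start=1):
            sheet1.write(r, 0, temp)
        # Save the file to the given saveurl
        book.save(saveurl)
        print(f'File written successfully to {saveurl}')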
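xlwt writes only the legacy `.xls` format. As an alternative sketch (a swapped-in technique, not the author's method), the same one-column list can be saved as CSV using only the standard library. `write_csv` and `read_csv` are hypothetical helpers, and the `utf-8-sig` encoding is chosen on the assumption that the file will be opened in Excel, which relies on the byte order mark to recognize UTF-8.

```python
import csv


def write_csv(songs, path):
    """Write song names to a one-column CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["song_name"])
        writer.writerows([name] for name in songs)


def read_csv(path):
    """Read the song names back, skipping the header row and empty lines."""
    with open(path, newline="", encoding="utf-8-sig") as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header
        return [row[0] for row in reader if row]


if __name__ == "__main__":
    # Placeholder names and path, used only for illustration.
    write_csv(["song A", "song B"], "songs.csv")
    print(read_csv("songs.csv"))
```

Reading with the same `utf-8-sig` encoding strips the byte order mark again, so the header and the first value come back unchanged.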


Original article: https://www.cnblogs.com/liuzelin958/p/12046492.html