Tags: range, rating, lse, soup, jieba word segmentation, user, scheme, implementation, page

Title: Scraping Airbnb listing information (Quanzhou)

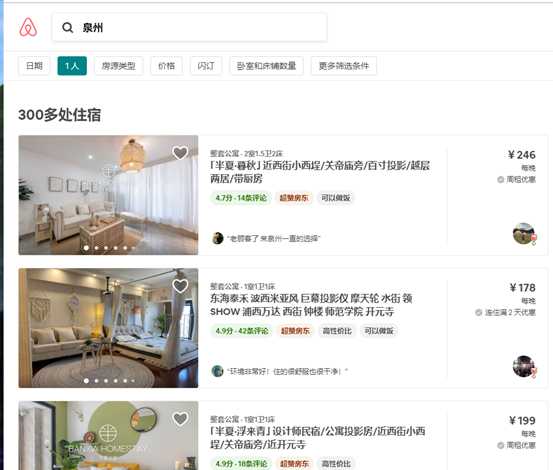

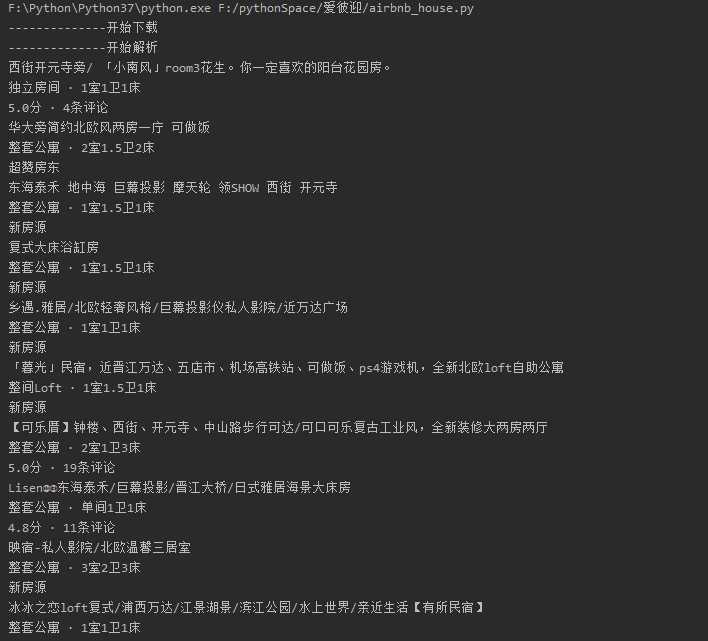

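Design: use the requests and BeautifulSoup libraries to fetch pages from the Airbnb website, collect and process the data, extract the Airbnb listing information, and then visualize and persist it.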

The main technical difficulty lies in analyzing the structure of the Airbnb pages and extracting data from them.
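To illustrate why page analysis is the hard part, here is a minimal sketch, assuming BeautifulSoup 4 with CSS selector support (`select`, `select_one`). It runs the same child-combinator selector path that `getHouseInfo` uses against a small hand-written HTML fragment. The fragment, the listing names and the helper `extract_cards` are hypothetical; the real Airbnb markup may look different. It also shows that renaming a single class anywhere in the chain makes the selector match nothing, which is the main fragility of this approach.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment that mimics the nesting the selector expects.
# The real Airbnb markup and its generated class names may differ.
SAMPLE_HTML = """
<div class="_1wbi47zw"><div class="_hgs47m"><div class="_10ejfg4u">
  <div class="_y5sok6"><div class="_qlq27g">
    <a class="_okj6x">
      <div class="_qrfr9x5">Listing A</div>
      <span class="_faldii7">Entire apartment</span>
      <span class="_faldii7">4.9</span>
    </a>
  </div></div>
  <div class="_y5sok6"><div class="_qlq27g">
    <a class="_okj6x">
      <div class="_qrfr9x5">Listing B</div>
      <span class="_faldii7">Private room</span>
    </a>
  </div></div>
</div></div></div>
"""

# Same selector path as in getHouseInfo: each ">" requires a direct child
CARD_SELECTOR = ("div._1wbi47zw > div._hgs47m > div._10ejfg4u > "
                 "div._y5sok6 > div._qlq27g > a._okj6x")


def extract_cards(html):
    """Return (name, tag, rating) tuples; rating is None if the second span is missing."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for card in soup.select(CARD_SELECTOR):
        name = card.select_one("div._qrfr9x5")
        tags = card.select("span._faldii7")
        if name is None or not tags:
            continue  # skip cards that do not have the expected layout
        rating = tags[1].get_text(strip=True) if len(tags) > 1 else None
        results.append((name.get_text(strip=True), tags[0].get_text(strip=True), rating))
    return results


print(extract_cards(SAMPLE_HTML))
# [('Listing A', 'Entire apartment', '4.9'), ('Listing B', 'Private room', None)]
print(extract_cards(SAMPLE_HTML.replace("_hgs47m", "_renamed")))  # []
```

Using `select_one` plus a length check avoids the `IndexError` that indexing `name[0]` or `tags[1]` would raise on a card that lacks those elements.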

III. Web Crawler Program Design (60 points)

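```python
import chardet
import requests


def getinfo(self, url):
    """Download the page at url and return its decoded HTML, or None on failure."""
    # Spoof a desktop browser User-Agent
    ua = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                        'AppleWebKit/537.36 (KHTML, like Gecko) '
                        'Chrome/79.0.3945.88 Safari/537.36'}
    try:
        # Fetch the page; the timeout keeps the crawler from hanging forever
        r = requests.get(url, headers=ua, timeout=10)
        # Raise requests.HTTPError for 4xx/5xx responses
        r.raise_for_status()
        # Detect the encoding from the raw bytes before decoding
        r.encoding = chardet.detect(r.content)["encoding"]
        return r.text
    except requests.RequestException as e:
        # Return None instead of an error string, so callers cannot parse it as HTML
        print('Download error:', e)
        return None
```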

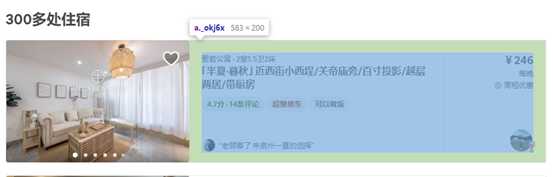

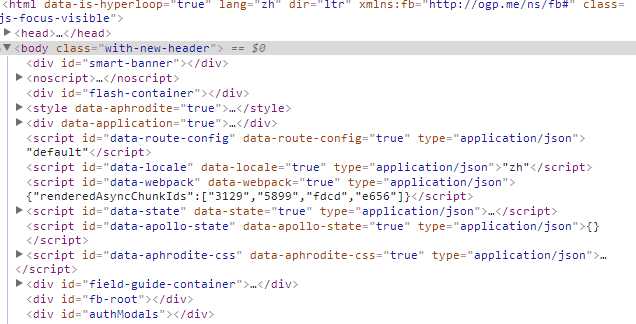

```python
from bs4 import BeautifulSoup


def getHouseInfo(self, html):
    """Parse the search-results page and return a list of listing dicts."""
    # Initialize BeautifulSoup with the built-in HTML parser
    soup = BeautifulSoup(html, "html.parser")
    # List that collects one dict per listing
    datas = []
    # Listing cards; these generated class names may change when the site is updated
    s = soup.select("div._1wbi47zw > div._hgs47m > div._10ejfg4u > "
                    "div._y5sok6 > div._qlq27g > a._okj6x")
    # Loop over the listing cards
    for i in s:
        # Listing name
        name = i.select("div._qrfr9x5")
        # Tag spans: the first is the listing tag, the second the rating
        tags = i.select("span._faldii7")
        # Skip cards without the expected elements instead of raising IndexError
        if not name or len(tags) < 2:
            continue
        data = {
            '房间名称': name[0].get_text(),  # room name
            '标签': tags[0].get_text(),      # tag
            '评价': tags[1].get_text(),      # rating
        }
        print(data)
        datas.append(data)
    return datas
```

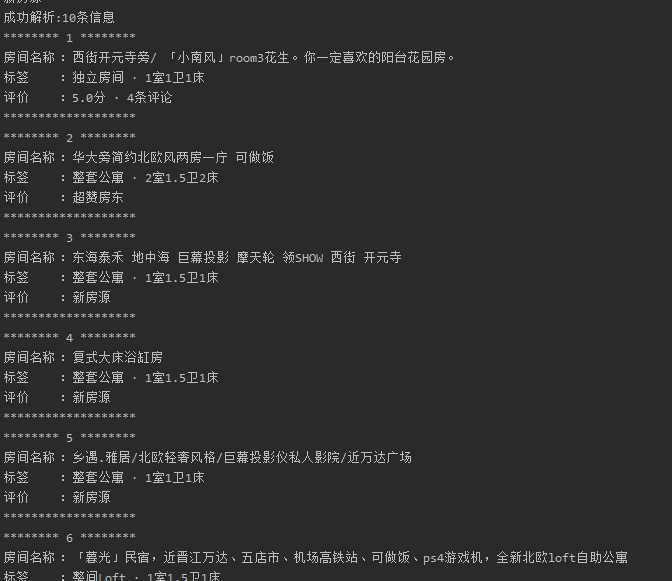

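```python
def grab(self):
    # Airbnb China search page for Quanzhou (泉州, percent-encoded as UTF-8)
    url = "https://www.airbnb.cn/s/%E6%B3%89%E5%B7%9E/homes"
    print('-------------- Downloading')
    html = self.getinfo(url)
    if html is None:
        print('Download failed, nothing to parse')
        return
    print('-------------- Parsing')
    houses = self.getHouseInfo(html)
    print("Parsed %s listings" % len(houses))
    # Print each listing, one field per line
    for i, value in enumerate(houses, start=1):
        print('******** %s ********' % i)
        for item in value.items():
            print("%s\t:%s" % item)
        print('*******************')
    # Persist the results to Excel
    self.write_data(houses)


if __name__ == '__main__':
    # Use a different name so the instance does not shadow the class
    spider = house()
    spider.grab()
```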
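The path segment `%E6%B3%89%E5%B7%9E` in `grab` is simply the city name 泉州 percent-encoded as UTF-8. A minimal sketch with a hypothetical `search_url` helper shows how the same URL could be built for any city name, assuming the site still uses the `/s/<city>/homes` pattern:

```python
from urllib.parse import quote, unquote

# URL pattern taken from grab(); assumed, not guaranteed, to still be valid
BASE = "https://www.airbnb.cn/s/{}/homes"


def search_url(city):
    """Build a search URL by percent-encoding the city name as UTF-8."""
    return BASE.format(quote(city))


print(search_url("泉州"))  # https://www.airbnb.cn/s/%E6%B3%89%E5%B7%9E/homes
print(unquote("%E6%B3%89%E5%B7%9E"))  # 泉州
```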

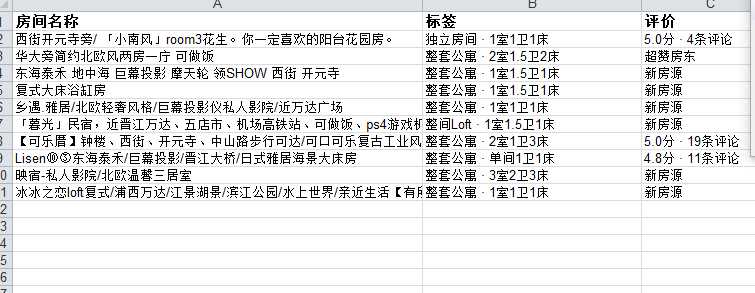

```python
import xlwt


def set_style(self, name, height, bold=False):
    """Build a cell style: font name, height in 1/20 pt, optional bold, blue text."""
    style = xlwt.XFStyle()
    font = xlwt.Font()
    font.name = name
    font.bold = bold
    # xlwt spells this attribute colour_index; index 4 is blue in the default palette
    font.colour_index = 4
    font.height = height
    style.font = font
    return style


def write_data(self, datas):
    """Persist the listing dicts to house.xls: a header row, then one row per listing."""
    # Also covers an empty list, which would make datas[0] fail
    if not datas:
        return None
    try:
        # Header row from the keys of the first record, bold Times New Roman 11 pt
        header_style = self.set_style('Times New Roman', 220, True)
        for col, title in enumerate(datas[0].keys()):
            self.sheet1.write(0, col, title, header_style)
        # Data rows start at row 1
        for row, record in enumerate(datas, start=1):
            for col, value in enumerate(record.values()):
                self.sheet1.write(row, col, value)
        # Column widths for name, tag and rating
        self.sheet1.col(0).width = 15000
        self.sheet1.col(1).width = 8000
        self.sheet1.col(2).width = 5000
        self.f.save('house.xls')
    except Exception as e:
        print('Persistence failed, please start again:', e)
```
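The numbers in `set_style` and `write_data` are in xlwt's native units: font height is in twips (1/20 of a point), so 220 is an 11 pt font, and column width is in 1/256 of the width of the `0` character. A small sketch with two hypothetical helpers (they are not part of xlwt) makes the conversion explicit:

```python
def points_to_twips(points):
    """Convert a font size in points to xlwt's font.height unit (1/20 pt)."""
    return int(round(points * 20))


def width_to_chars(width):
    """Convert an xlwt column width (1/256 of a '0' character) to characters."""
    return width / 256


print(points_to_twips(11))    # 220
print(width_to_chars(15000))  # 58.59375
print(width_to_chars(8000))   # 31.25
```

Writing `self.sheet1.col(0).width = 256 * 58` instead of a bare 15000 would make the intent (about 58 characters wide) easier to read.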


```python
# -*- coding: utf-8 -*-
import chardet
import requests
import xlwt
from bs4 import BeautifulSoup


class house(object):

    def __init__(self):
        """Create the workbook and add a worksheet named sheet1."""
        self.f = xlwt.Workbook()
        # cell_overwrite_ok=True lets a cell be written more than once
        self.sheet1 = self.f.add_sheet('sheet1', cell_overwrite_ok=True)

    def set_style(self, name, height, bold=False):
        """Build a cell style: font name, height in 1/20 pt, optional bold, blue text."""
        style = xlwt.XFStyle()
        font = xlwt.Font()
        font.name = name
        font.bold = bold
        font.colour_index = 4  # blue in the default palette
        font.height = height
        style.font = font
        return style

    def write_data(self, datas):
        """Persist the listing dicts to house.xls."""
        if not datas:
            return None
        try:
            # Header row from the keys of the first record
            header_style = self.set_style('Times New Roman', 220, True)
            for col, title in enumerate(datas[0].keys()):
                self.sheet1.write(0, col, title, header_style)
            # One row per listing, starting at row 1
            for row, record in enumerate(datas, start=1):
                for col, value in enumerate(record.values()):
                    self.sheet1.write(row, col, value)
            self.sheet1.col(0).width = 15000
            self.sheet1.col(1).width = 8000
            self.sheet1.col(2).width = 5000
            self.f.save('house.xls')
        except Exception as e:
            print('Persistence failed, please start again:', e)

    def getinfo(self, url):
        """Download url and return the decoded HTML, or None on failure."""
        # Spoof a desktop browser User-Agent
        ua = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                            'AppleWebKit/537.36 (KHTML, like Gecko) '
                            'Chrome/79.0.3945.88 Safari/537.36'}
        try:
            r = requests.get(url, headers=ua, timeout=10)
            r.raise_for_status()
            r.encoding = chardet.detect(r.content)["encoding"]
            return r.text
        except requests.RequestException as e:
            print('Download error:', e)
            return None

    def getHouseInfo(self, html):
        """Parse the search-results page into a list of listing dicts."""
        soup = BeautifulSoup(html, "html.parser")
        datas = []
        # Listing cards; these generated class names may change when the site is updated
        s = soup.select("div._1wbi47zw > div._hgs47m > div._10ejfg4u > "
                        "div._y5sok6 > div._qlq27g > a._okj6x")
        for i in s:
            name = i.select("div._qrfr9x5")
            tags = i.select("span._faldii7")
            # Skip cards without the expected elements
            if not name or len(tags) < 2:
                continue
            data = {
                '房间名称': name[0].get_text(),  # room name
                '标签': tags[0].get_text(),      # tag
                '评价': tags[1].get_text(),      # rating
            }
            print(data)
            datas.append(data)
        return datas

    def grab(self):
        # Airbnb China search page for Quanzhou (泉州, percent-encoded as UTF-8)
        url = "https://www.airbnb.cn/s/%E6%B3%89%E5%B7%9E/homes"
        print('-------------- Downloading')
        html = self.getinfo(url)
        if html is None:
            print('Download failed, nothing to parse')
            return
        print('-------------- Parsing')
        houses = self.getHouseInfo(html)
        print("Parsed %s listings" % len(houses))
        for i, value in enumerate(houses, start=1):
            print('******** %s ********' % i)
            for item in value.items():
                print("%s\t:%s" % item)
            print('*******************')
        # Persist the results to Excel
        self.write_data(houses)


if __name__ == '__main__':
    spider = house()
    spider.grab()
```

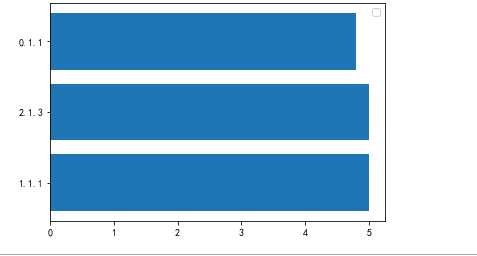

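Analyzing and visualizing the collected data gives a clear picture of the listings in Quanzhou, such as their location, size and decoration style, and shows which listings are more densely clustered and which are larger.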
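As a first step toward that kind of analysis, here is a minimal sketch that counts how often each tag occurs in the list of dicts returned by `getHouseInfo`. It uses `collections.Counter` from the standard library; the helper `count_tags` and the sample records are hypothetical and only mirror the shape of the scraped data (keys `房间名称` room name, `标签` tag, `评价` rating).

```python
from collections import Counter


def count_tags(datas):
    """Count how often each '标签' (tag) value occurs among the scraped listings."""
    return Counter(d['标签'] for d in datas if d.get('标签'))


# Hypothetical records in the same shape as getHouseInfo's output
sample = [
    {'房间名称': 'A', '标签': 'Entire apartment', '评价': '4.8'},
    {'房间名称': 'B', '标签': 'Private room', '评价': '4.9'},
    {'房间名称': 'C', '标签': 'Entire apartment', '评价': '5.0'},
]

print(count_tags(sample).most_common())
# [('Entire apartment', 2), ('Private room', 1)]
```

The resulting counts could then be fed to any plotting library to produce a bar chart of listing types.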

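Completing this programming assignment gave me a basic understanding of web scraping in Python, but I still have a lot to learn: during the scraping work I was not yet proficient at data cleaning and visualization, and I need to keep working on them.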


Original article: https://www.cnblogs.com/wuxium/p/12075483.html