2.3 OpenFlow and Network OSes

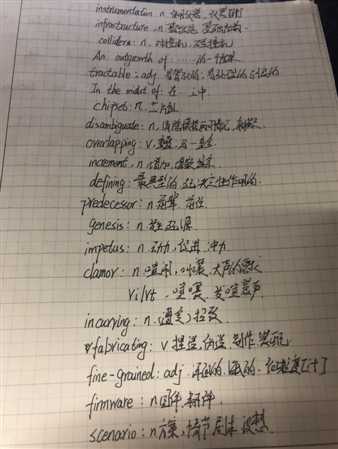

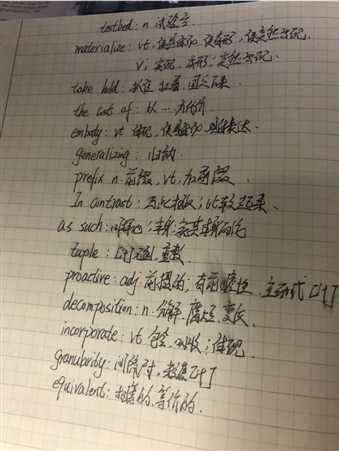

In the mid-2000s, researchers and funding agencies became interested in the idea of network experimentation at scale, encouraged by the success of experimental infrastructures (e.g., PlanetLab [6] and Emulab [83]) and by the availability of separate government funding for large-scale “instrumentation” previously reserved for other disciplines to build expensive, shared infrastructure such as colliders and telescopes [52]. An outgrowth of this enthusiasm was the creation of the Global Environment for Networking Innovations (GENI) [33] with an NSF-funded GENI Project Office and the EU FIRE program [32]. Critics of these infrastructure-focused efforts pointed out that this large investment in infrastructure was not matched by well-conceived ideas to use it. In the midst of this, a group of researchers at Stanford created the Clean Slate Program and focused on experimentation at a more local and tractable scale: campus networks.

Before the emergence of OpenFlow, the ideas underlying SDN faced a tension between the vision of fully programmable networks and the pragmatism that would enable real-world deployment. OpenFlow struck a balance between these two goals by enabling more functions than earlier route controllers while building on existing switch hardware, helped by the increasing use of merchant-silicon chipsets in commodity switches. Although relying on existing switch hardware did somewhat limit flexibility, OpenFlow was almost immediately deployable, allowing the SDN movement to be both pragmatic and bold. The creation of the OpenFlow API [51] was followed quickly by the design of controller platforms like NOX [37] that enabled the creation of many new control applications.

An OpenFlow switch has a table of packet-handling rules, where each rule has a pattern (that matches on bits in the packet header), a list of actions (e.g., drop, flood, forward out a particular interface, modify a header field, or send the packet to the controller), a set of counters (to track the number of bytes and packets), and a priority (to disambiguate between rules with overlapping patterns). Upon receiving a packet, an OpenFlow switch identifies the highest-priority matching rule, performs the associated actions, and increments the counters.
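
The rule table described above can be sketched in a few lines of Python. This is a minimal illustration, not the OpenFlow wire format or any real switch implementation: the names `Rule`, `FlowTable`, and `process`, the header field names, and the action strings are all hypothetical. The sketch also assumes that a packet matching no rule is sent to the controller, which is only one possible table-miss policy.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    pattern: dict          # header field -> required value; omitted fields are wildcards
    actions: list          # e.g. ["drop"] or ["forward:1"] (illustrative strings)
    priority: int = 0      # breaks ties between overlapping patterns
    packet_count: int = 0  # counters updated on every match
    byte_count: int = 0

    def matches(self, headers):
        # A rule matches when every field it names has the required value.
        return all(headers.get(k) == v for k, v in self.pattern.items())


class FlowTable:
    def __init__(self):
        self.rules = []

    def add(self, rule):
        self.rules.append(rule)

    def process(self, headers, size):
        """Return the actions of the highest-priority matching rule."""
        candidates = [r for r in self.rules if r.matches(headers)]
        if not candidates:
            # Assumption: a table miss punts the packet to the controller.
            return ["controller"]
        best = max(candidates, key=lambda r: r.priority)
        best.packet_count += 1
        best.byte_count += size
        return best.actions
```

With one rule forwarding all traffic to `10.0.0.1` at priority 10 and a second rule dropping SSH traffic to the same host at priority 20, an SSH packet matches both patterns and the priority field decides that it is dropped.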

Technology push and use pull. Perhaps the defining feature of OpenFlow is its adoption in industry, especially as compared with its intellectual predecessors. This success can be attributed to a perfect storm of conditions between equipment vendors, chipset designers, network operators, and networking researchers. Before OpenFlow’s genesis, switch chipset vendors like Broadcom had already begun to open their APIs to allow programmers to control certain forwarding behaviors. The decision to open the chipset provided the necessary impetus to an industry that was already clamoring for more control over network devices. The availability of these chipsets also enabled a much wider range of companies to build switches, without incurring the substantial cost of designing and fabricating their own data-plane hardware.

The initial OpenFlow protocol standardized a data-plane model and a control-plane API by building on technology that switches already supported. Specifically, because network switches already supported fine-grained access control and flow monitoring, enabling OpenFlow’s initial set of capabilities on a switch was as easy as performing a firmware upgrade; vendors did not need to upgrade the hardware to make their switches OpenFlow-capable.

OpenFlow’s initial target deployment scenario was campus networks, meeting the needs of a networking research community actively looking for ways to conduct experimental work on “clean-slate” network architectures within a research-friendly operational setting. In the late 2000s, the OpenFlow group at Stanford led an effort to deploy OpenFlow testbeds across many campuses and demonstrate the capabilities of the protocol both on a single campus network and over a wide-area backbone network spanning multiple campuses.

As real SDN use cases materialized on these campuses, OpenFlow began to take hold in other realms, such as data-center networks, where there was a distinct need to manage network traffic at large scales. In data centers, hiring engineers to write sophisticated control programs to run over large numbers of commodity switches proved to be more cost-effective than continuing to purchase closed, proprietary switches that could not support new features without substantial engagement with the equipment vendors. As vendors began to compete to sell both servers and switches for data centers, many smaller players in the network equipment marketplace embraced the opportunity to compete with the established router and switch vendors by supporting new capabilities like OpenFlow.

Intellectual contributions. Although OpenFlow embodied many of the principles from earlier work on the separation of control and data planes, the rise of OpenFlow offered several additional intellectual contributions:

Generalizing network devices and functions. Previous work on route control focused primarily on matching traffic by destination IP prefix. In contrast, OpenFlow rules could define forwarding behavior on traffic flows based on any set of 13 different packet header fields. As such, OpenFlow conceptually unified many different types of network devices that differ only in terms of which header fields they match and which actions they perform. A router matches on destination IP prefix and forwards out a link, whereas a switch matches on source MAC address (to perform MAC learning) and destination MAC address (to forward), and either floods or forwards out a single link. Network address translators and firewalls match on the five-tuple (source and destination IP addresses, source and destination port numbers, and the transport protocol) and either rewrite address and port fields or drop unwanted traffic. OpenFlow also generalized the rule-installation techniques, allowing anything from proactive installation of coarse-grained rules (i.e., with “wildcards” for many header fields) to reactive installation of fine-grained rules, depending on the application. Still, OpenFlow does not offer data-plane support for deep packet inspection or connection reassembly; as such, OpenFlow alone cannot efficiently enable sophisticated middlebox functionality.
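
As a rough illustration of this unification, the sketch below expresses a router, a learning switch, and a firewall as tables in one match-action format, differing only in which fields they match and which actions they list. Everything here is an assumption made for the example: the field names (`eth_dst`, `ip_dst`, `tp_dst`, and so on), the action strings, the addresses, and the choice to emulate longest-prefix matching by setting a rule's priority to its prefix length.

```python
import ipaddress

IP_FIELDS = ("ip_src", "ip_dst")  # these fields are matched as prefixes


def matches(pattern, pkt):
    """True if every field named in the pattern agrees with the packet."""
    for name, want in pattern.items():
        have = pkt.get(name)
        if have is None:
            return False
        if name in IP_FIELDS:
            if ipaddress.ip_address(have) not in ipaddress.ip_network(want):
                return False
        elif have != want:
            return False
    return True


def lookup(rules, pkt):
    """Return the actions of the highest-priority matching rule, or None."""
    hits = [r for r in rules if matches(r["match"], pkt)]
    return max(hits, key=lambda r: r["priority"])["actions"] if hits else None


# Router: destination IP prefix -> output link (priority = prefix length).
router = [
    {"match": {"ip_dst": "10.1.0.0/16"}, "priority": 16, "actions": ["output:1"]},
    {"match": {"ip_dst": "10.1.2.0/24"}, "priority": 24, "actions": ["output:2"]},
]

# Switch: known destination MAC -> one link, otherwise flood.
switch = [
    {"match": {"eth_dst": "aa:bb:cc:00:00:01"}, "priority": 1, "actions": ["output:3"]},
    {"match": {}, "priority": 0, "actions": ["flood"]},
]

# Firewall: drop one five-tuple, forward everything else.
firewall = [
    {"match": {"ip_src": "192.0.2.7/32", "ip_dst": "10.1.2.5/32",
               "ip_proto": 6, "tp_src": 40000, "tp_dst": 23},
     "priority": 10, "actions": ["drop"]},
    {"match": {}, "priority": 0, "actions": ["output:1"]},
]
```

The same `lookup` function serves all three tables, which is the point of the generalization: the device type lives in the rules rather than in the forwarding engine.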

The vision of a network operating system. In contrast to earlier research on active networks that proposed a node operating system, the work on OpenFlow led to the notion of a network operating system [37]. A network operating system is software that abstracts the installation of state in network switches from the logic and applications that control the behavior of the network. More generally, the emergence of a network operating system offered a conceptual decomposition of network operation into three layers [46]: (1) a data plane with an open interface; (2) a state management layer that is responsible for maintaining a consistent view of network state; and (3) control logic that performs various operations depending on its view of network state.
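
The three-layer decomposition can be made concrete with a small sketch. This is not the API of NOX or any other controller platform. The class and function names are hypothetical, and the model is deliberately simplified: destinations are switch names, and the control logic is a breadth-first search that installs next hops toward one destination.

```python
from collections import deque


class Switch:
    """Layer 1: a data plane exposing an open interface for installing state."""

    def __init__(self, name):
        self.name = name
        self.rules = {}  # destination name -> next-hop name

    def install(self, dst, next_hop):
        self.rules[dst] = next_hop


class NetworkView:
    """Layer 2: state management, holding the view of switches and links."""

    def __init__(self):
        self.switches = {}
        self.links = {}  # switch name -> set of neighbour names

    def add_switch(self, name):
        self.switches[name] = Switch(name)
        self.links.setdefault(name, set())

    def add_link(self, a, b):
        self.links[a].add(b)
        self.links[b].add(a)


def shortest_path_app(view, dst):
    """Layer 3: control logic that reads the view and installs rules.

    Breadth-first search outward from dst; each newly reached switch
    gets a rule pointing at the neighbour it was reached from.
    """
    visited = {dst}
    queue = deque([dst])
    while queue:
        node = queue.popleft()
        for nbr in sorted(view.links[node]):
            if nbr not in visited:
                visited.add(nbr)
                view.switches[nbr].install(dst, node)
                queue.append(nbr)
```

The control logic never talks to hardware directly. It sees only the `NetworkView`, so the same application could run unchanged over a different data plane that offers the same `install` interface.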

Distributed state management techniques. Separating the control and data planes introduces new challenges concerning state management. Running multiple controllers is crucial for scalability, reliability, and performance, yet these replicas should work together to act like a single, logically centralized controller. Previous work on distributed route controllers [12, 79] only addressed these problems in the narrow context of route computation. To support arbitrary controller applications, the work on the Onix [46] controller introduced the idea of a network information base—a representation of the network topology and other control state shared by all controller replicas. Onix also incorporated past work in distributed systems to satisfy the state consistency and durability requirements. For example, Onix has a transactional persistent database backed by a replicated state machine for slowly-changing network state, as well as an in-memory distributed hash table for rapidly-changing state with weaker consistency requirements. More recently, the ONOS [55] system offers an open-source controller with similar functionality, using existing open-source software for maintaining consistency across distributed state and providing a network topology database to controller applications.
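
The split between the two kinds of state can be illustrated with a toy model. This is not Onix's API, and it implements neither a replicated state machine nor a distributed hash table. It only shows the difference in visibility between state that every replica sees as soon as a write returns and state that reaches other replicas later. All names (`Replica`, `Nib`, `put_durable`, `put_volatile`, `sync`) are hypothetical.

```python
class Replica:
    """One controller replica's local copy of the network information base."""

    def __init__(self):
        self.durable = {}   # slowly changing state, e.g. topology
        self.volatile = {}  # rapidly changing state, e.g. link load


class Nib:
    """Toy network information base shared by several controller replicas."""

    def __init__(self, n_replicas):
        self.replicas = [Replica() for _ in range(n_replicas)]
        self.log = []      # ordered record of durable updates
        self.pending = []  # volatile updates not yet propagated

    def put_durable(self, key, value):
        # Stand-in for the strongly consistent path: the update is logged
        # in order and applied to every replica before the call returns.
        self.log.append((key, value))
        for replica in self.replicas:
            replica.durable[key] = value

    def put_volatile(self, origin, key, value):
        # Stand-in for the weakly consistent path: only the originating
        # replica sees the update until sync() runs.
        self.replicas[origin].volatile[key] = value
        self.pending.append((key, value))

    def sync(self):
        # Propagate outstanding volatile updates to all replicas, in order.
        for key, value in self.pending:
            for replica in self.replicas:
                replica.volatile[key] = value
        self.pending.clear()
```

In this model a topology change written with `put_durable` is immediately visible everywhere, while a link-load sample written with `put_volatile` may be stale on other replicas until the next `sync`, which is the trade-off the two stores are meant to capture.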

Myths and misconceptions. One myth concerning SDN is that the first packet of every traffic flow must go to the controller for handling. Indeed, some early systems like Ethane [16] worked this way, since they were designed to support fine-grained policies in small networks. In fact, SDN in general, and OpenFlow in particular, do not impose any assumptions about the granularity of rules or whether the controller handles any data traffic. Some SDN applications respond only to topology changes and coarse-grained traffic statistics and update rules infrequently in response to link failures or network congestion. Other applications may send the first packet of some larger traffic aggregate to the controller, but not a packet from every TCP or UDP connection.
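
The difference between the two styles shows up in how often the controller is consulted. The sketch below is a hypothetical model, not any real controller's API. It compares a reactive controller that installs one exact-match rule per flow on the first packet with a proactive controller that installs a single coarse-grained rule in advance. Class names, field names, and action strings are assumptions made for the example.

```python
class Switch:
    def __init__(self, controller):
        self.rules = []  # list of (pattern dict, action) pairs
        self.controller = controller

    def receive(self, pkt):
        for pattern, action in self.rules:
            if all(pkt.get(k) == v for k, v in pattern.items()):
                return action
        # No rule matched: hand the packet to the controller.
        return self.controller.packet_in(self, pkt)


class ReactiveController:
    """Installs a fine-grained rule when the first packet of a flow arrives."""

    def __init__(self):
        self.packet_ins = 0

    def packet_in(self, switch, pkt):
        self.packet_ins += 1
        switch.rules.append((dict(pkt), "output:1"))  # exact match on all fields
        return "output:1"


class ProactiveController:
    """Installs a coarse-grained rule up front; other fields are wildcards."""

    def __init__(self):
        self.packet_ins = 0

    def setup(self, switch):
        switch.rules.append(({"ip_dst": "10.0.0.1"}, "output:1"))

    def packet_in(self, switch, pkt):
        self.packet_ins += 1
        return "drop"
```

For three TCP connections to the same destination, the reactive controller handles three packets, one per connection, while the proactive controller handles none, because the wildcard rule already covers the whole aggregate.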

A second myth surrounding SDN is that the controller must be physically centralized. In fact, Onix [46] and ONOS [55] demonstrate that SDN controllers can, and should, be distributed. Wide-area deployments of SDN, as in Google’s private backbone [44], have many controllers spread throughout the network.

Finally, a commonly held misconception is that SDN and OpenFlow are equivalent; in fact, OpenFlow is merely one (widely popular) instantiation of SDN principles. Different APIs could be used to control network-wide forwarding behavior. For example, previous work that focused on routing (using BGP as an API) could be considered one instantiation of SDN, and architectures from various vendors (e.g., Cisco ONE and JunOS SDK) represent other instantiations of SDN that differ from OpenFlow.

In search of control programs and use cases. Despite the initial excitement surrounding SDN, it is worth recognizing that SDN is merely a tool that enables innovation in network control. SDN neither dictates how that control should be designed nor solves any particular problem. Rather, researchers and network operators now have a platform at their disposal to help address longstanding problems in managing their networks and deploying new services. Ultimately, the success and adoption of SDN depend on whether it can be used to solve pressing problems in networking that were difficult or impossible to solve with earlier protocols. SDN has already proved useful for solving problems related to network virtualization, as we describe in the next section.

The Road to SDN: An Intellectual History of Programmable Networks (Part 4)

Original source: https://www.cnblogs.com/fcw245838813/p/12178369.html