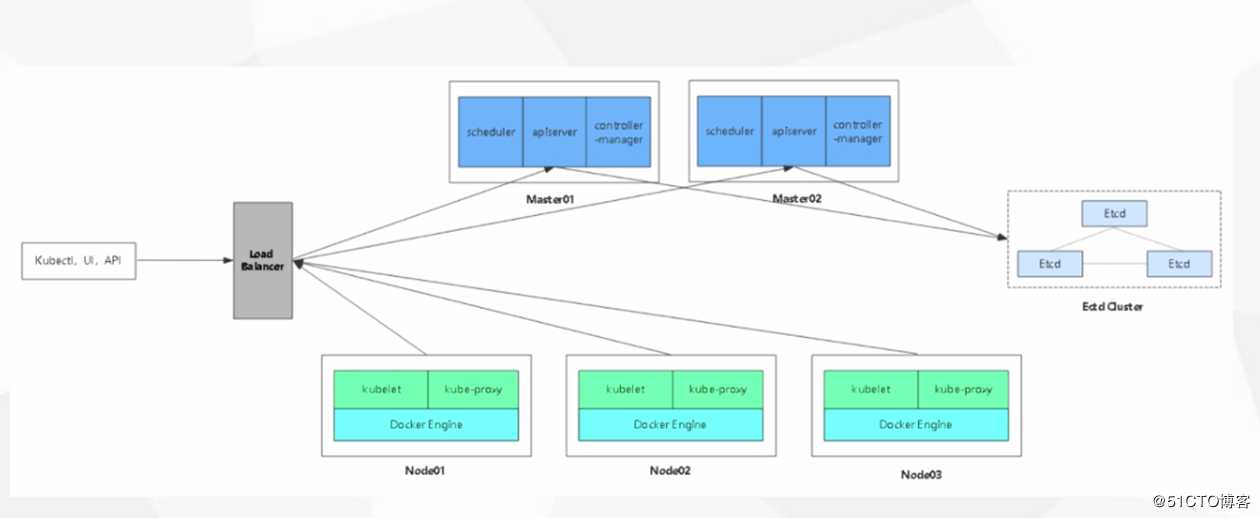

Overall k8s environment plan

Environment preparation

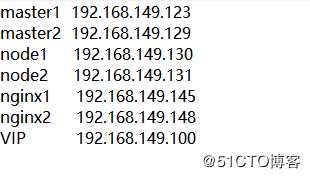

Two master nodes, two worker nodes, and two machines running nginx for load balancing and failover, plus a floating address (VIP)

Deployment procedure

Disable the firewall and SELinux enforcement

systemctl stop firewalld.service

setenforce 0

Copy the kubernetes directory to master2 (the k8s directory from the previous lab)

scp -r /opt/kubernetes/ root@192.168.149.129:/opt

Copy the etcd directory to master2

scp -r /opt/etcd/ root@192.168.149.129:/opt

Copy the systemd service unit files

scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.149.129:/usr/lib/systemd/system/

On master2, edit the configuration file and change the IP addresses to the local machine's address

vim /opt/kubernetes/cfg/kube-apiserver

--bind-address=192.168.149.129

--advertise-address=192.168.149.129
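The same edit can be scripted instead of made by hand in vim. The following is a minimal sketch, assuming GNU sed and that the copied file still carries master1's address in both flags. `set_apiserver_ip` is a hypothetical helper name, 192.168.149.120 is only a stand-in for master1's address, and the demo runs against a scratch file rather than the real /opt/kubernetes/cfg/kube-apiserver.

```shell
#!/usr/bin/env bash
# Rewrite the --bind-address and --advertise-address values in a
# kube-apiserver option file. Hypothetical helper, not part of Kubernetes.
set_apiserver_ip() {
    local file="$1" ip="$2"
    sed -i -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${ip}/g" "$file"
}

# Demo on a scratch file; 192.168.149.120 is an assumed master1 address.
demo_cfg="$(mktemp)"
printf '%s\n' \
    '--bind-address=192.168.149.120 \' \
    '--advertise-address=192.168.149.120 \' > "$demo_cfg"

set_apiserver_ip "$demo_cfg" 192.168.149.129
cat "$demo_cfg"
```

On master2 the call would be `set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.149.129`.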

Append the kubernetes bin directory to the PATH environment variable and apply the change

vim /etc/profile

export PATH=$PATH:/opt/kubernetes/bin/

source /etc/profile

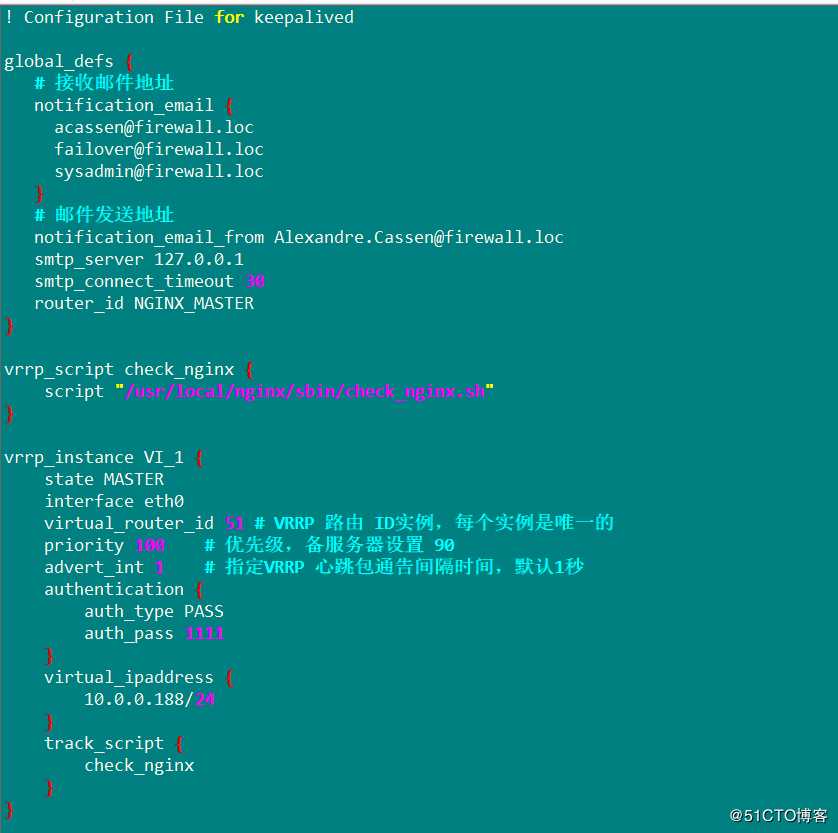

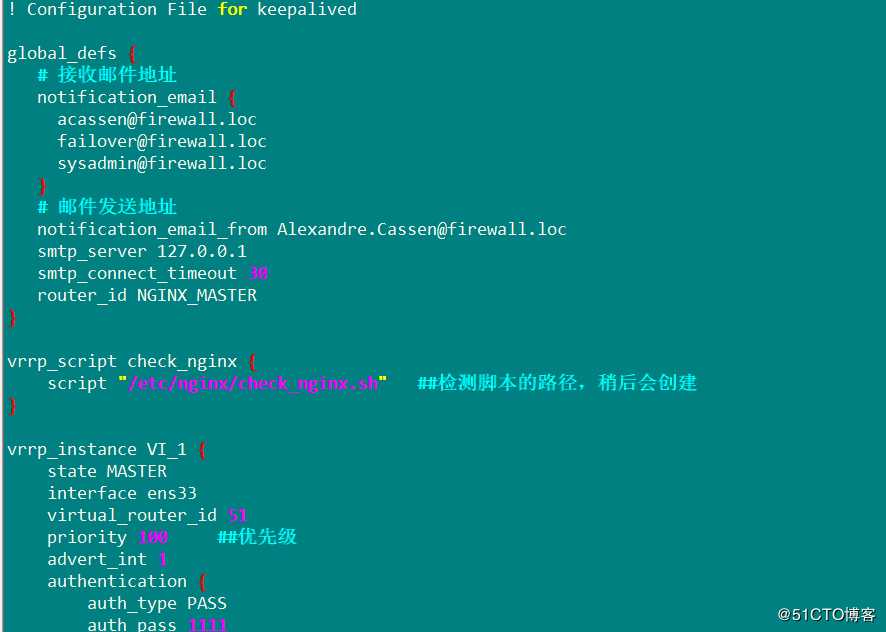

Deploy the keepalived service; first prepare its configuration file

vim keepalived.conf

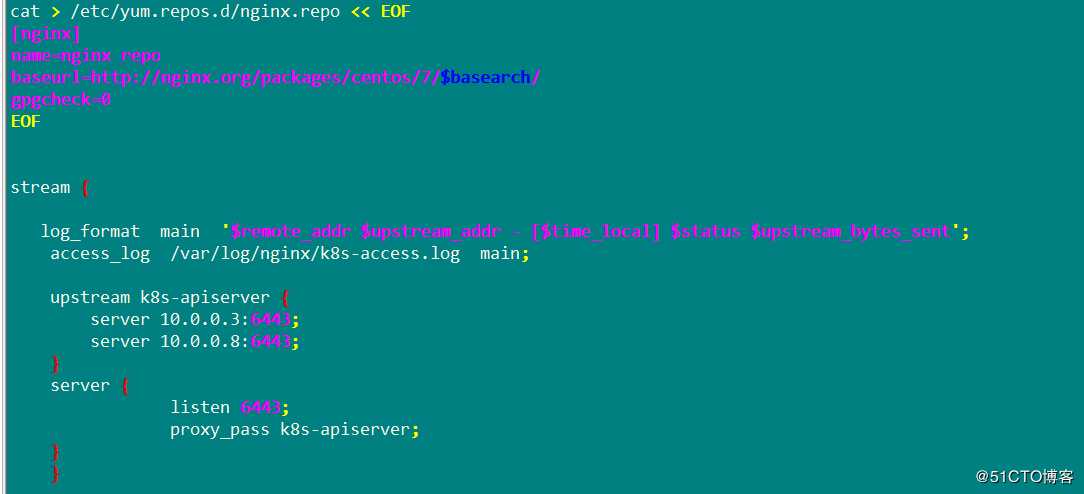

After placing the file in the home directory, set up the nginx yum repository

vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

When done, refresh the yum repository and install nginx

yum list

yum install nginx -y

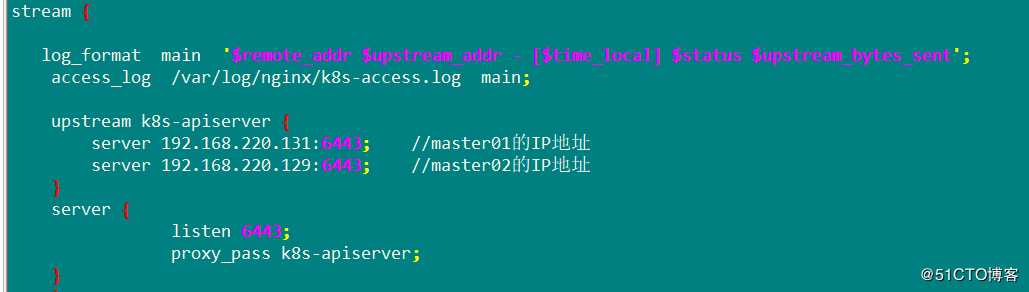

Add the layer-4 forwarding (stream) module configuration and start the service
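The actual nginx configuration is only visible in the screenshot. As a rough sketch of what a layer-4 forwarding block for the two apiservers usually looks like, the helper below prints a `stream` block. `render_stream_block` is a hypothetical name, the upstream name `k8s-apiserver` is an assumption, and the two backend addresses and the log format are inferred from the access log shown later in this article rather than taken from the author's file.

```shell
#!/usr/bin/env bash
# Print an nginx stream{} block that forwards TCP 6443 to two apiservers.
# $1 and $2 are the master addresses. Hypothetical helper for illustration.
render_stream_block() {
    local master1="$1" master2="$2"
    cat <<EOF
stream {
    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server ${master1}:6443;
        server ${master2}:6443;
    }

    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}
EOF
}

# Backend addresses as they appear in the access log (assumed to be the masters).
render_stream_block 192.168.142.120 192.168.142.129
```

A block like this belongs at the top level of /etc/nginx/nginx.conf, outside `http {}`. Running `nginx -t` before `systemctl start nginx` checks the syntax first.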

Install the keepalived service, overwrite its configuration with the prepared file, and then edit it

yum install keepalived -y

cp keepalived.conf /etc/keepalived/keepalived.conf

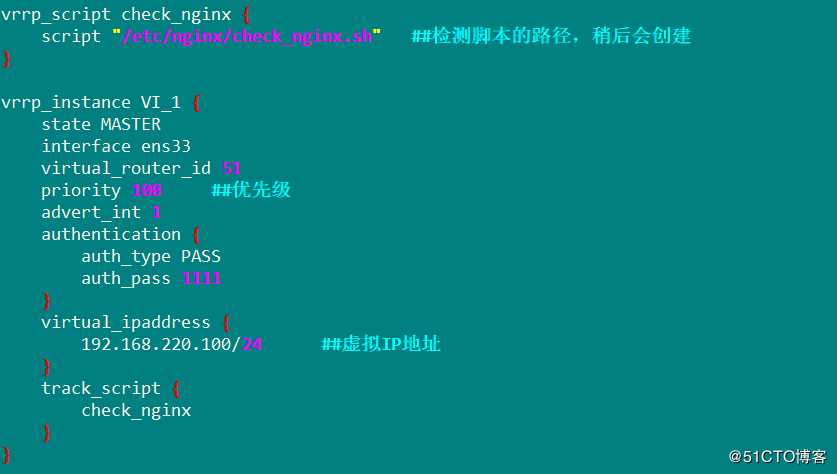

vim /etc/keepalived/keepalived.conf

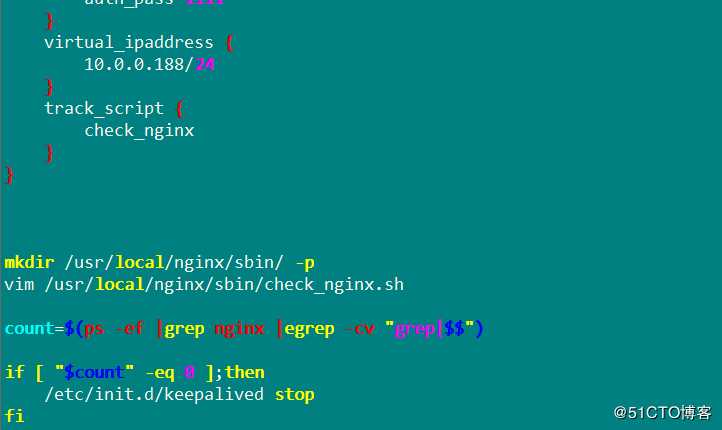
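The edited keepalived.conf is also shown only as a screenshot. A minimal sketch of a typical configuration for this layout follows. The interface `ens33`, the VIP 192.168.149.20 and the check script path come from this article. The instance name, `virtual_router_id`, priorities and `auth_pass` are assumptions, and `render_keepalived_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print a keepalived.conf for one load balancer.
# $1 = VRRP state (MASTER or BACKUP), $2 = priority.
render_keepalived_conf() {
    local state="$1" priority="$2"
    cat <<EOF
vrrp_script check_nginx {
    script "/etc/nginx/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface ens33            # NIC name used in this article
    virtual_router_id 51       # assumed; must match on both load balancers
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111         # assumed shared secret
    }
    virtual_ipaddress {
        192.168.149.20/24      # the VIP the nodes connect to
    }
    track_script {
        check_nginx
    }
}
EOF
}

# First load balancer; the second would use: render_keepalived_conf BACKUP 90
render_keepalived_conf MASTER 100
```

The load balancer with the higher priority holds the VIP while its keepalived is running, and the other one takes over when it stops.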

Create the nginx check script and test it

vim /etc/nginx/check_nginx.sh

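#!/bin/bash

# Count nginx processes, excluding the grep itself and this script's own PID

count=$(ps -ef | grep nginx | grep -Ecv "grep|$$")

# If nginx is gone, stop keepalived so the VIP moves to the other load balancer

if [ "$count" -eq 0 ]; then

    systemctl stop keepalived

fi

Make the script executable

chmod +x /etc/nginx/check_nginx.sh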

systemctl start keepalived.service

ip a

ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:eb:11:2a brd ff:ff:ff:ff:ff:ff

inet 192.168.149.140/24 brd 192.168.142.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::53ba:daab:3e22:e711/64 scope link

valid_lft forever preferred_lft forever
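Once keepalived is running, the VIP should show up as an additional address on whichever load balancer is currently active. A small sketch for checking that from a script instead of reading the `ip a` output by eye follows. `has_vip` is a hypothetical helper, and the sample text below is illustrative rather than captured from the author's machines.

```shell
#!/usr/bin/env bash
# Succeed if the given IPv4 address appears in `ip -o -4 addr` style output
# read from stdin (field 4 is ADDRESS/PREFIX in that one-line format).
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}

# Illustrative sample of `ip -o -4 addr show dev ens33` output.
sample='2: ens33    inet 192.168.149.140/24 brd 192.168.149.255 scope global ens33
2: ens33    inet 192.168.149.20/24 scope global secondary ens33'

if printf '%s\n' "$sample" | has_vip 192.168.149.20; then
    echo "VIP present"
else
    echo "VIP absent"
fi
```

On a load balancer the check would be `ip -o -4 addr show dev ens33 | has_vip 192.168.149.20`.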

Update the configuration files on the worker nodes

cd /opt/kubernetes/cfg/

# Point every configuration file at the VIP

vim /opt/kubernetes/cfg/bootstrap.kubeconfig

server: https://192.168.149.20:6443

# Change line 5 to the VIP address

vim /opt/kubernetes/cfg/kubelet.kubeconfig

server: https://192.168.149.20:6443

# Change line 5 to the VIP address

vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

server: https://192.168.149.20:6443

# Change line 5 to the VIP address
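Editing three files by hand is easy to get wrong, so the same change can be sketched with sed. This assumes GNU sed and that each kubeconfig has a single `server:` line. `point_at_vip` is a hypothetical helper, 192.168.149.120 is a stand-in for the old master address, and the demo works on scratch copies instead of /opt/kubernetes/cfg.

```shell
#!/usr/bin/env bash
# Replace the URL on the "server:" line of each given kubeconfig file.
# $1 = new apiserver URL, remaining arguments = files to rewrite.
point_at_vip() {
    local vip_url="$1"; shift
    local f
    for f in "$@"; do
        sed -i -E "s#^([[:space:]]*server:[[:space:]]*)https://.*#\1${vip_url}#" "$f"
    done
}

# Demo on scratch files; the old address is an assumption.
demo_dir="$(mktemp -d)"
for name in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
    printf '    server: https://192.168.149.120:6443\n' > "$demo_dir/$name"
done

point_at_vip https://192.168.149.20:6443 "$demo_dir"/*.kubeconfig
grep -H server "$demo_dir"/*.kubeconfig
```

On a real node the target would be `/opt/kubernetes/cfg/*.kubeconfig`. The kubelet and kube-proxy services would normally need a restart afterwards to pick up the new endpoint.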

After the replacement, verify the result

grep 20 *

bootstrap.kubeconfig: server: https://192.168.142.20:6443

kubelet.kubeconfig: server: https://192.168.142.20:6443

kube-proxy.kubeconfig: server: https://192.168.142.20:6443

On lb01, view the nginx k8s access log

tail /var/log/nginx/k8s-access.log

192.168.142.140 192.168.142.129:6443 - [08/Feb/2020:19:20:40 +0800] 200 1119

192.168.142.140 192.168.142.120:6443 - [08/Feb/2020:19:20:40 +0800] 200 1119

192.168.142.150 192.168.142.129:6443 - [08/Feb/2020:19:20:44 +0800] 200 1120

192.168.142.150 192.168.142.120:6443 - [08/Feb/2020:19:20:44 +0800] 200 1120
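In this log the first field is the client (a node) and the second is the apiserver that nginx forwarded to, so counting by the second field shows whether traffic is spread across both masters. A minimal sketch, with `count_by_upstream` as a hypothetical helper fed the four lines above:

```shell
#!/usr/bin/env bash
# Count log lines per upstream address (second whitespace-separated field).
count_by_upstream() {
    awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' | sort
}

count_by_upstream <<'EOF'
192.168.142.140 192.168.142.129:6443 - [08/Feb/2020:19:20:40 +0800] 200 1119
192.168.142.140 192.168.142.120:6443 - [08/Feb/2020:19:20:40 +0800] 200 1119
192.168.142.150 192.168.142.129:6443 - [08/Feb/2020:19:20:44 +0800] 200 1120
192.168.142.150 192.168.142.120:6443 - [08/Feb/2020:19:20:44 +0800] 200 1120
EOF
# prints:
# 192.168.142.120:6443 2
# 192.168.142.129:6443 2
```

Against the live file this would be `count_by_upstream < /var/log/nginx/k8s-access.log`.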

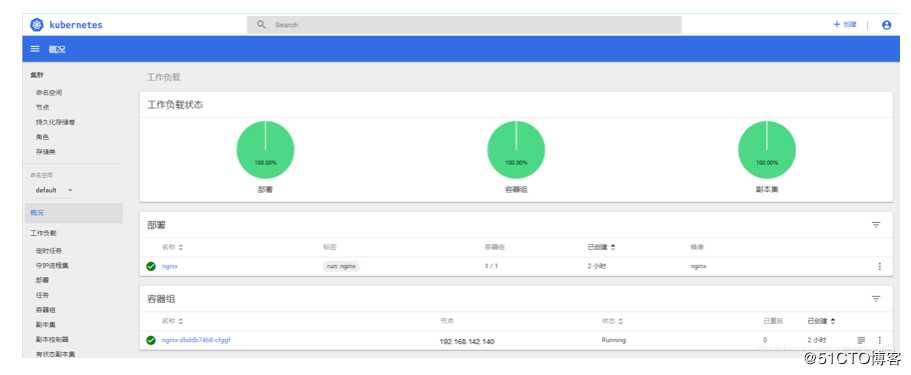

Create a Pod

Test creating a Pod

kubectl run nginx --image=nginx

Check the status

kubectl get pods
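A freshly created pod usually spends some time in a state such as ContainerCreating before it reaches Running. As a sketch, the check below reads the table printed by `kubectl get pods` and succeeds only when every pod reports Running. `all_pods_running` is a hypothetical helper, and the sample rows are made up for illustration.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods` table output on stdin. Succeed only if there is at
# least one pod and every pod's STATUS column (field 3) is "Running".
all_pods_running() {
    awk 'NR > 1 { seen = 1; if ($3 != "Running") bad = 1 }
         END { exit (bad || !seen) }'
}

# Made-up sample output, not captured from the author's cluster.
sample_pods='NAME                    READY   STATUS    RESTARTS   AGE
nginx-example-abcde     1/1     Running   0          1m'

if printf '%s\n' "$sample_pods" | all_pods_running; then
    echo "all pods running"
else
    echo "still waiting"
fi
```

On the master this would be `kubectl get pods | all_pods_running`, repeated in a loop with a short sleep if desired.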

Bind the cluster's anonymous user to the cluster-admin role

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

Create the UI (dashboard)

Create the dashboard working directory on master1

mkdir -p /k8s/dashboard

cd /k8s/dashboard

Upload the official files to this directory

Authorize access to the API

kubectl create -f dashboard-rbac.yaml

Create the secret

kubectl create -f dashboard-secret.yaml

Configure the application

kubectl create -f dashboard-configmap.yaml

Create the controller

kubectl create -f dashboard-controller.yaml

Publish the service for access

kubectl create -f dashboard-service.yaml

When finished, check that the pods were created in the kube-system namespace

kubectl get pods -n kube-system

Check how to access the dashboard

kubectl get pods,svc -n kube-system

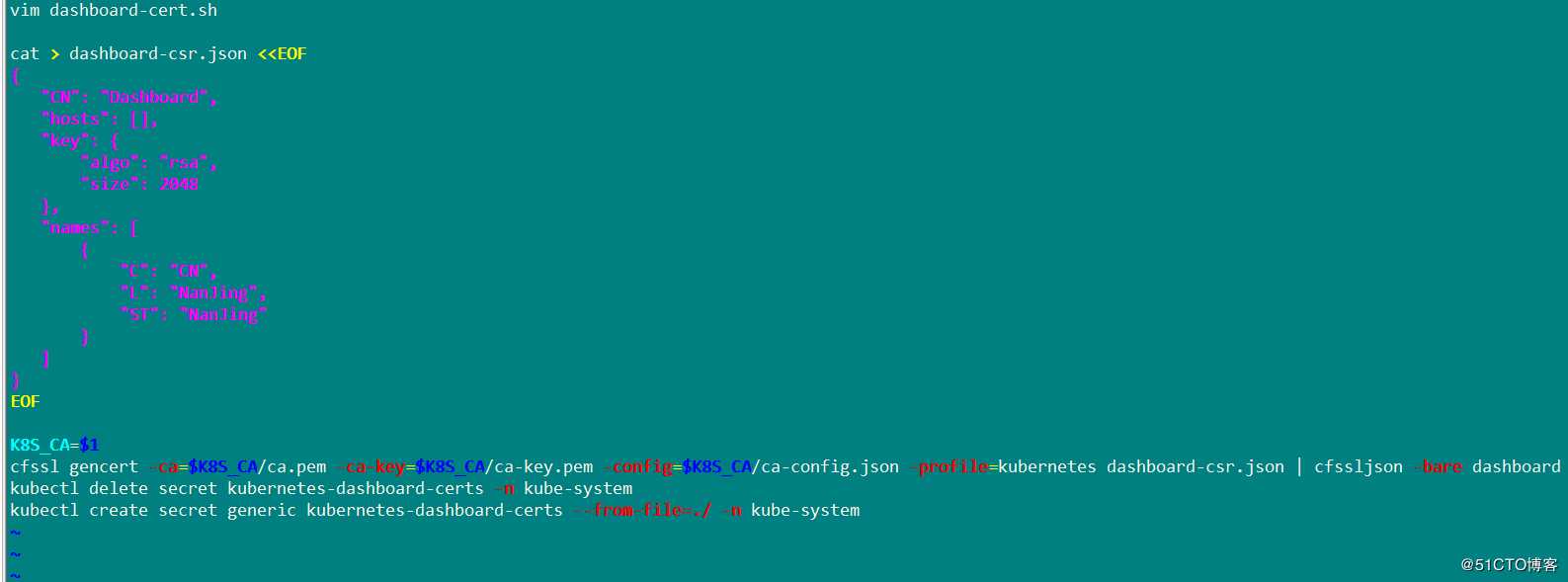

On the master, write the self-signed certificate script

Apply the new self-signed certificate

bash dashboard-cert.sh /root/k8s/apiserver/

Edit the yaml file and add the certificate arguments

vim dashboard-controller.yaml

- --tls-key-file=dashboard-key.pem

- --tls-cert-file=dashboard.pem

Redeploy

kubectl apply -f dashboard-controller.yaml

Generate the token

kubectl create -f k8s-admin.yaml

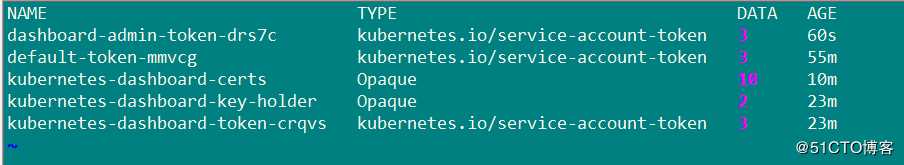

Save the token

kubectl get secret -n kube-system
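The secret list only shows names, and the token text itself comes from describing the right secret. The sketch below picks that secret's name out of the table. `find_token_secret` is a hypothetical helper, and the prefix `dashboard-admin-token` is an assumption, because the service account name depends on what k8s-admin.yaml defines. The sample row is made up.

```shell
#!/usr/bin/env bash
# From `kubectl get secret` table output on stdin, print the first secret
# whose name starts with the given prefix.
find_token_secret() {
    local prefix="$1"
    awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 { print $1; exit }'
}

# Made-up sample output; real names carry a random suffix.
sample_secrets='NAME                          TYPE                                  DATA   AGE
default-token-aaaaa           kubernetes.io/service-account-token   3      1d
dashboard-admin-token-bbbbb   kubernetes.io/service-account-token   3      10s'

printf '%s\n' "$sample_secrets" | find_token_secret dashboard-admin-token

# On the master (prefix is an assumption, adjust to k8s-admin.yaml):
#   name="$(kubectl get secret -n kube-system | find_token_secret dashboard-admin-token)"
#   kubectl describe secret "$name" -n kube-system
```

The `kubectl describe secret` output includes a `token:` field, which is the value to paste into the dashboard login page.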

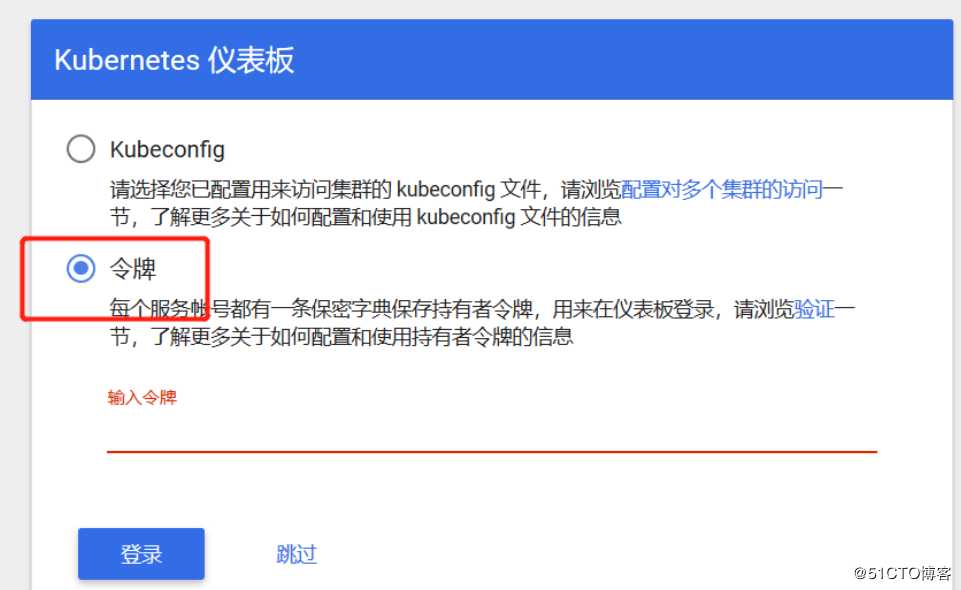

Copy and paste the token to log in

Original article: https://blog.51cto.com/14449536/2470078