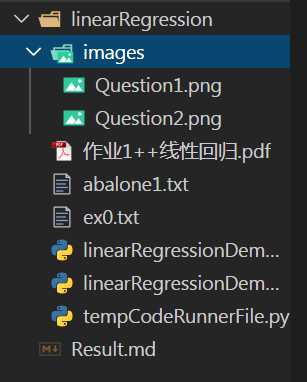

Tags: params, images, nump, algorithm, com, visualization, orm, lin, import

```python
import numpy as np
import matplotlib.pyplot as plt
import matplotlib
```

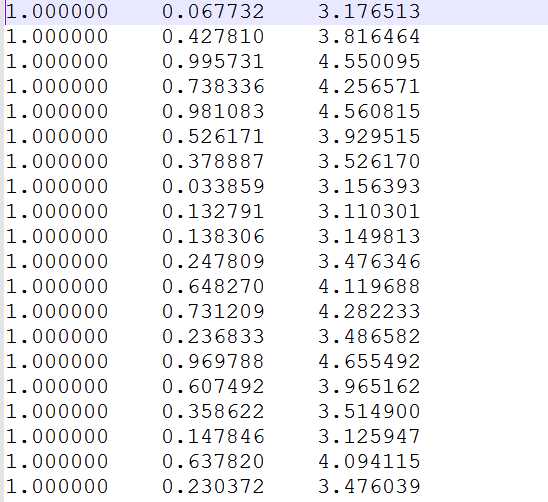

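```python
# Load the data: each line holds three tab-separated values (x0, x1, y)
def loadDataSet(filename):
    x = [[], []]  # x[0]: first column, x[1]: second column
    y = []
    with open(filename, 'r') as f:
        for line in f:
            line = line.strip()
            if not line:  # skip blank lines, e.g. an empty last line
                continue
            values = [float(v) for v in line.split('\t')]
            x[0].append(values[0])
            x[1].append(values[1])
            y.append(values[2])
    return x, y


# Convert to a column-oriented np.matrix:
# a list of k columns becomes an (n, k) matrix, a flat list an (n, 1) vector
def mat(x):
    return np.matrix(np.array(x)).T
```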
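Judging from how main and dataVisual use the loaded columns (res[0, 0] is treated as the intercept), the first column of ex0.txt is presumably a constant 1.0, the second the feature and the third the target; the post does not say this explicitly, so treat it as an assumption. A minimal sketch of the same loading step with np.loadtxt, run on a made-up three-line sample (the helper name load_xy is hypothetical), shows the shapes the rest of the script works with:

```python
import io
import numpy as np

# Made-up sample in the assumed ex0.txt layout (tab-separated):
# column 0 = constant 1.0, column 1 = feature x, column 2 = target y
sample = "1.0\t0.1\t3.2\n1.0\t0.5\t3.9\n1.0\t0.9\t4.5\n"

def load_xy(text):
    """Parse tab-separated rows into a design matrix X and a target column y."""
    data = np.loadtxt(io.StringIO(text), delimiter="\t")  # shape (n, 3)
    X = data[:, :2]  # shape (n, 2): [1.0, x] per row, like mat(xAll)
    y = data[:, 2:]  # shape (n, 1): column vector, like mat(y)
    return X, y

X, y = load_xy(sample)
print(X.shape, y.shape)  # (3, 2) (3, 1)
```

Plain 2-D ndarrays with the `@` operator behave the same way as the np.matrix objects used in the post for this purpose.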

# 可视化

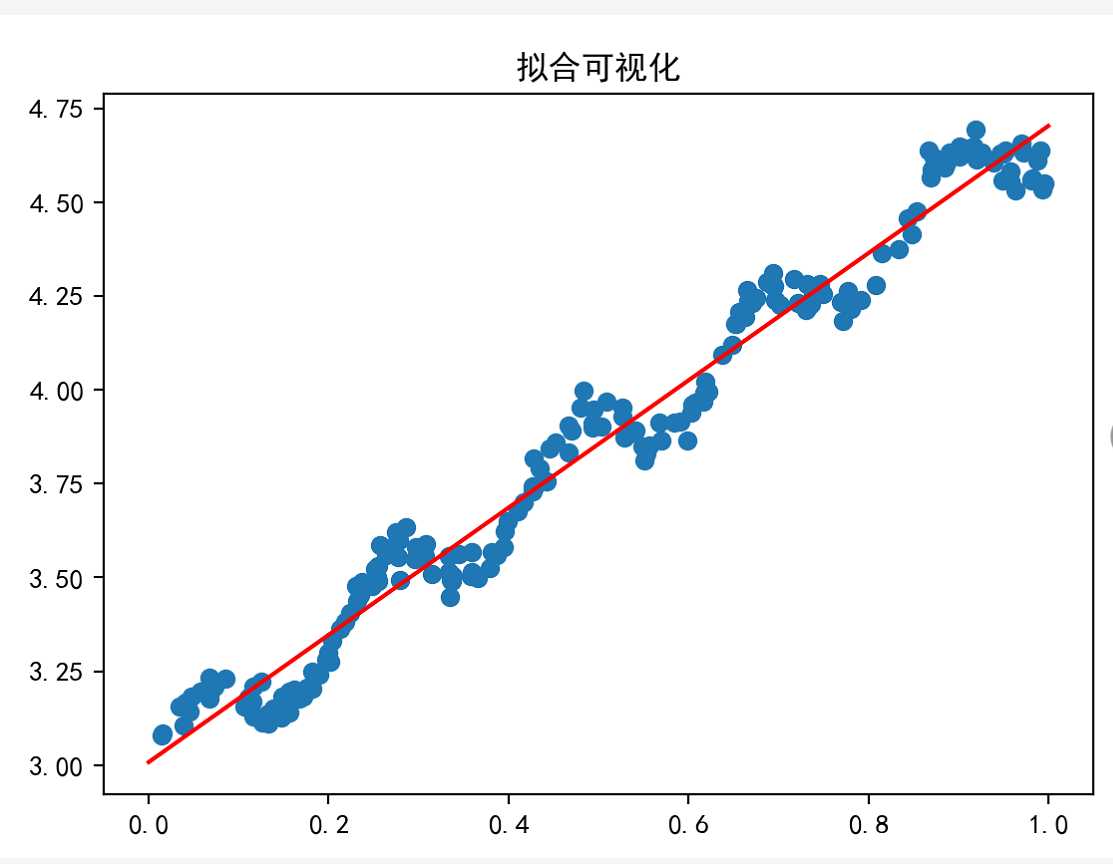

def dataVisual(xmat,ymat,k):

k1,k2=k[0],k[1]

matplotlib.rcParams['font.sans-serif'] = ['SimHei']

plt.title('拟合可视化')

plt.scatter(xmat[:,1].flatten().A[0],ymat[:,0].flatten().A[0])

x = np.linspace(0, 1, 50)

y=x*k2+k1

plt.plot(x,y,c='r')

plt.show()

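```python
# Solve for the regression parameters with the normal equation:
# theta = (X^T X)^(-1) X^T y
def normalEquation(xmat, ymat):
    temp = xmat.T.dot(xmat)  # X^T X
    # X^T X is invertible only if X has full column rank. Comparing the
    # determinant with exactly 0.0 is fragile in floating point, so check the rank.
    if np.linalg.matrix_rank(temp) < temp.shape[0]:
        print('Matrix is not invertible')
        return None
    inv = temp.I
    return inv.dot(xmat.T).dot(ymat)
```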
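For reference, the closed form that normalEquation implements follows from minimizing the squared error. Writing $X$ for xmat (one row per sample) and $y$ for ymat:

$$
\begin{aligned}
J(\theta) &= \tfrac{1}{2}\,\lVert X\theta - y \rVert^2 = \tfrac{1}{2}\,(X\theta - y)^\top (X\theta - y) \\
\nabla_\theta J(\theta) &= X^\top X\,\theta - X^\top y = 0 \\
\theta &= (X^\top X)^{-1} X^\top y
\end{aligned}
$$

The last step requires $X^\top X$ to be invertible, which holds exactly when the columns of $X$ are linearly independent; that is the condition the invertibility check in normalEquation guards.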

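```python
# Main program
def main():
    xAll, y = loadDataSet('linearRegression/ex0.txt')
    xmat = mat(xAll)  # shape (n, 2)
    ymat = mat(y)     # shape (n, 1)
    res = normalEquation(xmat, ymat)
    if res is None:  # X^T X was singular, so there is no unique solution
        return
    print(res)
    k1, k2 = res[0, 0], res[1, 0]  # intercept and slope
    dataVisual(xmat, ymat, [k1, k2])


if __name__ == "__main__":
    main()
```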
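To sanity-check the normal-equation approach without ex0.txt, one can fit points generated from a known line and compare the result with NumPy's least-squares solver. The sketch below uses made-up synthetic data (intercept 1, slope 2, small Gaussian noise). It calls np.linalg.solve on $X^\top X$ instead of forming the inverse explicitly; that gives the same answer when $X^\top X$ is invertible and is generally more numerically stable.

```python
import numpy as np

# Made-up synthetic data from a known line: y = 1 + 2x + noise
rng = np.random.default_rng(0)
n = 100
x = rng.uniform(0.0, 1.0, size=n)
y = 1.0 + 2.0 * x + rng.normal(0.0, 0.05, size=n)

X = np.column_stack([np.ones(n), x])  # design matrix [1, x], like xmat

# Normal equation: solve (X^T X) theta = X^T y without an explicit inverse
theta = np.linalg.solve(X.T @ X, X.T @ y)

# Reference least-squares solution from NumPy
theta_ref, *_ = np.linalg.lstsq(X, y, rcond=None)

print(theta)      # close to [1.0, 2.0]
print(theta_ref)  # the same values as theta
```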

import numpy as np

import matplotlib.pyplot as plt

import re

from sklearn.linear_model import SGDRegressor

# 将数据转化成为矩阵

def matrix(x):

return np.matrix(np.array(x)).T

# 加载数据

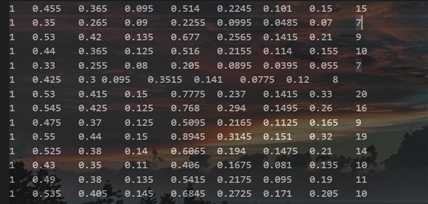

def loadData(fileName):

x=[]

y=[]

regex = re.compile('\s+')

with open(fileName,'r') as f:

readlines=f.readlines()

for line in readlines:

dataLine=regex.split(line)

dataList=[float(x) for x in dataLine[0:-1]]

xList=dataList[0:8]

x.append(xList)

y.append(dataList[-1])

return x,y
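The original loadData splits each line with the regex `\s+` and then discards the last field (`dataLine[0:-1]`). That works only if every line ends with a newline, because the trailing `\n` is what produces the empty last field; a final line without a newline would silently lose its target value. A small demonstration on a made-up line (the variable names are illustrative), together with Python's `str.split()`, which ignores leading and trailing whitespace:

```python
import re

regex = re.compile(r"\s+")

with_newline = "1 0.5 0.4 15\n"
without_newline = "1 0.5 0.4 15"  # e.g. a last line with no trailing newline

print(regex.split(with_newline))     # ['1', '0.5', '0.4', '15', '']
print(regex.split(without_newline))  # ['1', '0.5', '0.4', '15']

# Dropping the last field with [0:-1] removes the real target in the second case
print(regex.split(without_newline)[0:-1])  # ['1', '0.5', '0.4']

# str.split() with no arguments gives the same fields either way
print(with_newline.split())     # ['1', '0.5', '0.4', '15']
print(without_newline.split())  # ['1', '0.5', '0.4', '15']
```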

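```python
# Solve for the regression parameters with the normal equation:
# theta = (X^T X)^(-1) X^T y
def normalEquation(xmat, ymat):
    temp = xmat.T.dot(xmat)  # X^T X
    # X^T X is invertible only if X has full column rank. Comparing the
    # determinant with exactly 0.0 is fragile in floating point, so check the rank.
    if np.linalg.matrix_rank(temp) < temp.shape[0]:
        print('Matrix is not invertible')
        return None
    inv = temp.I
    return inv.dot(xmat.T).dot(ymat)
```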
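The invertibility check matters when features are linearly dependent, for example when a column is duplicated or rescaled: $X^\top X$ is then singular and the normal equation has no unique solution. In floating point the determinant of such a matrix can also come out as a tiny nonzero number instead of exactly 0.0, so testing `== 0.0` is unreliable. A sketch with a hypothetical design matrix whose third column is twice the second; np.linalg.lstsq reports the numerical rank and still returns the minimum-norm least-squares solution.

```python
import numpy as np

# Hypothetical design matrix: column 0 is the bias, column 2 = 2 * column 1,
# so the columns are linearly dependent and X^T X is singular
X = np.array([
    [1.0, 1.0, 2.0],
    [1.0, 2.0, 4.0],
    [1.0, 3.0, 6.0],
    [1.0, 4.0, 8.0],
])
y = np.array([3.0, 5.0, 7.0, 9.0])  # y = 1 + 2 * x1

# rcond sets the relative cutoff below which singular values count as zero
theta, _, rank, _ = np.linalg.lstsq(X, y, rcond=1e-10)

print(rank)                       # 2, fewer than the 3 columns
print(np.allclose(X @ theta, y))  # True: the data are still fitted exactly
print(theta)                      # minimum-norm solution, about [1.0, 0.4, 0.8]
```

Any theta with intercept 1 and `theta[1] + 2 * theta[2] == 2` fits these points equally well; lstsq picks the one with the smallest norm, which is why the weight is split between the two dependent columns.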

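```python
# Solve for the parameters with gradient descent
# (sklearn's SGDRegressor, i.e. stochastic gradient descent)
def gradientDecent(xmat, ymat):
    sgd = SGDRegressor()
    sgd.fit(xmat, ymat)
    return sgd.coef_  # feature weights only; the intercept is in sgd.intercept_
```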
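SGDRegressor does not run plain gradient descent on exactly the same objective as the normal equation: by default it fits a separate intercept (`fit_intercept=True`), adds a small L2 penalty (`penalty='l2'`, `alpha=0.0001`), and updates the weights one sample at a time, so its coefficients will generally differ somewhat from the normal-equation result. For comparison, here is a sketch of ordinary full-batch gradient descent on the unregularized squared error, written with NumPy only. This is a different technique from what SGDRegressor runs, and the helper name, learning rate, iteration count and data are all illustrative assumptions.

```python
import numpy as np

def batch_gradient_descent(X, y, lr=0.1, n_iters=5000):
    """Minimize (1 / 2n) * ||X @ theta - y||^2 with full-batch gradient descent."""
    n, d = X.shape
    theta = np.zeros(d)
    for _ in range(n_iters):
        grad = X.T @ (X @ theta - y) / n  # gradient of (1 / 2n) * ||X theta - y||^2
        theta -= lr * grad
    return theta

# Hypothetical synthetic data: y = 1 + 2x plus small noise
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, size=200)
y = 1.0 + 2.0 * x + rng.normal(0.0, 0.05, size=200)
X = np.column_stack([np.ones_like(x), x])  # bias column plus feature

theta_gd = batch_gradient_descent(X, y)
theta_ne = np.linalg.solve(X.T @ X, X.T @ y)  # normal-equation solution

print(theta_gd)
print(theta_ne)  # agrees closely with theta_gd
```

Gradient descent needs a learning rate and enough iterations to converge, whereas the normal equation solves the problem in one step; in exchange, gradient descent avoids solving a d-by-d linear system, which matters when the number of features is large.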

# 运行程序

def main():

x,y=loadData('linearRegression/abalone1.txt')

xmat=matrix(x).T

ymat=matrix(y)

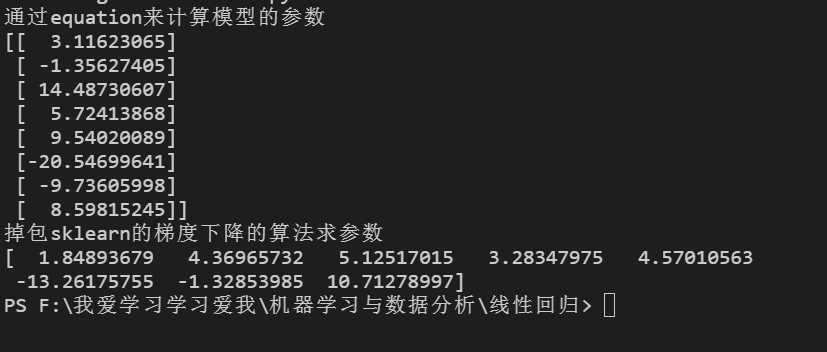

# 通过equation来计算模型的参数

theta=normalEquation(xmat,ymat)

print('通过equation来计算模型的参数')

print(theta)

# 掉包sklearn的梯度下降的算法求参数

print('掉包sklearn的梯度下降的算法求参数')

gtheta=gradientDecent(x,y)

print(gtheta)

if __name__ == "__main__":

main()
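One more difference between the two results printed by main: normalEquation fits only the columns it is given, so unless the data file already contains a constant column (not checked here), its model has no intercept and is forced through the origin. SGDRegressor, by contrast, learns an intercept by default and stores it in `intercept_` rather than `coef_`. A sketch of prepending a bias column before solving, on hypothetical noise-free data (the helper name add_bias_column is illustrative):

```python
import numpy as np

def add_bias_column(X):
    """Prepend a column of ones so the first coefficient acts as the intercept."""
    X = np.asarray(X, dtype=float)
    return np.hstack([np.ones((X.shape[0], 1)), X])

# Hypothetical data generated with intercept 3 and coefficients [0.5, -1.0]
rng = np.random.default_rng(2)
X_raw = rng.uniform(0.0, 1.0, size=(50, 2))
y = 3.0 + X_raw @ np.array([0.5, -1.0])

X_bias = add_bias_column(X_raw)

# Normal equation without and with the bias column
theta_no_bias = np.linalg.solve(X_raw.T @ X_raw, X_raw.T @ y)
theta_bias = np.linalg.solve(X_bias.T @ X_bias, X_bias.T @ y)

print(theta_bias)     # recovers [3.0, 0.5, -1.0] up to rounding
print(theta_no_bias)  # distorted, because the fit must pass through the origin
```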

Link (Baidu Netdisk): https://pan.baidu.com/s/1JXrE4kbYsdVSSWjTUSDT3g

Extraction code: obxh

Link (Baidu Netdisk): https://pan.baidu.com/s/13wXq52wpKHbIlf3v21Qcgg

Extraction code: w4m5

Implementing univariate and multivariate linear regression with the normal equation in Python


Original article: https://www.cnblogs.com/mengxiaoleng/p/12506777.html