
```python
# Import the required modules
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
import torch.backends.cudnn as cudnn
import numpy as np
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
from collections import Counter

# Hyperparameters
BATCHSIZE = 100
DOWNLOAD_MNIST = False   # unused in this CIFAR-10 example
EPOCHES = 20
LR = 0.001
```

```python
# Define the model architectures; these three networks are fairly similar
class CNNNet(nn.Module):
    def __init__(self):
        super(CNNNet, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.fc1 = nn.Linear(1296, 128)   # 36 * 6 * 6 = 1296
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))   # 3x32x32 -> 16x14x14
        x = self.pool2(F.relu(self.conv2(x)))   # 16x14x14 -> 36x6x6
        x = x.view(-1, 36 * 6 * 6)
        # Note: ReLU is also applied to the final logits, clamping negative scores to 0;
        # classifiers usually pass raw logits to CrossEntropyLoss
        x = F.relu(self.fc2(F.relu(self.fc1(x))))
        return x


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 5)
        self.pool1 = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(16, 36, 5)
        self.pool2 = nn.MaxPool2d(2, 2)
        self.aap = nn.AdaptiveAvgPool2d(1)   # global average pooling instead of fully connected layers
        self.fc3 = nn.Linear(36, 10)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))   # 3x32x32 -> 16x14x14
        x = self.pool2(F.relu(self.conv2(x)))   # 16x14x14 -> 36x5x5
        x = self.aap(x)                         # 36x5x5 -> 36x1x1
        x = x.view(x.shape[0], -1)              # flatten to (batch, 36)
        x = self.fc3(x)
        return x


class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        out = F.relu(self.conv1(x))        # 3x32x32 -> 6x28x28
        out = F.max_pool2d(out, 2)         # -> 6x14x14
        out = F.relu(self.conv2(out))      # -> 16x10x10
        out = F.max_pool2d(out, 2)         # -> 16x5x5
        out = out.view(out.size(0), -1)    # flatten to (batch, 400)
        out = F.relu(self.fc1(out))
        out = F.relu(self.fc2(out))
        out = self.fc3(out)
        return out
```
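The hard-coded flatten sizes in these models (36 * 6 * 6 = 1296 in CNNNet, 16 * 5 * 5 = 400 in LeNet) follow from the usual output-size formula for convolution and pooling layers, out = (in + 2 * padding - kernel) // stride + 1. The minimal pure-Python sketch below traces a 32x32 CIFAR-10 image through the layer stacks; the helpers `out_size` and `trace` are hypothetical names used only for this check. For Net, the 36x5x5 map is then reduced to 36 values by AdaptiveAvgPool2d(1), whatever its spatial size.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d / MaxPool2d layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Apply a sequence of (kernel, stride) layers to a square input of the given size."""
    for kernel, stride in layers:
        size = out_size(size, kernel, stride)
    return size


conv5, conv3, pool2 = (5, 1), (3, 1), (2, 2)

# CNNNet: conv5 -> pool -> conv3 -> pool, ending with 36 channels
cnnnet_hw = trace(32, [conv5, pool2, conv3, pool2])
# Net and LeNet: conv5 -> pool -> conv5 -> pool
net_hw = trace(32, [conv5, pool2, conv5, pool2])

cnnnet_flat = 36 * cnnnet_hw * cnnnet_hw   # input size of CNNNet.fc1
lenet_flat = 16 * net_hw * net_hw          # input size of LeNet.fc1
print(cnnnet_hw, cnnnet_flat)
print(net_hw, lenet_flat)
```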

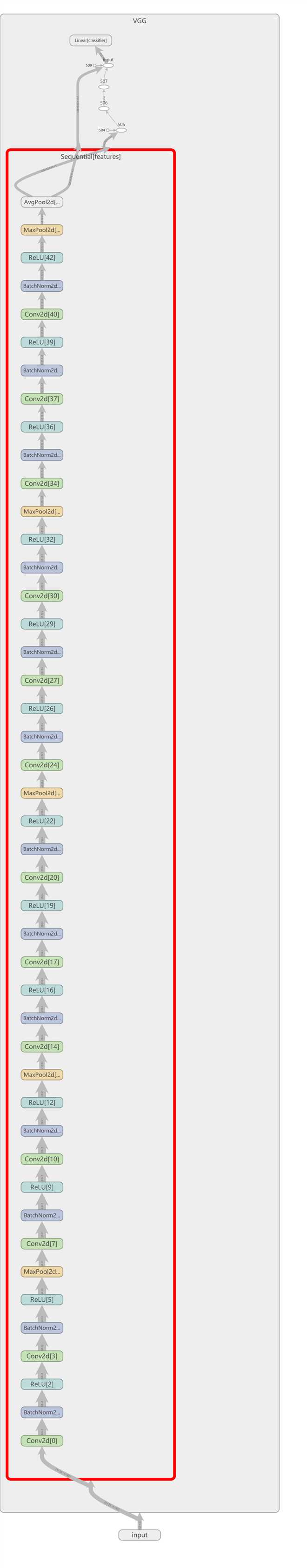

```python
# Layer configurations: numbers are conv output channels, 'M' is a 2x2 max-pool
cfg = {
    'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


class VGG(nn.Module):
    def __init__(self, vgg_name):
        super(VGG, self).__init__()
        self.features = self._make_layers(cfg[vgg_name])
        self.classifier = nn.Linear(512, 10)

    def forward(self, x):
        out = self.features(x)            # (batch, 512, 1, 1) for 32x32 inputs
        out = out.view(out.size(0), -1)   # flatten to (batch, 512)
        out = self.classifier(out)
        return out

    def _make_layers(self, cfg):
        layers = []
        in_channels = 3
        for x in cfg:
            if x == 'M':
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                # 3x3 conv with padding=1 keeps the spatial size; BatchNorm and ReLU follow
                layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),
                           nn.BatchNorm2d(x),
                           nn.ReLU(inplace=True)]
                in_channels = x
        layers += [nn.AvgPool2d(kernel_size=1, stride=1)]   # 1x1 window: leaves values unchanged
        return nn.Sequential(*layers)
```
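Because every 3x3 convolution uses padding=1, only the five 'M' entries change the spatial size: 32 / 2^5 = 1, so the features flatten to 512 x 1 x 1 = 512 values, which is why the classifier is nn.Linear(512, 10). The sketch below re-walks the same cfg lists in pure Python (no PyTorch); `summarize` is a hypothetical helper. It also counts the convolution layers: 13 for VGG16 and 16 for VGG19, since the names also count the three fully connected layers of the original ImageNet VGG, whereas this CIFAR-10 variant keeps a single linear layer.

```python
cfg = {
    'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def summarize(layer_cfg, size=32, in_channels=3):
    """Return (number of convs, final channels, final spatial size) for a VGG config."""
    convs = 0
    for x in layer_cfg:
        if x == 'M':
            size //= 2          # MaxPool2d(kernel_size=2, stride=2) halves H and W
        else:
            in_channels = x     # 3x3 conv with padding=1 keeps H and W unchanged
            convs += 1
    return convs, in_channels, size


for name, layer_cfg in cfg.items():
    convs, channels, size = summarize(layer_cfg)
    # length of the feature vector that reaches nn.Linear(512, 10)
    print(name, convs, channels * size * size)
```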

```python
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Data
print('==> Preparing data..')
transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

DATA_ROOT = r'D:\JupyterNotebook\PyTorch深度学习代码\PyTorch深度学习代码及数据\pytorch-04\data'

trainset = torchvision.datasets.CIFAR10(root=DATA_ROOT, train=True, download=False, transform=transform_train)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root=DATA_ROOT, train=False, download=False, transform=transform_test)
testloader = torch.utils.data.DataLoader(testset, batch_size=100, shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Model
print('==> Building model..')
net1 = CNNNet()
net2 = Net()
net3 = LeNet()
net4 = VGG('VGG16')
```
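ToTensor scales uint8 pixels into [0, 1], and Normalize then computes (x - mean) / std independently for each channel, using the per-channel constants passed above (commonly used CIFAR-10 statistics). Both pipelines apply the same normalization; RandomCrop and RandomHorizontalFlip augment only the training images. A pure-Python sketch of the arithmetic for a single RGB pixel, with hypothetical helper names and no torchvision dependency:

```python
MEAN = (0.4914, 0.4822, 0.4465)
STD = (0.2023, 0.1994, 0.2010)


def to_tensor_value(byte_value):
    """Mimic ToTensor's scaling of a uint8 value in [0, 255] to a float in [0.0, 1.0]."""
    return byte_value / 255.0


def normalize(pixel, mean=MEAN, std=STD):
    """Mimic Normalize: (x - mean) / std applied channel by channel."""
    return tuple((x - m) / s for x, m, s in zip(pixel, mean, std))


white = normalize(tuple(to_tensor_value(255) for _ in range(3)))
black = normalize(tuple(to_tensor_value(0) for _ in range(3)))
print([round(v, 3) for v in white])   # brightest pixel after normalization
print([round(v, 3) for v in black])   # darkest pixel after normalization
```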

```python
# Put the three network models in a list
mlps = [net1.to(device), net2.to(device), net3.to(device)]
# One Adam optimizer with a separate parameter group per model
optimizer = torch.optim.Adam([{"params": mlp.parameters()} for mlp in mlps], lr=LR)
loss_function = nn.CrossEntropyLoss()

for ep in range(EPOCHES):
    for img, label in trainloader:
        img, label = img.to(device), label.to(device)
        optimizer.zero_grad()   # clear the gradients of all networks
        for mlp in mlps:
            mlp.train()
            out = mlp(img)
            loss = loss_function(out, label)
            loss.backward()     # each network accumulates its own gradients
        optimizer.step()

    pre = []
    vote_correct = 0
    mlps_correct = [0 for i in range(len(mlps))]
    with torch.no_grad():       # no gradients are needed for evaluation
        for img, label in testloader:
            img, label = img.to(device), label.to(device)
            for i, mlp in enumerate(mlps):
                mlp.eval()
                out = mlp(img)
                _, prediction = torch.max(out, 1)   # index of the max along each row
                pre_num = prediction.cpu().numpy()
                mlps_correct[i] += (pre_num == label.cpu().numpy()).sum()
                pre.append(pre_num)
            arr = np.array(pre)                     # shape: (num_models, batch_size)
            pre.clear()
            # majority vote across the models for every sample in the batch
            result = [Counter(arr[:, i]).most_common(1)[0][0] for i in range(arr.shape[1])]
            vote_correct += (np.array(result) == label.cpu().numpy()).sum()

    num_test = len(testloader.dataset)
    print(f"epoch {ep} ensemble accuracy: {100.0 * vote_correct / num_test}")
    for idx, correct in enumerate(mlps_correct):
        print(f"model {idx} accuracy: {100.0 * correct / num_test}")
```
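The voting step in the test loop is plain hard (majority) voting: for each test image, the class predicted by the most models wins. Two details are worth making explicit. First, Counter.most_common orders equal counts by first occurrence, so on a three-way split the first model in the list (CNNNet here) decides. Second, accuracy as a percentage is 100 * correct / number of test images; with 10,000 test images in 100 batches of 100, that happens to equal correct / len(testloader). A stdlib-only sketch with toy predictions; `majority_vote` and `accuracy_percent` are hypothetical helper names.

```python
from collections import Counter


def majority_vote(predictions):
    """predictions[m][i] is model m's class for sample i; return the voted class per sample.

    Counter.most_common orders equal counts by first occurrence, so when all
    models disagree, the first model in the list wins the tie.
    """
    num_samples = len(predictions[0])
    return [Counter(p[i] for p in predictions).most_common(1)[0][0]
            for i in range(num_samples)]


def accuracy_percent(pred, labels):
    correct = sum(int(p == y) for p, y in zip(pred, labels))
    return 100.0 * correct / len(labels)


# three models, four test samples (toy values)
preds = [
    [3, 1, 7, 2],   # model 0
    [3, 5, 8, 2],   # model 1
    [0, 5, 9, 4],   # model 2
]
labels = [3, 5, 9, 2]
voted = majority_vote(preds)
print(voted)                             # [3, 5, 7, 2]
print(accuracy_percent(voted, labels))   # 75.0
```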

| Epoch | Ensemble (%) | Model 0: CNNNet (%) | Model 1: Net (%) | Model 2: LeNet (%) |
|---|---|---|---|---|
| 0 | 48.14 | 50.01 | 38.74 | 44.7 |
| 1 | 54.28 | 55.36 | 43.8 | 49.0 |
| 2 | 58.89 | 60.68 | 48.3 | 52.31 |
| 3 | 60.43 | 61.96 | 49.04 | 54.25 |
| 4 | 62.24 | 63.52 | 51.0 | 54.64 |
| 5 | 62.62 | 63.41 | 52.57 | 56.15 |
| 6 | 63.88 | 64.45 | 53.33 | 57.71 |
| 7 | 64.24 | 65.83 | 52.49 | 56.9 |
| 8 | 66.51 | 67.42 | 55.8 | 59.62 |
| 9 | 66.83 | 67.33 | 56.49 | 59.85 |
| 10 | 67.67 | 67.8 | 56.91 | 61.75 |
| 11 | 67.96 | 68.84 | 56.77 | 61.41 |
| 12 | 68.75 | 68.42 | 58.25 | 62.13 |
| 13 | 68.13 | 67.91 | 58.76 | 60.91 |
| 14 | 68.91 | 68.83 | 58.62 | 62.8 |
| 15 | 69.3 | 69.12 | 60.16 | 63.29 |
| 16 | 69.15 | 68.66 | 59.63 | 62.86 |
| 17 | 70.94 | 69.5 | 60.39 | 64.2 |
| 18 | 70.97 | 69.43 | 60.27 | 64.34 |
| 19 | 71.33 | 69.99 | 60.71 | 64.81 |
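The vote does not beat its best member from the start. A short stdlib-only script over the logged test accuracies (copied from the training log above) shows that, in this single run, CNNNet is the strongest single model at every epoch, the ensemble trails it for epochs 0 to 11, and it only pulls ahead from epoch 12 onward, finishing 1.34 points higher.

```python
# Test accuracies (%) copied from the training log above
ensemble = [48.14, 54.28, 58.89, 60.43, 62.24, 62.62, 63.88, 64.24, 66.51, 66.83,
            67.67, 67.96, 68.75, 68.13, 68.91, 69.3, 69.15, 70.94, 70.97, 71.33]
models = {
    "CNNNet": [50.01, 55.36, 60.68, 61.96, 63.52, 63.41, 64.45, 65.83, 67.42, 67.33,
               67.8, 68.84, 68.42, 67.91, 68.83, 69.12, 68.66, 69.5, 69.43, 69.99],
    "Net": [38.74, 43.8, 48.3, 49.04, 51.0, 52.57, 53.33, 52.49, 55.8, 56.49,
            56.91, 56.77, 58.25, 58.76, 58.62, 60.16, 59.63, 60.39, 60.27, 60.71],
    "LeNet": [44.7, 49.0, 52.31, 54.25, 54.64, 56.15, 57.71, 56.9, 59.62, 59.85,
              61.75, 61.41, 62.13, 60.91, 62.8, 63.29, 62.86, 64.2, 64.34, 64.81],
}

# Best single model at each epoch
best_model = [max(models, key=lambda name: models[name][ep]) for ep in range(len(ensemble))]
# Gain of the vote over the best single model at each epoch (percentage points)
gains = [round(e - max(m[ep] for m in models.values()), 2)
         for ep, e in enumerate(ensemble)]
winning_epochs = [ep for ep, g in enumerate(gains) if g > 0]
print(gains)
print(winning_epochs)
```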

```python
# Train VGG16 on its own with the same optimizer settings
mlps = [net4.to(device)]
optimizer = torch.optim.Adam([{"params": mlp.parameters()} for mlp in mlps], lr=LR)
loss_function = nn.CrossEntropyLoss()

for ep in range(EPOCHES):
    for img, label in trainloader:
        img, label = img.to(device), label.to(device)
        optimizer.zero_grad()   # clear the gradients
        for mlp in mlps:
            mlp.train()
            out = mlp(img)
            loss = loss_function(out, label)
            loss.backward()     # compute the gradients
        optimizer.step()

    mlps_correct = [0 for i in range(len(mlps))]
    with torch.no_grad():       # no gradients are needed for evaluation
        for img, label in testloader:
            img, label = img.to(device), label.to(device)
            for i, mlp in enumerate(mlps):
                mlp.eval()
                out = mlp(img)
                _, prediction = torch.max(out, 1)   # index of the max along each row
                mlps_correct[i] += (prediction == label).sum().item()

    for idx, correct in enumerate(mlps_correct):
        print(f"VGG16 accuracy at epoch {ep}: {100.0 * correct / len(testloader.dataset)}")
```
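For scale: with CIFAR-10's 50,000 training and 10,000 test images, the loaders defined earlier give the counts below. Each training batch triggers one optimizer.step(), so a 20-epoch run performs 20 x 391 Adam updates. This is pure arithmetic; the only assumption is DataLoader's default drop_last=False, which keeps the final partial batch.

```python
import math

TRAIN_SIZE, TEST_SIZE = 50_000, 10_000   # CIFAR-10 split sizes
TRAIN_BATCH, TEST_BATCH = 128, 100       # batch sizes of trainloader / testloader
EPOCHES = 20

steps_per_epoch = math.ceil(TRAIN_SIZE / TRAIN_BATCH)           # len(trainloader)
last_batch = TRAIN_SIZE - (steps_per_epoch - 1) * TRAIN_BATCH   # size of the final partial batch
test_batches = math.ceil(TEST_SIZE / TEST_BATCH)                # len(testloader)
total_steps = steps_per_epoch * EPOCHES                         # optimizer updates in the run
print(steps_per_epoch, last_batch, test_batches, total_steps)
```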

| Epoch | VGG16 test accuracy (%) |
|---|---|
| 0 | 49.72 |
| 1 | 64.76 |
| 2 | 72.55 |
| 3 | 75.42 |
| 4 | 79.24 |
| 5 | 79.82 |
| 6 | 82.19 |
| 7 | 82.14 |
| 8 | 84.04 |
| 9 | 84.61 |
| 10 | 87.26 |
| 11 | 85.57 |
| 12 | 85.55 |
| 13 | 86.79 |
| 14 | 88.49 |
| 15 | 87.19 |
| 16 | 88.86 |
| 17 | 88.56 |
| 18 | 88.84 |
| 19 | 88.19 |
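The comparison this post makes can be quantified from the two logs. In this run VGG16 already exceeds the three-model ensemble's final 71.33% at epoch 2, peaks at 88.86% at epoch 16, and finishes 16.86 points ahead. A stdlib-only sketch with the logged numbers hard-coded; it summarizes a single run of each setup.

```python
# VGG16 test accuracies (%) copied from the log above
vgg16 = [49.72, 64.76, 72.55, 75.42, 79.24, 79.82, 82.19, 82.14, 84.04, 84.61,
         87.26, 85.57, 85.55, 86.79, 88.49, 87.19, 88.86, 88.56, 88.84, 88.19]
ensemble_final = 71.33   # ensemble accuracy after its last epoch

best_epoch = max(range(len(vgg16)), key=lambda ep: vgg16[ep])
first_epoch_above = next(ep for ep, acc in enumerate(vgg16) if acc > ensemble_final)
final_gap = round(vgg16[-1] - ensemble_final, 2)
print(best_epoch, vgg16[best_epoch])   # peak accuracy and its epoch
print(first_epoch_above)               # first epoch where VGG16 beats the ensemble's final score
print(final_gap)                       # margin after the last epoch
```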

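```python
from tensorboardX import SummaryWriter

# Dummy CIFAR-10-shaped input (batch, channels, height, width),
# placed on the same device as the trained net4
input1 = torch.rand(128, 3, 32, 32).to(device)
with SummaryWriter(log_dir='logs', comment='Net') as w:
    w.add_graph(net4, (input1,))
```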

```shell
tensorboard --logdir=logs --port 6006
```

The resulting graph view shows the VGG16 structure:

Comparison of VGG16 and the ensemble model (LeNet, CNN, and Net with average pooling)


Original post: https://www.cnblogs.com/Knight66666/p/12684447.html