
Here is another site-scraping tool written with BeautifulSoup, to get a feel for how powerful BeautifulSoup is.

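Given a novel's index page, the script fetches every chapter and merges them locally into the full text of the novel. It is not smart, though: the extraction code has to be adapted for each site.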
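
Because the selectors differ from site to site, one way to keep those differences in one place is a lookup table keyed by hostname. This is only a minimal sketch: `SITE_RULES` and `rules_for` are hypothetical names that do not appear in the script, and the single entry assumes that the URL in the script's trailing comment is the site its hard-coded values (`booktext`, `readtext`, `gbk`) were written for.

```python
from urllib.parse import urlparse

# Hypothetical per-site table. The one entry mirrors the values that the
# script hard-codes; the hostname is assumed from its trailing comment.
SITE_RULES = {
    "www.lueqiu.com": {
        "index_class": "booktext",  # class of the div holding the chapter list
        "content_id": "readtext",   # id of the element holding the chapter text
        "encoding": "gbk",          # encoding forced when parsing the index page
    },
}


def rules_for(url):
    """Return the rule set for the URL's hostname, or raise KeyError if unknown."""
    host = urlparse(url).hostname
    if host not in SITE_RULES:
        raise KeyError("no scraping rules defined for {!r}".format(host))
    return SITE_RULES[host]


if __name__ == "__main__":
    print(rules_for("http://www.lueqiu.com/")["content_id"])
```

Supporting another site would then mean adding one dictionary entry rather than editing the parsing functions, provided the new site's pages have the same overall shape.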

    #!/usr/bin/env python3
    import os
    import sys
    import time
    import chardet
    import urllib.request as ur
    from urllib.parse import urljoin
    from bs4 import BeautifulSoup
    from threading import Thread


    class Download(Thread):
        # One thread per chapter
        def __init__(self, filepath, info):
            Thread.__init__(self)
            self.filepath = filepath
            (self.link, self.chapter) = info

        def run(self):
            print('Downloading: ' + self.chapter)
            section(self.filepath, self.chapter, self.link)
            print('Finished: ' + self.chapter)


    def getData(url):
        # Originally used to detect the page encoding. Abandoned because
        # BeautifulSoup can detect the encoding by itself.
        response = ur.urlopen(url, timeout=10)
        html = response.read()
        charinfo = chardet.detect(html)
        charsets = charinfo['encoding'] or 'utf8'
        return html.decode(charsets)


    def merge(tmpFiles, targetFile):
        # Append the downloaded chapters to the target file, then delete them
        with open(targetFile, 'a', encoding='utf-8') as wfile:
            for tmpFile in tmpFiles:
                with open(tmpFile, 'r', encoding='utf-8') as rfile:
                    wfile.write(rfile.read())
                os.remove(tmpFile)


    def content(link):
        # Extract the novel text from a chapter page.
        # For a different site, change the extraction code in this function.
        html = ur.urlopen(link, timeout=10)
        soup = BeautifulSoup(html, 'html.parser')
        # BeautifulSoup decodes &nbsp; to U+00A0 (non-breaking space), which is
        # assumed to be the character the original replaced with a newline.
        contents = soup.find(id='readtext').p.span.text.replace('\xa0', '\n')
        return contents


    def section(filepath, chapter, link):
        # Download one chapter
        while True:  # retry until the request succeeds
            try:
                text = content(link)
                with open(filepath, 'w', encoding='utf-8') as nfile:
                    nfile.write(chapter + '\n' + text + '\n')
                break
            except Exception:
                time.sleep(1)


    def index(url):
        # Return the book title and a list of (chapter URL, chapter name) pairs
        indexs = []
        while True:  # retry until the request succeeds
            try:
                html = ur.urlopen(url, timeout=10)
                # html = html.read().decode('gb2312')
                # html = getData(url)
                # BeautifulSoup can detect the encoding, but it identifies GBK
                # pages as GB2312, which can make part of the page data fail to
                # load, so GBK is forced here.
                soup = BeautifulSoup(html, 'html.parser', from_encoding='gbk')
                break
            except Exception:
                time.sleep(1)
        # NOTE: the title uses the same selector as the chapter list below;
        # point it at the element that holds the book title on your site.
        title = soup.find(name='div', attrs={'class': 'booktext'}).text
        indexDiv = soup.find(name='div', attrs={'class': 'booktext'})
        indexUl = [ul for ul in indexDiv.find_all('ul') if ul][1:]
        for ul in indexUl:
            indexList = [li.a for li in ul.find_all('li') if li]
            links = [(urljoin(url, a.get('href')), a.text) for a in indexList if a]
            indexs += links
        return title, indexs


    def novel(url):
        tmpFiles = []
        tasks = []
        try:
            title, indexs = index(url)
            tmpDir = os.path.join(os.getcwd(), 'tmp')
            if not os.path.exists(tmpDir):  # temporary directory for chapter files
                os.mkdir(tmpDir)
            for i, info in enumerate(indexs):
                tmpFile = os.path.join(tmpDir, str(i))
                tmpFiles.append(tmpFile)
                task = Download(tmpFile, info)  # download the chapter in a new thread
                task.daemon = True
                task.start()
                tasks.append(task)
                if len(tasks) >= 20:
                    # Keep the thread count at 20 or fewer; too many threads
                    # crash the program
                    while any(t.is_alive() for t in tasks):
                        running = sum(t.is_alive() for t in tasks)
                        print('Progress: {} / {}'.format(i + 1 - running, len(indexs)))
                        time.sleep(2)
                    tasks = []
                if i == len(indexs) - 1:
                    while any(t.is_alive() for t in tasks):
                        running = sum(t.is_alive() for t in tasks)
                        print('Progress: {} / {}'.format(len(indexs) - running, len(indexs)))
                        time.sleep(2)
                    print('Progress: {} / {}'.format(len(indexs), len(indexs)))
            print('Merging...')
            merge(tmpFiles, os.path.join(os.getcwd(), title + '.txt'))
            print('Download succeeded!')
        except Exception as ex:
            print(ex)
            print('Download failed!')
            sys.exit()


    def main(argv):
        try:
            novel(argv[0])
        except KeyboardInterrupt:
            # After interrupting with <C-c>, the chapters downloaded so far
            # can still be merged
            tmpDir = os.path.join(os.getcwd(), 'tmp')
            if os.path.exists(tmpDir):
                # Temporary files are named 0, 1, 2, ...; merge in chapter order
                names = sorted((n for n in os.listdir(tmpDir) if n.isdigit()), key=int)
                tmpFiles = [os.path.join(tmpDir, n) for n in names]
                print('Merging the incomplete download...')
                target = os.path.join(os.getcwd(), 'incomplete.txt')
                try:
                    merge(tmpFiles, target)
                    if os.path.exists(target):
                        print('Some chapters downloaded successfully!')
                    else:
                        print('Download failed!')
                except Exception:
                    print('Download failed!')
                    sys.exit()
                os.rmdir(tmpDir)
            else:
                print('Download failed!')
                sys.exit()
        if os.path.exists(os.path.join(os.getcwd(), 'tmp')):
            os.rmdir(os.path.join(os.getcwd(), 'tmp'))


    if __name__ == "__main__":
        if len(sys.argv) > 1:
            main(sys.argv[1:])
    # http://www.lueqiu.com/
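
The script caps concurrency by starting threads in batches of 20 and waiting for each batch to finish. The standard library's `concurrent.futures.ThreadPoolExecutor` offers another way to bound the number of worker threads. The following is only a sketch of that alternative, not the author's method: `fetch_chapter` is a hypothetical stand-in that fakes a download so the example runs offline, and `download_all` is likewise an illustrative name.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_chapter(job):
    """Hypothetical stand-in for a real chapter download."""
    number, title = job
    time.sleep(0.01)  # simulate network latency
    return number, title + "\n(chapter text)\n"


def download_all(jobs, max_workers=20):
    """Run every job on at most max_workers threads; return texts in chapter order."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fetch_chapter, job) for job in jobs]
        # as_completed yields each future as soon as it finishes,
        # which makes it easy to report progress.
        for done, future in enumerate(as_completed(futures), start=1):
            number, text = future.result()
            results[number] = text
            print("Progress: {} / {}".format(done, len(jobs)))
    # Chapters may finish out of order, so sort by chapter number before merging.
    return [results[n] for n in sorted(results)]


if __name__ == "__main__":
    jobs = [(i, "Chapter {}".format(i)) for i in range(5)]
    print("".join(download_all(jobs, max_workers=3)))
```

With a pool, a slow chapter no longer holds up a whole batch: a new download starts as soon as any worker becomes free. Collecting results in a dictionary keyed by chapter number keeps the merged text in order without temporary files, at the cost of holding all chapters in memory.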

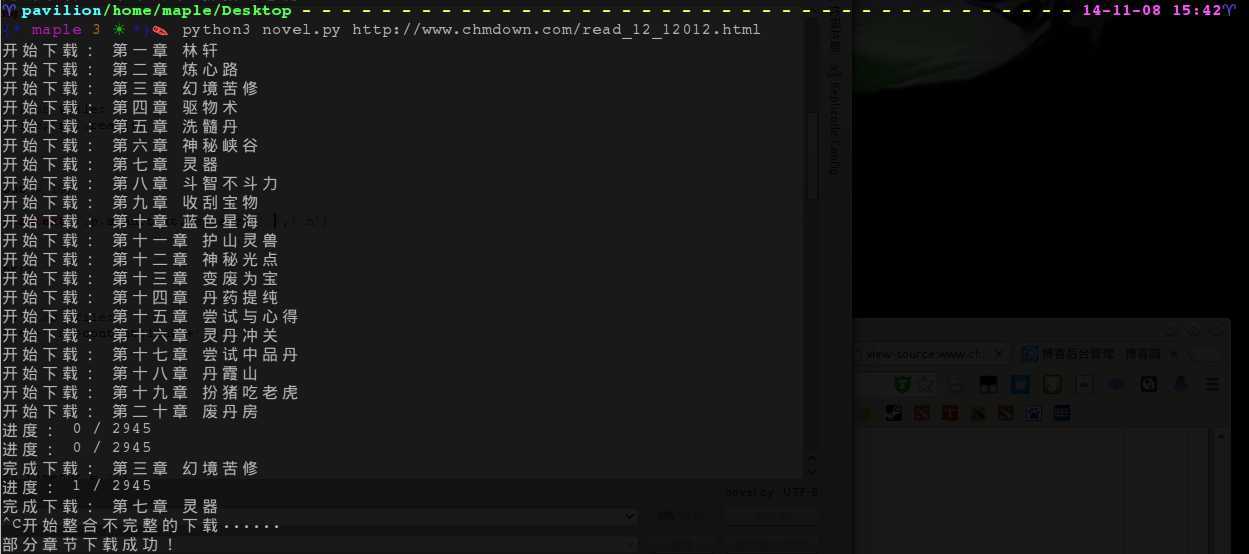

截图:

Code for scraping a site's full novel text with Python 3 and BeautifulSoup4


Original article: http://www.cnblogs.com/itmaple/p/4083508.html