Tags: add uber encoding startup space system tee tde account

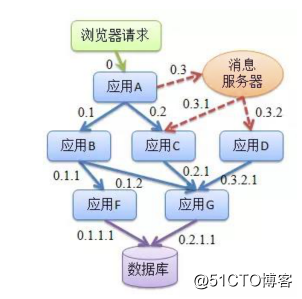

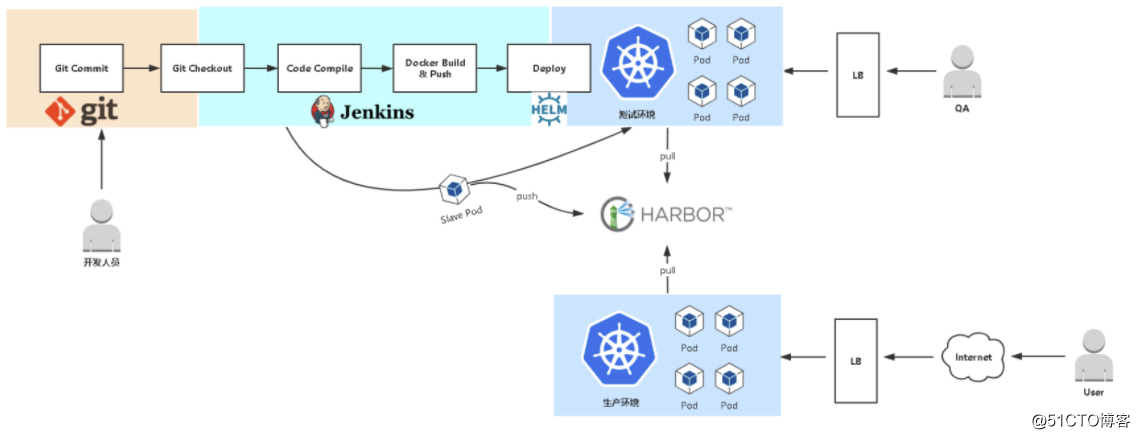

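Microservice Tracing & Automated Releases

As microservice architectures have become popular, services are split along different dimensions, and a single request often involves several services. These services may be written in different programming languages, developed by different teams, and deployed as many replicas. Tools are therefore needed that help explain system behavior and analyze performance problems, so that a failure can be located and resolved quickly when it occurs. Full-link monitoring components emerged against this background.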

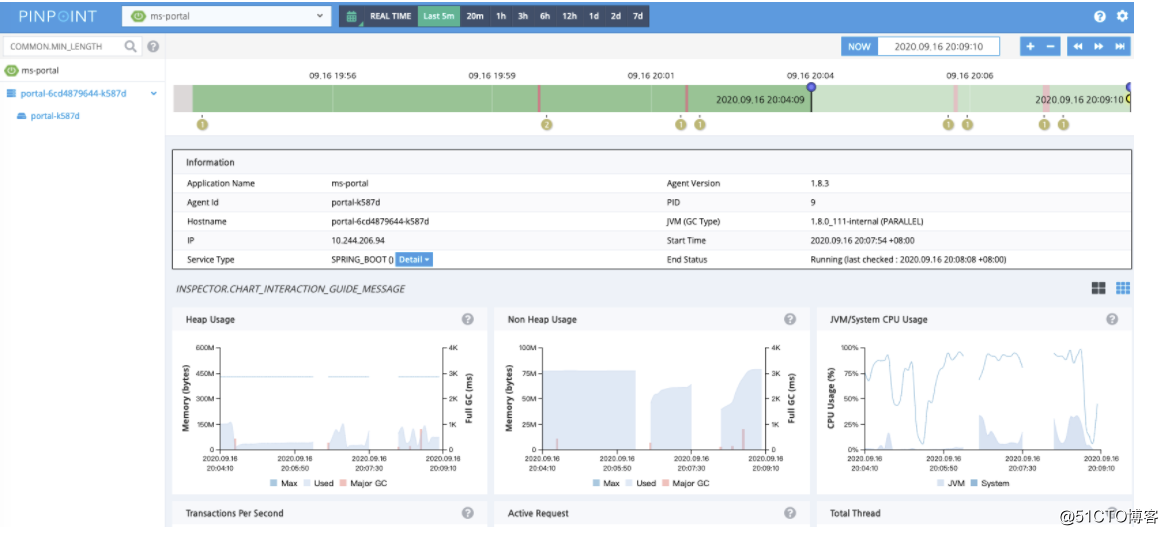

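Full-link performance monitoring presents metrics from the overall view down to the local view. It gathers the performance data of every call chain across applications in one place, which makes it easy to measure overall and local performance and to find the source of a failure. In production this can greatly shorten troubleshooting time.

There are many full-link monitoring systems; choose among them based on the following aspects: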

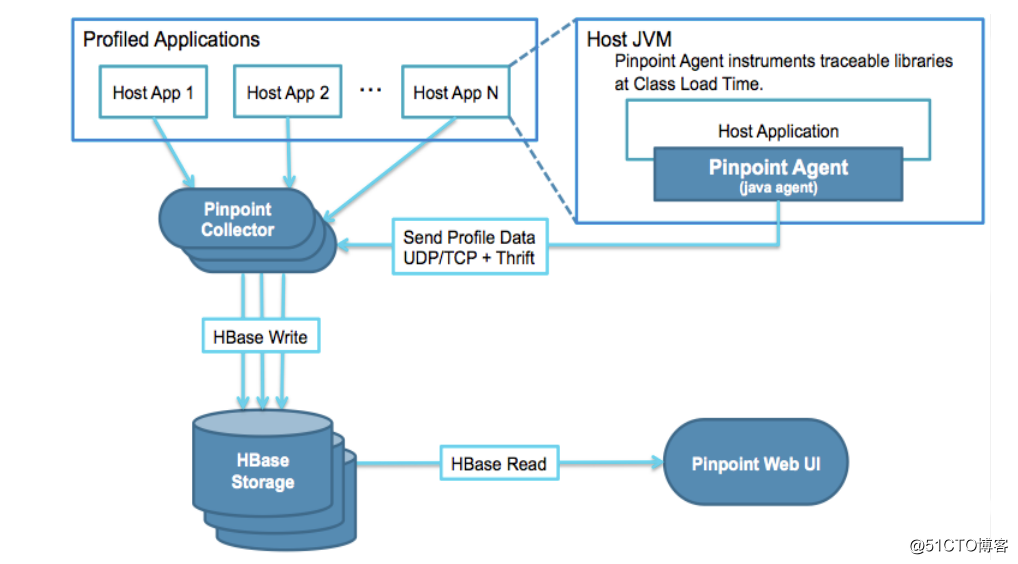

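Pinpoint is an APM (Application Performance Management) tool for large distributed systems written in Java/PHP.

Features:

Docker deployment:

First download the Pinpoint images, then import them with docker load.

Link: https://pan.baidu.com/s/1-h8g7dxB9v6YiXMYVNv36Q Password: u6qb

If downloading from GitHub is slow, you can clone the open-source repository into your own Gitee account and download it from there, which is faster.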

$ tar xf pinpoint-image.tar.gz && cd pinpoint-image

$ for i in ./*; do docker load < "$i"; done # import the images

$ git clone https://github.com/naver/pinpoint-docker.git

$ cd pinpoint-docker && git checkout 1.8.5 # switch to version 1.8.5 before continuing

$ docker images

pinpointdocker/pinpoint-quickstart latest 09fd7f38d8e4 8 months ago 480MB

pinpointdocker/pinpoint-agent 1.8.5 ac7366387f2c 8 months ago 27.3MB

pinpointdocker/pinpoint-collector 1.8.5 034a20159cd7 8 months ago 534MB

pinpointdocker/pinpoint-web 1.8.5 3c58ee67076f 8 months ago 598MB

zookeeper 3.4 a27dff262890 8 months ago 256MB

pinpointdocker/pinpoint-mysql 1.8.5 99053614856e 8 months ago 455MB

pinpointdocker/pinpoint-hbase 1.8.5 1d3499afa5e9 8 months ago 992MB

flink 1.3.1 c08ccd5bb7a6 3 years ago 480MB

$ docker-compose pull && docker-compose up -d

After about 10 minutes the services become reachable.

eureka-service/pinpoint/pinpoint.config

gateway-service/pinpoint/pinpoint.config

order-service/order-service-biz/pinpoint/pinpoint.config

portal-service/pinpoint/pinpoint.config

product-service/product-service-biz/pinpoint/pinpoint.config

stock-service/stock-service-biz/pinpoint/pinpoint.config

# Edit each of the configuration files above as follows (my Pinpoint is deployed on 192.168.56.14):

profiler.collector.ip=192.168.56.14

# eureka-service

$ vim eureka-service/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/eureka-service.jar ./

COPY pinpoint /pinpoint

EXPOSE 8888

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=${HOSTNAME} -Dpinpoint.applicationName=ms-eureka -Deureka.instance.hostname=${MY_POD_NAME}.eureka.ms /eureka-service.jar

# gateway-service

$ vim gateway-service/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/gateway-service.jar ./

COPY pinpoint /pinpoint

EXPOSE 9999

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=$(echo $HOSTNAME | awk -F- '{print "gateway-"$NF}') -Dpinpoint.applicationName=ms-gateway /gateway-service.jar
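The agentId expression in the CMD line above keeps only the last hyphen-separated part of the pod hostname and puts a short service prefix in front of it. As far as I know, Pinpoint 1.x limits agent IDs to 24 characters, which would explain why the full pod name is not used. The sketch below reproduces the same derivation with plain shell parameter expansion; `pinpoint_agent_id` is a hypothetical helper name used only for illustration, and the hostname is taken from the pod listing later in this post.

```shell
#!/bin/sh
# pinpoint_agent_id is a hypothetical helper, not part of Pinpoint.
# It builds "<prefix>-<last hostname segment>", like the awk call in the Dockerfile.
pinpoint_agent_id() {
    prefix=$1
    hostname=$2
    # Drop everything up to and including the last hyphen,
    # which is what awk -F- '{print $NF}' selects.
    suffix=${hostname##*-}
    printf '%s-%s\n' "$prefix" "$suffix"
}

pinpoint_agent_id gateway ms-gateway-service-659c8596b6-t4hk7
```

For the hostname above this prints `gateway-t4hk7`, which stays well under the length limit no matter how long the Deployment name is.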

# order-service-biz/Dockerfile

$ vim order-service/order-service-biz/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/order-service-biz.jar ./

COPY pinpoint /pinpoint

EXPOSE 8020

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=$(echo $HOSTNAME | awk -F- '{print "order-"$NF}') -Dpinpoint.applicationName=ms-order /order-service-biz.jar

# portal-service/Dockerfile

$ vim portal-service/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/portal-service.jar ./

COPY pinpoint /pinpoint

EXPOSE 8080

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=$(echo $HOSTNAME | awk -F- '{print "portal-"$NF}') -Dpinpoint.applicationName=ms-portal /portal-service.jar

# product-service/product-service-biz/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/product-service-biz.jar ./

COPY pinpoint /pinpoint

EXPOSE 8010

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=$(echo $HOSTNAME | awk -F- '{print "product-"$NF}') -Dpinpoint.applicationName=ms-product /product-service-biz.jar

# stock-service/stock-service-biz/Dockerfile

FROM java:8-jdk-alpine

LABEL maintainer 122725501@qq.com

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && apk add -U tzdata && rm -rf /var/cache/apk/* && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY ./target/stock-service-biz.jar ./

COPY pinpoint /pinpoint

EXPOSE 8030

CMD java -jar -javaagent:/pinpoint/pinpoint-bootstrap-1.8.3.jar -Dpinpoint.agentId=$(echo $HOSTNAME | awk -F- '{print "stock-"$NF}') -Dpinpoint.applicationName=ms-stock /stock-service-biz.jar

The main changes are in the src/main/resources/application-fat.yml configuration file:

1. mysql: order-service-biz, product-service-biz, stock-service-biz (**be sure to point them at the mysql svc in the default namespace**)

url: jdbc:mysql://java-demo-db-mysql.default:3306/tb_order?characterEncoding=utf-8

username: root

password: RRGynGS53N

2. eureka

defaultZone: http://eureka-0.eureka.ms:8888/eureka,http://eureka-1.eureka.ms:8888/eureka,http://eureka-2.eureka.ms:8888/eureka

$ cd microservic-code/simple-microservice-dev4/k8s

$ ls

docker_build.sh eureka.yaml gateway.yaml order.yaml portal.yaml product.yaml stock.yaml

$ vim docker_build.sh # automated build script

#!/bin/bash
docker_registry=hub.cropy.cn
# Create the image pull secret in the ms namespace (kubectl reports an error if it already exists)
kubectl create secret docker-registry registry-pull-secret --docker-server=$docker_registry --docker-username=admin --docker-password=Harbor12345 --docker-email=admin@122725501.com -n ms
service_list="eureka-service gateway-service order-service product-service stock-service portal-service"
# Build only the services passed as the first argument, or all of them by default
service_list=${1:-${service_list}}
work_dir=$(dirname $PWD)
current_dir=$PWD
cd $work_dir
mvn clean package -Dmaven.test.skip=true
for service in $service_list; do
    cd $work_dir/$service
    # Services with a -biz submodule are built from that subdirectory
    if ls |grep biz &>/dev/null; then
        cd ${service}-biz
    fi
    # order-service -> order, which matches the manifest name order.yaml
    service=${service%-*}
    image_name=$docker_registry/microservice/${service}:$(date +%F-%H-%M-%S)
    docker build -t ${image_name} .
    docker push ${image_name}
    # Point the manifest at the new image, then apply it
    sed -i -r "s#(image: )(.*)#\1$image_name#" ${current_dir}/${service}.yaml
    kubectl apply -f ${current_dir}/${service}.yaml
done
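Two steps in docker_build.sh are easy to misread: the `${service%-*}` expansion that shortens the service name, and the sed call that rewrites the `image:` line of the manifest. The sketch below runs both against a throwaway file so they can be tried without a registry or a cluster. `short_name`, `set_image`, the sample manifest content and the `demo-tag` tag are assumptions made for this illustration; `sed -i -r` assumes GNU sed, as in the script.

```shell
#!/bin/sh
# Hypothetical helpers that mirror two steps of docker_build.sh.

# "order-service" -> "order": remove the shortest suffix matching "-*".
short_name() {
    printf '%s\n' "${1%-*}"
}

# Replace the value of every "image:" line in a manifest.
# "#" is the sed delimiter because image names contain "/".
set_image() {
    new_image=$1
    manifest_file=$2
    sed -i -r "s#(image: )(.*)#\1${new_image}#" "$manifest_file"
}

# Try it on a temporary manifest fragment (sample content, not a real manifest).
manifest=$(mktemp)
printf '      containers:\n      - name: order\n        image: old/image:1\n' > "$manifest"
set_image "hub.cropy.cn/microservice/$(short_name order-service):demo-tag" "$manifest"
cat "$manifest"
rm -f "$manifest"
```

Only the text after `image: ` changes, so the indentation of the manifest is preserved.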

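$ rm -fr *.yaml # delete the old YAML files

$ cp ../../simple-microservice-dev3/k8s/*.yaml ./ # copy in the YAML files modified earlier

$ ./docker_build.sh # build and push the images automatically, then start the services

$ kubectl get pod -n ms # check that the pods are healthy after the build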

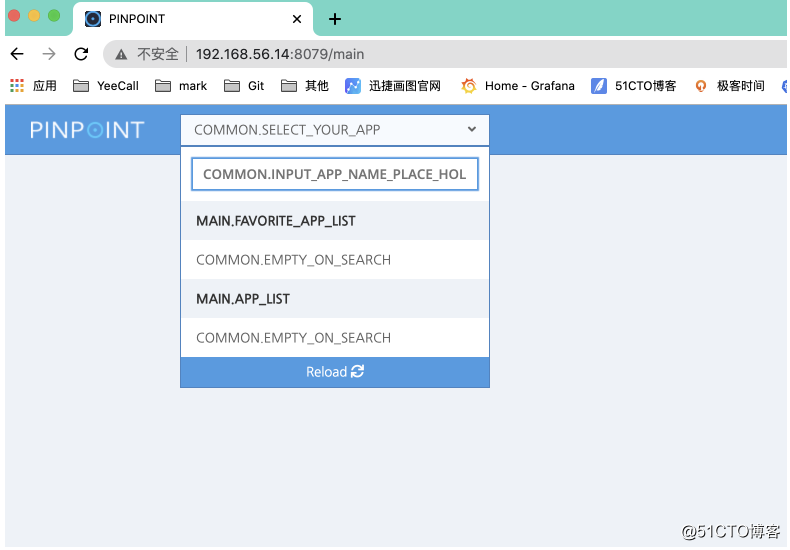

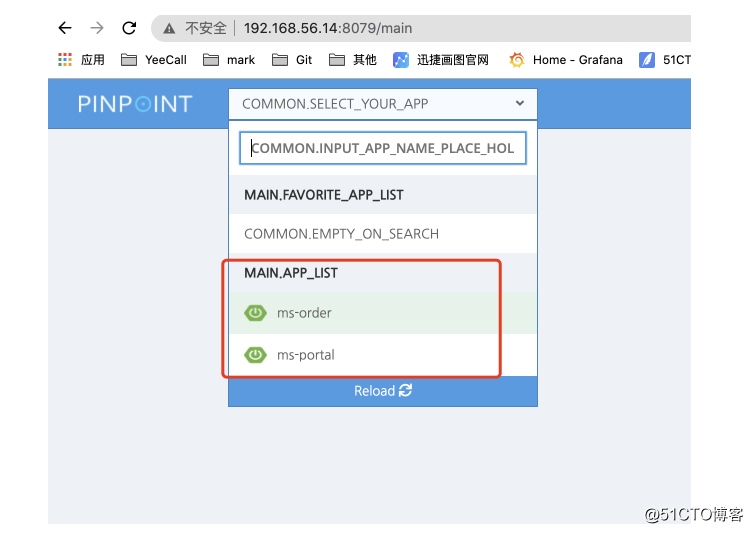

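View the status in the browser.

Resource consumption is fairly high, so only these services are shown.

For details, see:

1. Install the push plugin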

https://github.com/chartmuseum/helm-push

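helm plugin install https://github.com/chartmuseum/helm-push

If the download fails because of network problems, you can also unpack the archive from the course materials directly:

# tar zxvf helm-push_0.7.1_linux_amd64.tar.gz

# mkdir -p /root/.local/share/helm/plugins/helm-push

# mv bin plugin.yaml /root/.local/share/helm/plugins/helm-push

# chmod +x /root/.local/share/helm/plugins/helm-push/bin/*

2. Configure Docker on the Jenkins host to trust the registry; if HTTPS is used, the certificate must be copied over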

All nodes in the k8s cluster need this configuration.

$ cat /etc/docker/daemon.json
{
  "graph": "/data/docker",
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],
  "insecure-registries": ["hub.cropy.cn"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}

$ ls /etc/docker/certs.d/hub.cropy.cn/hub.pem # this is the Harbor certificate, placed under the Docker directory

3. Add the repo

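$ helm repo add --username admin --password Harbor12345 myrepo https://hub.cropy.cn/chartrepo/library --ca-file /etc/docker/certs.d/hub.cropy.cn/hub.pem # the self-signed certificate must be specified or an error is reported; for k8s continuous integration it is better to disable HTTPS on Harbor, otherwise the jenkins-slave deployment runs into x509 certificate errors

$ helm repo add azure http://mirror.azure.cn/kubernetes/charts

4. Push and install a chart (example)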

$ helm pull azure/mysql --untar

$ helm package mysql

Successfully packaged chart and saved it to: /root/mysql-1.6.6.tgz

$ helm push mysql-1.6.6.tgz --username=admin --password=Harbor12345 https://hub.cropy.cn/chartrepo/library --ca-file /etc/docker/certs.d/hub.cropy.cn/hub.pem # the self-signed certificate must be specified, otherwise an error is reported

Deploy on the node 192.168.56.17; Docker must already be installed.

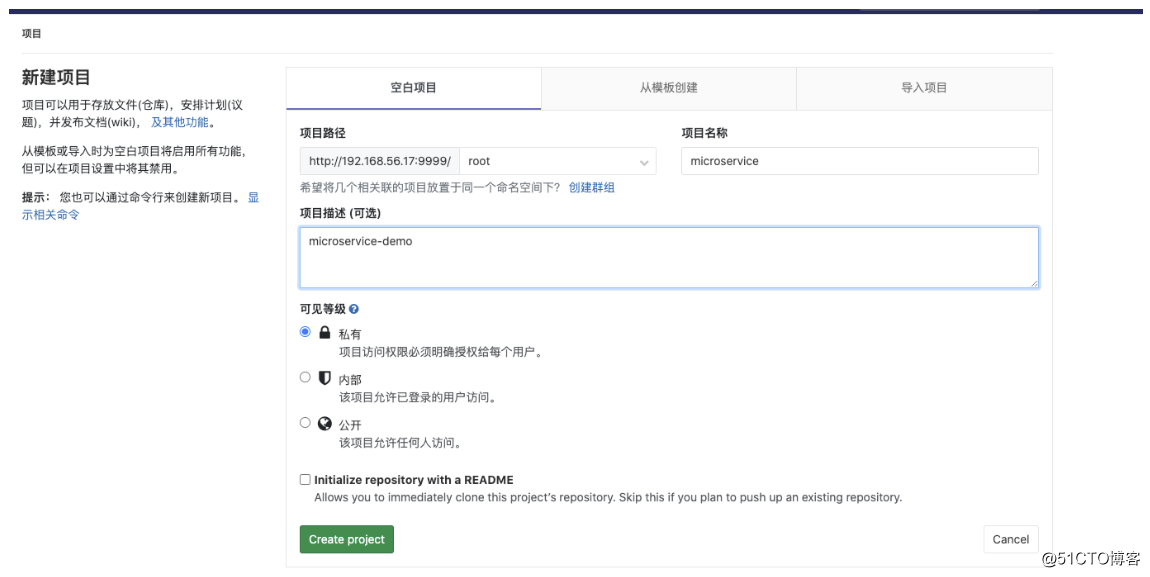

mkdir gitlab

cd gitlab

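docker run -d --name gitlab -p 8443:443 -p 9999:80 -p 9998:22 -v $PWD/config:/etc/gitlab -v $PWD/logs:/var/log/gitlab -v $PWD/data:/var/opt/gitlab -v /etc/localtime:/etc/localtime --restart=always lizhenliang/gitlab-ce-zh:latest

Access URL: http://IP:9999

On first access you are asked to set the administrator password. Then log in: the default administrator user name is root and the password is the one you just set.

Use the code from the dev3 branch of the previous lesson (already modified).

# At the time, this code was stored on the k8s master node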

git config --global user.name "root"

git config --global user.email "root@example.com"

cd microservic-code/simple-microservice-dev3

find ./ -name target | xargs rm -fr # delete the previous build output

git init

git remote add origin http://192.168.56.17:9999/root/microservice.git

git add .

git commit -m 'all'

git push origin master

Disabling HTTPS is recommended; otherwise the self-signed certificate causes problems when Helm pulls charts.

$ tar zxvf harbor-offline-installer-v2.0.0.tgz

$ cd harbor

$ cp harbor.yml.tmpl harbor.yml

$ vi harbor.yml # if you need a certificate, generate one yourself with cfssl or openssl
hostname: hub.cropy.cn
http:
  port: 80
#https:
#  port: 443
#  certificate: /etc/harbor/ssl/hub.pem
#  private_key: /etc/harbor/ssl/hub-key.pem
harbor_admin_password: Harbor12345

$ ./prepare

$ ./install.sh --with-chartmuseum

$ docker-compose ps

Create the database as follows; the corresponding databases also need to be imported.

$ helm install java-demo-db --set persistence.storageClass="managed-nfs-storage" azure/mysql

$ kubectl get secret --namespace default java-demo-db-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo

RRGynGS53N

mysql -h java-demo-db-mysql -pRRGynGS53N # how to connect

$ cd microservic-code/simple-microservice-dev3/k8s

$ kubectl apply -f eureka.yaml

$ kubectl get pod -n ms # check that eureka has been created

$ unzip jenkins.zip && cd jenkins && ls ./ # unpack the archive

deployment.yml ingress.yml rbac.yml service-account.yml service.yml

$ kubectl apply -f .

$ kubectl get pod

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

java-demo-db-mysql ClusterIP 10.0.0.142 <none> 3306/TCP 6d20h

jenkins NodePort 10.0.0.146 <none> 80:30006/TCP,50000:32669/TCP 16m

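kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 27d

Open http://192.168.56.11:30006/ to start the configuration.

Password and plugin source update (important)

$ cd /ifs/kubernetes/default-jenkins-home-pvc-fdc745cc-6fa9-4940-ae6d-82be4242d2c5/

$ cat secrets/initialAdminPassword # this is the initial password; enter it on the login page at http://192.168.56.11:30006

edf37aff6dbc46728a3aa37a7f3d3a5a

# Plugins are downloaded from overseas servers by default, which is slow; switching to a domestic mirror is recommended: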

$ cd /ifs/kubernetes/default-jenkins-home-pvc-fdc745cc-6fa9-4940-ae6d-82be4242d2c5/updates/

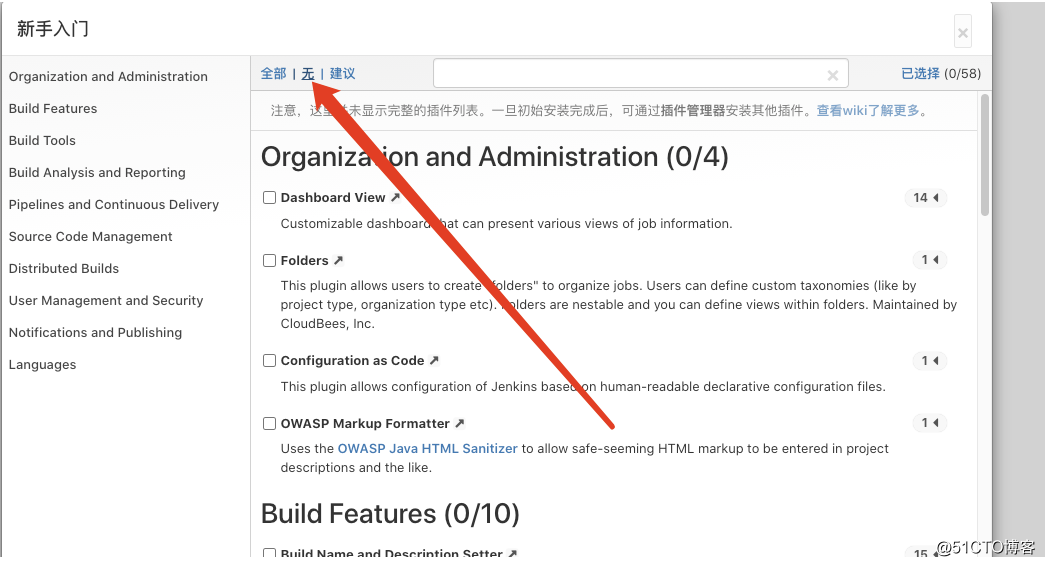

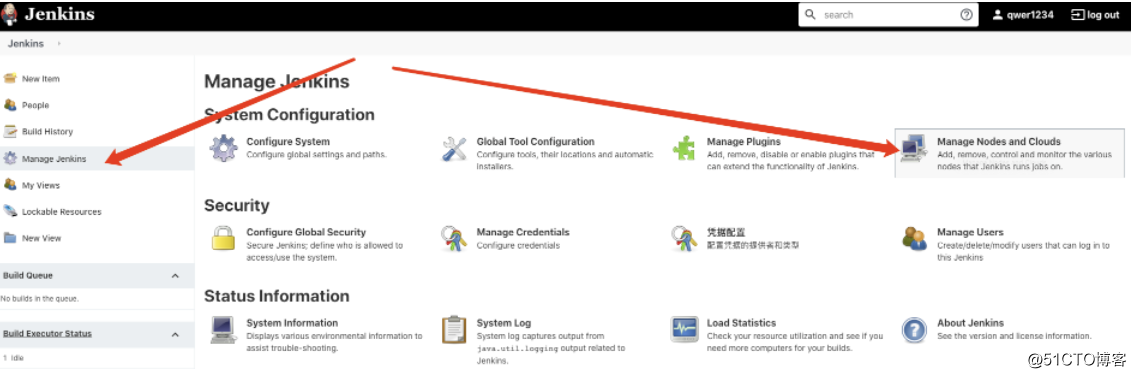

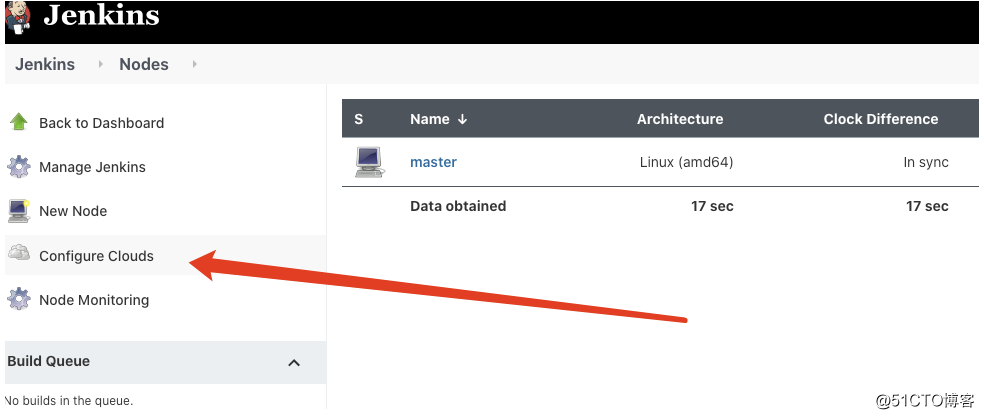

sed -i 's/http:\/\/updates.jenkins-ci.org\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json

$ kubectl delete pod jenkins-754b6fb4b9-dxssj # the pod is recreated and picks up the change

Manage Jenkins -> System Configuration -> Manage Plugins -> search for Git Parameter/Git/Pipeline/kubernetes/Config File Provider one by one, select each and click install.

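- Git Parameter: parameterized builds based on Git

- Extended Choice Parameter: multi-select checkboxes for parameterized builds

- Git: pulls the code

- Pipeline: pipelines

- kubernetes: connects to Kubernetes to create slave agents dynamically

- Config File Provider: stores the kubeconfig file that kubectl uses to connect to the k8s cluster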
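The two sed substitutions applied to default.json earlier are hard to read because every slash in the URLs is escaped. A minimal sketch of the same rewrite with `#` as the delimiter is shown below, run against a throwaway sample file instead of the real update-center data. `use_mirror` is a hypothetical wrapper, and the sample JSON keys and plugin path are invented for the demonstration; only the URL prefixes come from this post.

```shell
#!/bin/sh
# use_mirror is a hypothetical wrapper around the two substitutions.
use_mirror() {
    target_file=$1
    # "#" as delimiter avoids escaping every "/" in the URLs.
    sed -i \
        -e 's#http://updates.jenkins-ci.org/download#https://mirrors.tuna.tsinghua.edu.cn/jenkins#g' \
        -e 's#http://www.google.com#https://www.baidu.com#g' \
        "$target_file"
}

# Sample content made up for this example; the real default.json is much larger.
sample=$(mktemp)
echo '{"check":"http://www.google.com/","url":"http://updates.jenkins-ci.org/download/plugins/example/1.0/example.hpi"}' > "$sample"
use_mirror "$sample"
cat "$sample"
rm -f "$sample"
```

Trying the substitution on a copy first makes it easy to confirm that the download URLs changed before touching the file inside the Jenkins home directory.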

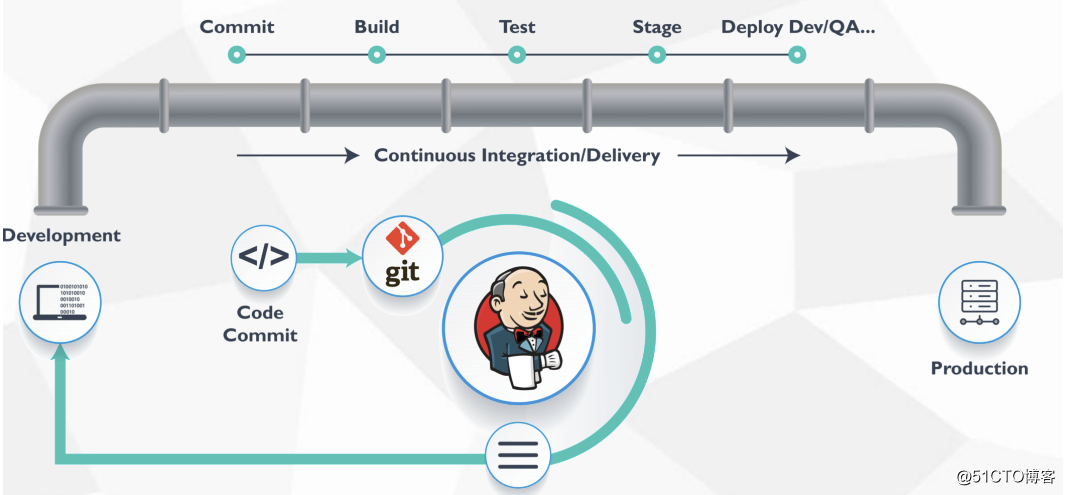

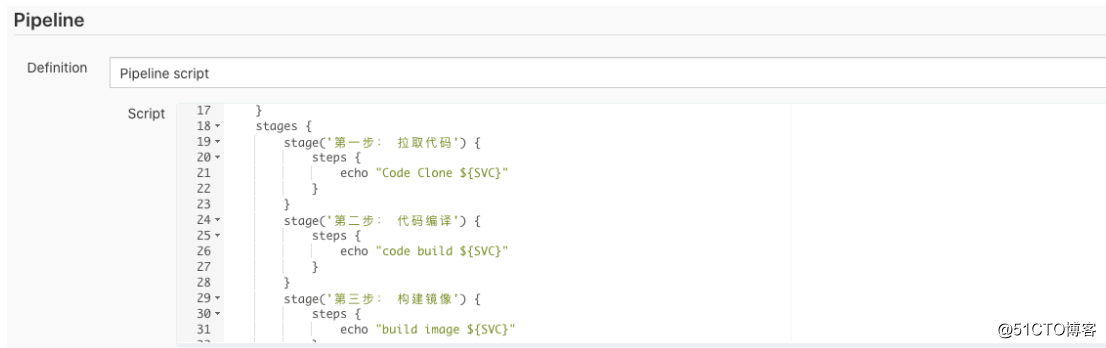

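Jenkins Pipeline is a suite of plugins that supports implementing and integrating continuous delivery pipelines in Jenkins.

A pipeline models delivery pipelines, from simple to complex, through a dedicated syntax.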

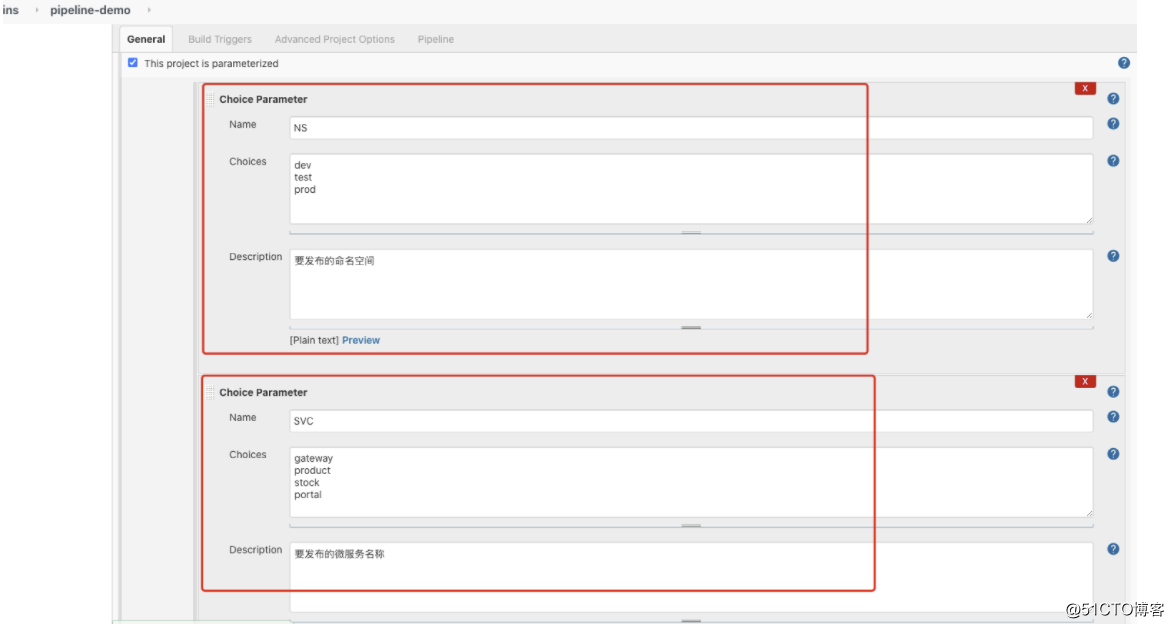

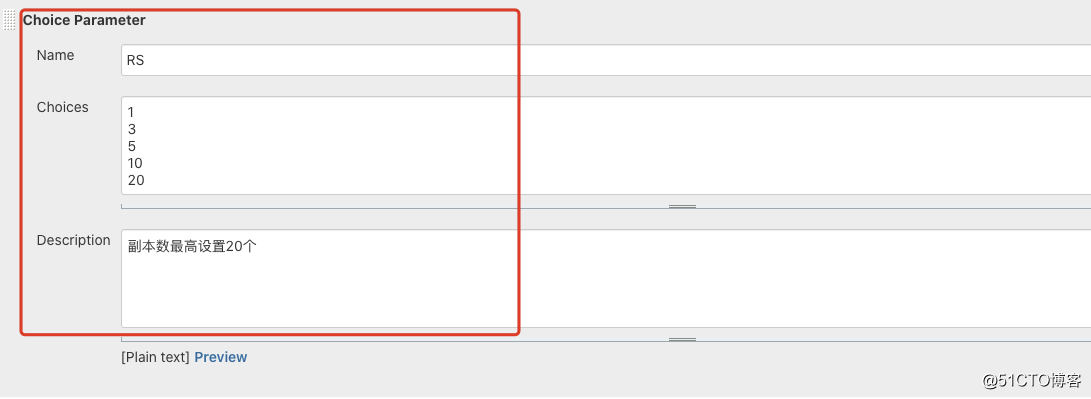

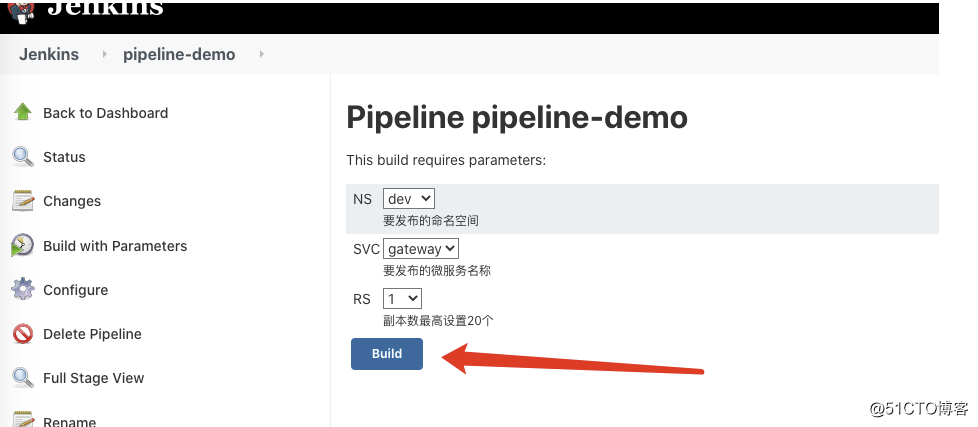

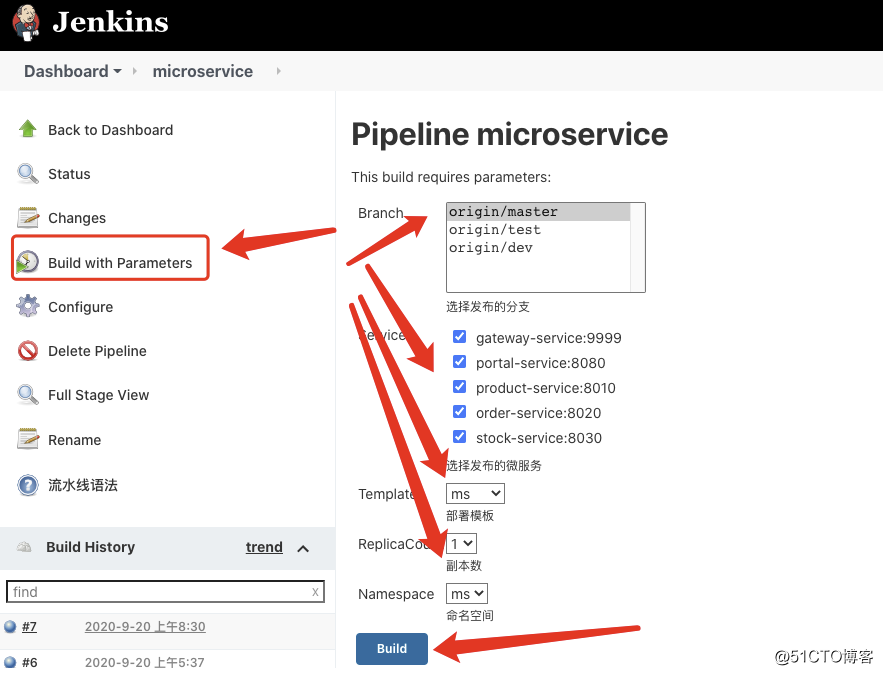

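Real environments usually have many projects, especially with a microservice architecture. Creating one item per service would add a great deal of operations work, so Jenkins parameterized builds can be used instead: the environment configuration, the desired state and so on are confirmed interactively at release time.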

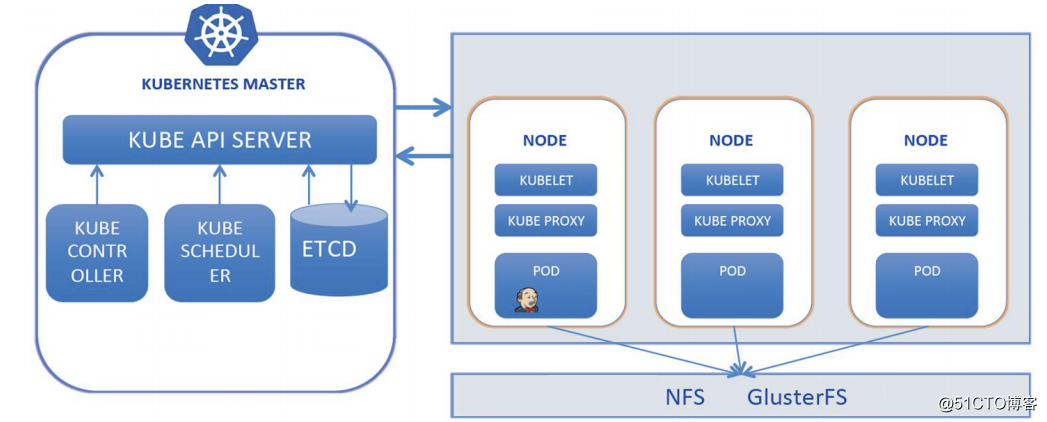

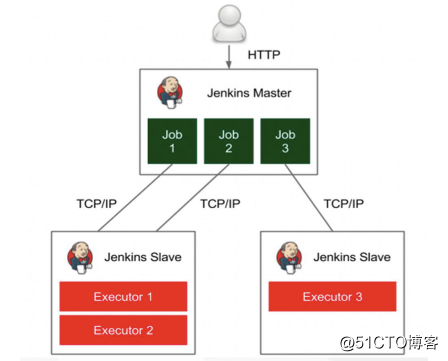

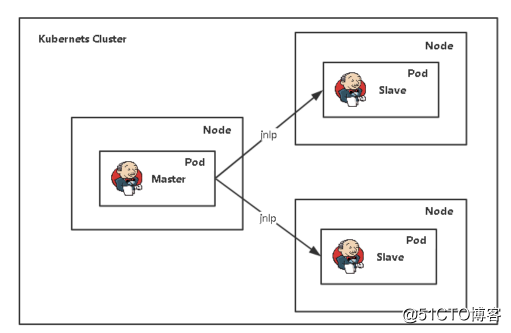

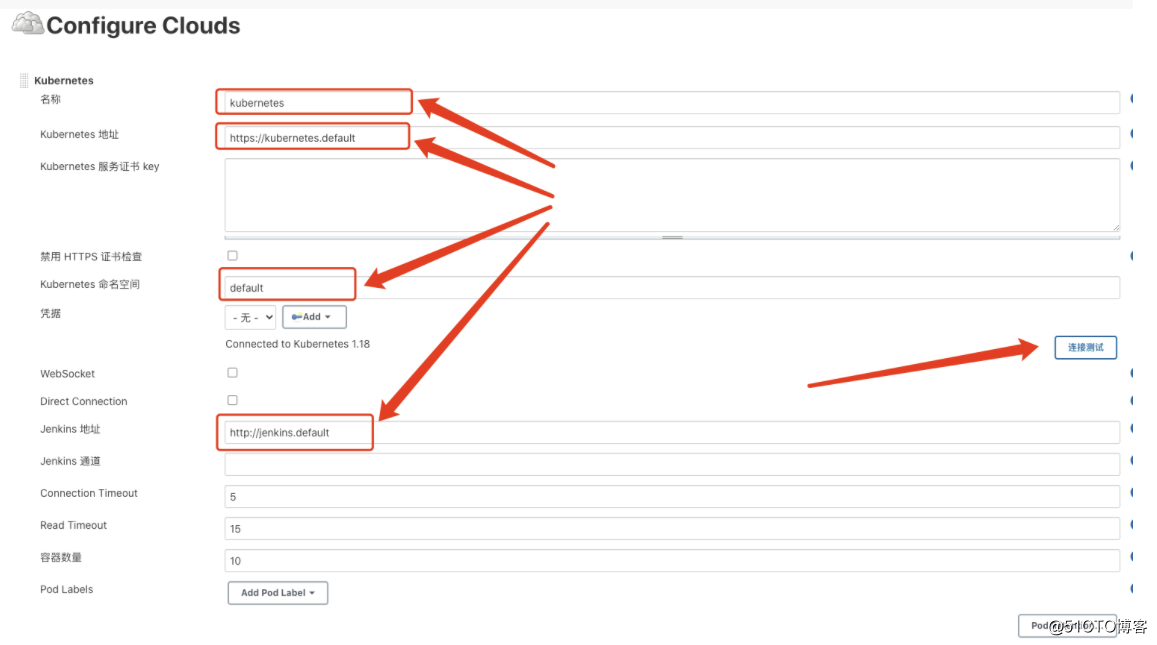

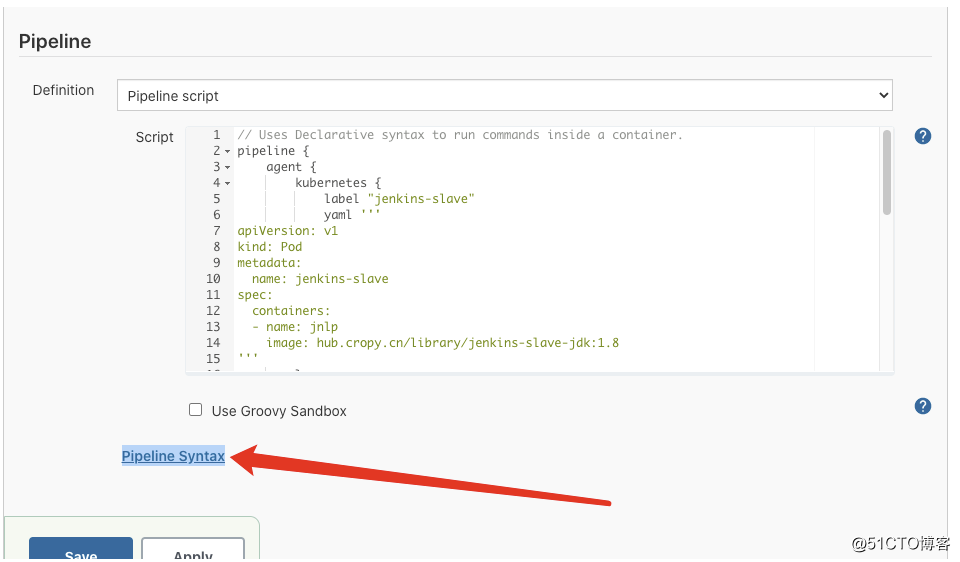

Kubernetes plugin: runs dynamic Jenkins agents in a Kubernetes cluster

Plugin introduction: https://github.com/jenkinsci/kubernetes-plugin

See jenkins.zip for the materials.

$ unzip jenkins-slave.zip && cd jenkins-slave && ls ./

Dockerfile helm jenkins-slave kubectl settings.xml slave.jar

$ vim Dockerfile

FROM centos:7

LABEL maintainer 122725501@qq.com

RUN yum install -y java-1.8.0-openjdk maven curl git libtool-ltdl-devel && yum clean all && rm -rf /var/cache/yum/* && mkdir -p /usr/share/jenkins

COPY slave.jar /usr/share/jenkins/slave.jar

COPY jenkins-slave /usr/bin/jenkins-slave

COPY settings.xml /etc/maven/settings.xml

RUN chmod +x /usr/bin/jenkins-slave

COPY helm kubectl /usr/bin/

ENTRYPOINT ["jenkins-slave"]

$ docker build -t hub.cropy.cn/library/jenkins-slave-jdk:1.8 .

$ docker push hub.cropy.cn/library/jenkins-slave-jdk:1.8

// Uses Declarative syntax to run commands inside a container.
pipeline {
  agent {
    kubernetes {
      label "jenkins-slave"
      yaml '''
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: jnlp
    image: hub.cropy.cn/library/jenkins-slave-jdk:1.8
'''
    }
  }
  stages {
    stage('Step 1: Clone code') {
      steps {
        echo "Code Clone ${SVC}"
      }
    }
    stage('Step 2: Compile code') {
      steps {
        echo "code build ${SVC}"
      }
    }
    stage('Step 3: Build image') {
      steps {
        echo "build image ${SVC}"
      }
    }
    stage('Step 4: Deploy to the k8s platform') {
      steps {
        echo "deploy ${NS},replica: ${RS}"
      }
    }
  }
}

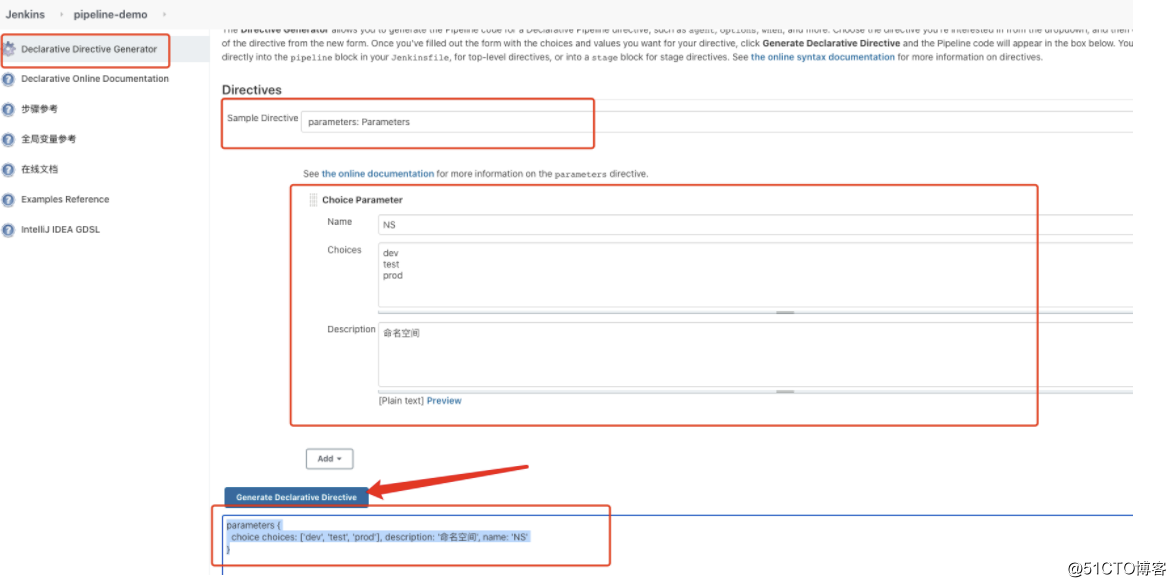

The Groovy syntax is generated automatically from the options you select.

# 1. For a k8s cluster deployed with ansible, the original ansible-install-k8s directory is on the master; the CA files need to be copied from it
$ mkdir ~/kubeconfig_file && cd ~/kubeconfig_file
$ vim admin-csr.json
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
$ cp ~/ansible-install-k8s/ssl/k8s/ca* ./
$ cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

# 2. 创建kubeconfig文件

# 设置集群参数

kubectl config set-cluster kubernetes --server=https://192.168.56.11:6443 --certificate-authority=ca.pem --embed-certs=true --kubeconfig=config

# 设置上下文参数

kubectl config set-context default --cluster=kubernetes --user=cluster-admin --kubeconfig=config

# 设置默认上下文

kubectl config use-context default --kubeconfig=config

# 设置客户端认证参数

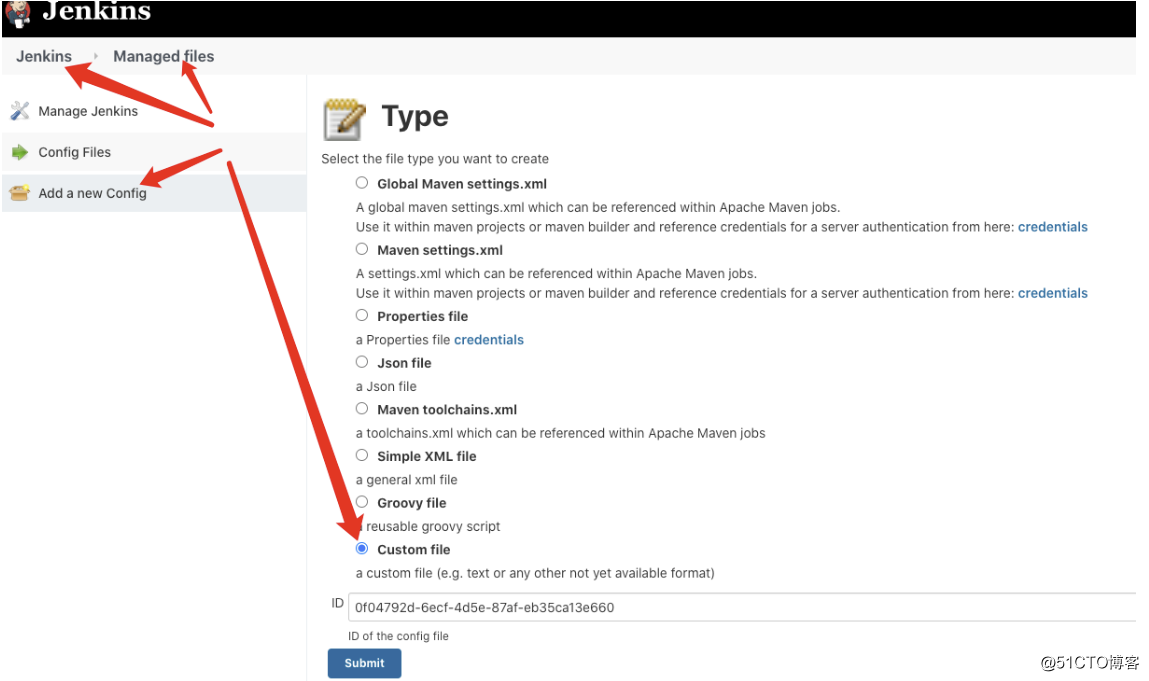

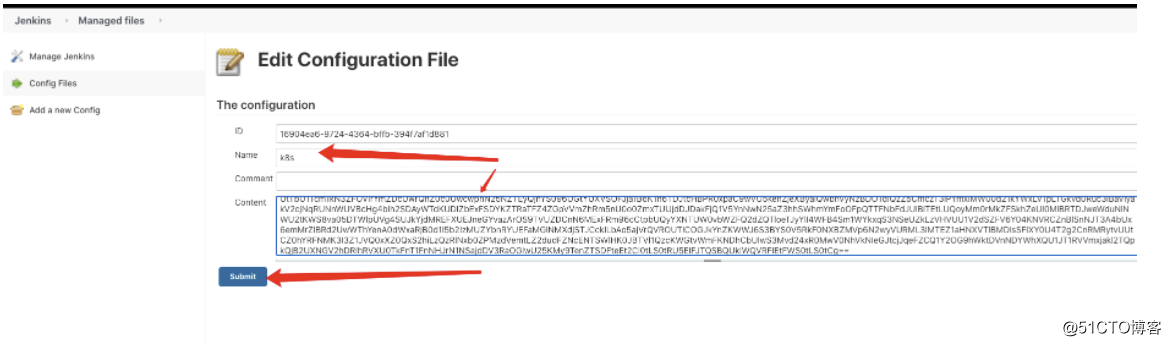

kubectl config set-credentials cluster-admin --certificate-authority=ca.pem --embed-certs=true --client-key=admin-key.pem --client-certificate=admin.pem --kubeconfig=config创建自定义配置

将k8s的config配置拷入配置内容

#!/usr/bin/env groovy

// 所需插件: Git Parameter/Git/Pipeline/Config File Provider/kubernetes/Extended Choice Parameter

// 公共

def registry = "hub.cropy.cn"

// 项目

def project = "microservice"

def git_url = "http://192.168.56.17:9999/root/microservice.git"

def gateway_domain_name = "gateway.ctnrs.com"

def portal_domain_name = "portal.ctnrs.com"

// 认证

def image_pull_secret = "registry-pull-secret"

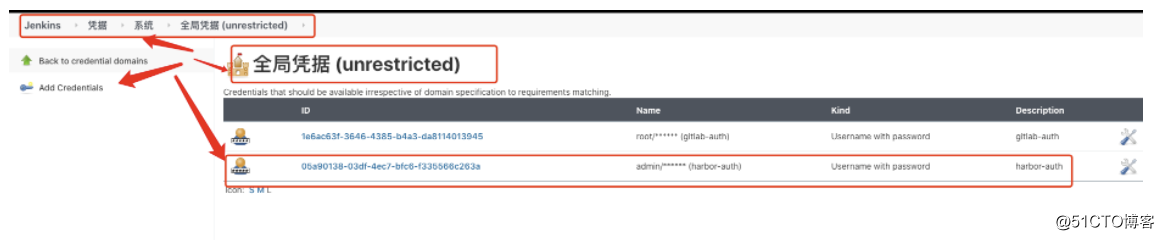

def harbor_registry_auth = "05a90138-03df-4ec7-bfc6-f335566c263a"

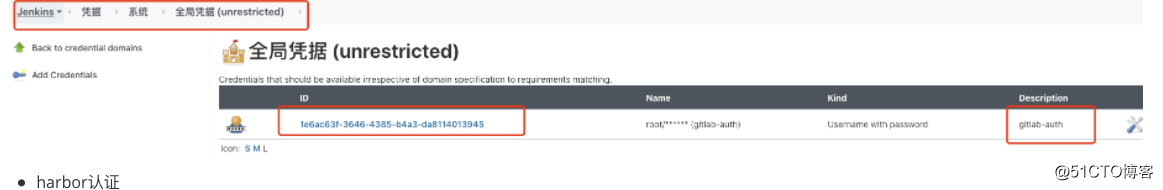

def git_auth = "1e6ac63f-3646-4385-b4a3-da8114013945"

// ConfigFileProvider ID

def k8s_auth = "16904ea6-9724-4364-bffb-394f7af1d881"

pipeline {
  agent {
    kubernetes {
      label "jenkins-slave"
      yaml """
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: jnlp
    image: "${registry}/library/jenkins-slave-jdk:1.8"
    imagePullPolicy: Always
    volumeMounts:
      - name: docker-cmd
        mountPath: /usr/bin/docker
      - name: docker-sock
        mountPath: /var/run/docker.sock
      - name: maven-cache
        mountPath: /root/.m2
  volumes:
    - name: docker-cmd
      hostPath:
        path: /usr/bin/docker
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
    - name: maven-cache
      hostPath:
        path: /tmp/m2
"""
    }
  }
  parameters {
    gitParameter branch: '', branchFilter: '.*', defaultValue: '', description: 'Branch to release', name: 'Branch', quickFilterEnabled: false, selectedValue: 'NONE', sortMode: 'NONE', tagFilter: '*', type: 'PT_BRANCH'
    extendedChoice defaultValue: 'none', description: 'Microservices to release', multiSelectDelimiter: ',', name: 'Service', type: 'PT_CHECKBOX', value: 'gateway-service:9999,portal-service:8080,product-service:8010,order-service:8020,stock-service:8030'
    choice (choices: ['ms', 'demo'], description: 'Deployment template', name: 'Template')
    choice (choices: ['1', '3', '5', '7'], description: 'Replica count', name: 'ReplicaCount')
    choice (choices: ['ms'], description: 'Namespace', name: 'Namespace')
  }

  stages {
    stage('Checkout code'){
      steps {
        checkout([$class: 'GitSCM',
          branches: [[name: "${params.Branch}"]],
          doGenerateSubmoduleConfigurations: false,
          extensions: [], submoduleCfg: [],
          userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_url}"]]
        ])
      }
    }
    stage('Compile code') {
      // Compile the selected services
      steps {
        sh """
          mvn clean package -Dmaven.test.skip=true
        """
      }
    }
    stage('Build images') {
      steps {
        withCredentials([usernamePassword(credentialsId: "${harbor_registry_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
          sh """
            docker login -u ${username} -p '${password}' ${registry}
            for service in \$(echo ${Service} |sed 's/,/ /g'); do
              service_name=\${service%:*}
              image_name=${registry}/${project}/\${service_name}:${BUILD_NUMBER}
              cd \${service_name}
              if ls |grep biz &>/dev/null; then
                cd \${service_name}-biz
              fi
              docker build -t \${image_name} .
              docker push \${image_name}
              cd ${WORKSPACE}
            done
          """
          configFileProvider([configFile(fileId: "${k8s_auth}", targetLocation: "admin.kubeconfig")]){
            sh """
              # Add the image pull secret (ignore the error if it already exists)
              kubectl create secret docker-registry ${image_pull_secret} --docker-username=${username} --docker-password=${password} --docker-server=${registry} -n ${Namespace} --kubeconfig admin.kubeconfig || true
              # Add the private chart repository
              helm repo add --username ${username} --password ${password} myrepo http://${registry}/chartrepo/${project}
            """
          }
        }
      }
    }
    stage('Helm deploy to K8S') {
      steps {
        sh """
          echo "deploy"
        """
      }
    }
  }
}

helm push ms-0.1.0.tgz --username=admin --password=Harbor12345 http://hub.cropy.cn/chartrepo/microservice

The pipeline is as follows:

#!/usr/bin/env groovy
// Required plugins: Git Parameter/Git/Pipeline/Config File Provider/kubernetes/Extended Choice Parameter
// Common
def registry = "hub.cropy.cn"
// Project
def project = "microservice"
def git_url = "http://192.168.56.17:9999/root/microservice.git"
def gateway_domain_name = "gateway.ctnrs.com"
def portal_domain_name = "portal.ctnrs.com"
// Credentials
def image_pull_secret = "registry-pull-secret"
def harbor_registry_auth = "05a90138-03df-4ec7-bfc6-f335566c263a"
def git_auth = "1e6ac63f-3646-4385-b4a3-da8114013945"
// ConfigFileProvider ID
def k8s_auth = "16904ea6-9724-4364-bffb-394f7af1d881"

pipeline {
  agent {
    kubernetes {
      label "jenkins-slave"
      yaml """
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: jnlp
    image: "${registry}/library/jenkins-slave-jdk:1.8"
    imagePullPolicy: Always
    volumeMounts:
      - name: docker-cmd
        mountPath: /usr/bin/docker
      - name: docker-sock
        mountPath: /var/run/docker.sock
      - name: maven-cache
        mountPath: /root/.m2
  volumes:
    - name: docker-cmd
      hostPath:
        path: /usr/bin/docker
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
    - name: maven-cache
      hostPath:
        path: /tmp/m2
"""
    }
  }
  parameters {
    gitParameter branch: '', branchFilter: '.*', defaultValue: '', description: 'Branch to release', name: 'Branch', quickFilterEnabled: false, selectedValue: 'NONE', sortMode: 'NONE', tagFilter: '*', type: 'PT_BRANCH'
    extendedChoice defaultValue: 'none', description: 'Microservices to release', multiSelectDelimiter: ',', name: 'Service', type: 'PT_CHECKBOX', value: 'gateway-service:9999,portal-service:8080,product-service:8010,order-service:8020,stock-service:8030'
    choice (choices: ['ms', 'demo'], description: 'Deployment template', name: 'Template')
    choice (choices: ['1', '3', '5', '7'], description: 'Replica count', name: 'ReplicaCount')
    choice (choices: ['ms'], description: 'Namespace', name: 'Namespace')
  }

stages {

stage(‘拉取代码‘){

steps {

checkout([$class: ‘GitSCM‘,

branches: [[name: "${params.Branch}"]],

doGenerateSubmoduleConfigurations: false,

extensions: [], submoduleCfg: [],

userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_url}"]]

])

}

}

stage(‘代码编译‘) {

// 编译指定服务

steps {

sh """

mvn clean package -Dmaven.test.skip=true

"""

}

}

stage(‘构建镜像‘) {

steps {

withCredentials([usernamePassword(credentialsId: "${harbor_registry_auth}", passwordVariable: ‘password‘, usernameVariable: ‘username‘)]) {

sh """

docker login -u ${username} -p ‘${password}‘ ${registry}

for service in \$(echo ${Service} |sed ‘s/,/ /g‘); do

service_name=\${service%:*}

image_name=${registry}/${project}/\${service_name}:${BUILD_NUMBER}

cd \${service_name}

if ls |grep biz &>/dev/null; then

cd \${service_name}-biz

fi

docker build -t \${image_name} .

docker push \${image_name}

cd ${WORKSPACE}

done

"""

configFileProvider([configFile(fileId: "${k8s_auth}", targetLocation: "admin.kubeconfig")]){

sh """

# 添加镜像拉取认证

kubectl create secret docker-registry ${image_pull_secret} --docker-username=${username} --docker-password=${password} --docker-server=${registry} -n ${Namespace} --kubeconfig admin.kubeconfig |true

# 添加私有chart仓库

helm repo add --username ${username} --password ${password} myrepo http://${registry}/chartrepo/${project}

"""

}

}

}

}

stage(‘Helm部署到K8S‘) {

steps {

sh """

common_args="-n ${Namespace} --kubeconfig admin.kubeconfig"

for service in \$(echo ${Service} |sed ‘s/,/ /g‘); do

service_name=\${service%:*}

service_port=\${service#*:}

image=${registry}/${project}/\${service_name}

tag=${BUILD_NUMBER}

helm_args="\${service_name} --set image.repository=\${image} --set image.tag=\${tag} --set replicaCount=${replicaCount} --set imagePullSecrets[0].name=${image_pull_secret} --set service.targetPort=\${service_port} myrepo/${Template}"

# 判断是否为新部署

if helm history \${service_name} \${common_args} &>/dev/null;then

action=upgrade

else

action=install

fi

# 针对服务启用ingress

if [ \${service_name} == "gateway-service" ]; then

helm \${action} \${helm_args} --set ingress.enabled=true --set ingress.host=${gateway_domain_name} \${common_args}

elif [ \${service_name} == "portal-service" ]; then

helm \${action} \${helm_args} --set ingress.enabled=true --set ingress.host=${portal_domain_name} \${common_args}

else

helm \${action} \${helm_args} \${common_args}

fi

done

# 查看Pod状态

sleep 10

kubectl get pods \${common_args}

"""

}

}

}

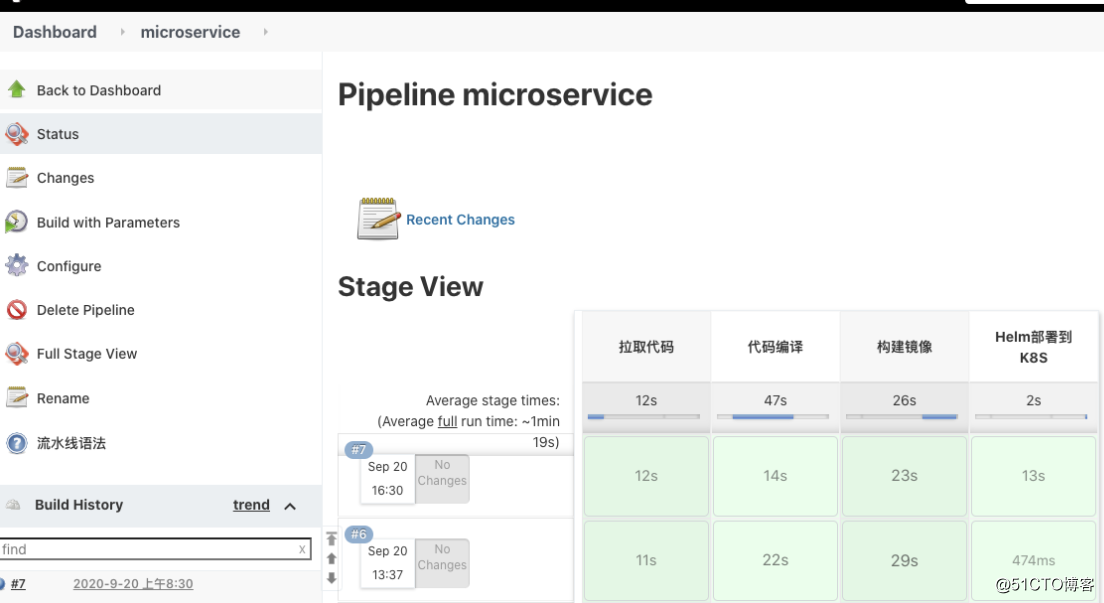

}发布如下

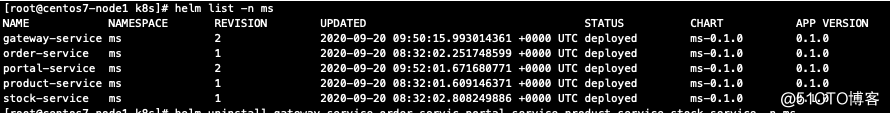

Verify as follows (checked from the k8s cluster):

kubectl get pod -n ms

NAME READY STATUS RESTARTS AGE

eureka-0 1/1 Running 3 2d23h

eureka-1 1/1 Running 3 2d23h

eureka-2 1/1 Running 3 2d23h

ms-gateway-service-659c8596b6-t4hk7 0/1 Running 0 48s

ms-order-service-7cfc4d4b74-bxbsn 0/1 Running 0 46s

ms-portal-service-655c968c4-9pstl 0/1 Running 0 47s

ms-product-service-68674c7f44-8mghj 0/1 Running 0 47s

ms-stock-service-85676485ff-7s5rz 0/1 Running 0 45s
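Each entry of the Service parameter has the form `name:port`, and the deploy stage of the pipeline splits it with two shell parameter expansions before calling helm. The sketch below isolates that parsing so it can be run without Jenkins, Helm or a cluster. `plan_release` is a hypothetical helper that only prints what would be released; it does not call helm.

```shell
#!/bin/sh
# plan_release is a hypothetical helper: it prints one line per
# "name:port" entry instead of running helm install/upgrade.
plan_release() {
    services=$1
    # Split the comma-separated list the same way the pipeline does.
    for service in $(echo "$services" | sed 's/,/ /g'); do
        service_name=${service%:*}   # text before the colon
        service_port=${service#*:}   # text after the colon
        echo "release=${service_name} targetPort=${service_port}"
    done
}

plan_release "gateway-service:9999,portal-service:8080"
```

For the two services above this prints `release=gateway-service targetPort=9999` and `release=portal-service targetPort=8080`, the values the pipeline passes as the release name and `service.targetPort`.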

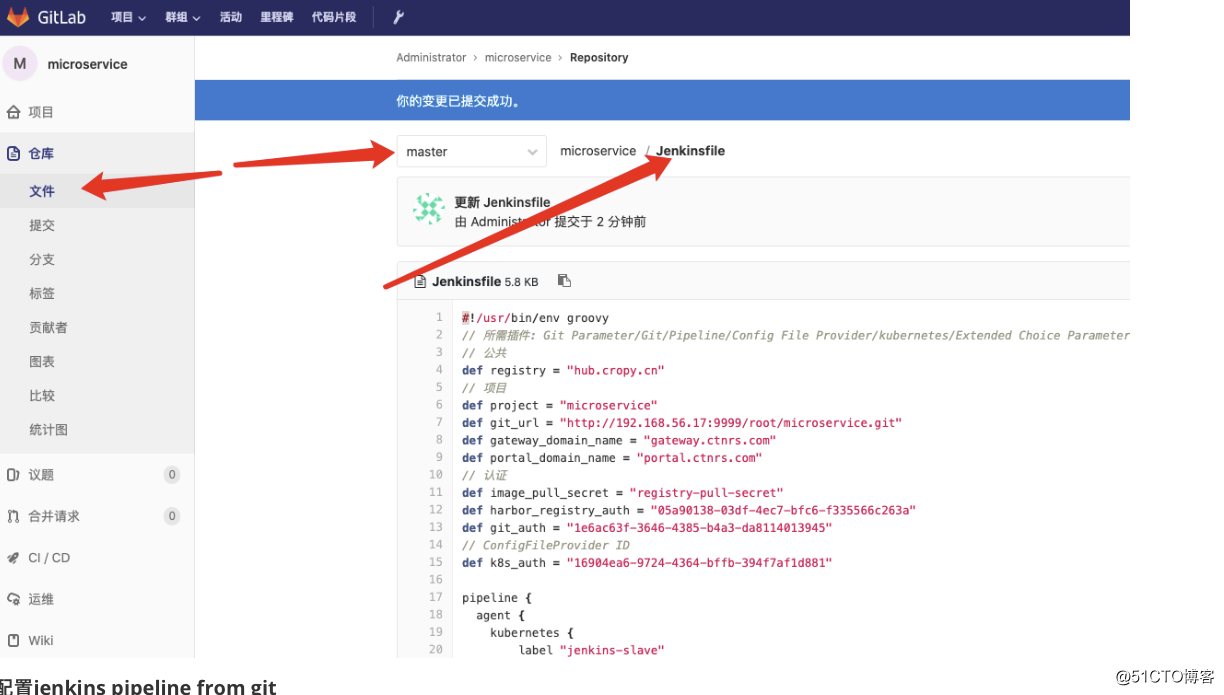

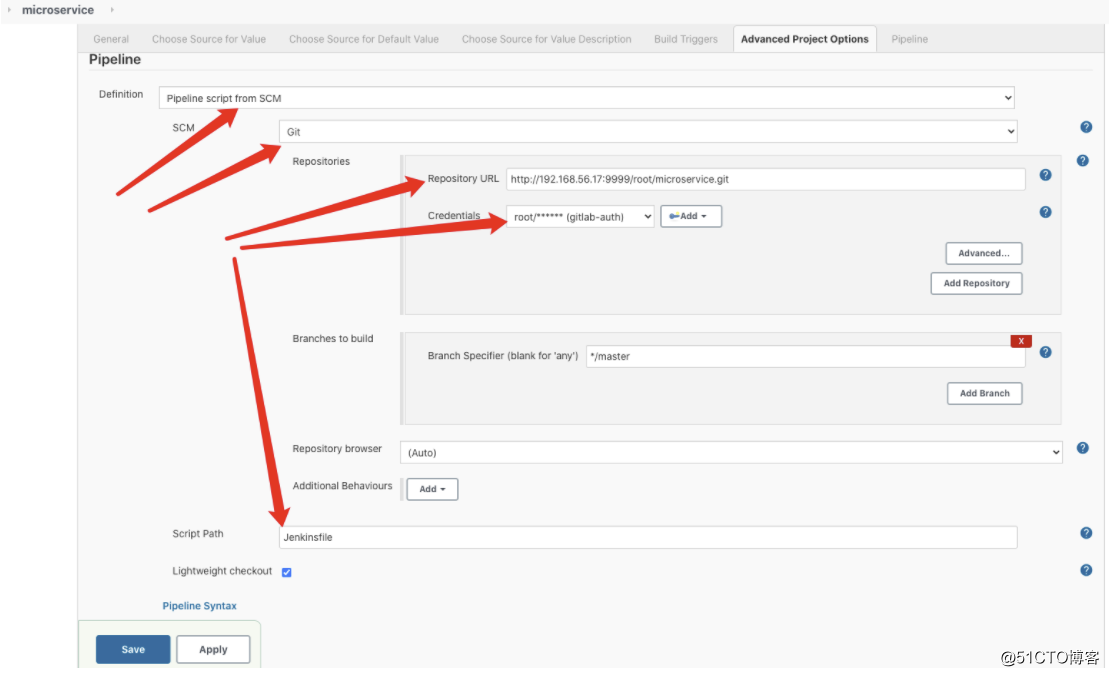

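How it works: Jenkins reads the pipeline file stored in GitLab, so the pipeline itself is under version control and the release process is automated.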


Original post: https://blog.51cto.com/13812615/2536254