Tags: XML ted its node this code bash home rri

Download the matching HBase release from http://mirror.bit.edu.cn/apache/hbase/

I downloaded hbase-2.2.6-bin.tar.gz, which is a relatively stable release.

Extract it into /home/hadoop:

tar -zxvf /home/hadoop/桌面/hbase-2.2.6-bin.tar.gz -C /home/hadoop

Move it to /usr/local and rename it (this requires root):

su

mv /home/hadoop/hbase-2.2.6 /usr/local/Hbase

Change the owner of the folder to hadoop:

cd /usr/local/

chown -R hadoop:hadoop ./Hbase

Configure the environment:

echo "export PATH=/usr/local/Hbase/bin:\$PATH" >> /etc/bash.bashrc

source /etc/bash.bashrc

exit

cd /usr/local/Hbase/

echo "export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/" >> conf/hbase-env.sh

cd ~

sudo vim /etc/profile

Append the following to the end of /etc/profile:

export HBASE_HOME=/usr/local/Hbase

export PATH=$HBASE_HOME/bin:$PATH
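Two small checks for this step. They are a sketch, and the helper names append_once and check_hbase_home are hypothetical, not part of HBase.

- append_once makes the `echo ... >>` style edits safe to re-run, because running them twice would append duplicate lines.
- check_hbase_home confirms that HBASE_HOME is an absolute path containing bin/start-hbase.sh. A relative value would be resolved against whatever directory the shell happens to be in.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the environment-setup step (not part of HBase).

# append_once FILE LINE
# Appends LINE to FILE unless FILE already contains exactly that line,
# so re-running the setup does not duplicate exports.
append_once() {
  local file="$1" line="$2"
  touch "$file" || return 1
  # -q quiet, -x whole-line match, -F literal string (no regex)
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# check_hbase_home DIR
# Succeeds if DIR is an absolute path containing an executable
# bin/start-hbase.sh; otherwise prints the reason to stderr and fails.
check_hbase_home() {
  local dir="$1"
  case "$dir" in
    /*) ;;  # absolute path, fine
    *) echo "HBASE_HOME is not an absolute path: $dir" >&2; return 1 ;;
  esac
  if [ ! -x "$dir/bin/start-hbase.sh" ]; then
    echo "no executable $dir/bin/start-hbase.sh" >&2
    return 1
  fi
}
```

For example, run as root: `append_once /etc/profile 'export HBASE_HOME=/usr/local/Hbase'`. Then, after `source /etc/profile`, run `check_hbase_home "$HBASE_HOME"`. Single quotes keep `$PATH`/`$HBASE_HOME` literal, just like `\$PATH` inside double quotes.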

Edit the configuration files:

cd /usr/local/Hbase/conf

vim hbase-env.sh

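The last two lines should read as follows. If you already appended JAVA_HOME in the environment step above, only the first line will be there, so you just need to add the second: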

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

export HBASE_MANAGES_ZK=false

Save and exit.
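HBASE_MANAGES_ZK=false tells HBase not to start or stop its own ZooKeeper, so the separately installed ZooKeeper on each node is used instead. When the variable is unset, HBase's scripts fall back to managing ZooKeeper themselves. A later `export` of the same variable in hbase-env.sh overrides an earlier one.

Below is a sketch (hypothetical function name) that reports the value a given hbase-env.sh actually ends up with. It sources the file in a subshell, which assumes the file only sets variables.

```shell
#!/usr/bin/env bash
# effective_manages_zk FILE
# Hypothetical helper: sources FILE in a subshell and prints the resulting
# HBASE_MANAGES_ZK, falling back to "true" (HBase's behaviour when unset).
effective_manages_zk() {
  (
    unset HBASE_MANAGES_ZK   # ignore any value from the calling shell
    # shellcheck source=/dev/null
    . "$1"
    printf '%s\n' "${HBASE_MANAGES_ZK:-true}"
  )
}
```

With the configuration above, `effective_manages_zk /usr/local/Hbase/conf/hbase-env.sh` should print `false`.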

vim hbase-site.xml

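Modify it as follows. The long comment at the top is carried over from the default standalone-mode template. It does not describe this distributed setup, where hbase.cluster.distributed is true and data lives on HDFS: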

<configuration>
  <!--
    The following properties are set for running HBase as a single process on a
    developer workstation. With this configuration, HBase is running in
    "stand-alone" mode and without a distributed file system. In this mode, and
    without further configuration, HBase and ZooKeeper data are stored on the
    local filesystem, in a path under the value configured for `hbase.tmp.dir`.
    This value is overridden from its default value of `/tmp` because many
    systems clean `/tmp` on a regular basis. Instead, it points to a path within
    this HBase installation directory.

    Running against the `LocalFileSystem`, as opposed to a distributed
    filesystem, runs the risk of data integrity issues and data loss. Normally
    HBase will refuse to run in such an environment. Setting
    `hbase.unsafe.stream.capability.enforce` to `false` overrides this behavior,
    permitting operation. This configuration is for the developer workstation
    only and __should not be used in production!__

    See also https://hbase.apache.org/book.html#standalone_dist
  -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <!-- Location where HBase stores its data on HDFS -->
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <!-- ZooKeeper quorum addresses -->
    <name>hbase.zookeeper.quorum</name>
    <value>master:2181,slave1:2181,slave2:2181,slave3:2181</value>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>./tmp</value>
  </property>
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
</configuration>

Save and exit.
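To double-check what ended up in hbase-site.xml, here is a rough sketch that pulls one property's value out of the file with standard text tools. It has two limitations:

- It assumes each `<name>` is followed directly by its `<value>`, as in the config above.
- It is not a real XML parser: it does not understand XML comments, and NAME is matched as a regex fragment.

The function name get_prop is hypothetical.

```shell
#!/usr/bin/env bash
# get_prop FILE NAME
# Hypothetical helper: prints the <value> that follows <name>NAME</name>
# in a Hadoop/HBase-style *-site.xml. Rough text matching only.
get_prop() {
  local file="$1" name="$2"
  # Join all lines so a property split across lines still matches,
  # then keep only "<name>NAME</name> <value>VALUE</value>" and strip the tags.
  tr '\n' ' ' < "$file" \
    | grep -o "<name>${name}</name>[[:space:]]*<value>[^<]*</value>" \
    | sed -e 's/.*<value>//' -e 's#</value>##'
}
```

For example, `get_prop /usr/local/Hbase/conf/hbase-site.xml hbase.zookeeper.quorum` should print the quorum string configured above. It prints nothing if the property is absent.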

vim regionservers

Modify it as follows:

master

slave1

slave2

slave3
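start-hbase.sh launches each regionserver over SSH using the same install path as on master. So slave1 to slave3 also need a copy of /usr/local/Hbase, including the conf/ files edited above.

One way to push it is sketched below. It assumes:

- passwordless SSH for the hadoop user, which start-all.sh already relies on;
- that hadoop can write to /usr/local on each slave.

The function names and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# remote_hosts FILE SELF
# Hypothetical helper: prints the hosts in a regionservers-style FILE,
# skipping blank lines, '#' comments and SELF (the machine copying from).
remote_hosts() {
  local file="$1" self="$2" host
  while read -r host || [ -n "$host" ]; do
    host="${host%%#*}"            # drop a trailing comment
    host="${host//[[:space:]]/}"  # drop whitespace
    [ -z "$host" ] && continue
    [ "$host" = "$self" ] && continue
    printf '%s\n' "$host"
  done < "$file"
}

# push_hbase FILE SELF
# Hypothetical helper: copies /usr/local/Hbase to every other host with scp.
# With DRY_RUN=1 it only prints the commands it would run.
push_hbase() {
  local host
  for host in $(remote_hosts "$1" "$2"); do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r /usr/local/Hbase $host:/usr/local/"
    else
      scp -r /usr/local/Hbase "$host":/usr/local/ || return 1
    fi
  done
}
```

On master, `DRY_RUN=1 push_hbase /usr/local/Hbase/conf/regionservers master` lists the copies first; drop `DRY_RUN=1` to run them.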

Start and test:

The startup order is: 1. Hadoop, 2. ZooKeeper, 3. HBase.

Hadoop only needs to be started on master:

start-all.sh

Start ZooKeeper on every virtual machine:

cd /usr/local/zookeeper/bin/

./zkServer.sh start

HBase only needs to be started on master:

cd /usr/local/Hbase/bin/

./start-hbase.sh
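The whole sequence (Hadoop, then ZooKeeper on every node, then HBase) can also be driven from master in one go. This is a sketch that assumes:

- the install paths used in this article;
- passwordless SSH to every node;
- Hadoop's start-all.sh on the hadoop user's PATH.

The HOSTS list, function names and DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical start-up script; run it on master as the hadoop user.
# Assumptions: passwordless SSH to every node, Hadoop's start-all.sh on PATH,
# ZooKeeper under /usr/local/zookeeper and HBase under /usr/local/Hbase.
HOSTS="master slave1 slave2 slave3"   # nodes that run ZooKeeper

# run CMD...: execute the command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

start_cluster() {
  local host
  # 1. Hadoop, started from master only
  run start-all.sh || return 1
  # 2. ZooKeeper on every node; /etc/profile is sourced because a
  #    non-interactive SSH shell may not load it and zkServer.sh must find Java
  for host in $HOSTS; do
    run ssh "$host" ". /etc/profile; /usr/local/zookeeper/bin/zkServer.sh start" || return 1
  done
  # 3. HBase, started from master only
  run /usr/local/Hbase/bin/start-hbase.sh
}
```

Try `DRY_RUN=1 start_cluster` first to print the commands in order without running them.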

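After HBase starts, it prints the following. The SLF4J lines are only a warning that both Hadoop and HBase put slf4j-log4j12 on the classpath; SLF4J picks one binding and carries on: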

SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/Hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
running master, logging to /usr/local/Hbase/bin/../logs/hbase-hadoop-master-master.out
slave2: running regionserver, logging to /usr/local/Hbase/bin/../logs/hbase-hadoop-regionserver-slave2.out
slave3: running regionserver, logging to /usr/local/Hbase/bin/../logs/hbase-hadoop-regionserver-slave3.out
slave1: running regionserver, logging to /usr/local/Hbase/bin/../logs/hbase-hadoop-regionserver-slave1.out
master: running regionserver, logging to /usr/local/Hbase/bin/../logs/hbase-hadoop-regionserver-master.out

Check with the jps command (HMaster should appear):

3088 NameNode
11046 HMaster
3478 ResourceManager
11606 Jps
11241 HRegionServer
10750 QuorumPeerMain

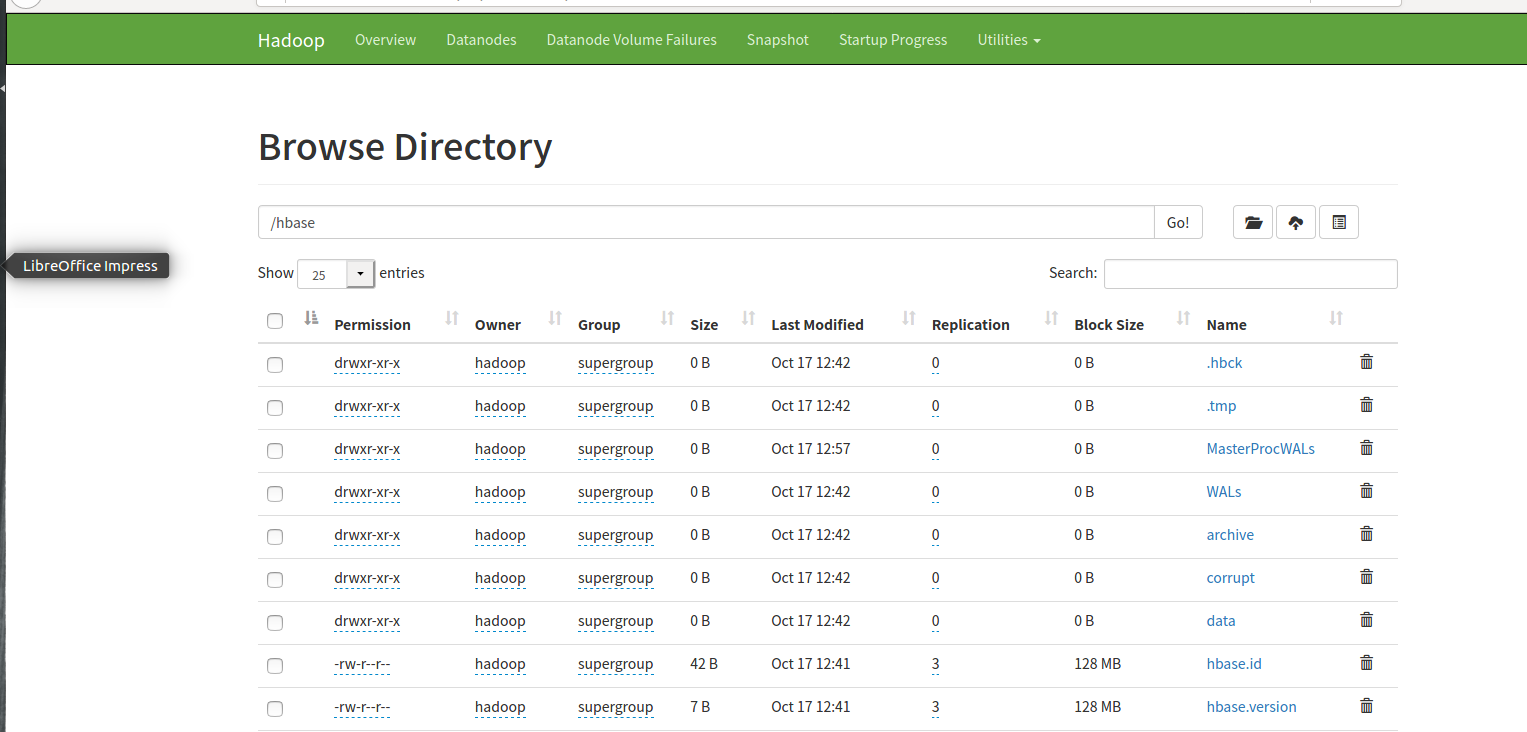
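With this configuration, the expected processes differ by node:

- On master, jps should list HMaster, HRegionServer and QuorumPeerMain, because master is also in regionservers and runs ZooKeeper.
- On slave1 to slave3, HRegionServer and QuorumPeerMain are expected.

A small sketch (hypothetical helper name) that checks jps output for a given set of process names:

```shell
#!/usr/bin/env bash
# missing_daemons NAME...
# Hypothetical helper: reads `jps` output on stdin, prints every NAME that is
# not among the listed process names, and returns 1 if anything is missing.
missing_daemons() {
  local names status=0 want
  # jps prints "<pid> <MainClass>"; keep only the class names
  names="$(awk '{print $2}')"
  for want in "$@"; do
    if ! printf '%s\n' "$names" | grep -qxF -- "$want"; then
      echo "$want"
      status=1
    fi
  done
  return "$status"
}
```

Usage on master: `jps | missing_daemons HMaster HRegionServer QuorumPeerMain && echo "all running"`.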

Open Browse Directory in the HDFS web UI on master:

An hbase directory should be listed.

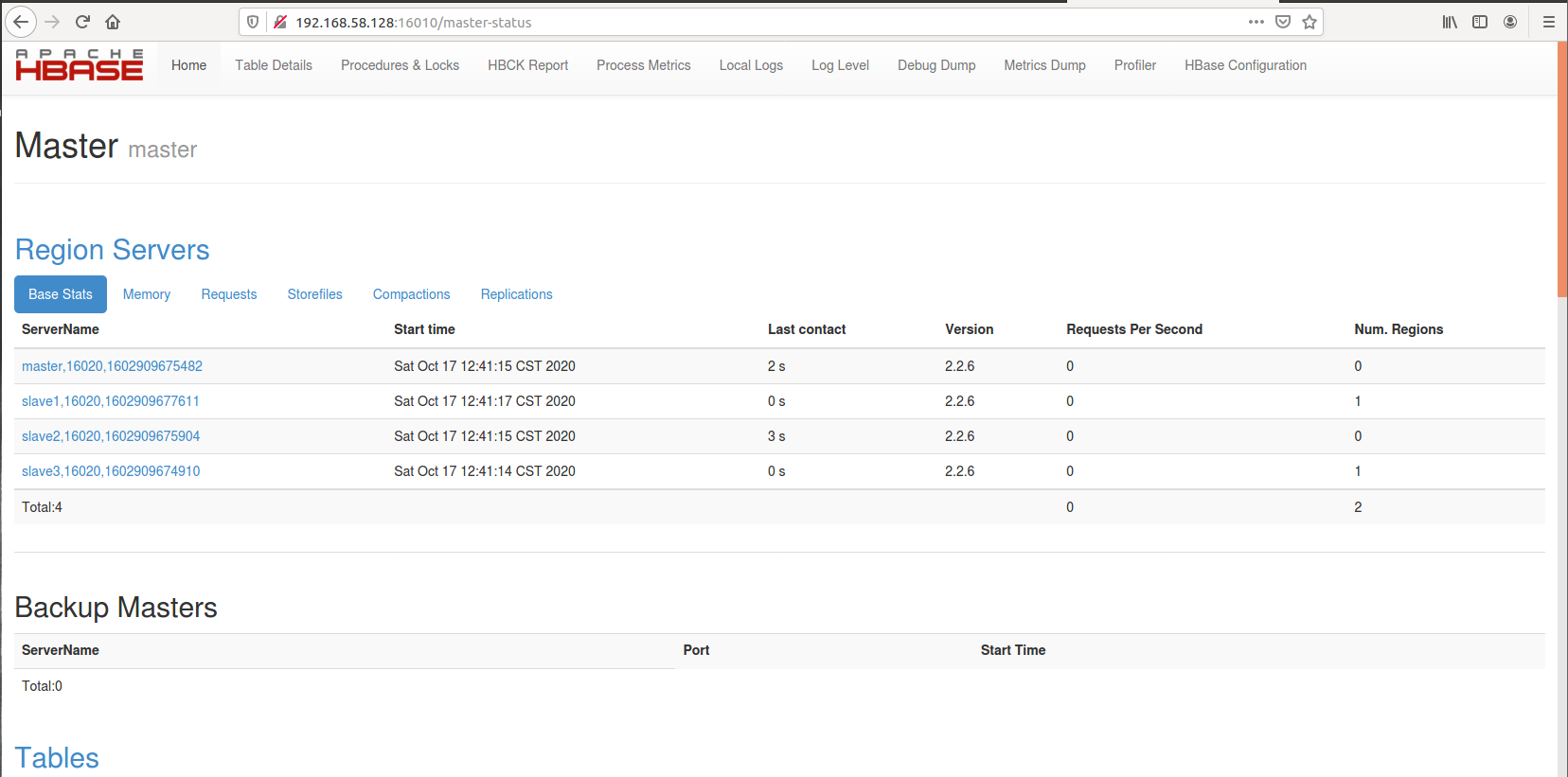
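The same check can be done from the command line with the HDFS shell's `hdfs dfs -test -d`. /hbase is the path part of hbase.rootdir (hdfs://master:9000/hbase) in this setup. The wrapper below is a sketch, and its name is hypothetical:

```shell
#!/usr/bin/env bash
# check_hbase_rootdir [PATH]
# Hypothetical helper: confirms that HBase created its root directory in HDFS.
# PATH defaults to /hbase, the path part of hbase.rootdir in this setup.
check_hbase_rootdir() {
  local dir="${1:-/hbase}"
  if hdfs dfs -test -d "$dir"; then   # exit status 0 if dir is a directory
    echo "found $dir"
  else
    echo "$dir not found in HDFS" >&2
    return 1
  fi
}
```

For example, `check_hbase_rootdir && hdfs dfs -ls /hbase` also lists what HBase has written there.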

Open port 16010 on master, the default port of the HBase Master web UI:

The HBase cluster status page is shown.
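A scripted version of this check is sketched below. It assumes curl is installed and that the Master UI serves its status page at /master-status, as it normally does. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# master_ui_up [HOST] [PORT]
# Hypothetical helper: returns 0 if the HBase Master web UI answers HTTP 200.
master_ui_up() {
  local host="${1:-master}" port="${2:-16010}" code
  # -s silent, -o /dev/null discards the body, -w prints only the status code
  # ("000" if no connection), --max-time avoids hanging on an unreachable host
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 \
    "http://${host}:${port}/master-status")"
  [ "$code" = "200" ]
}
```

For example, `master_ui_up master 16010 && echo "HBase Master UI is up"`.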


Original article: https://www.cnblogs.com/a155-/p/13830625.html