
Set the machine's hostname to `localhost`:

    sudo scutil --set HostName localhost

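Configure passwordless SSH login to localhost:

1) `ssh-keygen -t rsa` (press Enter at every prompt until it finishes)

2) `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys`

3) `chmod og-wx ~/.ssh/authorized_keys`

Then restart the terminal and run `ssh localhost`. If you are logged in without being asked for a password, the setup worked. If it still asks for a password, the cause is probably file permissions; try `chmod 755 ~/.ssh`. On macOS, `ssh localhost` also requires Remote Login to be enabled under System Preferences > Sharing.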
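
A small Python sketch of the permission rule behind step 3): sshd's `StrictModes` check (enabled by default) refuses key-based login when `~/.ssh` or `authorized_keys` is writable by group or others, which is exactly what `chmod og-wx` and `chmod 755` rule out. The function names are hypothetical, and the sketch skips the home-directory check that sshd also performs.

```python
import stat
from pathlib import Path

# Group-write and other-write bits; sshd's StrictModes check rejects
# ~/.ssh or authorized_keys when either bit is set.
UNSAFE_BITS = stat.S_IWGRP | stat.S_IWOTH


def mode_is_safe(mode: int) -> bool:
    """True if neither group nor others may write (e.g. 0o700, 0o755, 0o644)."""
    return (mode & UNSAFE_BITS) == 0


def unsafe_ssh_paths(ssh_dir: Path) -> list:
    """Return the paths (as strings) that StrictModes would likely reject.

    Only ~/.ssh and ~/.ssh/authorized_keys are checked here; sshd also
    checks the home directory, which this sketch leaves out.
    """
    candidates = [ssh_dir, ssh_dir / "authorized_keys"]
    return [str(p) for p in candidates
            if p.exists() and not mode_is_safe(p.stat().st_mode)]
```

After steps 1) to 3), `unsafe_ssh_paths(Path.home() / ".ssh")` should return an empty list.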

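Install Hadoop with Homebrew. The paths below assume Homebrew installed version 3.2.1; adjust them to your version.

    brew install hadoop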

Edit `core-site.xml` (in the Homebrew install it lives in `/usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/`):

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp</value>
      </property>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>
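
Every `*-site.xml` file has the same `<configuration>/<property>/<name>/<value>` shape, so it can be generated from a plain mapping. A minimal sketch using Python's standard `xml.etree.ElementTree`; `site_xml` is a hypothetical helper, not part of Hadoop:

```python
import xml.etree.ElementTree as ET


def site_xml(props: dict) -> str:
    """Render a Hadoop *-site.xml document from a {name: value} mapping."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    body = ET.tostring(root, encoding="unicode")  # one-line XML string
    return ('<?xml version="1.0" encoding="UTF-8"?>\n'
            '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>\n'
            + body + "\n")


# The core-site.xml values from above.
core_site = site_xml({
    "hadoop.tmp.dir": "file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp",
    "fs.defaultFS": "hdfs://localhost:9000",
})
```

Writing `core_site` to `libexec/etc/hadoop/core-site.xml` produces the same properties as the hand-written file, just without indentation.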

Edit `hdfs-site.xml` in the same directory. For a single node, replication is set to 1 and HDFS permission checking is turned off:

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/data</value>
      </property>
    </configuration>
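
Hadoop generally ignores property names it does not recognize instead of rejecting them, so a misspelled key fails silently. A hedged sketch that parses a site file and flags suspicious keys; the list of suspicious keys is a hand-maintained assumption (here just the NameNode/DataNode mix-up), and the helper names are hypothetical:

```python
import xml.etree.ElementTree as ET

# Assumed list of easy-to-make mistakes: the DataNode storage key is
# dfs.datanode.data.dir; hdfs-default.xml has no dfs.namenode.data.dir.
SUSPICIOUS = {"dfs.namenode.data.dir": "dfs.datanode.data.dir"}


def read_site_xml(text: str) -> dict:
    """Parse a Hadoop *-site.xml string into a {name: value} dict."""
    root = ET.fromstring(text)
    return {p.findtext("name"): p.findtext("value")
            for p in root.iter("property")}


def check_hdfs_site(props: dict) -> list:
    """Return human-readable warnings for a single-node hdfs-site.xml."""
    warnings = []
    for wrong, right in SUSPICIOUS.items():
        if wrong in props:
            warnings.append(f"{wrong} is not a Hadoop key; did you mean {right}?")
    # With only one DataNode, a replication factor above 1 cannot be met.
    if props.get("dfs.replication") not in (None, "1"):
        warnings.append("single-node setups usually set dfs.replication=1")
    return warnings
```

Usage: `check_hdfs_site(read_site_xml(open(path).read()))` returns an empty list for the file above.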

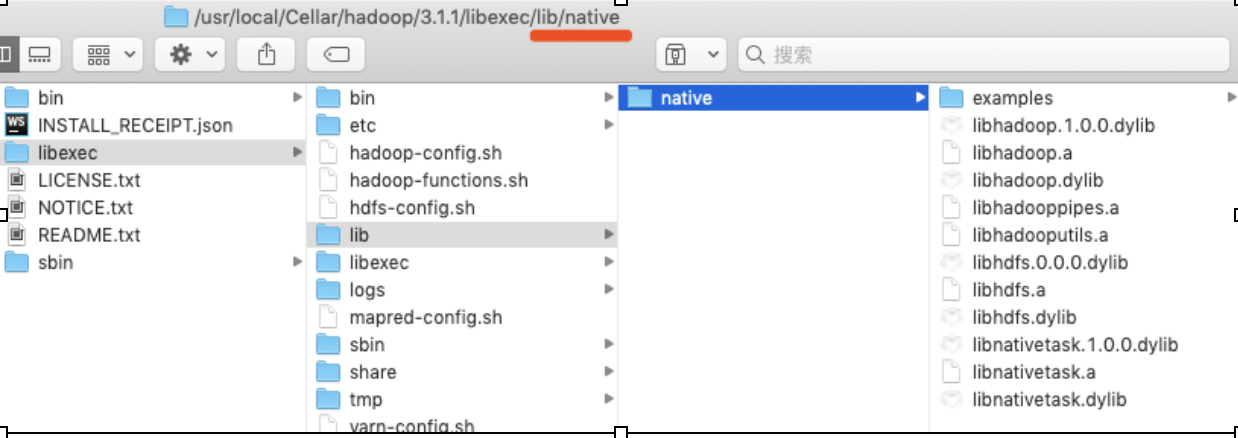

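Open `~/.bash_profile` (for example with `vi ~/.bash_profile`) and add the environment variables below, adjusting `JAVA_HOME` to the JDK installed on your machine. `HADOOP_ROOT_LOGGER=DEBUG,console` prints debug-level logs to the terminal, which helps when troubleshooting but is noisy.

    export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_141.jdk/Contents/Home
    export HADOOP_HOME=/usr/local/Cellar/hadoop/3.2.1/libexec
    export HADOOP_ROOT_LOGGER=DEBUG,console
    export PATH=$PATH:${HADOOP_HOME}/bin

Then reload the profile:

    source ~/.bash_profile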
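
Mixing Hadoop versions across paths (for example a `HADOOP_HOME` under one `Cellar` version while the config files point at another) breaks the setup in confusing ways. A hedged sketch that extracts the version segment from Homebrew `Cellar` paths so mismatches stand out; the helper names are hypothetical:

```python
import re
from typing import Iterable, Optional, Set

# Matches the version directory in Homebrew paths such as
# /usr/local/Cellar/hadoop/3.2.1/libexec
CELLAR_VERSION = re.compile(r"/Cellar/hadoop/([^/]+)")


def cellar_version(path: str) -> Optional[str]:
    """Return the Hadoop version embedded in a Homebrew Cellar path, or None."""
    match = CELLAR_VERSION.search(path)
    return match.group(1) if match else None


def versions_used(paths: Iterable[str]) -> Set[str]:
    """Collect the distinct Cellar versions referenced by `paths`."""
    return {v for v in map(cellar_version, paths) if v is not None}
```

A result with more than one element means the paths disagree. Pointing `HADOOP_HOME` at Homebrew's version-independent symlink `/usr/local/opt/hadoop/libexec` is another way to sidestep the problem for that variable.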

Format the NameNode. This is only needed once; formatting again wipes the HDFS metadata.

    cd /usr/local/Cellar/hadoop/3.2.1/bin
    ./hdfs namenode -format
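
To confirm that formatting worked, look for the `current/VERSION` file it writes into the NameNode storage directory (`dfs.namenode.name.dir` in `hdfs-site.xml`). A minimal Python sketch; `namenode_formatted` is a hypothetical helper that accepts either a plain path or a `file:` URI:

```python
from pathlib import Path
from urllib.parse import urlparse


def namenode_formatted(name_dir: str) -> bool:
    """True if `name_dir` holds the current/VERSION file written by formatting.

    Accepts a plain path or a file: URI such as the dfs.namenode.name.dir value.
    """
    local_path = urlparse(name_dir).path  # "file:/a/b" -> "/a/b"; "/a/b" unchanged
    return (Path(local_path) / "current" / "VERSION").is_file()
```

Usage: `namenode_formatted("file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/name")` should be `True` after the format step.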

Start Hadoop:

    cd /usr/local/Cellar/hadoop/3.2.1/sbin
    # Start Hadoop with start-dfs.sh or start-all.sh
    # Stop it with sbin/stop-dfs.sh or sbin/stop-all.sh
    ./start-dfs.sh
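
After `start-dfs.sh`, one way to check that the NameNode is up is to probe its ports. This sketch assumes port 9000 (from `fs.defaultFS` in `core-site.xml`) and 9870, the default NameNode web UI port in Hadoop 3; the helper names are hypothetical. The JDK's `jps` command, which should list `NameNode`, `DataNode` and `SecondaryNameNode`, is the usual alternative.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, unreachable, ...
        return False


# Assumed ports: 9000 from fs.defaultFS, 9870 = Hadoop 3 NameNode web UI default.
HDFS_PORTS = {"NameNode RPC": 9000, "NameNode web UI": 9870}


def hdfs_status(host: str = "localhost") -> dict:
    """Map each expected HDFS endpoint to True (listening) or False."""
    return {label: is_listening(host, port) for label, port in HDFS_PORTS.items()}
```

`hdfs_status()` should report `True` for both entries once HDFS has started.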

To run MapReduce on YARN, set `mapred-site.xml`:

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

Set `yarn-site.xml`:

    <?xml version="1.0"?>
    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
      </property>
    </configuration>

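Then, from `/usr/local/Cellar/hadoop/3.2.1`, format the NameNode again and restart HDFS. Formatting erases existing HDFS data. To bring up the YARN daemons as well, also run `sbin/start-yarn.sh` or use `sbin/start-all.sh`.

    hdfs namenode -format
    sbin/start-dfs.sh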

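A lower-memory variant of `mapred-site.xml`: here `mapreduce.framework.name` is commented out, so MapReduce falls back to its default `local` runner instead of YARN, and map and reduce tasks each request 200 MB. The `mapreduce.job.tracker` entry resembles the old MRv1 `mapred.job.tracker` setting and is most likely ignored by Hadoop 3.

    <configuration>
      <!-- <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> -->
      <property>
        <name>mapreduce.job.tracker</name>
        <value>hdfs://127.0.0.1:8001</value>
        <final>true</final>
      </property>
      <property>
        <name>mapreduce.map.memory.mb</name>
        <value>200</value>
      </property>
      <property>
        <name>mapreduce.reduce.memory.mb</name>
        <value>200</value>
      </property>
    </configuration>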

The matching low-memory `yarn-site.xml` pins the ResourceManager address and lowers the MapReduce ApplicationMaster memory to 200 MB and the scheduler's minimum allocation to 50 MB:

    <configuration>
      <!-- Site specific YARN configuration properties -->
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>127.0.0.1:8032</value>
      </property>
      <property>
        <name>yarn.app.mapreduce.am.resource.mb</name>
        <value>200</value>
      </property>
      <property>
        <name>yarn.scheduler.minimum-allocation-mb</name>
        <value>50</value>
      </property>
      <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
      </property>
    </configuration>
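
The small `yarn.scheduler.minimum-allocation-mb` matters because the scheduler rounds each container request up to a multiple of the minimum allocation; with YARN's default of 1024 MB, every 200 MB request would still occupy 1024 MB. A Python sketch of that rounding, assuming CapacityScheduler-style normalization (round up to a multiple of the minimum, clamp to the maximum, whose default is 8192 MB). It models the arithmetic only, not YARN's actual code, and `normalize_mb` is a hypothetical name.

```python
def normalize_mb(request_mb: int, min_alloc_mb: int = 1024,
                 max_alloc_mb: int = 8192) -> int:
    """Approximate YARN's container-size normalization (assumption:
    CapacityScheduler-style): round the request up to a multiple of
    min_alloc_mb, never below min_alloc_mb and never above max_alloc_mb.

    Defaults mirror YARN's defaults for yarn.scheduler.minimum-allocation-mb
    (1024) and yarn.scheduler.maximum-allocation-mb (8192).
    """
    rounded = -(-request_mb // min_alloc_mb) * min_alloc_mb  # integer ceiling
    return max(min_alloc_mb, min(rounded, max_alloc_mb))
```

With the settings above, `normalize_mb(200, min_alloc_mb=50)` gives 200, while `normalize_mb(200)` with the defaults gives 1024.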


Original post: https://www.cnblogs.com/geogre123/p/14486052.html