
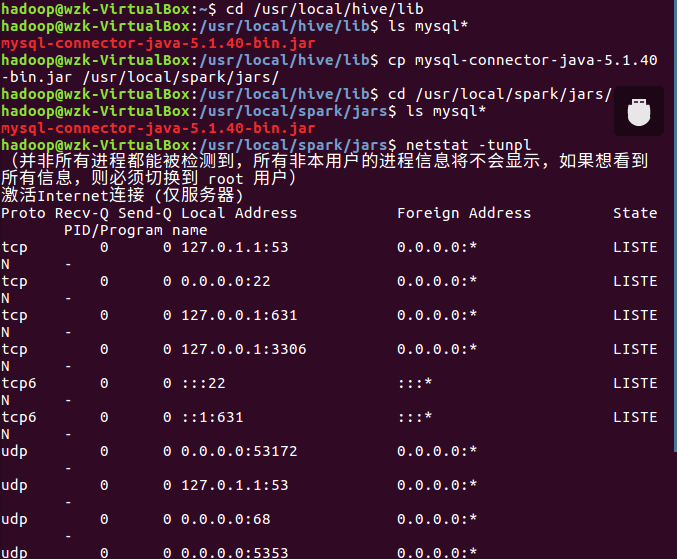

1. Install and start the MySQL service, then check that it is running.

##netstat -tunlp (look for a listener on port 3306)

cd /usr/local/hive/lib

ls mysql*

cp mysql-connector-java-5.1.40-bin.jar /usr/local/spark/jars/

cd /usr/local/spark/jars/

ls mysql*

netstat -tunpl
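The `netstat` check looks for a listener on port 3306. The same check can be sketched in Python by attempting a TCP connection. The function name `is_port_open` and its host and timeout defaults are assumptions for illustration, not part of the original steps.

```python
import socket


def is_port_open(host: str = "127.0.0.1", port: int = 3306, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError when nothing is listening or on timeout
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 3306 is the port checked with netstat in this step
    print("MySQL port reachable:", is_port_open())
```

An open port only shows that some process is listening there. It does not prove that the process is MySQL, so the `netstat -tunlp` output (which names the process) remains the more informative check.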

2. Make the MySQL JDBC driver available to Spark.

##cp /usr/local/hive/lib/mysql-connector-java-5.1.40-bin.jar /usr/local/spark/jars
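The copy step can also be scripted. Below is a minimal sketch, assuming the connector jar follows the `mysql-connector*.jar` naming seen in this post. The helper name `copy_connector_jars` is hypothetical.

```python
import glob
import os
import shutil


def copy_connector_jars(src_dir: str, dst_dir: str) -> list:
    """Copy every mysql-connector*.jar from src_dir into dst_dir."""
    if not os.path.isdir(dst_dir):
        # Without this check shutil.copy would create a *file* named dst_dir
        raise NotADirectoryError(dst_dir)
    copied = []
    for jar in sorted(glob.glob(os.path.join(src_dir, "mysql-connector*.jar"))):
        # With a directory as destination, shutil.copy keeps the file name
        copied.append(shutil.copy(jar, dst_dir))
    return copied
```

With the paths used in this post, the call would be `copy_connector_jars("/usr/local/hive/lib", "/usr/local/spark/jars")`. It returns the list of copied files, so an empty list means no driver jar was found.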

3. Start the MySQL shell and create the database spark and the table student.

create database spark; # create the database

use spark; # switch to the new database

create table student(id int(4), name char(20), gender char(4), age int(4)); # create the table

insert into student values(1,'Xueqian','F',23);

insert into student values(2,'Weiliang','M',24); # insert two rows

select * from student; # query the data

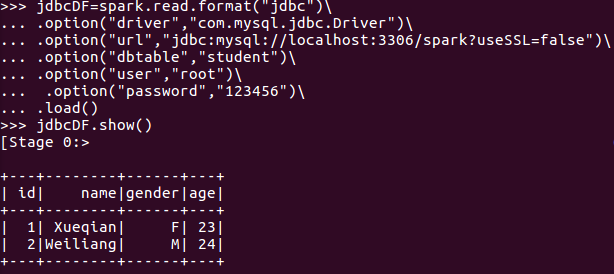
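To dry-run the table definition and the two inserts without a MySQL server, one option is Python's built-in `sqlite3` module. This is a stand-in, not MySQL: SQLite accepts the same `create table` and `insert` statements here, but its type handling differs, so treat it only as a quick sanity check of the SQL.

```python
import sqlite3

# In-memory SQLite database: a stand-in for MySQL, used only to dry-run the SQL
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("create table student(id int(4), name char(20), gender char(4), age int(4))")
cur.executemany(
    "insert into student values(?, ?, ?, ?)",
    [(1, "Xueqian", "F", 23), (2, "Weiliang", "M", 24)],
)
conn.commit()
rows = cur.execute("select * from student").fetchall()
print(rows)  # [(1, 'Xueqian', 'F', 23), (2, 'Weiliang', 'M', 24)]
conn.close()
```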

4. Read data from the MySQL database with Spark

##spark.read.format("jdbc").option("url", "jdbc:mysql://localhost:3306/spark?useSSL=false") ... .load()

>>> jdbcDF=spark.read.format("jdbc")\

... .option("driver","com.mysql.jdbc.Driver")\

... .option("url","jdbc:mysql://localhost:3306/spark?useSSL=false")\

... .option("dbtable","student")\

... .option("user","root")\

... .option("password","hadoop")\

... .load()

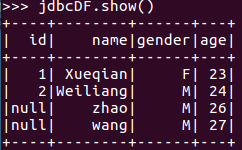

>>> jdbcDF.show()

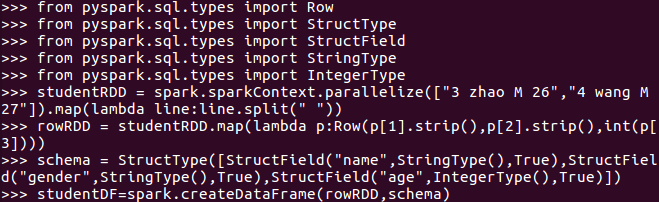
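The chain of `.option(...)` calls can be collapsed into a dictionary passed to `DataFrameReader.options`. The sketch below uses two hypothetical helpers, `jdbc_read_options` and `read_table`, and assumes `spark` is an existing SparkSession such as the one the pyspark shell provides.

```python
def jdbc_read_options(database, table, user, password,
                      host="localhost", port=3306):
    """Build the option dict for spark.read.format("jdbc")."""
    return {
        "driver": "com.mysql.jdbc.Driver",  # driver class used in this post
        "url": f"jdbc:mysql://{host}:{port}/{database}?useSSL=false",
        "dbtable": table,
        "user": user,
        "password": password,
    }


def read_table(spark, **kwargs):
    """Load a MySQL table as a DataFrame; `spark` is an existing SparkSession."""
    # DataFrameReader.options takes keyword arguments, one per JDBC option
    return spark.read.format("jdbc").options(**jdbc_read_options(**kwargs)).load()
```

In the pyspark shell, the read from this step would then be `read_table(spark, database="spark", table="student", user="root", password="hadoop").show()`, with the password replaced by that of your own MySQL account.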

5. Write data to the MySQL database with Spark

##studentDF.write.format('jdbc').option(…).mode('append').save()

>>> from pyspark.sql.types import Row

>>> from pyspark.sql.types import StructType

>>> from pyspark.sql.types import StructField

>>> from pyspark.sql.types import StringType

>>> from pyspark.sql.types import IntegerType

>>> studentRDD = spark.sparkContext.parallelize(["3 zhao M 26","4 wang M 27"]).map(lambda line:line.split(" "))

>>> rowRDD = studentRDD.map(lambda p:Row(p[1].strip(),p[2].strip(),int(p[3])))

>>> schema = StructType([StructField("name",StringType(),True),StructField("gender",StringType(),True),StructField("age",IntegerType(),True)])

>>> studentDF=spark.createDataFrame(rowRDD,schema)

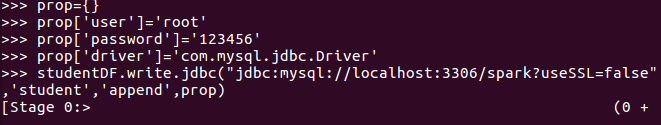
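The parsing done by the two `map` lambdas can be tried in plain Python without Spark. This is a minimal sketch with a hypothetical helper `parse_student`; it mirrors the lambdas above, which drop the leading id and keep only name, gender and age to match the three-field schema.

```python
from typing import List, Tuple


def parse_student(line: str) -> Tuple[str, str, int]:
    """Split "id name gender age" and keep (name, gender, age)."""
    p = line.split(" ")
    # p[0] is the id; it is skipped because the schema above has no id field
    return (p[1].strip(), p[2].strip(), int(p[3]))


rows: List[Tuple[str, str, int]] = [
    parse_student(s) for s in ["3 zhao M 26", "4 wang M 27"]
]
print(rows)  # [('zhao', 'M', 26), ('wang', 'M', 27)]
```

Note that the student table has four columns while the DataFrame has three, so no id value is supplied for the new rows.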

>>> prop={}

>>> prop['user']='root'

>>> prop['password']='123456'

>>> prop['driver']='com.mysql.jdbc.Driver'

>>> studentDF.write.jdbc("jdbc:mysql://localhost:3306/spark?useSSL=false",'student','append',prop)
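The hint at the start of this step mentions the `format('jdbc')...save()` form of the writer. Here is a sketch of that form, wrapped in a hypothetical helper `write_table`. It assumes `df` is a Spark DataFrame and `properties` is a dict like `prop` above.

```python
def write_table(df, url, table, properties, mode="append"):
    """Write df to a JDBC table using the format("jdbc") form of the writer."""
    (df.write.format("jdbc")
        # url and dbtable are JDBC options; user, password and driver
        # come from the properties dict
        .options(url=url, dbtable=table, **properties)
        .mode(mode)  # "append" adds rows and keeps the existing ones
        .save())
```

Calling `write_table(studentDF, "jdbc:mysql://localhost:3306/spark?useSSL=false", "student", prop)` should be equivalent to the `write.jdbc` call above. Running `select * from student;` in the MySQL shell afterwards shows whether the two new rows arrived.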


Original article: https://www.cnblogs.com/kayss/p/14832326.html