
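This article uses a few simple examples to show how to work with files on HDFS through the Hadoop Java API: uploading, creating, renaming, and deleting files, as well as reading a file's modification time, checking whether it exists, and locating its blocks.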

Article URL: http://www.cnblogs.com/archimedes/p/hdfs-api-operations.html. Please cite the source URL when reposting.

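FileSystem.copyFromLocalFile(Path src, Path dst) uploads a local file to a specified location on HDFS. Both src and dst are full paths; dst may name the target directory, as it does in this example.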

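In the myHelloWorld project from the earlier article "hadoop实战--搭建开发环境及编写Hello World" (Hadoop in practice: setting up a development environment and writing Hello World), create a new file CopyFile.java and add this code: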

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class CopyFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            Path src = new Path("/home/wu/copy.txt");  // full path of the local file
            Path dst = new Path("hdfs://localhost:9000/user/wu/in/");  // target directory on HDFS
            hdfs.copyFromLocalFile(src, dst);
            // fs.default.name is the Hadoop 1.x key; later releases call it fs.defaultFS
            System.out.println("Upload to" + conf.get("fs.default.name"));
            // list the target directory to confirm the upload
            FileStatus[] files = hdfs.listStatus(dst);
            for (FileStatus file : files) {
                System.out.println(file.getPath());
            }
        }
    }

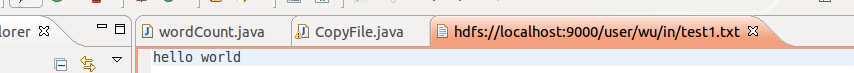

copy.txt is a test file I created myself. To find the dst path, double-click test1.txt in the in folder and its full path name is displayed (hdfs://localhost:9000/user/wu/in).
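The full path used for dst is a URI: the scheme and the host:port part identify the NameNode, and the remainder is the path inside HDFS. As a minimal sketch using only the JDK's java.net.URI (no Hadoop classes; the names HdfsUriParts and describe are hypothetical, chosen for illustration), the pieces can be inspected like this:

```java
import java.net.URI;

class HdfsUriParts {
    // Splits a fully qualified location into its scheme, host, port and path.
    static String describe(String location) {
        URI uri = URI.create(location);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort()
                + " path=" + uri.getPath();
    }

    public static void main(String[] args) {
        // The dst value used in CopyFile.
        System.out.println(describe("hdfs://localhost:9000/user/wu/in/"));
        // prints: scheme=hdfs host=localhost port=9000 path=/user/wu/in/
    }
}
```

The scheme, host and port here match the value that CopyFile prints from fs.default.name.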

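Note: main must declare throws Exception (or catch the exception itself). The FileSystem calls throw the checked IOException, so the code will not compile otherwise.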

The result can be verified both in the console and in the folder view. The console shows:

Upload tohdfs://localhost:9000/

hdfs://localhost:9000/user/wu/in/copy.txt

hdfs://localhost:9000/user/wu/in/test1.txt

hdfs://localhost:9000/user/wu/in/test2.txt

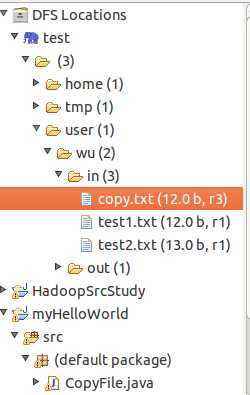

Refresh the project and the in folder now contains an additional file, copy.txt.

FileSystem.create(Path f) creates a file on HDFS, where f is the full path of the file.

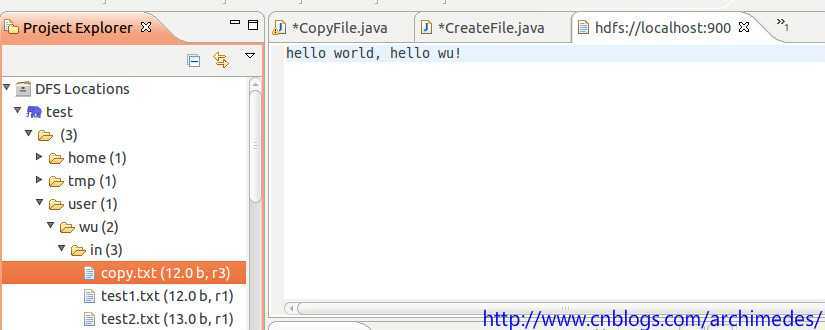

    import java.nio.charset.StandardCharsets;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class CreateFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            byte[] buff = "hello world, hello wu!".getBytes(StandardCharsets.UTF_8);
            FileSystem hdfs = FileSystem.get(conf);
            Path dfs = new Path("hdfs://localhost:9000/user/wu/in/copy.txt");
            // create(Path) overwrites an existing file by default;
            // try-with-resources closes the stream so the data is flushed to HDFS
            try (FSDataOutputStream outputStream = hdfs.create(dfs)) {
                outputStream.write(buff, 0, buff.length);
            }
        }
    }

Double-click copy.txt in the in folder to view it; the content is as expected:

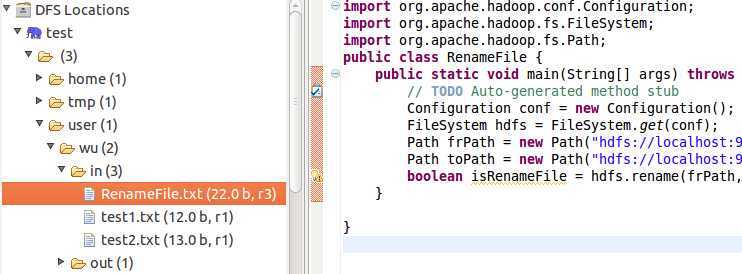

FileSystem.rename(Path src, Path dst) renames the specified HDFS file, where src and dst are both full paths.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class RenameFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            Path frPath = new Path("hdfs://localhost:9000/user/wu/in/copy.txt");
            Path toPath = new Path("hdfs://localhost:9000/user/wu/in/RenameFile.txt");
            // rename reports failure through its return value, so check it
            boolean isRenamed = hdfs.rename(frPath, toPath);
            System.out.println("renamed? " + isRenamed);
        }
    }

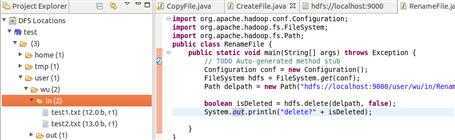

运行后的结果如下图:

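FileSystem.delete(Path f, boolean recursive) deletes the specified HDFS file, where f is the full path of the file to delete and recursive controls whether the deletion is recursive. For a single file either value works; deleting a non-empty directory requires recursive to be true.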

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class DeleteFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            Path delpath = new Path("hdfs://localhost:9000/user/wu/in/RenameFile.txt");
            // false: non-recursive, which is sufficient for a single file
            boolean isDeleted = hdfs.delete(delpath, false);
            System.out.println("delete?" + isDeleted);
        }
    }

The result after running is shown in the figure below:

FileStatus.getModificationTime() returns the modification time of the specified HDFS file, in milliseconds since January 1, 1970 UTC.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class GetLTime {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            Path fpath = new Path("hdfs://localhost:9000/user/wu/in/hdfstest.txt");
            // the FileStatus object carries the file's metadata
            FileStatus fileStatus = hdfs.getFileStatus(fpath);
            long modificationTime = fileStatus.getModificationTime();
            System.out.println("Modification time is " + modificationTime);
        }
    }

The output is:

Modification time is 1418719100449
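The printed value is a count of milliseconds since the Unix epoch, which is hard to read directly. A minimal sketch using only the JDK's java.time (the class and method names here are hypothetical, not part of the Hadoop API) turns it into a readable UTC timestamp:

```java
import java.time.Instant;

class ModificationTimeFormat {
    // Converts epoch milliseconds to an ISO-8601 timestamp in UTC.
    static String toUtcString(long epochMillis) {
        return Instant.ofEpochMilli(epochMillis).toString();
    }

    public static void main(String[] args) {
        // The value printed by GetLTime.
        System.out.println(toUtcString(1418719100449L));
        // prints: 2014-12-16T08:38:20.449Z
    }
}
```

In GetLTime the same conversion could be applied to the long returned by getModificationTime() before printing it.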

FileSystem.exists(Path f) checks whether the specified HDFS file exists, where f is the full path of the file.

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class CheckFile {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            Path findfile = new Path("hdfs://localhost:9000/user/wu/in/hdfstest.txt");
            boolean isExists = hdfs.exists(findfile);
            System.out.println("is exists? " + isExists);
        }
    }

The output is:

is exists? true

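FileSystem.getFileBlockLocations(FileStatus file, long start, long len) finds where the specified file is stored in the HDFS cluster. Here file is the FileStatus of the file, and start and len give the byte range within the file (starting offset and length) whose blocks should be located.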

    import java.util.Arrays;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.BlockLocation;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class FileLoc {
        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);
            // point this at a file; a directory has no data blocks of its own
            Path fpath = new Path("hdfs://localhost:9000/user/wu/in/hdfstest.txt");
            FileStatus filestatus = hdfs.getFileStatus(fpath);
            // ask for the blocks covering the whole file: offset 0, length = file length
            BlockLocation[] blkLocations = hdfs.getFileBlockLocations(filestatus, 0, filestatus.getLen());
            int blockLen = blkLocations.length;
            System.out.println(blockLen);
            for (int i = 0; i < blockLen; i++) {
                // each block can be replicated on several hosts, so print all of them
                String[] hosts = blkLocations[i].getHosts();
                System.out.println("block " + i + " location: " + Arrays.toString(hosts));
            }
        }
    }
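To make the role of start and len more concrete: as I understand the API, they select a byte range, and the call reports the blocks that overlap that range. The sketch below is plain arithmetic under a simplifying assumption of fixed-size blocks; it does not call Hadoop, the 64 MB block size and 150 MB file length are made-up illustration values, and BlockRange and overlapping are hypothetical names.

```java
class BlockRange {
    // Returns {firstBlock, lastBlock} for the bytes [start, start + len),
    // or an empty array when the range is empty or lies outside the file.
    static long[] overlapping(long fileLen, long blockSize, long start, long len) {
        if (len <= 0 || start < 0 || start >= fileLen) {
            return new long[0];
        }
        // exclusive end of the range, clipped to the end of the file
        long end = start + Math.min(len, fileLen - start);
        long first = start / blockSize;
        long last = (end - 1) / blockSize;
        return new long[] {first, last};
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long blockSize = 64 * mb;   // assumed block size, illustration only
        long fileLen = 150 * mb;    // hypothetical file occupying 3 blocks

        long[] whole = overlapping(fileLen, blockSize, 0, fileLen);
        System.out.println(whole[0] + ".." + whole[1]);  // prints: 0..2

        long[] tail = overlapping(fileLen, blockSize, 130 * mb, 1024);
        System.out.println(tail[0] + ".." + tail[1]);    // prints: 2..2
    }
}
```

Passing 0 and the file length, as FileLoc does, therefore covers every block of the file, while a narrower range would report only the blocks it touches.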

Reference: 《实战Hadoop:开启通向云计算的捷径》, 刘鹏 (Liu Peng)

