
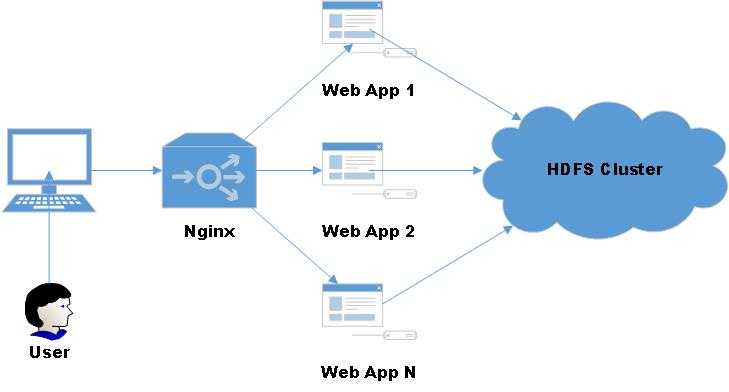

The HDFS API lets you read and write files and directories on HDFS. With this set of APIs you could build a simple knock-off cloud drive (see the figure).

To make this easier, I wrapped the common file read/write operations in a utility class:

    package yjmyzz;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.nio.charset.StandardCharsets;

    /**
     * Created by jimmy on 15/5/20.
     *
     * Note: FileSystem.get(conf) returns a cached instance by default. Most methods
     * below close it after each call, which is fine for a single-threaded demo but
     * would affect any other code sharing the same instance.
     */
    public class HDFSUtil {

        private HDFSUtil() {
        }

        /**
         * Checks whether a path exists.
         *
         * @param conf Hadoop configuration
         * @param path path to check
         * @return true if the path exists
         * @throws IOException
         */
        public static boolean exits(Configuration conf, String path) throws IOException {
            FileSystem fs = FileSystem.get(conf);
            return fs.exists(new Path(path));
        }

        /**
         * Creates a file from a byte array.
         *
         * @param conf     Hadoop configuration
         * @param filePath path of the file to create
         * @param contents bytes to write
         * @throws IOException
         */
        public static void createFile(Configuration conf, String filePath, byte[] contents) throws IOException {
            // try-with-resources closes the stream first, then the file system, even if write() throws
            try (FileSystem fs = FileSystem.get(conf);
                 FSDataOutputStream outputStream = fs.create(new Path(filePath))) {
                outputStream.write(contents);
            }
        }

        /**
         * Creates a file from a string (encoded as UTF-8).
         *
         * @param conf        Hadoop configuration
         * @param filePath    path of the file to create
         * @param fileContent text to write
         * @throws IOException
         */
        public static void createFile(Configuration conf, String filePath, String fileContent) throws IOException {
            // Use an explicit charset instead of the platform default
            createFile(conf, filePath, fileContent.getBytes(StandardCharsets.UTF_8));
        }

        /**
         * Uploads a local file to HDFS.
         *
         * @param conf           Hadoop configuration
         * @param localFilePath  path of the local source file
         * @param remoteFilePath destination path on HDFS
         * @throws IOException
         */
        public static void copyFromLocalFile(Configuration conf, String localFilePath, String remoteFilePath) throws IOException {
            try (FileSystem fs = FileSystem.get(conf)) {
                Path localPath = new Path(localFilePath);
                Path remotePath = new Path(remoteFilePath);
                // First flag (delSrc = true): the local source file is deleted after the copy.
                // Second flag (overwrite = true): an existing destination is overwritten.
                fs.copyFromLocalFile(true, true, localPath, remotePath);
            }
        }

        /**
         * Deletes a directory or file.
         *
         * @param conf           Hadoop configuration
         * @param remoteFilePath path to delete
         * @param recursive      whether to delete subdirectories as well
         * @return true if the delete succeeded
         * @throws IOException
         */
        public static boolean deleteFile(Configuration conf, String remoteFilePath, boolean recursive) throws IOException {
            try (FileSystem fs = FileSystem.get(conf)) {
                return fs.delete(new Path(remoteFilePath), recursive);
            }
        }

        /**
         * Deletes a directory or file (subdirectories, if any, are deleted recursively).
         *
         * @param conf           Hadoop configuration
         * @param remoteFilePath path to delete
         * @return true if the delete succeeded
         * @throws IOException
         */
        public static boolean deleteFile(Configuration conf, String remoteFilePath) throws IOException {
            return deleteFile(conf, remoteFilePath, true);
        }

        /**
         * Renames a file.
         *
         * @param conf        Hadoop configuration
         * @param oldFileName current path
         * @param newFileName new path
         * @return true if the rename succeeded
         * @throws IOException
         */
        public static boolean renameFile(Configuration conf, String oldFileName, String newFileName) throws IOException {
            try (FileSystem fs = FileSystem.get(conf)) {
                return fs.rename(new Path(oldFileName), new Path(newFileName));
            }
        }

        /**
         * Creates a directory.
         *
         * @param conf    Hadoop configuration
         * @param dirName directory path to create
         * @return true if the directory was created
         * @throws IOException
         */
        public static boolean createDirectory(Configuration conf, String dirName) throws IOException {
            try (FileSystem fs = FileSystem.get(conf)) {
                return fs.mkdirs(new Path(dirName));
            }
        }

        /**
         * Reads the content of a file as a UTF-8 string.
         *
         * @param conf     Hadoop configuration
         * @param filePath path of the file to read
         * @return the file content
         * @throws IOException
         */
        public static String readFile(Configuration conf, String filePath) throws IOException {
            String fileContent = null;
            FileSystem fs = FileSystem.get(conf);
            Path path = new Path(filePath);
            InputStream inputStream = null;
            ByteArrayOutputStream outputStream = null;
            try {
                inputStream = fs.open(path);
                outputStream = new ByteArrayOutputStream(inputStream.available());
                IOUtils.copyBytes(inputStream, outputStream, conf);
                // Decode with an explicit charset instead of the platform default
                fileContent = new String(outputStream.toByteArray(), StandardCharsets.UTF_8);
            } finally {
                IOUtils.closeStream(inputStream);
                IOUtils.closeStream(outputStream);
                fs.close();
            }
            return fileContent;
        }
    }
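For readers curious what `readFile` is doing when it hands the stream to `IOUtils.copyBytes`, the pattern is a plain buffered copy followed by a decode. The sketch below reproduces that pattern with only the JDK, using a `ByteArrayInputStream` in place of an HDFS stream. The class name `StreamDrainSketch`, the helper `readAll`, and the 8-byte buffer are made up for illustration; this is not Hadoop's implementation.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

class StreamDrainSketch {

    // Hypothetical helper: copies everything from `in` into memory, then decodes it as UTF-8.
    // bufferSize is assumed to be positive.
    public static String readAll(InputStream in, int bufferSize) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[bufferSize];
        int n;
        // read() returns -1 at end of stream; otherwise the number of bytes placed in buffer
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for the bytes stored in an HDFS file
        byte[] stored = "Hi,hadoop. I love you".getBytes(StandardCharsets.UTF_8);
        try (InputStream in = new ByteArrayInputStream(stored)) {
            System.out.println(readAll(in, 8));
        }
    }
}
```

Encoding and decoding with the same explicit charset on both sides is what keeps the round trip stable across machines with different default encodings.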

A quick test:

    @Test
    public void testHDFSUtil() throws IOException {
        Configuration conf = new Configuration();
        String newDir = "/test";
        // Test: check whether the path exists
        if (HDFSUtil.exits(conf, newDir)) {
            System.out.println(newDir + " already exists!");
        } else {
            // Test: create the directory
            boolean result = HDFSUtil.createDirectory(conf, newDir);
            if (result) {
                System.out.println(newDir + " created successfully!");
            } else {
                System.out.println(newDir + " could not be created!");
            }
        }
        String fileContent = "Hi,hadoop. I love you";
        String newFileName = newDir + "/myfile.txt";
        // Test: create a file
        HDFSUtil.createFile(conf, newFileName, fileContent);
        // Test: read the file content
        System.out.println(HDFSUtil.readFile(conf, newFileName));
        // Test: delete the file
        //System.out.println(HDFSUtil.deleteFile(conf, newDir));
    }

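Note: when testing, don't forget to put a core-site.xml file in the resources directory. Otherwise, when running inside the IDE, the code has no way of knowing which HDFS instance to connect to.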
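One way to confirm that `core-site.xml` is actually visible before blaming the cluster is to ask the class loader for it. This is a minimal sketch, assuming the file is expected on the classpath (which is where Hadoop's `Configuration` looks for it by default); `ClasspathCheckSketch` and `locate` are hypothetical names, not part of the original code.

```java
import java.net.URL;

class ClasspathCheckSketch {

    // Hypothetical helper: returns the URL of a classpath resource, or null if it is not visible.
    public static URL locate(String resourceName) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ClassLoader.getSystemClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        URL url = locate("core-site.xml");
        if (url == null) {
            System.out.println("core-site.xml is NOT on the classpath");
        } else {
            System.out.println("core-site.xml found at " + url);
        }
    }
}
```

If the file is reported as missing when run from the IDE, the resources directory is probably not marked as a resource root or has not been copied to the build output.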


Original article: http://www.cnblogs.com/yjmyzz/p/hadoop-hdfs-api-sample.html