
Setup: the host is a MacBook Pro running two virtual machines, both with Ubuntu.

Configuring the JDK on Ubuntu

1. Download the JDK from the official Sun (now Oracle) website:

http://www.oracle.com/technetwork/java/javase/downloads/jdk7-downloads-1880260.html

2. Extract the downloaded archive:

lixiaojiao@ubuntu:~/software$ tar -zxvf jdk-7u79-linux-x64.tar.gz

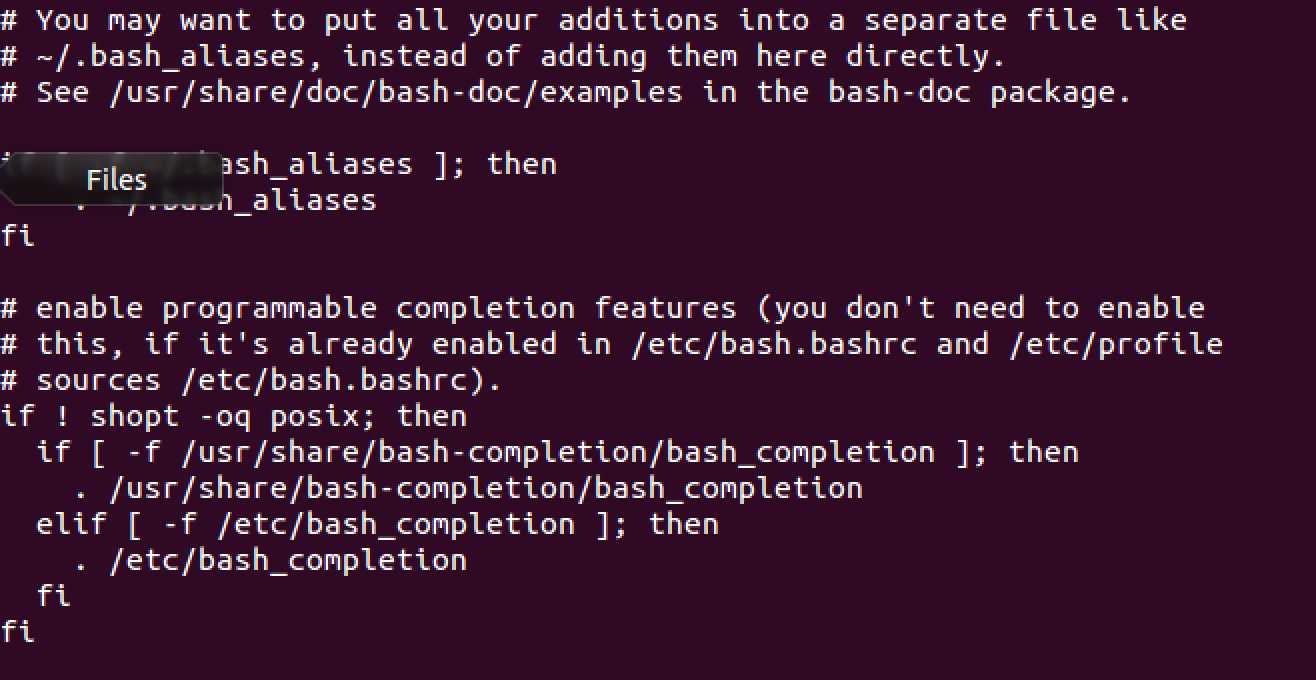

3. Configure the Java environment variables:

lixiaojiao@ubuntu:~/software$ vi ~/.bashrc

Jump to the end of the file and add:

export JAVA_HOME=/home/lixiaojiao/software/jdk1.7.0_79
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib
export PATH=.:$PATH:$JAVA_HOME/bin

Save and exit (as shown in the figure).
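If you would rather not edit ~/.bashrc by hand, the step above can be scripted so that re-running it never duplicates lines. This is a minimal sketch; `add_java_env` is a hypothetical helper name, it uses `CLASSPATH` (the variable the `java` launcher actually reads), and it leaves the current directory `.` out of `PATH`, which is generally considered safer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the JDK variables to a shell startup file,
# skipping any line that is already present so the step can be re-run safely.
add_java_env() {
  local rc_file="$1"     # e.g. ~/.bashrc
  local java_home="$2"   # e.g. /home/lixiaojiao/software/jdk1.7.0_79
  touch "$rc_file"
  local line
  for line in \
    "export JAVA_HOME=$java_home" \
    'export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib' \
    'export PATH=$PATH:$JAVA_HOME/bin'; do
    # -x matches the whole line, -F treats the line as a literal string
    grep -qxF -- "$line" "$rc_file" || printf '%s\n' "$line" >> "$rc_file"
  done
}

# Usage (paths assumed from this post):
# add_java_env ~/.bashrc /home/lixiaojiao/software/jdk1.7.0_79
# source ~/.bashrc
```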

4. The settings do not take effect in the current shell right away; run the following command to apply them:

lixiaojiao@ubuntu:~/software$ source ~/.bashrc

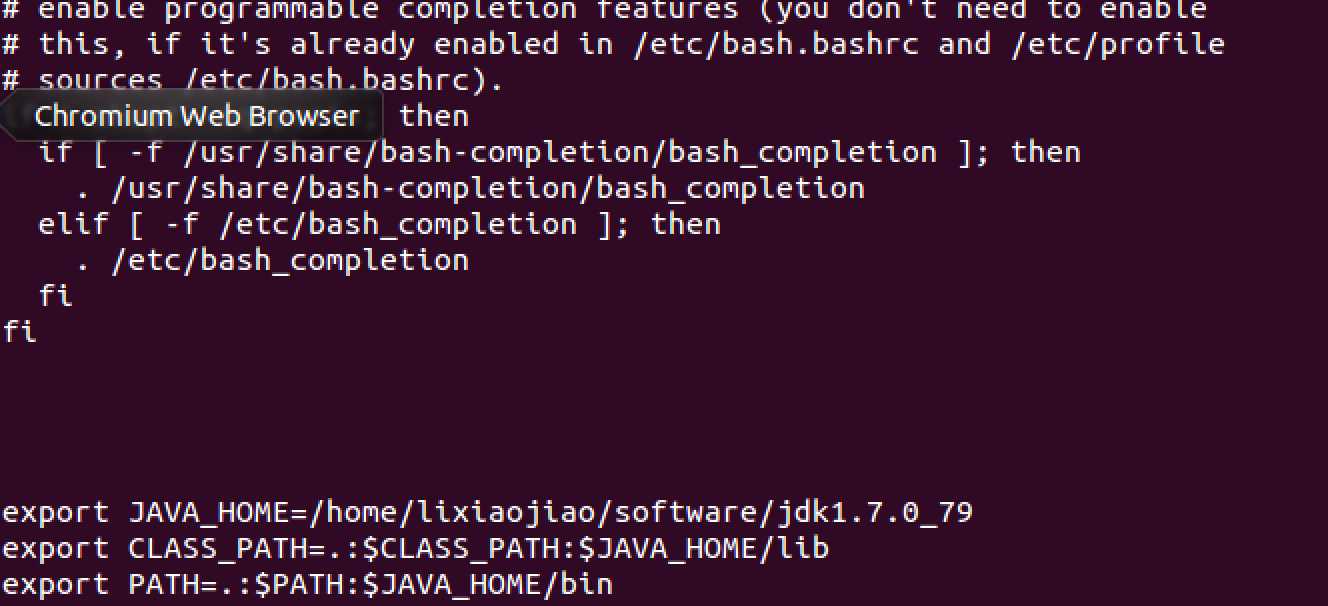

5. Verify the configuration; if the command below prints the JDK version, the configuration succeeded:

lixiaojiao@ubuntu:~/software$ java -version
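Besides `java -version`, it can help to confirm that the `java` on `PATH` really is the one under `JAVA_HOME`, since another JDK installed earlier could shadow it. A small sketch; `check_java_home` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check: confirm that JAVA_HOME points at a JDK with an
# executable bin/java, and warn if the java found on PATH is a different binary.
check_java_home() {
  local home="${1:-$JAVA_HOME}"
  home="${home%/}"                       # drop a trailing slash, if any
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  # command -v prints the path the shell would run for "java"
  local on_path
  on_path=$(command -v java)
  if [ "$on_path" != "$home/bin/java" ]; then
    echo "warning: java on PATH is '$on_path', not $home/bin/java" >&2
  fi
  return 0
}

# Usage:
# check_java_home && java -version
```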

Configuring Hadoop 2.6.1 on the Mac (the Java environment was already configured)

1. Extract the downloaded Hadoop archive:

lixiaojiaodeMacBook-Pro:zipFiles lixiaojiao$ tar -zxvf hadoop-2.6.1.tar.gz

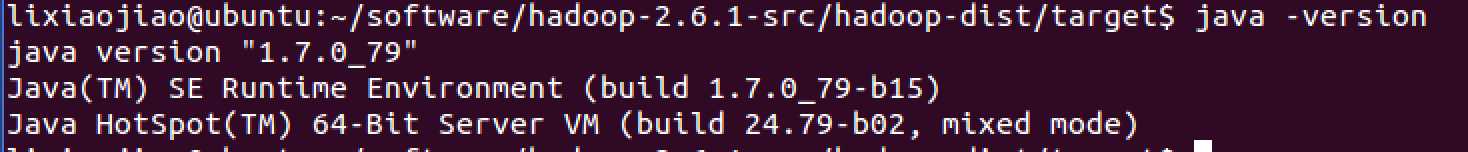

2. I keep everything related to cloud computing in a directory named cloudcomputing. Check the directory structure.

3. Set up passwordless SSH login:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ssh-keygen -t rsa -P ""

Then run:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

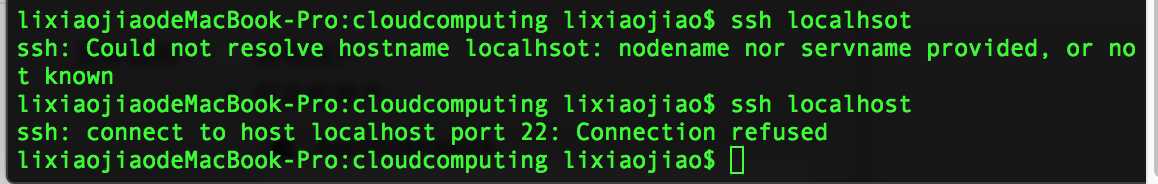

Verify that it works:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ssh localhost

The following appeared, which means the login failed:

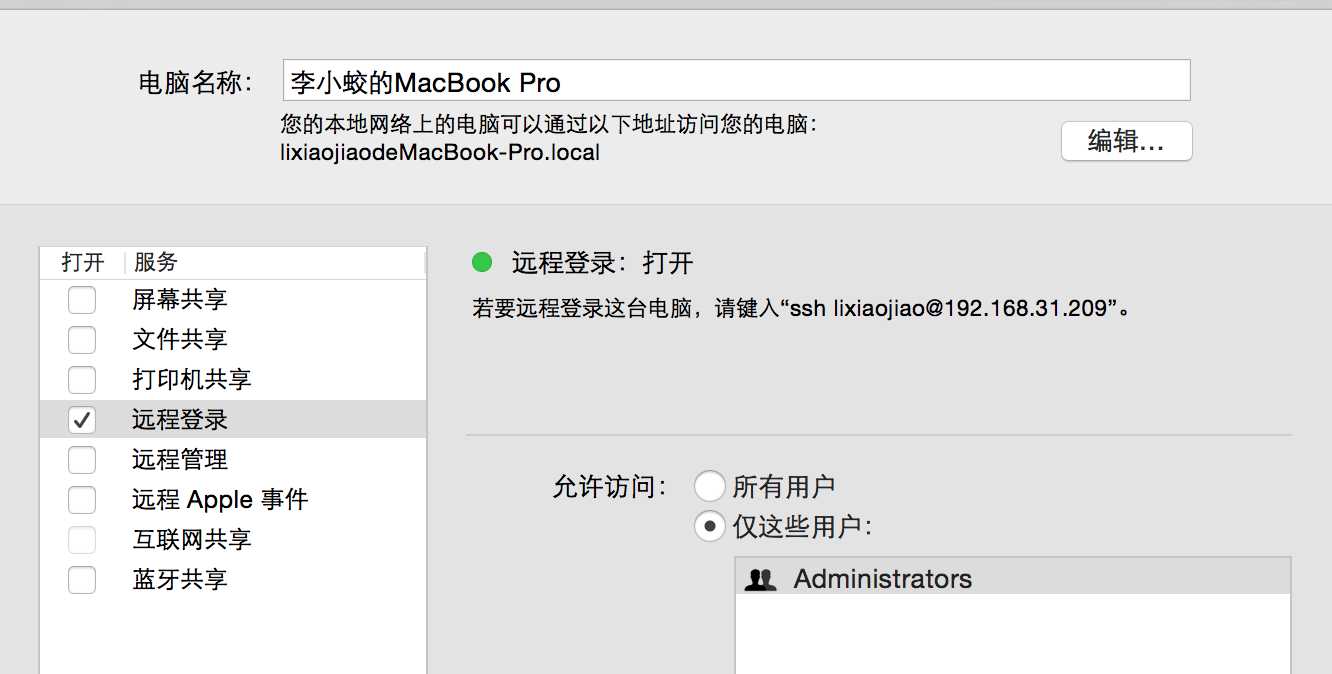

The cause is that Remote Login (the SSH server) was not enabled on the Mac.

Enable it under System Preferences -> Sharing -> Remote Login.

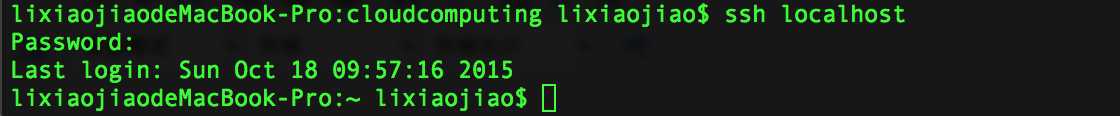

Run the command again:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ssh localhost

The output below shows that the login succeeded:

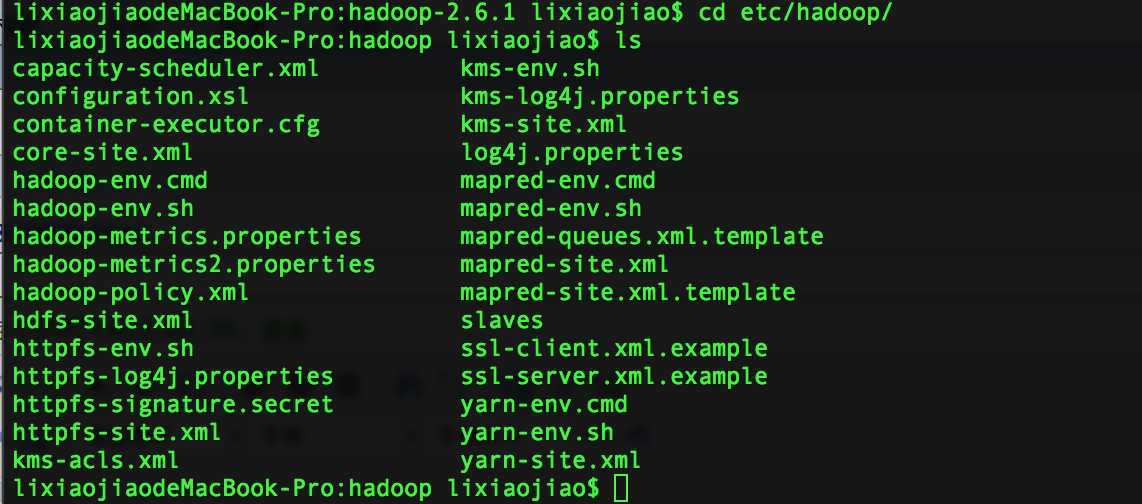
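The `cat ... >> authorized_keys` step above appends the key again every time it is re-run. A sketch of an idempotent variant follows; `authorize_key` is a hypothetical helper. It also tightens permissions, because sshd's default `StrictModes yes` makes it refuse keys in files or directories that are writable by others.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a public key to authorized_keys only once and
# set the permissions sshd expects (700 on ~/.ssh, 600 on authorized_keys).
authorize_key() {
  local pub_key="$1"                 # e.g. ~/.ssh/id_rsa.pub
  local ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  # Append the key line only if the exact line is not already there
  grep -qxF -- "$(cat "$pub_key")" "$auth" || cat "$pub_key" >> "$auth"
  chmod 600 "$auth"
}

# Usage on the Mac:
# [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa
# authorize_key ~/.ssh/id_rsa.pub
# ssh localhost    # should log in without a password prompt
```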

4. Change to the etc directory and look at the configuration files:

lixiaojiaodeMacBook-Pro:cloudcomputing lixiaojiao$ cd hadoop-2.6.1/etc/hadoop/

5. Edit the configuration files

Change to the /Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/etc/hadoop directory.

(1) Configure core-site.xml

lixiaojiaodeMacBook-Pro:hadoop lixiaojiao$ vi core-site.xml

Add the following configuration between <configuration> and </configuration>:

<property>
  <name>fs.default.name</name>
  <value>hdfs://localhost:9000</value>
</property>
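Instead of editing in vi, the whole file can be written in one go. A minimal sketch, assuming the shipped core-site.xml contains nothing you need to keep (this overwrites it); `write_core_site` is a hypothetical name. `fs.default.name` is the older key name; Hadoop 2.x prefers `fs.defaultFS` but still accepts the old one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a complete core-site.xml for the single-node
# setup above instead of editing it by hand.
write_core_site() {
  local conf_dir="$1"   # e.g. .../hadoop-2.6.1/etc/hadoop
  local fs_uri="${2:-hdfs://localhost:9000}"
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- older key name; Hadoop 2.x also accepts fs.defaultFS -->
    <name>fs.default.name</name>
    <value>${fs_uri}</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_core_site /Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/etc/hadoop
```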

(2) Configure yarn-site.xml

Add the following configuration:

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
  <value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>
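A misspelled property name is not rejected by Hadoop; the value is simply never read. One way to catch such typos is to list every `<name>` in the file and compare against the names you meant to set. A sketch with a hypothetical `check_property_names`, assuming one `<name>` element per line. In Hadoop 2.2 and later the shuffle class key is expected to embed the service name, i.e. `yarn.nodemanager.aux-services.mapreduce_shuffle.class`.

```shell
#!/usr/bin/env bash
# Hypothetical check: flag every <name> in a Hadoop config file that is not
# in the list of expected names given as extra arguments.
check_property_names() {
  local file="$1"; shift          # remaining arguments: expected names
  local name expected ok status=0
  while IFS= read -r name; do
    ok=1                          # 1 = not found in the expected list
    for expected in "$@"; do
      [ "$name" = "$expected" ] && ok=0
    done
    if [ "$ok" -ne 0 ]; then
      echo "unexpected property name: $name" >&2
      status=1
    fi
  # sed keeps only the text between <name> and </name> on each line
  done < <(sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$file")
  return $status
}

# Usage for yarn-site.xml:
# check_property_names yarn-site.xml \
#   yarn.nodemanager.aux-services \
#   yarn.nodemanager.aux-services.mapreduce_shuffle.class
```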

(3) Create and configure mapred-site.xml: copy mapred-site.xml.template in this directory to mapred-site.xml and add the following configuration:

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
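The copy-then-edit step can be scripted as well. A sketch with a hypothetical `create_mapred_site`, assuming `</configuration>` sits on its own line in the template; it inserts the property just before that tag and does nothing if the property is already there.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step (3): create mapred-site.xml from the template
# (only if it does not exist yet) and set mapreduce.framework.name to yarn.
create_mapred_site() {
  local conf_dir="$1"
  local target="$conf_dir/mapred-site.xml"
  if [ ! -f "$target" ]; then
    cp "$conf_dir/mapred-site.xml.template" "$target"
  fi
  # Skip if the property is already configured
  grep -q '<name>mapreduce.framework.name</name>' "$target" && return 0
  # Print the property right before the closing tag; writing to a temp file
  # avoids the GNU/BSD difference in "sed -i"
  awk '
    /<\/configuration>/ {
      print "  <property>"
      print "    <name>mapreduce.framework.name</name>"
      print "    <value>yarn</value>"
      print "  </property>"
    }
    { print }
  ' "$target" > "$target.tmp" && mv "$target.tmp" "$target"
}

# Usage:
# create_mapred_site /Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/etc/hadoop
```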

(4) Configure hdfs-site.xml. First create the directories hdfs/data and hdfs/name under /Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/, then add the following configuration:

<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:/Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/hdfs/name</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:/Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1/hdfs/data</value>
</property>

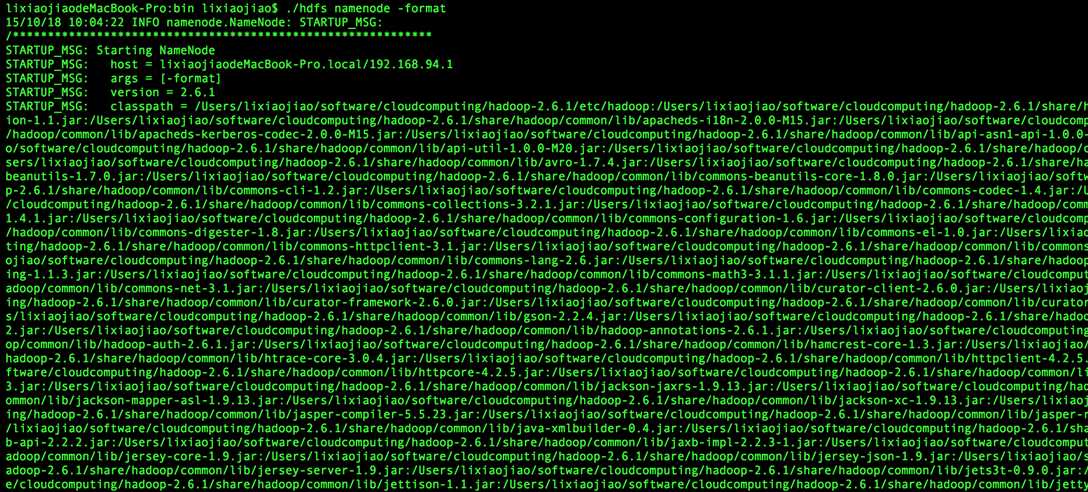
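Creating the two directories and writing hdfs-site.xml can be combined so the paths in the file always match the directories on disk. A sketch with a hypothetical `write_hdfs_site` that takes the Hadoop install directory and overwrites any existing hdfs-site.xml.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step (4): create the NameNode/DataNode directories
# under the Hadoop install and write hdfs-site.xml pointing at them.
write_hdfs_site() {
  local hadoop_home="$1"   # e.g. /Users/lixiaojiao/software/cloudcomputing/hadoop-2.6.1
  mkdir -p "$hadoop_home/hdfs/name" "$hadoop_home/hdfs/data"
  cat > "$hadoop_home/etc/hadoop/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <!-- a single node can hold only one copy of each block -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:${hadoop_home}/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:${hadoop_home}/hdfs/data</value>
  </property>
</configuration>
EOF
}
```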

(5) Format HDFS

lixiaojiaodeMacBook-Pro:bin lixiaojiao$ ./hdfs namenode -format

The output looks like this:

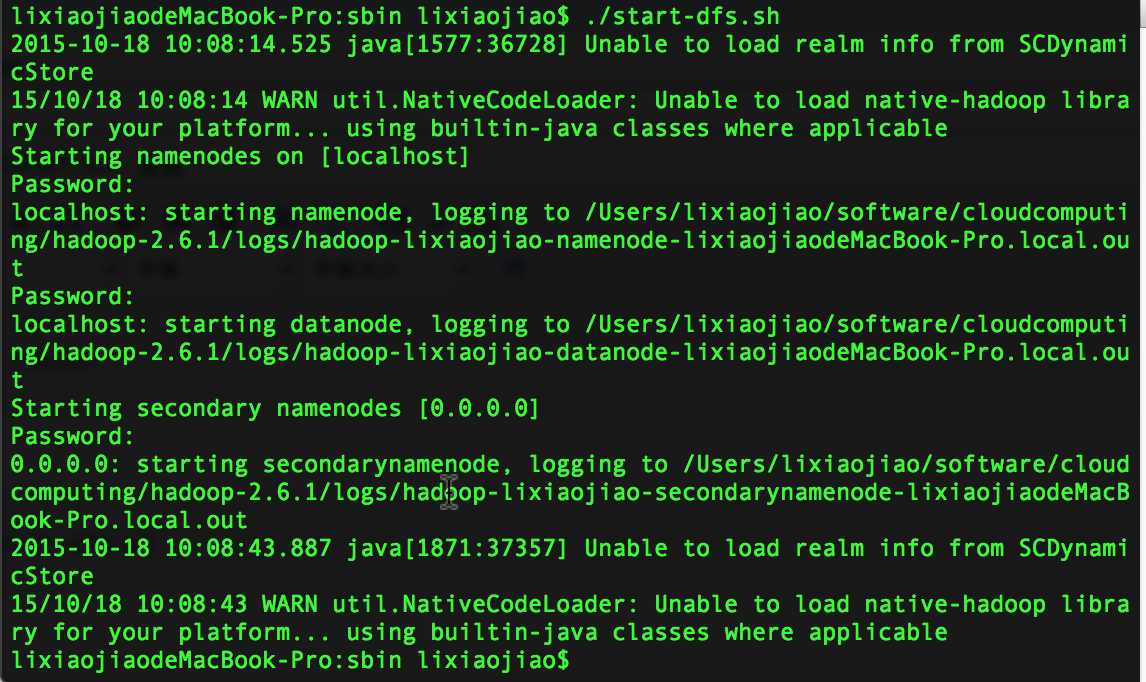
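Formatting should normally happen only once: re-formatting discards the HDFS metadata and gives the NameNode a new clusterID, after which an existing DataNode directory no longer matches it. A sketch of a guard, with a hypothetical `needs_format` and an assumed `HADOOP_HOME` variable pointing at the install directory.

```shell
#!/usr/bin/env bash
# Hypothetical guard: succeed only when the NameNode directory is missing
# or still empty, i.e. it has never been formatted.
needs_format() {
  local name_dir="$1"
  # ls -A lists everything except . and ..; empty output means empty dir
  [ ! -d "$name_dir" ] || [ -z "$(ls -A "$name_dir")" ]
}

# Usage (HADOOP_HOME is an assumed variable):
# if needs_format "$HADOOP_HOME/hdfs/name"; then
#   "$HADOOP_HOME/bin/hdfs" namenode -format
# else
#   echo "NameNode directory already formatted; skipping"
# fi
```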

(6) Start Hadoop

Change to the sbin directory:

lixiaojiaodeMacBook-Pro:bin lixiaojiao$ cd ../sbin/

Run:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ./start-dfs.sh

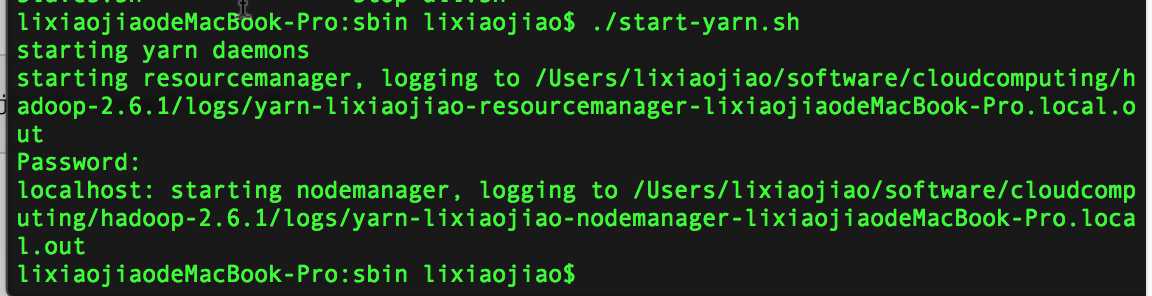

Run:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ./start-yarn.sh

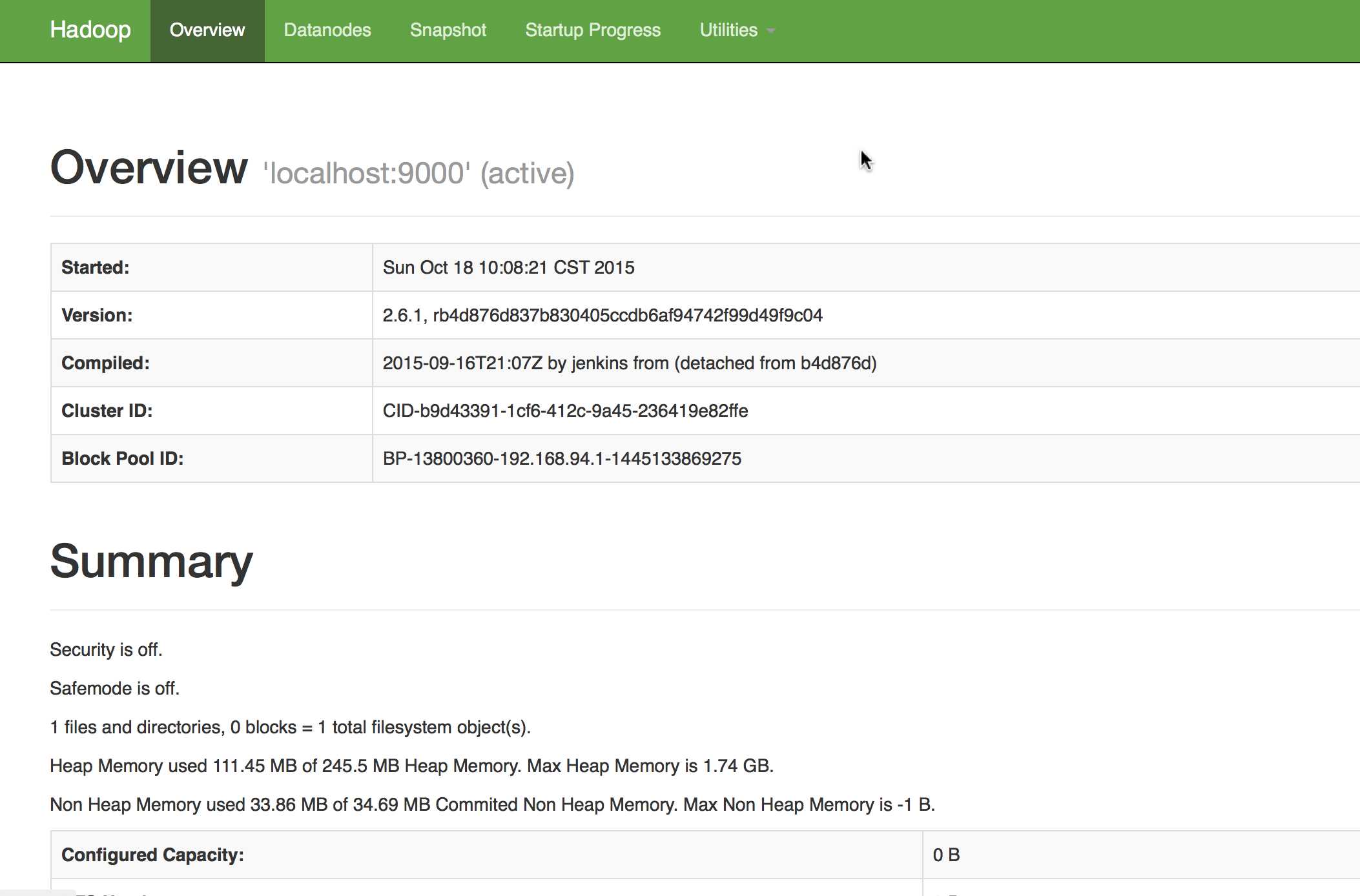

Open http://localhost:50070/ in a browser to see the HDFS management page (NameNode web UI).

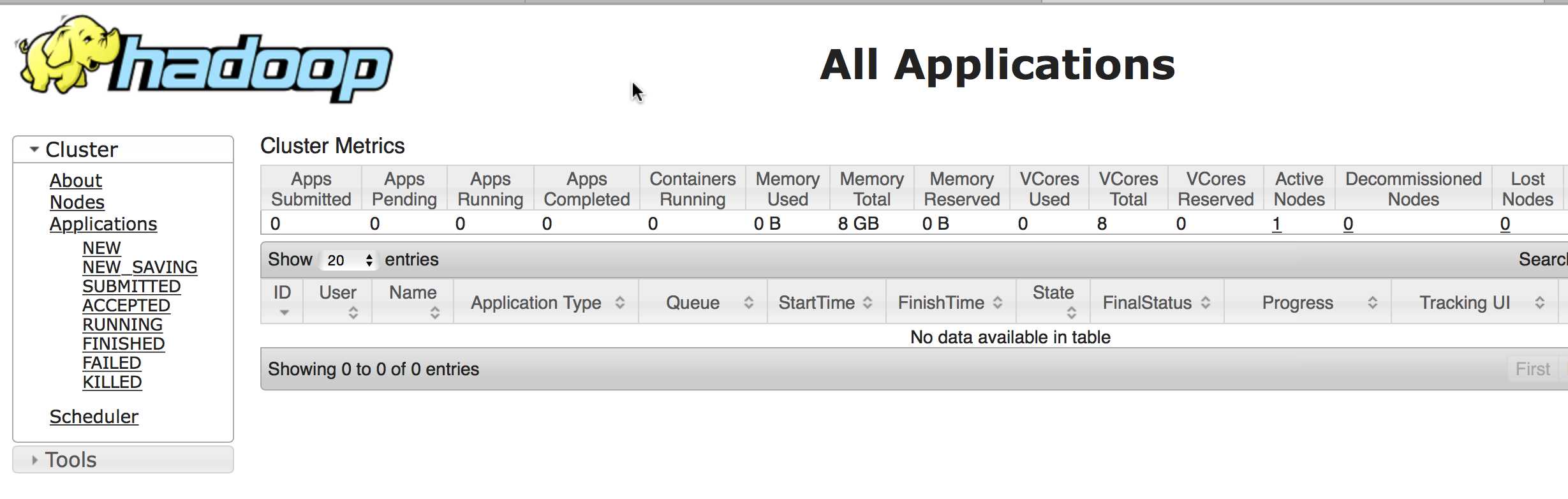

Open http://localhost:8088/ in a browser to see the YARN ResourceManager page, where Hadoop applications are managed.
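Before opening the web pages, the JDK's `jps` tool shows which Java daemons are running. For this single-node setup one would expect NameNode, DataNode and SecondaryNameNode from start-dfs.sh plus ResourceManager and NodeManager from start-yarn.sh. A sketch with a hypothetical `missing_daemons` that takes the `jps` output as text.

```shell
#!/usr/bin/env bash
# Hypothetical check: print each expected daemon that does not appear in
# the given jps output (one name per line; nothing printed if all are up).
missing_daemons() {
  local jps_output="$1"
  local d
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClassName>"; -w keeps "NameNode" from matching
    # inside "SecondaryNameNode"
    printf '%s\n' "$jps_output" | grep -qw -- "$d" || echo "$d"
  done
}

# Usage:
# missing=$(missing_daemons "$(jps)")
# [ -z "$missing" ] && echo "all daemons running" || echo "not running: $missing"
```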

When running step (6),

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ./start-dfs.sh

the following messages appeared:

computing/hadoop-2.6.1/logs/hadoop-lixiaojiao-secondarynamenode-lixiaojiaodeMacBook-Pro.local.out
2015-10-18 10:08:43.887 java[1871:37357] Unable to load realm info from SCDynamicStore
15/10/18 10:08:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
lixiaojiaodeMacBook-Pro:sbin lixiaojiao$

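The cause is that the .so files in the official lib directory were compiled on a 32-bit system, while my Mac is 64-bit, so the native library has to be rebuilt from source on a 64-bit machine. (The WARN itself is not fatal: as the message says, Hadoop falls back to its built-in Java classes.) I tried building from source for a long time without success and eventually gave up. Instead, I downloaded a 64-bit build compiled by someone else, at http://yun.baidu.com/s/1c0rfIOo#dir/path=%252Fbuilder, together with the regular 32-bit Hadoop package from http://www.aboutyun.com/thread-6658-1-1.html. After both downloads finished, I replaced the native directory under lib with the native directory from the 64-bit build and redid the configuration above. (The commands from here on are run from a hadoop-2.2.0 directory.)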
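To see what a native library was actually built for, the standard `file` command reports the object format and word size. Note that on macOS the JVM loads Mach-O libraries, so an ELF `.so` built on Linux cannot be loaded there whatever its word size. The sketch below uses a hypothetical `lib_word_size`; the library file name in the usage line is assumed from a typical Hadoop 2.x layout, and `hadoop checknative -a` is only available in Hadoop versions that ship that subcommand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the word size of a native library from the
# output of the standard "file" command.
lib_word_size() {
  case "$1" in
    *"ELF 64-bit"*|*"Mach-O 64-bit"*) echo 64 ;;
    *"ELF 32-bit"*|*"Mach-O"*i386*)   echo 32 ;;
    *)                                echo unknown ;;
  esac
}

# Usage (library file name assumed):
# lib_word_size "$(file "$HADOOP_HOME/lib/native/libhadoop.so.1.0.0")"
# hadoop checknative -a   # if available: lists which native libraries load
```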

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ./start-all.sh

Running the command above again produced the following problem:

lixiaojiaodeMacBook-Pro:sbin lixiaojiao$ ./start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
2015-10-19 21:18:29.414 java[5782:72819] Unable to load realm info from SCDynamicStore

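The fixes suggested online did not help, so in the end I replaced the JDK: I reinstalled it and pointed JAVA_HOME at the new version: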

#export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.7.0_25.jdk/Contents/Home

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.7.0_79.jdk/Contents/Home/
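On macOS, the `/usr/libexec/java_home -v <version>` tool prints the home directory of an installed JDK, which avoids hard-coding the update number that changed here (1.7.0_25 to 1.7.0_79). A sketch with a hypothetical `pick_java_home` that falls back to a given path where that tool does not exist (for example on Ubuntu); check the printed path before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask macOS's java_home tool for a JDK version's home,
# falling back to a fixed path when the tool is not present.
pick_java_home() {
  local version="$1" fallback="$2" home
  if [ -x /usr/libexec/java_home ]; then
    home=$(/usr/libexec/java_home -v "$version" 2>/dev/null)
  fi
  home="${home:-$fallback}"
  # Strip a trailing slash so "$JAVA_HOME/bin" never contains "//"
  printf '%s\n' "${home%/}"
}

# Usage (e.g. in a shell startup file on the Mac):
# export JAVA_HOME=$(pick_java_home 1.7 /Library/Java/JavaVirtualMachines/jdk1.7.0_79.jdk/Contents/Home)
```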

Verify that it works:

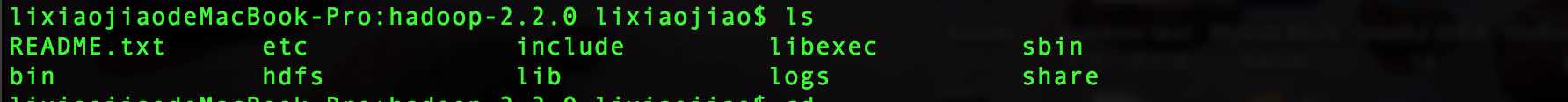

Create the input directory:

lixiaojiaodeMacBook-Pro:hadoop-2.2.0 lixiaojiao$ hadoop fs -mkdir -p input

Upload a local file to HDFS:

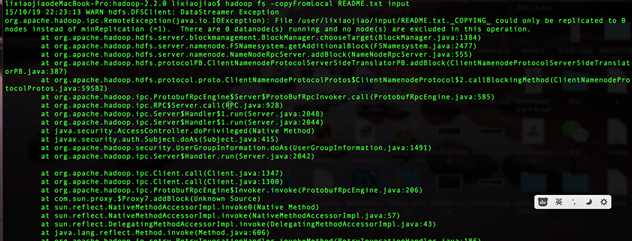

lixiaojiaodeMacBook-Pro:cloudcomputing lixiaojiao$ hadoop fs -copyFromLocal README.txt input

This produced a new problem, shown below.

The fix was to change the address in fs.default.name to 127.0.0.1 (that is, hdfs://127.0.0.1:9000).

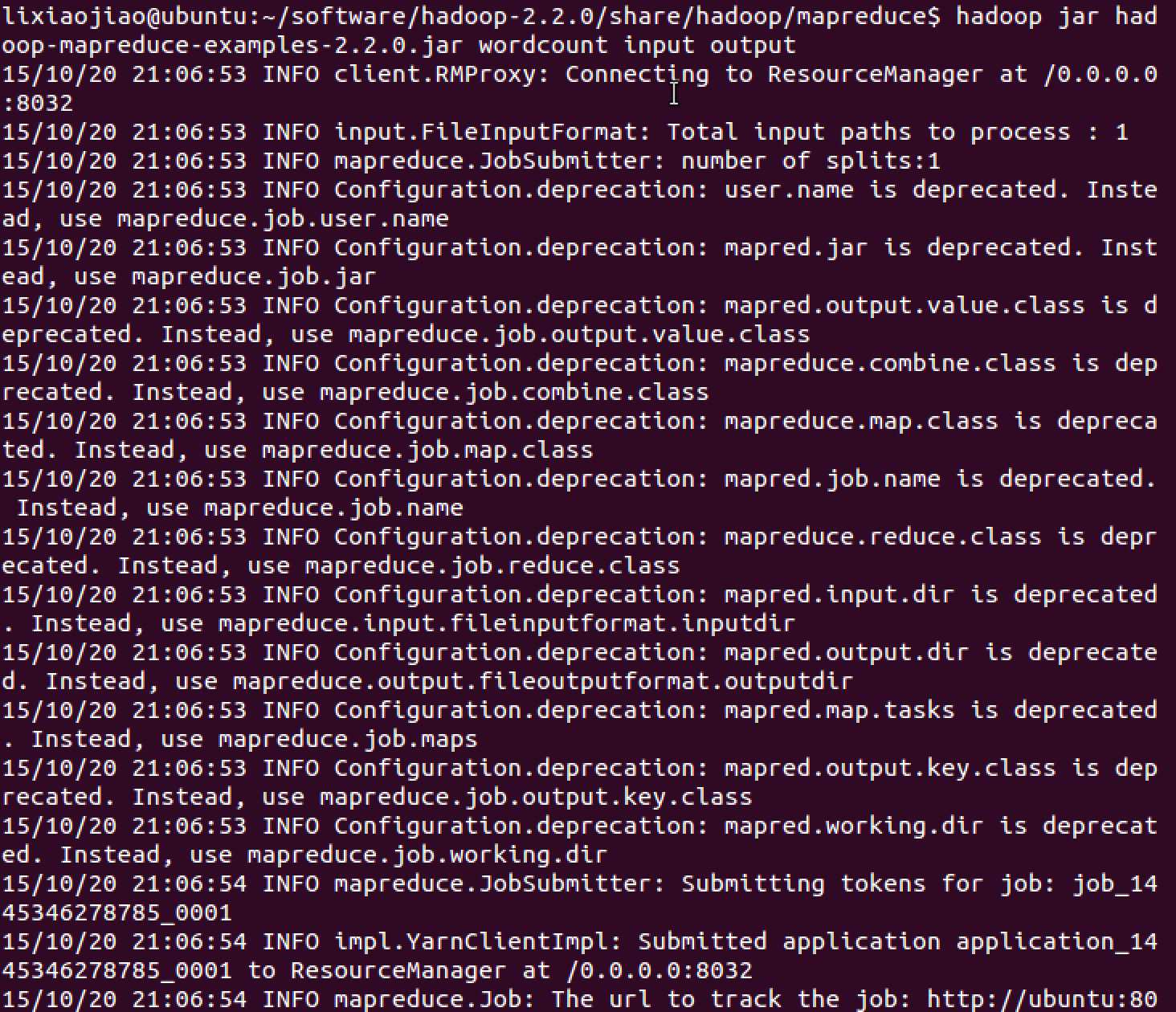

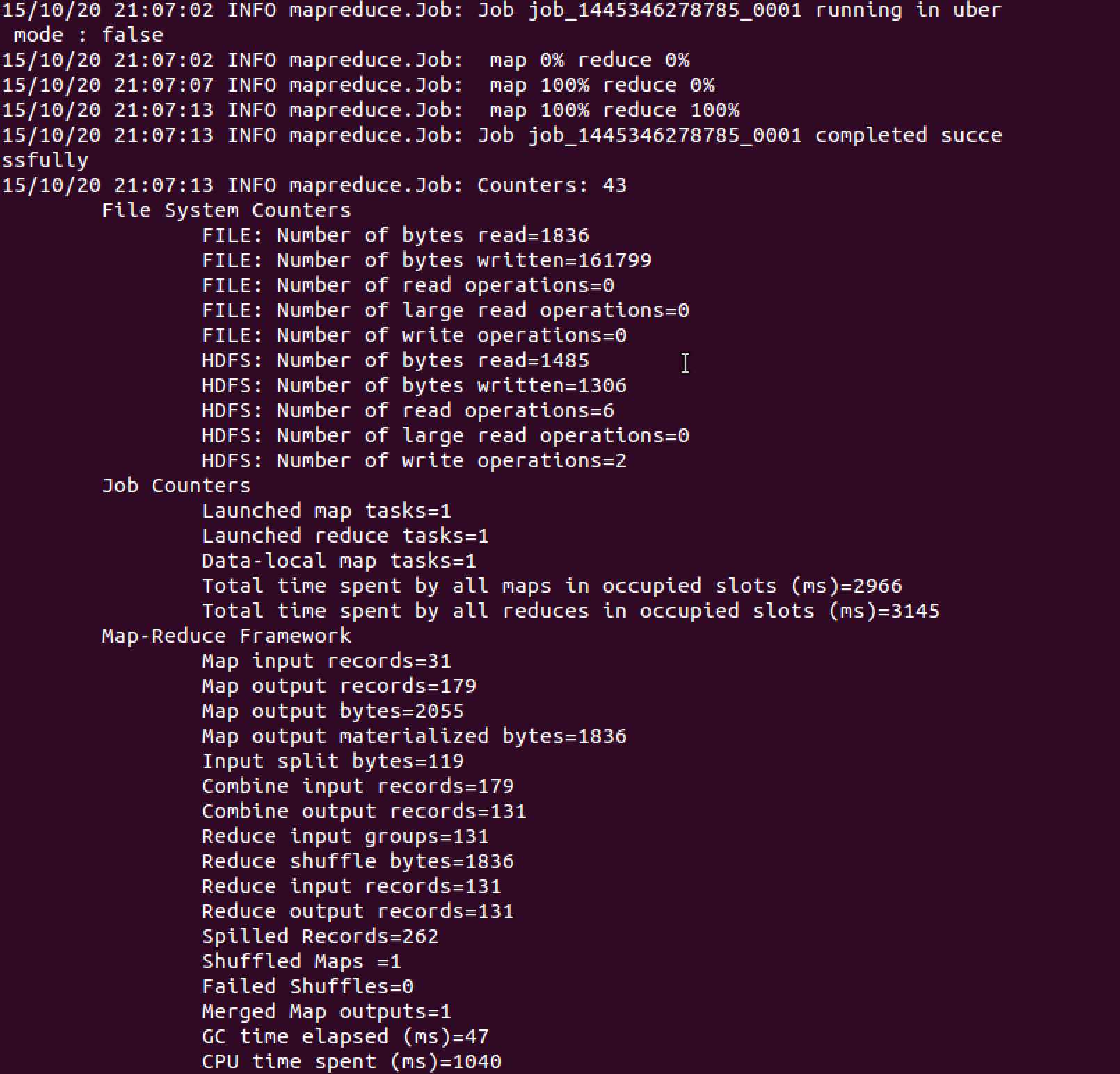
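The address change can be made with `sed`. Because `sed -i` takes different arguments on macOS (BSD sed) and Ubuntu (GNU sed), this sketch writes to a temporary file and moves it back; `use_loopback_ip` is a hypothetical name, and HDFS has to be restarted afterwards for the NameNode to pick up the new address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite hdfs://localhost:<port> to
# hdfs://127.0.0.1:<port> in a Hadoop config file.
use_loopback_ip() {
  local file="$1"
  # Temp file + mv works the same with BSD and GNU sed
  sed 's#hdfs://localhost:#hdfs://127.0.0.1:#' "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage:
# use_loopback_ip "$HADOOP_HOME/etc/hadoop/core-site.xml"
# then restart HDFS: ./stop-dfs.sh && ./start-dfs.sh
```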

Change to the share/hadoop/mapreduce directory and run:

hadoop jar hadoop-mapreduce-examples-2.2.0.jar wordcount input output

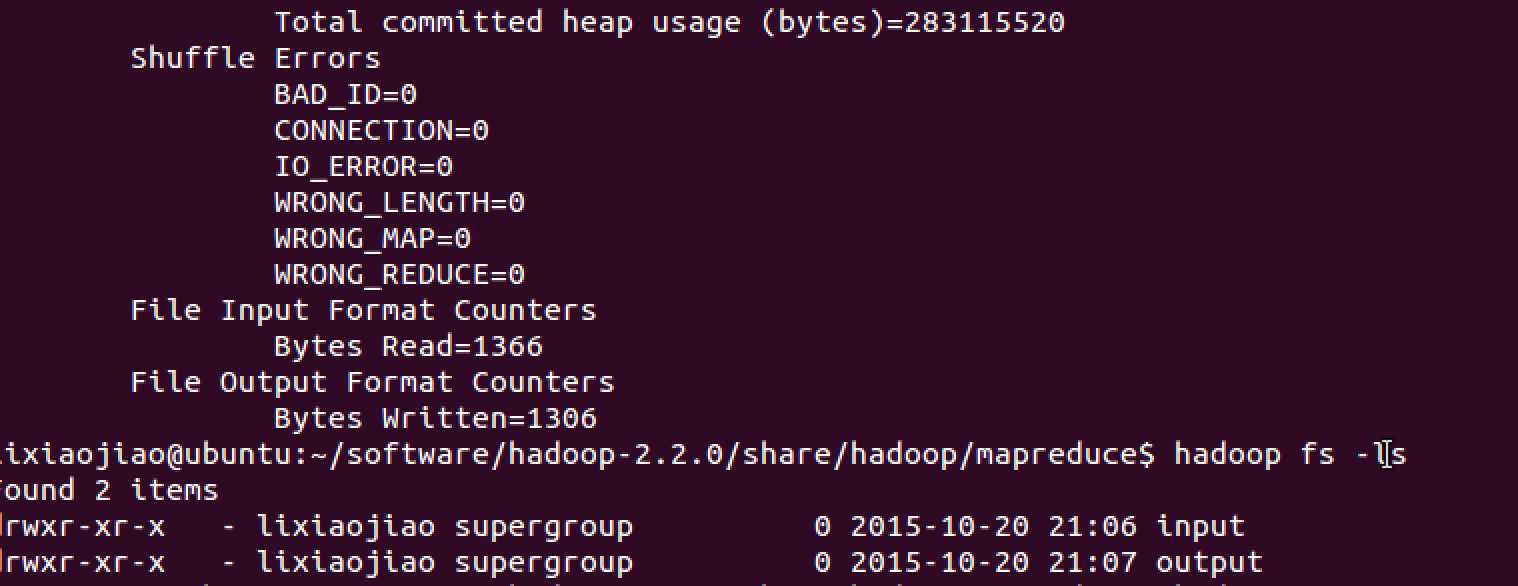

Run the following command to check whether the output directory was created:

hadoop fs -ls

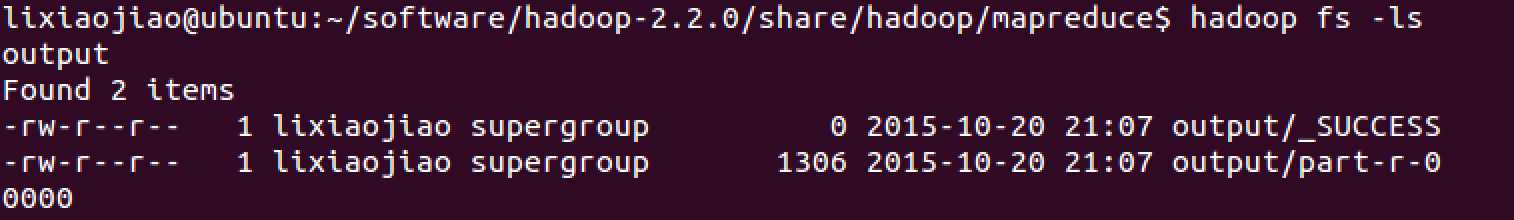

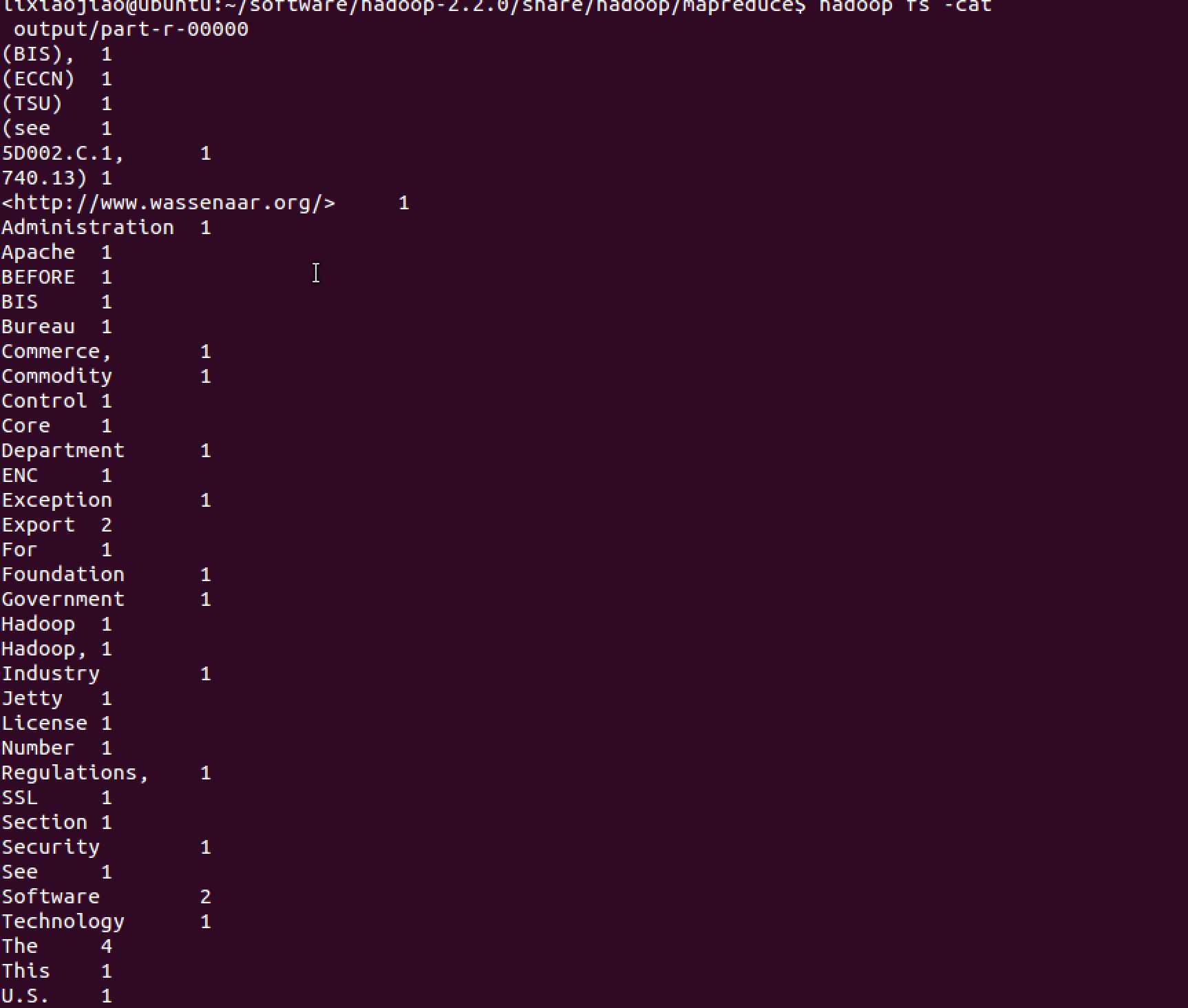

Run the following command to view the actual results:

hadoop fs -cat output/*
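The wordcount output consists of lines of the form `<word><TAB><count>`, sorted by word. To see the most frequent words instead, pipe it through `sort`. A sketch with a hypothetical `top_words`. Note that wordcount refuses to write into an existing output directory, so remove it with `hadoop fs -rm -r output` before re-running the job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the N most frequent words from wordcount output
# read on stdin (lines of the form "<word><TAB><count>").
top_words() {
  local n="${1:-10}"
  # -t sets TAB as the field separator; -k2,2nr sorts by count, descending
  sort -t "$(printf '\t')" -k2,2nr | head -n "$n"
}

# Usage:
# hadoop fs -cat output/part-r-00000 | top_words 5
```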

For convenience, I then copied the configured Hadoop directory directly to the other Ubuntu systems with scp, which requires SSH to be enabled on the Ubuntu machines:

lixiaojiaodeMacBook-Pro:cloudcomputing lixiaojiao$ scp -r hadoop-2.2.0 lixiaojiao@192.168.31.126:/home/lixiaojiao/software

lixiaojiaodeMacBook-Pro:cloudcomputing lixiaojiao$ scp -r hadoop-2.2.0 lixiaojiao@192.168.31.218:/home/lixiaojiao/software

Then verify the setup on each machine by running the wordcount program above.
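With more VMs, the scp commands above can be looped. A sketch with a hypothetical `copy_hadoop_to`; setting `DRY_RUN=1` prints the commands instead of running them. Any Mac-specific paths in the copied configuration, such as the /Users/... HDFS directories in hdfs-site.xml, would presumably need adjusting on the Ubuntu side.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a directory to the same destination on several
# hosts with scp. DRY_RUN=1 prints the commands instead of running them.
copy_hadoop_to() {
  local src="$1" dest_dir="$2"; shift 2   # remaining arguments: user@host
  local target
  for target in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -r "$src" "$target:$dest_dir"
    else
      scp -r "$src" "$target:$dest_dir" || return 1
    fi
  done
}

# Usage with the two VMs above:
# copy_hadoop_to hadoop-2.2.0 /home/lixiaojiao/software \
#   lixiaojiao@192.168.31.126 lixiaojiao@192.168.31.218
```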


Original article: http://www.cnblogs.com/lixiaojiao-hit/p/4886899.html