标签:

1 from HTMLParser import HTMLParser 2 import urllib2 3 import re 4 from time import sleep 5 6 7 class MyHTMLParser(HTMLParser): 8 def __init__(self): 9 HTMLParser.__init__(self) 10 self.links = [] 11 12 def handle_starttag(self, tag, attrs): 13 #print "Encountered the beginning of a %s tag" % tag 14 if tag == "a": 15 if len(attrs) == 0: pass 16 else: 17 for (variable,value) in attrs: 18 if variable == ‘href‘: 19 if value.find(‘/vul/list/page/‘)+1: 20 self.links.append(value) 21 22 class PageContentParser(HTMLParser): 23 def __init__(self): 24 HTMLParser.__init__(self) 25 self.flag = 0 26 self.urllist = [] # url 27 self.title = [] #title 28 def handle_starttag(self, tag, attrs): 29 #print "Encountered the beginning of a %s tag" % tag 30 if tag == "a": 31 if len(attrs) == 0: pass 32 else: 33 for (variable,value) in attrs: 34 if value.find(‘/vul/info/qid/QTVA‘)+1: 35 self.flag = 1 36 self.urllist.append(value) 37 #url write to native 38 sleep(0.5) 39 f = open("./butian.html",‘a+‘) 40 f.write("http://loudong.360.cn"+value+"---------") 41 f.close 42 def handle_data(self,data): 43 if self.flag == 1: 44 y = data.decode("utf-8") 45 k = y.encode("gb18030") 46 self.title.append(k) 47 self.flag = 0 48 #storage to native 49 sleep(0.5) 50 f = open(‘./butian.html‘,‘a+‘) 51 f.write(k+"<br>") 52 f.close 53 54 55 56 if __name__ == "__main__": 57 print "start........" 58 content = urllib2.urlopen("http://loudong.360.cn/vul/list/").read() 59 hp = MyHTMLParser() 60 hp.feed(content) 61 num = int(filter(str.isdigit, hp.links[-1])) #get the total page 62 hp.close() 63 print num 64 for i in range(num): 65 page = i + 1 66 pagecontent = urllib2.urlopen("http://loudong.360.cn/vul/list/page/"+str(page)).read() 67 page = PageContentParser() 68 page.feed(pagecontent) 69 page.close()

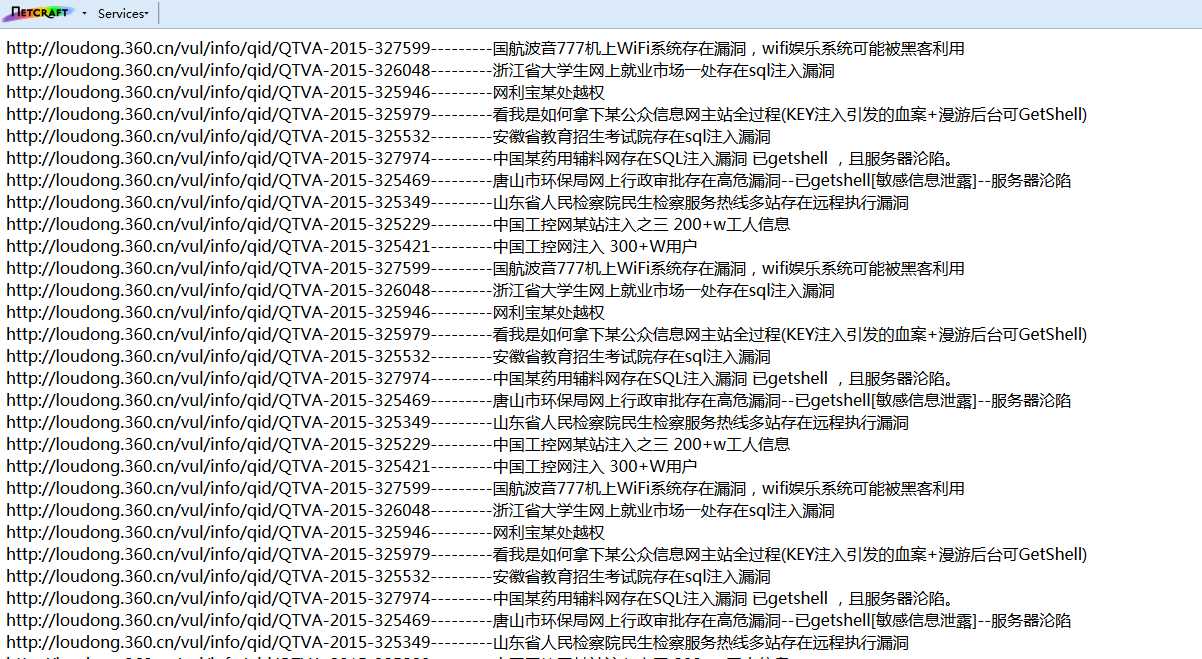

效果如下:

标签:

原文地址:http://www.cnblogs.com/elliottc/p/4964504.html