
```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://Master.Hadoop:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>4096</value>
  </property>
</configuration>
```
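`fs.defaultFS` is a URI: the scheme (`hdfs`) selects the file system and the authority names the NameNode host and RPC port. The minimal sketch below uses only `java.net.URI` (not Hadoop's own parsing code) to show how the value above splits into those parts; the class `DefaultFsParts` is hypothetical. It also illustrates a common pitfall: a hostname containing an underscore is not a valid server-based authority for `java.net.URI`, so `getHost()` returns `null`.

```java
import java.net.URI;

// Hypothetical helper: decomposes an fs.defaultFS value with java.net.URI.
public class DefaultFsParts {

    // NameNode host named in the URI; null if the host is not a valid hostname (e.g. contains '_').
    static String host(String defaultFs) {
        return URI.create(defaultFs).getHost();
    }

    // NameNode RPC port, or -1 when the URI does not specify one.
    static int port(String defaultFs) {
        return URI.create(defaultFs).getPort();
    }

    public static void main(String[] args) {
        String defaultFs = "hdfs://Master.Hadoop:9000"; // value from the configuration above
        System.out.println(URI.create(defaultFs).getScheme() + " " + host(defaultFs) + " " + port(defaultFs));
    }
}
```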

```xml
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///home/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///home/hadoop/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.nameservices</name>
    <value>hadoop-cluster1</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>Master.Hadoop:50090</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
```
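With `dfs.replication` set to 2, every HDFS block is stored on two DataNodes, so raw disk consumption is roughly twice the logical data size. HDFS also places at most one replica of a block on any single DataNode, so a cluster with fewer live DataNodes than the replication factor leaves blocks under-replicated. The back-of-the-envelope sketch below captures both points; the class and method names are hypothetical and it ignores metadata and temporary-file overhead.

```java
// Hypothetical estimate of raw DataNode storage at a given dfs.replication.
public class ReplicationEstimate {

    // Each block is stored `replication` times, so raw usage scales linearly.
    static long rawBytes(long logicalBytes, int replication) {
        return logicalBytes * replication;
    }

    // At most one replica per DataNode: fewer DataNodes than dfs.replication
    // caps the number of copies that can actually exist.
    static int effectiveReplicas(int replication, int liveDataNodes) {
        return Math.min(replication, liveDataNodes);
    }

    public static void main(String[] args) {
        long oneGiB = 1L << 30;
        System.out.println(rawBytes(oneGiB, 2));     // 2147483648
        System.out.println(effectiveReplicas(2, 1)); // 1
    }
}
```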

11.4 vi mapred-site.xml (if it does not exist, copy it from the template file)

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
    <final>true</final>
  </property>

  <!-- MRv1 (JobTracker) setting; not used when mapreduce.framework.name is yarn. -->
  <property>
    <name>mapreduce.jobtracker.http.address</name>
    <value>Master.Hadoop:50030</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>Master.Hadoop:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>Master.Hadoop:19888</value>
  </property>
  <!-- MRv1 setting; expects host:port without a scheme, and is not used under YARN. -->
  <property>
    <name>mapred.job.tracker</name>
    <value>Master.Hadoop:9001</value>
  </property>
</configuration>
```
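Step 11.4 only creates `mapred-site.xml` when it is missing. In Hadoop 2.x the configuration directory (`$HADOOP_HOME/etc/hadoop`) ships a `mapred-site.xml.template` for this purpose; normally a shell `cp` is enough. The Java sketch below expresses the same "copy only if absent" rule with `java.nio.file`; the class name `EnsureMapredSite` and the default relative path `etc/hadoop` are assumptions for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: create mapred-site.xml from its template only if it does not exist yet.
public class EnsureMapredSite {

    // Returns true if the template was copied, false if mapred-site.xml already existed.
    static boolean ensure(Path confDir) throws IOException {
        Path site = confDir.resolve("mapred-site.xml");
        Path template = confDir.resolve("mapred-site.xml.template");
        if (Files.exists(site)) {
            return false; // never overwrite an existing, possibly edited, file
        }
        Files.copy(template, site); // throws NoSuchFileException if the template is missing
        return true;
    }

    public static void main(String[] args) throws IOException {
        // Assumed location; pass your $HADOOP_HOME/etc/hadoop as the first argument.
        Path confDir = Paths.get(args.length > 0 ? args[0] : "etc/hadoop");
        System.out.println(ensure(confDir) ? "copied template" : "mapred-site.xml already exists");
    }
}
```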

11.5 vi yarn-site.xml

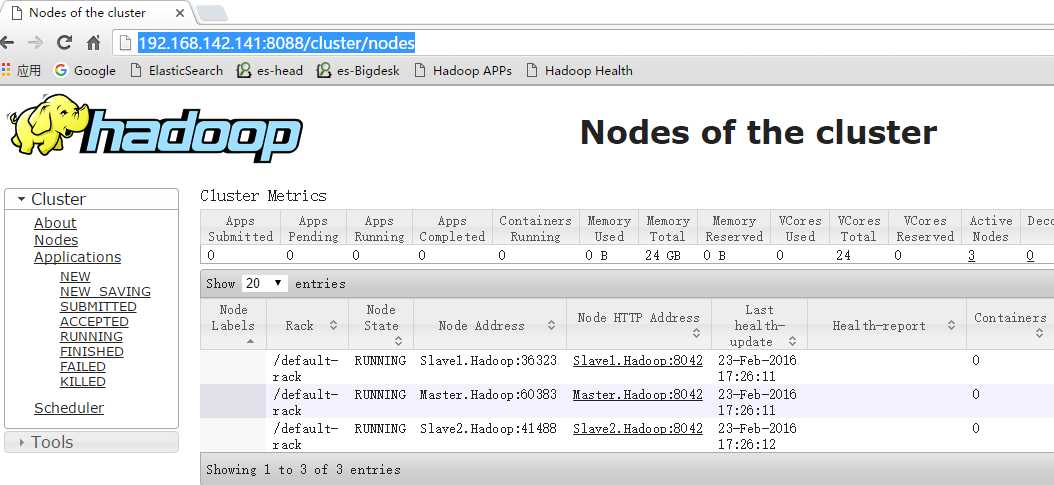

```xml
<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>

  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>Master.Hadoop</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>Master.Hadoop:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>Master.Hadoop:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>Master.Hadoop:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>Master.Hadoop:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>Master.Hadoop:8088</value>
  </property>
</configuration>
```
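In Hadoop 2.x, `yarn-default.xml` defines the ResourceManager addresses in terms of the hostname, e.g. `yarn.resourcemanager.address` defaults to `${yarn.resourcemanager.hostname}:8032` and `yarn.resourcemanager.webapp.address` to `${yarn.resourcemanager.hostname}:8088`. So once `yarn.resourcemanager.hostname` is set, the explicit address properties above mostly restate the defaults. The sketch below is a simplified, single-pass illustration of that `${name}` expansion; it is not Hadoop's `Configuration` implementation (which, among other things, also resolves nested references), and the class `VarExpansion` is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified illustration of ${name} substitution in configuration values.
public class VarExpansion {

    private static final Pattern VAR = Pattern.compile("\\$\\{([^}]+)\\}");

    // Replaces each ${key} with props.get(key); unknown keys are left as-is. Single pass only.
    static String expand(String value, Map<String, String> props) {
        Matcher m = VAR.matcher(value);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String replacement = props.getOrDefault(m.group(1), m.group(0));
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new HashMap<>();
        props.put("yarn.resourcemanager.hostname", "Master.Hadoop");
        // Default value templates as written in yarn-default.xml.
        props.put("yarn.resourcemanager.address", "${yarn.resourcemanager.hostname}:8032");
        props.put("yarn.resourcemanager.webapp.address", "${yarn.resourcemanager.hostname}:8088");
        System.out.println(expand(props.get("yarn.resourcemanager.address"), props));        // Master.Hadoop:8032
        System.out.println(expand(props.get("yarn.resourcemanager.webapp.address"), props)); // Master.Hadoop:8088
    }
}
```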


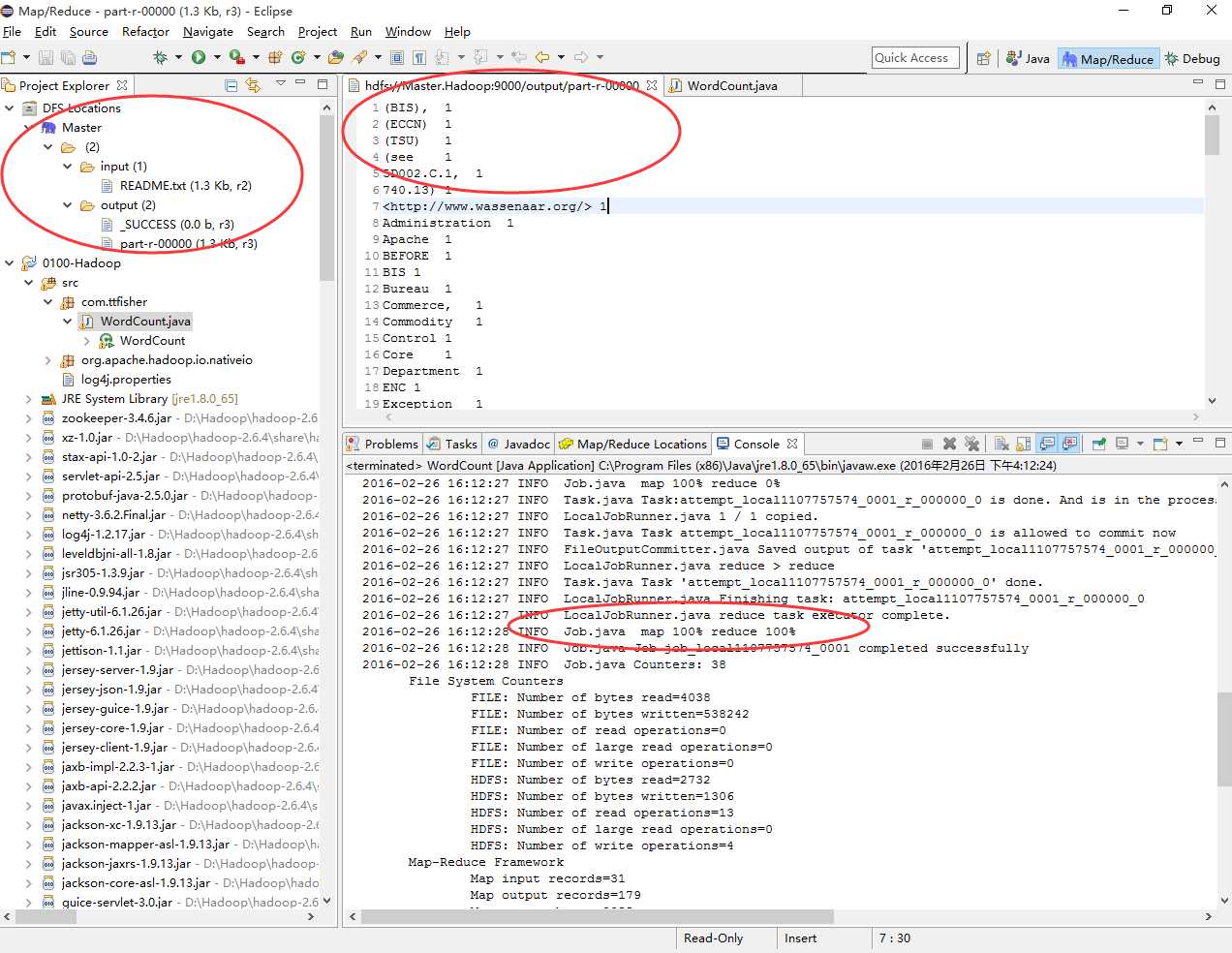

```java
package com.ttfisher;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Emits (word, 1) for every whitespace-separated token in an input line.
    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Sums the counts for each word; also registered as the combiner.
    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Consume generic Hadoop options (-D, -fs, ...) and keep the job's own arguments.
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2);
        }

        // Job.getInstance replaces the deprecated new Job(conf, name) constructor.
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
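To see what the job does without a cluster, the in-memory sketch below mimics the same data flow in plain Java: a map step emitting `(word, 1)` pairs with `StringTokenizer` as `TokenizerMapper` does, a per-split combine step, and a reduce step that merges partial sums sorted by key. Reusing `IntSumReducer` as the combiner is safe because summing partial sums gives the same total (addition is associative and commutative). Treating each line as its own "split" is a simplification, and the class `LocalWordCount` is hypothetical; it does not use any Hadoop API.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// In-memory sketch of WordCount's map -> combine -> reduce flow (no Hadoop involved).
public class LocalWordCount {

    // Map: one (word, 1) pair per whitespace-separated token.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<>(itr.nextToken(), 1));
        }
        return pairs;
    }

    // Combine: pre-sum the pairs of a single split locally.
    static Map<String, Integer> combine(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> partial = new TreeMap<>();
        for (Map.Entry<String, Integer> kv : pairs) {
            partial.merge(kv.getKey(), kv.getValue(), Integer::sum);
        }
        return partial;
    }

    // Reduce: merge partial sums from all splits, grouped and sorted by key.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map<String, Integer> partial : partials) {
            partial.forEach((word, n) -> totals.merge(word, n, Integer::sum));
        }
        return totals;
    }

    // Each input line is treated as its own split for illustration.
    static Map<String, Integer> countWords(List<String> lines) {
        List<Map<String, Integer>> partials = new ArrayList<>();
        for (String line : lines) {
            partials.add(combine(map(line)));
        }
        return reduce(partials);
    }

    public static void main(String[] args) {
        System.out.println(countWords(Arrays.asList("hello hadoop", "hello world")));
        // prints {hadoop=1, hello=2, world=1}
    }
}
```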

20.3 [Run arguments]



Original article: http://www.cnblogs.com/bigshushu/p/5367959.html