
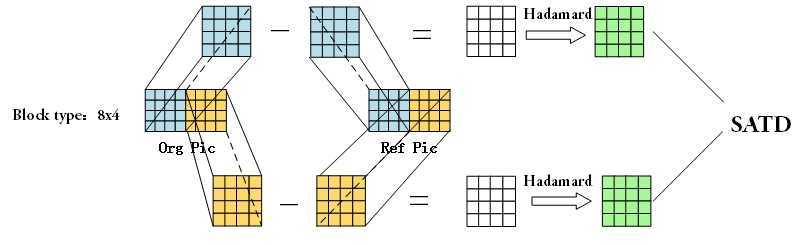

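During the half-pel and quarter-pel motion estimation stage, the pixel residual can optionally be transformed with the Hadamard transform instead of the discrete cosine transform (DCT) to separate high and low frequencies.

Advantage: the Hadamard matrix contains only +1 and -1, so the transform needs nothing but additions and subtractions, which makes it cheaper than the DCT.

Disadvantage: it separates high and low frequencies less well than the DCT. The more uniformly distributed the original data, the more the transformed coefficients concentrate in the corner of the matrix; the less uniform the data, the weaker this energy compaction.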
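As a small worked illustration of the compaction claim, here is one common ordering of the 4x4 Hadamard matrix (the Sylvester form; the figure in the article may list the rows in a different order) together with the 2-D transform of a 4x4 residual block $X$. The symbols $H_4$, $X$, $Y$ and $c$ are notation introduced here, not taken from the original text.

$$
H_4 = \begin{pmatrix} 1 & 1 & 1 & 1 \\ 1 & -1 & 1 & -1 \\ 1 & 1 & -1 & -1 \\ 1 & -1 & -1 & 1 \end{pmatrix},
\qquad Y = H_4 \, X \, H_4^{T}
$$

If every residual equals the same value $c$, each row and column sum is $4c$ and every signed combination cancels, so

$$
Y_{00} = 16c, \qquad Y_{ij} = 0 \ \text{for } (i,j) \neq (0,0),
$$

that is, all the energy lands in the corner coefficient. A single isolated non-zero residual gives the opposite extreme: all 16 coefficients have the same magnitude.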

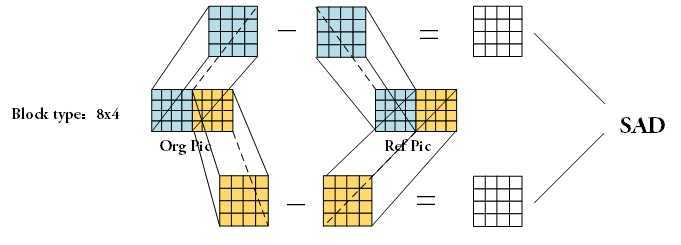

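When the Hadamard transform is used, the residual cost should be measured with SATD rather than SAD to get a more accurate estimate. SATD is the sum of the absolute values of the matrix elements obtained by applying the Hadamard transform to the pixel residual.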
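The difference between the two measures can be sketched for a single 4x4 block as follows. This is a minimal illustration, not the JM routine: the names `sad4x4` and `satd4x4` are hypothetical, and the sum is left unnormalized (reference encoders often scale it, for example by halving).

```c
#include <stdlib.h>

/* Plain sum of absolute differences over 16 residuals (raster order). */
int sad4x4(const int diff[16])
{
    int sum = 0;
    for (int i = 0; i < 16; i++)
        sum += abs(diff[i]);
    return sum;
}

/* Unnormalized SATD: 2-D 4x4 Hadamard transform of the residual,
 * then the sum of the absolute values of all 16 coefficients.
 * Only additions and subtractions are needed. */
int satd4x4(const int diff[16])
{
    int m[16], t[16];
    int sum = 0;

    /* Horizontal pass: 4-point butterfly on every row. */
    for (int i = 0; i < 4; i++) {
        const int *r = diff + 4 * i;
        int a = r[0] + r[3], b = r[1] + r[2];
        int c = r[1] - r[2], d = r[0] - r[3];
        m[4 * i + 0] = a + b;
        m[4 * i + 1] = a - b;
        m[4 * i + 2] = d + c;
        m[4 * i + 3] = d - c;
    }

    /* Vertical pass: the same butterfly on every column. */
    for (int i = 0; i < 4; i++) {
        int a = m[i] + m[12 + i], b = m[4 + i] + m[8 + i];
        int c = m[4 + i] - m[8 + i], d = m[i] - m[12 + i];
        t[i]      = a + b;
        t[4 + i]  = a - b;
        t[8 + i]  = d + c;
        t[12 + i] = d - c;
    }

    for (int i = 0; i < 16; i++)
        sum += abs(t[i]);
    return sum;
}
```

For a flat residual (all 16 values equal to 3) both functions return 48, because the transform packs everything into one coefficient. For a single impulse (one residual equal to 1) SAD is 1 while this unnormalized SATD is 16, which shows how SATD penalizes residuals that would spread across many transform coefficients.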

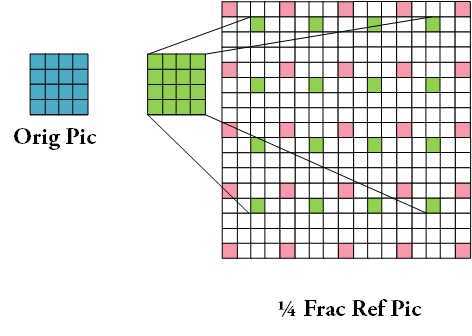

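The following code samples pixels from the quarter-pel reference image and accumulates the SAD/SATD cost against the current block: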

```c
/*!
 ************************************************************************
 * \brief
 *    Functions for fast fractional pel motion estimation.
 *    1. int AddUpSADQuarter() returns SATD of a fractional pel MV
 *    2. int FastSubPelBlockMotionSearch () proceed the fast fractional pel ME
 * \authors: Zhibo Chen
 *           Dept.of EE, Tsinghua Univ.
 * \date   : 2003.4
 *
 *    Computes the cost of one block partition.
 ************************************************************************
 */
int AddUpSADQuarter(int pic_pix_x, int pic_pix_y, int blocksize_x, int blocksize_y,
                    int cand_mv_x, int cand_mv_y, StorablePicture *ref_picture, pel_t** orig_pic,
                    int Mvmcost, int min_mcost, int useABT) // useABT: adaptive block transform
{
  int abort_search, y0, x0, rx0, ry0, ry;
  pel_t *orig_line;
  int   diff[16], *d;
  int   mcost = Mvmcost;
  int   yy, kk, xx;
  int   curr_diff[MB_BLOCK_SIZE][MB_BLOCK_SIZE]; // for ABT SATD calculation
  //2004.3.3
  pel_t **ref_pic = ref_picture->imgY_ups; // interpolated (upsampled) reference picture
  int img_width  = ref_picture->size_x;
  int img_height = ref_picture->size_y;

  for (y0=0, abort_search=0; y0<blocksize_y && !abort_search; y0+=4) // 4x4 blocks
  {
    ry0 = ((pic_pix_y+y0)<<2) + cand_mv_y;

    for (x0=0; x0<blocksize_x; x0+=4)
    {
      rx0 = ((pic_pix_x+x0)<<2) + cand_mv_x;
      d   = diff;

      orig_line = orig_pic [y0  ];    ry=ry0;
      *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0    , img_height, img_width);
      *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4 , img_height, img_width);
      *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8 , img_height, img_width);
      *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+ 12, img_height, img_width);

      orig_line = orig_pic [y0+1];    ry=ry0+4;
      *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0    , img_height, img_width);
      *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4 , img_height, img_width);
      *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8 , img_height, img_width);
      *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+ 12, img_height, img_width);

      orig_line = orig_pic [y0+2];    ry=ry0+8;
      *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0    , img_height, img_width);
      *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4 , img_height, img_width);
      *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8 , img_height, img_width);
      *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+ 12, img_height, img_width);

      orig_line = orig_pic [y0+3];    ry=ry0+12;
      *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0    , img_height, img_width);
      *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4 , img_height, img_width);
      *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8 , img_height, img_width);
      *d        = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+ 12, img_height, img_width);

      if (!useABT)
      {
        if ((mcost += SATD (diff, input->hadamard)) > min_mcost)
        {
          abort_search = 1; // stop the search
          break;
        }
      }
      else  // copy diff to curr_diff for ABT SATD calculation
      {
        for (yy=y0,kk=0; yy<y0+4; yy++)
          for (xx=x0; xx<x0+4; xx++, kk++)
            curr_diff[yy][xx] = diff[kk];
      }
    }
  }

  return mcost;
}
```
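The addressing used above (`(pos << 2) + mv`, then a step of 4 between neighbouring pixels) can be sketched in isolation. This is a simplified illustration under stated assumptions: the upsampled plane is a flat row-major array without padding, `orig` is a whole frame rather than a block-relative pointer, and `pel_q` and `sad4x4_qpel` are hypothetical names, not JM functions.

```c
#include <stdlib.h>

/* Clamp v into [lo, hi]. */
static int clampi(int v, int lo, int hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Fetch one sample from a plane upsampled 4x in both directions.
 * (x4, y4) are quarter-pel coordinates; positions outside the plane
 * are clamped to the nearest valid sample. */
static int pel_q(const unsigned char *ups, int width4, int height4, int y4, int x4)
{
    y4 = clampi(y4, 0, height4 - 1);
    x4 = clampi(x4, 0, width4 - 1);
    return ups[y4 * width4 + x4];
}

/* SAD of the 4x4 block whose top-left integer pixel is (px, py),
 * against the reference displaced by the quarter-pel vector (mvx, mvy). */
int sad4x4_qpel(const unsigned char *orig, int stride, int px, int py,
                const unsigned char *ups, int width4, int height4,
                int mvx, int mvy)
{
    int sum = 0;
    for (int y = 0; y < 4; y++) {
        int ry = ((py + y) << 2) + mvy;        /* row in quarter-pel units */
        for (int x = 0; x < 4; x++) {
            int rx = ((px + x) << 2) + mvx;    /* neighbours are 4 apart */
            int o  = orig[(py + y) * stride + (px + x)];
            sum += abs(o - pel_q(ups, width4, height4, ry, rx));
        }
    }
    return sum;
}
```

A motion vector of (4, 0) in these units therefore means exactly one full pixel to the right, while (1, 0) means a quarter of a pixel.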

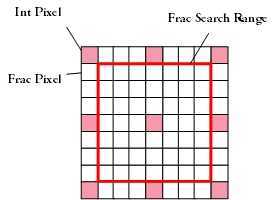

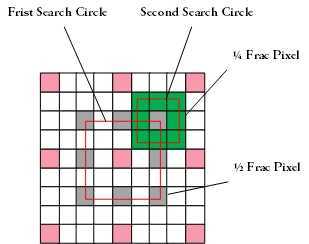

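The fast search works directly in quarter-pel units and covers a 7x7 quarter-pel window around the starting point (that is, the quarter-pel positions between the current pixel and its neighbouring pixels). This mode is used only for sub-macroblock partitions (blocktype > 3).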

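Before searching, the search center has to be chosen. The preceding integer-pel search yields a best position on the integer grid (mvInt), but the predicted motion vector (mvp) may not lie on the integer grid, so the search center is whichever of mvInt and mvp has the lower cost.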
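The choice of center can be sketched as below. Following the listing in this article, the mvp-derived candidate is mvInt plus the fractional remainder `(mvp - mvInt) % 4`, so it always stays inside the small window around mvInt. The function name `pick_search_center`, the callback type and the toy cost `demo_cost` are assumptions made for illustration only.

```c
#include <stdlib.h>

/* Cost of a candidate vector given in quarter-pel units (hypothetical callback). */
typedef int (*mv_cost_fn)(int mvx4, int mvy4);

/* Toy cost for the example: L1 distance to the quarter-pel point (9, -6). */
int demo_cost(int mvx4, int mvy4)
{
    return abs(mvx4 - 9) + abs(mvy4 + 6);
}

/* int_mv* is the integer-pel result (full-pel units); pred_mv*4 is the
 * predictor (quarter-pel units). Writes the chosen center in quarter-pel units. */
void pick_search_center(int int_mvx, int int_mvy, int pred_mvx4, int pred_mvy4,
                        mv_cost_fn cost, int *cx4, int *cy4)
{
    int mvx4 = int_mvx * 4;                 /* integer result in quarter-pel units */
    int mvy4 = int_mvy * 4;
    int fx = (pred_mvx4 - mvx4) % 4;        /* C99 remainder: range -3..3 */
    int fy = (pred_mvy4 - mvy4) % 4;

    *cx4 = mvx4;
    *cy4 = mvy4;
    /* Evaluate the predictor-derived candidate only if it differs. */
    if ((fx != 0 || fy != 0) && cost(mvx4 + fx, mvy4 + fy) < cost(mvx4, mvy4)) {
        *cx4 = mvx4 + fx;
        *cy4 = mvy4 + fy;
    }
}
```

With mvInt = (2, -1) and mvp = (13, -6) in quarter-pel units, the two candidates are (8, -4) and (9, -6), and the toy cost picks the second one.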

The search pattern is "the third step with a small search pattern", i.e. repeated searching with the small diamond template.

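A small-range sub-pel search is essentially linear (JVT-F017r1 3.1.2): it mostly keeps moving in one direction. The loop count is therefore set to the side length of the window, 7, and the search does not visit every position in the window.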
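A stripped-down version of this small-diamond loop, run on a toy cost function instead of real pixel data, might look like the sketch below. It keeps the three ingredients described above: a 7x7 window, a visited map so that no position is evaluated twice, and at most 7 iterations. The names `small_diamond_search` and `bowl_cost` are hypothetical.

```c
#include <stdlib.h>
#include <string.h>

#define RANGE 3                 /* window is +/-3 quarter-pel positions */
#define SIDE  (2 * RANGE + 1)   /* 7 */

typedef int (*cost_fn)(int mvx, int mvy);

/* Toy convex cost with its minimum at (2, -1). */
int bowl_cost(int mvx, int mvy)
{
    return (mvx - 2) * (mvx - 2) + (mvy + 1) * (mvy + 1);
}

/* Small diamond search around (cx, cy); returns the best cost found and
 * writes the best vector to (*best_x, *best_y). */
int small_diamond_search(int cx, int cy, cost_fn cost, int *best_x, int *best_y)
{
    static const int dx[4] = { -1, 0, 1,  0 };
    static const int dy[4] = {  0, 1, 0, -1 };
    unsigned char visited[SIDE][SIDE];
    int bx = cx, by = cy;
    int best = cost(cx, cy);

    memset(visited, 0, sizeof visited);
    visited[RANGE][RANGE] = 1;

    for (int i = 0; i < SIDE; i++) {        /* at most one window side of steps */
        int nx = bx, ny = by, improved = 0;
        for (int m = 0; m < 4; m++) {
            int x = bx + dx[m], y = by + dy[m];
            if (abs(x - cx) > RANGE || abs(y - cy) > RANGE)
                continue;                   /* outside the 7x7 window */
            if (visited[y - cy + RANGE][x - cx + RANGE])
                continue;                   /* already evaluated */
            visited[y - cy + RANGE][x - cx + RANGE] = 1;
            int c = cost(x, y);
            if (c < best) { best = c; nx = x; ny = y; improved = 1; }
        }
        bx = nx;                            /* move the diamond center */
        by = ny;
        if (!improved)
            break;                          /* no neighbour was better */
    }
    *best_x = bx;
    *best_y = by;
    return best;
}
```

Starting from (0, 0), the toy search walks (1, 0), (2, 0), (2, -1) and then stops, having evaluated far fewer than the 49 positions in the window.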

```c
int                                                 //  ==> minimum motion cost after search
FastSubPelBlockMotionSearch (pel_t** orig_pic,      // <--  original pixel values for the AxB block
                             int     ref,           // <--  reference frame (0... or -1 (backward))
                             int     list,
                             int     pic_pix_x,     // <--  absolute x-coordinate of regarded AxB block
                             int     pic_pix_y,     // <--  absolute y-coordinate of regarded AxB block
                             int     blocktype,     // <--  block type (1-16x16 ... 7-4x4)
                             int     pred_mv_x,     // <--  motion vector predictor (x) in sub-pel units
                             int     pred_mv_y,     // <--  motion vector predictor (y) in sub-pel units
                             int*    mv_x,          // <--> in: search center (x) / out: motion vector (x) - in pel units
                             int*    mv_y,          // <--> in: search center (y) / out: motion vector (y) - in pel units
                             int     search_pos2,   // <--  search positions for    half-pel search  (default: 9)
                             int     search_pos4,   // <--  search positions for quarter-pel search  (default: 9)
                             int     min_mcost,     // <--  minimum motion cost (cost for center or huge value)
                             double  lambda,        // <--  lagrangian parameter for determining motion cost
                             int     useABT)
{
  static int Diamond_x[4] = {-1, 0, 1, 0}; // diamond search offsets
  static int Diamond_y[4] = {0, 1, 0, -1};
  int   mcost;
  int   cand_mv_x, cand_mv_y;

  int   incr = list==1 ? ((!img->fld_type)&&(enc_picture!=enc_frame_picture)&&(img->type==B_SLICE)) : (enc_picture==enc_frame_picture)&&(img->type==B_SLICE) ; // not used anywhere
  int   list_offset = ((img->MbaffFrameFlag)&&(img->mb_data[img->current_mb_nr].mb_field))? img->current_mb_nr%2 ? 4 : 2 : 0;
  StorablePicture *ref_picture = listX[list+list_offset][ref];
  pel_t **ref_pic = ref_picture->imgY_ups;

  int   lambda_factor   = LAMBDA_FACTOR (lambda);
  int   mv_shift        = 0;
  int   check_position0 = (blocktype==1 && *mv_x==0 && *mv_y==0 && input->hadamard && !input->rdopt && img->type!=B_SLICE && ref==0);
  int   blocksize_x     = input->blc_size[blocktype][0];
  int   blocksize_y     = input->blc_size[blocktype][1];
  int   pic4_pix_x      = (pic_pix_x << 2);
  int   pic4_pix_y      = (pic_pix_y << 2);
  int   max_pos_x4      = ((ref_picture->size_x/*img->width*/-blocksize_x+1)<<2);
  int   max_pos_y4      = ((ref_picture->size_y/*img->height*/-blocksize_y+1)<<2);

  int   min_pos2        = (input->hadamard ? 0 : 1);
  int   max_pos2        = (input->hadamard ? max(1,search_pos2) : search_pos2);
  int   search_range_dynamic,iXMinNow,iYMinNow,i;
  int   iSADLayer,m,currmv_x,currmv_y,iCurrSearchRange;
  int   search_range = input->search_range;
  int   pred_frac_mv_x,pred_frac_mv_y,abort_search;
  int   mv_cost;

  int   pred_frac_up_mv_x, pred_frac_up_mv_y;

  *mv_x <<= 2;
  *mv_y <<= 2;
  if ((pic4_pix_x + *mv_x > 1) && (pic4_pix_x + *mv_x < max_pos_x4 - 2) &&
      (pic4_pix_y + *mv_y > 1) && (pic4_pix_y + *mv_y < max_pos_y4 - 2)   ) // check whether the position is out of bounds
  {
    PelY_14 = FastPelY_14;
  }
  else
  {
    PelY_14 = UMVPelY_14;
  }

  search_range_dynamic = 3;
  pred_frac_mv_x = (pred_mv_x - *mv_x)%4;
  pred_frac_mv_y = (pred_mv_y - *mv_y)%4;

  pred_frac_up_mv_x = (pred_MV_uplayer[0] - *mv_x)%4;
  pred_frac_up_mv_y = (pred_MV_uplayer[1] - *mv_y)%4;

  // SearchState: flags for the fractional-pel search. Visited positions are
  // marked, and a marked position is never searched again.
  memset(SearchState[0],0,(2*search_range_dynamic+1)*(2*search_range_dynamic+1));

  if(input->hadamard)
  {
    cand_mv_x = *mv_x;
    cand_mv_y = *mv_y;
    mv_cost = MV_COST (lambda_factor, mv_shift, cand_mv_x, cand_mv_y, pred_mv_x, pred_mv_y);
    // total cost of the block residual plus the MV
    mcost = AddUpSADQuarter(pic_pix_x,pic_pix_y,blocksize_x,blocksize_y,cand_mv_x,cand_mv_y,ref_picture/*ref_pic*//*Wenfang Fu 2004.3.12*/,orig_pic,mv_cost,min_mcost,useABT);
    SearchState[search_range_dynamic][search_range_dynamic] = 1;
    if (mcost < min_mcost)
    {
      min_mcost = mcost;
      currmv_x = cand_mv_x;
      currmv_y = cand_mv_y;
    }
  }
  else
  {
    SearchState[search_range_dynamic][search_range_dynamic] = 1;
    currmv_x = *mv_x;
    currmv_y = *mv_y;
  }

  if(pred_frac_mv_x!=0 || pred_frac_mv_y!=0) // mvp
  {
    cand_mv_x = *mv_x + pred_frac_mv_x;
    cand_mv_y = *mv_y + pred_frac_mv_y;
    mv_cost = MV_COST (lambda_factor, mv_shift, cand_mv_x, cand_mv_y, pred_mv_x, pred_mv_y);
    mcost = AddUpSADQuarter(pic_pix_x,pic_pix_y,blocksize_x,blocksize_y,cand_mv_x,cand_mv_y,ref_picture/*ref_pic*//*Wenfang Fu 2004.3.12*/,orig_pic,mv_cost,min_mcost,useABT);
    SearchState[cand_mv_y -*mv_y + search_range_dynamic][cand_mv_x - *mv_x + search_range_dynamic] = 1;
    if (mcost < min_mcost)
    {
      min_mcost = mcost;
      currmv_x = cand_mv_x;
      currmv_y = cand_mv_y;
    }
  }

  iXMinNow = currmv_x; // iXMinNow: current search center; currmv_x: best MV so far
  iYMinNow = currmv_y;
  iCurrSearchRange = 2*search_range_dynamic+1;
  // The full window area is iCurrSearchRange*iCurrSearchRange, but only one
  // side length is used as the iteration count, which means the search runs
  // in a single direction. The reason: within such a small range the cost
  // surface is mostly low-frequency, so the search direction hardly changes.
  // JVT-F017r1 3.1.2
  for(i=0;i<iCurrSearchRange;i++)
  {
    abort_search=1; // abort flag: 1 = stop, 0 = continue
    iSADLayer = 65536;
    for (m = 0; m < 4; m++)
    {
      cand_mv_x = iXMinNow + Diamond_x[m];
      cand_mv_y = iYMinNow + Diamond_y[m];

      if(abs(cand_mv_x - *mv_x) <=search_range_dynamic && abs(cand_mv_y - *mv_y)<= search_range_dynamic)
      {
        if(!SearchState[cand_mv_y -*mv_y+ search_range_dynamic][cand_mv_x -*mv_x+ search_range_dynamic]) // not searched yet
        {
          mv_cost = MV_COST (lambda_factor, mv_shift, cand_mv_x, cand_mv_y, pred_mv_x, pred_mv_y);
          mcost = AddUpSADQuarter(pic_pix_x,pic_pix_y,blocksize_x,blocksize_y,cand_mv_x,cand_mv_y,ref_picture/*ref_pic*//*Wenfang Fu 2004.3.12*/,orig_pic,mv_cost,min_mcost,useABT);
          SearchState[cand_mv_y - *mv_y + search_range_dynamic][cand_mv_x - *mv_x + search_range_dynamic] = 1;
          if (mcost < min_mcost)
          {
            min_mcost = mcost;
            currmv_x = cand_mv_x;
            currmv_y = cand_mv_y;
            abort_search = 0;
          }
        }
      }
    }
    iXMinNow = currmv_x;
    iYMinNow = currmv_y;
    if(abort_search)
      break;
  }

  *mv_x = currmv_x;
  *mv_y = currmv_y;

  //===== return minimum motion cost =====
  return min_mcost;
}
```

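The regular (non-fast) sub-pel search has two steps: a half-pel refinement centered on the best integer-pel position, followed by a quarter-pel refinement centered on the best half-pel position.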

```c
/*!
 ***********************************************************************
 * \brief
 *    Sub pixel block motion search.
 *    Half-pel estimation is run around the best integer-pel position,
 *    then quarter-pel estimation around the best half-pel position.
 ***********************************************************************
 */
int                                               //  ==> minimum motion cost after search
SubPelBlockMotionSearch (pel_t**   orig_pic,      // <--  original pixel values for the AxB block
                         int       ref,           // <--  reference frame (0... or -1 (backward))
                         int       list,          // <--  reference picture list
                         int       pic_pix_x,     // <--  absolute x-coordinate of regarded AxB block
                         int       pic_pix_y,     // <--  absolute y-coordinate of regarded AxB block
                         int       blocktype,     // <--  block type (1-16x16 ... 7-4x4)
                         int       pred_mv_x,     // <--  motion vector predictor (x) in sub-pel units
                         int       pred_mv_y,     // <--  motion vector predictor (y) in sub-pel units
                         int*      mv_x,          // <--> in: search center (x) / out: motion vector (x) - in pel units
                         int*      mv_y,          // <--> in: search center (y) / out: motion vector (y) - in pel units
                         int       search_pos2,   // <--  search positions for    half-pel search  (default: 9)
                         int       search_pos4,   // <--  search positions for quarter-pel search  (default: 9)
                         int       min_mcost,     // <--  minimum motion cost (cost for center or huge value)
                         double    lambda         // <--  lagrangian parameter for determining motion cost
                         )
{
  int   diff[16], *d;
  int   pos, best_pos, mcost, abort_search;
  int   y0, x0, ry0, rx0, ry;
  int   cand_mv_x, cand_mv_y;
  int   max_pos_x4, max_pos_y4;
  pel_t *orig_line;
  pel_t **ref_pic;
  StorablePicture *ref_picture;
  int   lambda_factor   = LAMBDA_FACTOR (lambda);
  int   mv_shift        = 0;
  int   check_position0 = (blocktype==1 && *mv_x==0 && *mv_y==0 && input->hadamard && !input->rdopt && img->type!=B_SLICE && ref==0);
  int   blocksize_x     = input->blc_size[blocktype][0];
  int   blocksize_y     = input->blc_size[blocktype][1];
  int   pic4_pix_x      = (pic_pix_x << 2); // convert to quarter-pel coordinates
  int   pic4_pix_y      = (pic_pix_y << 2);
  int   min_pos2        = (input->hadamard ? 0 : 1);
  int   max_pos2        = (input->hadamard ? max(1,search_pos2) : search_pos2);
  int   list_offset     = ((img->MbaffFrameFlag)&&(img->mb_data[img->current_mb_nr].mb_field))? img->current_mb_nr%2 ? 4 : 2 : 0;

  // whether weighted prediction is used when computing the cost
  int   apply_weights   = ( (active_pps->weighted_pred_flag && (img->type == P_SLICE || img->type == SP_SLICE)) ||
                            (active_pps->weighted_bipred_idc && (img->type == B_SLICE)));

  int   img_width, img_height;

  ref_picture = listX[list+list_offset][ref];

  if (apply_weights)
  {
    ref_pic = listX[list+list_offset][ref]->imgY_ups_w;
  }
  else
    ref_pic = listX[list+list_offset][ref]->imgY_ups; // the quarter-pel interpolated picture is stored in imgY_ups

  img_width  = ref_picture->size_x;
  img_height = ref_picture->size_y; // width and height of the picture before interpolation

  max_pos_x4 = ((ref_picture->size_x - blocksize_x+1)<<2); // largest quarter-pel search position
  max_pos_y4 = ((ref_picture->size_y - blocksize_y+1)<<2);

  /*********************************
   *****                       *****
   *****  HALF-PEL REFINEMENT  *****
   *****                       *****
   *********************************/
  //===== convert search center to quarter-pel units =====
  *mv_x <<= 2;
  *mv_y <<= 2;
  //===== set function for getting pixel values =====
  if ((pic4_pix_x + *mv_x > 1) && (pic4_pix_x + *mv_x < max_pos_x4 - 2) &&
      (pic4_pix_y + *mv_y > 1) && (pic4_pix_y + *mv_y < max_pos_y4 - 2)   ) // check whether the half-pel positions stay inside the picture
  {
    PelY_14 = FastPelY_14; // inside the picture
  }
  else
  {
    PelY_14 = UMVPelY_14;
  }
  //===== loop over search positions =====
  for (best_pos = 0, pos = min_pos2; pos < max_pos2; pos++) // half-pel search: only the 9 nearest points (max_pos2 == 9)
  {
    cand_mv_x = *mv_x + (spiral_search_x[pos] << 1);    // quarter-pel units
    cand_mv_y = *mv_y + (spiral_search_y[pos] << 1);    // quarter-pel units

    //----- set motion vector cost -----
    mcost = MV_COST (lambda_factor, mv_shift, cand_mv_x, cand_mv_y, pred_mv_x, pred_mv_y);
    if (check_position0 && pos==0) // the center is the position of the current macroblock (zero MV)
    {
      mcost -= WEIGHTED_COST (lambda_factor, 16);
    }

    if (mcost >= min_mcost) continue;

    //----- add up SATD -----
    for (y0=0, abort_search=0; y0<blocksize_y && !abort_search; y0+=4)
    {
      ry0 = ((pic_pix_y+y0)<<2) + cand_mv_y; // search center
      for (x0=0; x0<blocksize_x; x0+=4) // SATD is computed per 4x4 block
      {
        rx0 = ((pic_pix_x+x0)<<2) + cand_mv_x;
        d   = diff; // d walks through the diff buffer
        // locate the sample in the quarter-pel picture and subtract the
        // interpolated value from the co-located original pixel
        orig_line = orig_pic [y0  ];    ry=ry0;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+1];    ry=ry0+4;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+2];    ry=ry0+8;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+3];    ry=ry0+12;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d        = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        if ((mcost += SATD (diff, input->hadamard)) > min_mcost)
        {
          abort_search = 1;
          break;
        } // cost already exceeds the minimum: stop evaluating this candidate
      }
    }

    if (mcost < min_mcost)
    {
      min_mcost = mcost;
      best_pos  = pos;
    }
  }
  if (best_pos)
  {
    *mv_x += (spiral_search_x [best_pos] << 1);
    *mv_y += (spiral_search_y [best_pos] << 1);
  }

  /************************************
   *****                          *****
   *****  QUARTER-PEL REFINEMENT  *****
   *****                          *****
   ************************************/
  //===== set function for getting pixel values =====
  if ((pic4_pix_x + *mv_x > 1) && (pic4_pix_x + *mv_x < max_pos_x4 - 1) &&
      (pic4_pix_y + *mv_y > 1) && (pic4_pix_y + *mv_y < max_pos_y4 - 1)   )
  {
    PelY_14 = FastPelY_14;
  }
  else
  {
    PelY_14 = UMVPelY_14;
  }
  //===== loop over search positions =====
  for (best_pos = 0, pos = 1; pos < search_pos4; pos++) // quarter-pel search: only the 9 nearest points (search_pos4 == 9)
  {
    cand_mv_x = *mv_x + spiral_search_x[pos];    // quarter-pel units
    cand_mv_y = *mv_y + spiral_search_y[pos];    // quarter-pel units

    //----- set motion vector cost -----
    mcost = MV_COST (lambda_factor, mv_shift, cand_mv_x, cand_mv_y, pred_mv_x, pred_mv_y);

    if (mcost >= min_mcost) continue;

    //----- add up SATD -----
    for (y0=0, abort_search=0; y0<blocksize_y && !abort_search; y0+=4)
    {
      ry0 = ((pic_pix_y+y0)<<2) + cand_mv_y;
      for (x0=0; x0<blocksize_x; x0+=4)
      {
        rx0 = ((pic_pix_x+x0)<<2) + cand_mv_x;
        d   = diff;

        orig_line = orig_pic [y0  ];    ry=ry0;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+1];    ry=ry0+4;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+2];    ry=ry0+8;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d++      = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        orig_line = orig_pic [y0+3];    ry=ry0+12;
        *d++      = orig_line[x0  ]  -  PelY_14 (ref_pic, ry, rx0   , img_height, img_width);
        *d++      = orig_line[x0+1]  -  PelY_14 (ref_pic, ry, rx0+ 4, img_height, img_width);
        *d++      = orig_line[x0+2]  -  PelY_14 (ref_pic, ry, rx0+ 8, img_height, img_width);
        *d        = orig_line[x0+3]  -  PelY_14 (ref_pic, ry, rx0+12, img_height, img_width);

        if ((mcost += SATD (diff, input->hadamard)) > min_mcost)
        {
          abort_search = 1;
          break;
        }
      }
    }

    if (mcost < min_mcost)
    {
      min_mcost = mcost;
      best_pos  = pos;
    }
  }
  if (best_pos)
  {
    *mv_x += spiral_search_x [best_pos];
    *mv_y += spiral_search_y [best_pos];
  }

  //===== return minimum motion cost =====
  return min_mcost;
}
```
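The control flow of this routine, stripped of all pixel access, reduces to two passes over a 3x3 neighbourhood: first with a step of 2 quarter-pel units (half-pel), then with a step of 1. The sketch below runs that skeleton on a toy cost function. The offset tables `OFF_X`/`OFF_Y` are illustrative and are not claimed to match the order of JM's `spiral_search_x`/`spiral_search_y`; `refine`, `subpel_refine` and `l1_cost` are hypothetical names.

```c
#include <stdlib.h>

typedef int (*cost_fn)(int mvx4, int mvy4);

/* Center first, then the 8 neighbours (illustrative order). */
static const int OFF_X[9] = { 0, -1,  0,  1, -1, 1, -1, 0, 1 };
static const int OFF_Y[9] = { 0, -1, -1, -1,  0, 0,  1, 1, 1 };

/* Toy cost: L1 distance to the quarter-pel point (7, -3). */
int l1_cost(int mvx4, int mvy4)
{
    return abs(mvx4 - 7) + abs(mvy4 + 3);
}

/* One refinement pass: test the 8 neighbours at distance `step`
 * and move to the best one if it beats min_cost. */
static int refine(int *mvx4, int *mvy4, int step, int min_cost, cost_fn cost)
{
    int best_pos = 0;
    for (int pos = 1; pos < 9; pos++) {
        int c = cost(*mvx4 + OFF_X[pos] * step, *mvy4 + OFF_Y[pos] * step);
        if (c < min_cost) { min_cost = c; best_pos = pos; }
    }
    *mvx4 += OFF_X[best_pos] * step;
    *mvy4 += OFF_Y[best_pos] * step;
    return min_cost;
}

/* in: best integer-pel vector (full-pel units);
 * out: refined vector in quarter-pel units. Returns the final cost. */
int subpel_refine(int *mvx, int *mvy, cost_fn cost)
{
    *mvx *= 4;                                          /* to quarter-pel units */
    *mvy *= 4;
    int min_cost = cost(*mvx, *mvy);
    min_cost = refine(mvx, mvy, 2, min_cost, cost);     /* half-pel pass */
    min_cost = refine(mvx, mvy, 1, min_cost, cost);     /* quarter-pel pass */
    return min_cost;
}
```

Starting from the integer vector (2, -1), the half-pel pass finds nothing better than (8, -4), and the quarter-pel pass then moves to (7, -3). The real routine additionally skips candidates whose MV cost alone already exceeds the current minimum and aborts the SATD accumulation early.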

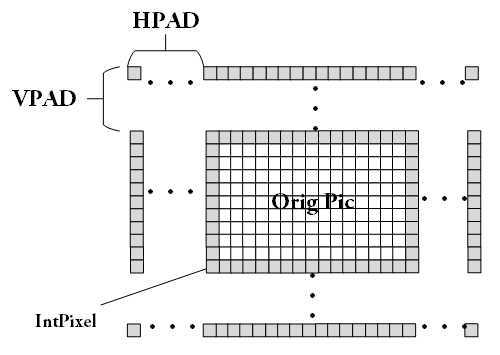

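Before interpolation, the original picture has to be padded: extra border pixels are added on all four sides, each taking the value of the nearest edge pixel. See Edge Process for reference.

In JM8.6 both HPad and VPad are 4, whereas in JM18.6 HPad is 32 and VPad is 20.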
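Padding by edge replication can be sketched as follows. This is a minimal illustration that uses a single pad width for both directions and allocates a new plane; `pad_plane` is a hypothetical name, and JM handles the border differently in each version (for example by clamping coordinates on access).

```c
#include <stdlib.h>

/* Return a newly allocated (w + 2*pad) x (h + 2*pad) plane in which every
 * border sample repeats the nearest edge pixel of src. The caller frees
 * the result. Returns NULL if allocation fails. */
unsigned char *pad_plane(const unsigned char *src, int w, int h, int pad)
{
    int pw = w + 2 * pad;
    int ph = h + 2 * pad;
    unsigned char *dst = malloc((size_t)pw * (size_t)ph);
    if (dst == NULL)
        return NULL;

    for (int y = 0; y < ph; y++) {
        int sy = y - pad;                       /* source row, clamped */
        if (sy < 0) sy = 0;
        if (sy > h - 1) sy = h - 1;
        for (int x = 0; x < pw; x++) {
            int sx = x - pad;                   /* source column, clamped */
            if (sx < 0) sx = 0;
            if (sx > w - 1) sx = w - 1;
            dst[y * pw + x] = src[sy * w + sx];
        }
    }
    return dst;
}
```

Padding a 2x2 picture `{1, 2, 3, 4}` by one pixel gives a 4x4 plane whose four corners are 1, 2, 3 and 4.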

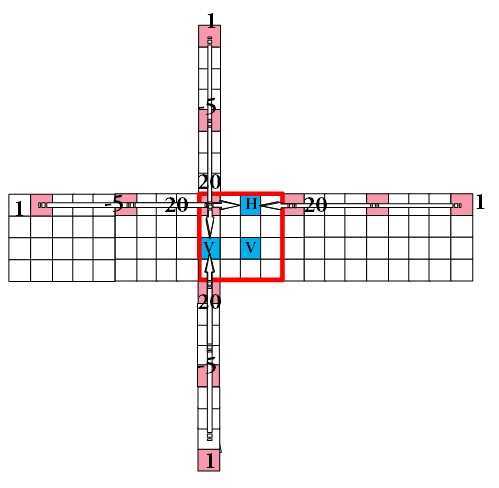

The interpolation process can be divided into 6 steps:

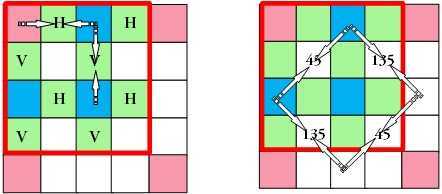

Half-pel interpolation

Quarter-pel horizontal and vertical interpolation

Quarter-pel interpolation along the +45° and -45° diagonals
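The two filters behind these steps can be sketched in one dimension: half-pel samples come from the six-tap kernel (1, -5, 20, 20, -5, 1) / 32, and quarter-pel samples are the rounded average of two neighbouring samples. This is a simplified sketch that rounds after a single 1-D pass; the JM8.6 listing below keeps higher intermediate precision when it filters horizontally and then vertically. The names `half_pel` and `quarter_avg` are hypothetical.

```c
/* Clip to the 8-bit sample range. */
static int clip255(int v)
{
    return v < 0 ? 0 : (v > 255 ? 255 : v);
}

/* Read row[i] with the index clamped to [0, n-1] (edge replication). */
static int at(const unsigned char *row, int n, int i)
{
    if (i < 0) i = 0;
    if (i > n - 1) i = n - 1;
    return row[i];
}

/* Half-pel sample between row[i] and row[i+1], six-tap filter. */
int half_pel(const unsigned char *row, int n, int i)
{
    int s = 20 * (at(row, n, i)     + at(row, n, i + 1))
          -  5 * (at(row, n, i - 1) + at(row, n, i + 2))
          +      (at(row, n, i - 2) + at(row, n, i + 3));
    /* Negative sums are clipped to 0 afterwards, so truncating
     * division gives the same result as an arithmetic shift here. */
    return clip255((s + 16) / 32);
}

/* Quarter-pel sample: rounded average of two neighbouring samples
 * (integer/half-pel pair, or two half-pel samples on a diagonal). */
int quarter_avg(int a, int b)
{
    return (a + b + 1) >> 1;
}
```

On the step edge `{0, 0, 0, 255, 255, 255}` the half-pel sample between indices 2 and 3 is 128, and the quarter-pel samples on either side of it are 64 and 192. The half-pel sample between indices 3 and 4 overshoots to 287 before clipping, which is why the clip to 255 is needed.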

The steps above follow JM8.6. JM18.6 does this in its own way; see the code below for details.

JM8.6

```c
/*!
 ************************************************************************
 * \brief
 *    Upsample 4 times, store them in out4x.  Color is simply copied
 *
 * \par Input:
 *    srcy, srcu, srcv, out4y, out4u, out4v
 *
 * \par Side Effects_
 *    Uses (writes) img4Y_tmp.  This should be moved to a static variable
 *    in this module
 ************************************************************************/
void UnifiedOneForthPix (StorablePicture *s)
{
  int is;
  int i, j, j4;
  int ie2, je2, jj, maxy;

  byte **out4Y;
  byte  *ref11;
  byte  **imgY = s->imgY;

  int img_width =s->size_x;
  int img_height=s->size_y;

  // don't upsample twice
  if (s->imgY_ups || s->imgY_11)
    return;

  s->imgY_11 = malloc ((s->size_x * s->size_y) * sizeof (byte));
  if (NULL == s->imgY_11)
    no_mem_exit("alloc_storable_picture: s->imgY_11");

  get_mem2D (&(s->imgY_ups), (2*IMG_PAD_SIZE + s->size_y)*4, (2*IMG_PAD_SIZE + s->size_x)*4);

  if (input->WeightedPrediction || input->WeightedBiprediction)
  {
    s->imgY_11_w = malloc ((s->size_x * s->size_y) * sizeof (byte));
    get_mem2D (&(s->imgY_ups_w), (2*IMG_PAD_SIZE + s->size_y)*4, (2*IMG_PAD_SIZE + s->size_x)*4);
  }
  out4Y = s->imgY_ups;
  ref11 = s->imgY_11;

  //chj horizontal half-pel interpolation
  for (j = -IMG_PAD_SIZE; j < s->size_y + IMG_PAD_SIZE; j++)
  {
    for (i = -IMG_PAD_SIZE; i < s->size_x + IMG_PAD_SIZE; i++)
    {
      jj = max (0, min (s->size_y - 1, j));
      is =
        (ONE_FOURTH_TAP[0][0] *
         (imgY[jj][max (0, min (s->size_x - 1, i))] +
          imgY[jj][max (0, min (s->size_x - 1, i + 1))]) +
         ONE_FOURTH_TAP[1][0] *
         (imgY[jj][max (0, min (s->size_x - 1, i - 1))] +
          imgY[jj][max (0, min (s->size_x - 1, i + 2))]) +
         ONE_FOURTH_TAP[2][0] *
         (imgY[jj][max (0, min (s->size_x - 1, i - 2))] +
          imgY[jj][max (0, min (s->size_x - 1, i + 3))]));
      img4Y_tmp[j + IMG_PAD_SIZE][(i + IMG_PAD_SIZE) * 2] = imgY[jj][max (0, min (s->size_x - 1, i))] * 1024;    // 1/1 pix pos
      img4Y_tmp[j + IMG_PAD_SIZE][(i + IMG_PAD_SIZE) * 2 + 1] = is * 32;  // 1/2 pix pos
    }
  }

  //chj vertical half-pel interpolation
  for (i = 0; i < (s->size_x + 2 * IMG_PAD_SIZE) * 2; i++)
  {
    for (j = 0; j < s->size_y + 2 * IMG_PAD_SIZE; j++)
    {
      j4 = j * 4;
      maxy = s->size_y + 2 * IMG_PAD_SIZE - 1;
      // change for TML4, use 6 TAP vertical filter
      /* Half-pel samples use the 6-tap filter; ONE_FOURTH_TAP supplies
         the coefficients (1, -5, 20, 20, -5, 1) */
      is =
        (ONE_FOURTH_TAP[0][0] * (img4Y_tmp[j][i] + img4Y_tmp[min (maxy, j + 1)][i]) +
         ONE_FOURTH_TAP[1][0] * (img4Y_tmp[max (0, j - 1)][i] + img4Y_tmp[min (maxy, j + 2)][i]) +
         ONE_FOURTH_TAP[2][0] * (img4Y_tmp[max (0, j - 2)][i] + img4Y_tmp[min (maxy, j + 3)][i])) / 32;

      PutPel_14 (out4Y, (j - IMG_PAD_SIZE) * 4, (i - IMG_PAD_SIZE * 2) * 2, (pel_t) max (0, min (255, (int) ((img4Y_tmp[j][i] + 512) / 1024))));    // 1/2 pix
      PutPel_14 (out4Y, (j - IMG_PAD_SIZE) * 4 + 2, (i - IMG_PAD_SIZE * 2) * 2, (pel_t) max (0, min (255, (int) ((is + 512) / 1024))));   // 1/2 pix
    }
  }

  /* 1/4 pix */
  /* Quarter-pel samples are plain averages */
  /* luma */
  ie2 = (s->size_x + 2 * IMG_PAD_SIZE - 1) * 4;
  je2 = (s->size_y + 2 * IMG_PAD_SIZE - 1) * 4;

  for (j = 0; j < je2 + 4; j += 2)
    for (i = 0; i < ie2 + 3; i += 2)
    {
      /*  '-'  horizontal interpolation */
      PutPel_14 (out4Y, j - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4 + 1,
                 (pel_t) (max (0, min (255,(int) (FastPelY_14 (out4Y, j - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4, img_height, img_width) +
                                                  FastPelY_14 (out4Y, j - IMG_PAD_SIZE * 4, min (ie2 + 2, i + 2) - IMG_PAD_SIZE * 4, img_height, img_width)+1) / 2))));
    }

  for (i = 0; i < ie2 + 4; i++)
  {
    for (j = 0; j < je2 + 3; j += 2)
    {
      if (i % 2 == 0)
      {
        /*  '|'  vertical interpolation */
        PutPel_14 (out4Y, j - IMG_PAD_SIZE * 4 + 1, i - IMG_PAD_SIZE * 4,
                   (pel_t) (max (0, min (255, (int) (FastPelY_14 (out4Y, j - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4, img_height, img_width) +
                                                     FastPelY_14 (out4Y, min (je2 + 2, j + 2) - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4, img_height, img_width)+1) / 2))));
      }
      else if ((j % 4 == 0 && i % 4 == 1) || (j % 4 == 2 && i % 4 == 3))
      {
        /*  '/'  45-degree diagonal interpolation */
        PutPel_14 (out4Y, j - IMG_PAD_SIZE * 4 + 1, i - IMG_PAD_SIZE * 4,
                   (pel_t) (max (0, min (255, (int) (FastPelY_14 (out4Y, j - IMG_PAD_SIZE * 4, min (ie2 + 2, i + 1) - IMG_PAD_SIZE * 4, img_height, img_width) +
                                                     FastPelY_14 (out4Y, min (je2 + 2, j + 2) - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4 - 1, img_height, img_width) + 1) / 2))));
      }
      else
      {
        /*  '\'  reverse 45-degree diagonal interpolation */
        PutPel_14 (out4Y, j - IMG_PAD_SIZE * 4 + 1, i - IMG_PAD_SIZE * 4,
                   (pel_t) (max (0, min (255, (int) (FastPelY_14 (out4Y, j - IMG_PAD_SIZE * 4, i - IMG_PAD_SIZE * 4 - 1, img_height, img_width) +
                                                     FastPelY_14 (out4Y, min (je2 + 2, j + 2) - IMG_PAD_SIZE * 4, min (ie2 + 2, i + 1) - IMG_PAD_SIZE * 4, img_height, img_width) + 1) / 2))));
      }
    }
  }

  /*  Chroma: the integer samples are only copied, no interpolation */
  /*
  for (j = 0; j < img->height_cr; j++)
  {
    memcpy (outU[j], imgU[j], img->width_cr);        // just copy 1/1 pix, interpolate "online"
    memcpy (outV[j], imgV[j], img->width_cr);
  }
  */

  // Generate 1/1th pel representation (used for integer pel MV search)
  /*
     ref11 holds the picture at integer-pel resolution,
     out4Y holds the picture at quarter-pel resolution;
     the integer-pel samples are copied from out4Y to the matching
     positions in ref11
  */
  GenerateFullPelRepresentation (out4Y, ref11, s->size_x, s->size_y);
}
```

JM18.6

```c
/*!
 ************************************************************************
 * \brief
 *    Creates the 4x4 = 16 images that contain quarter-pel samples
 *    sub-sampled at different spatial orientations;
 *    enables more efficient implementation
 *
 * \param p_Vid
 *    pointer to VideoParameters structure
 * \param s
 *    pointer to StorablePicture structure
 ************************************************************************
 */
void getSubImagesLuma( VideoParameters *p_Vid, StorablePicture *s )
{
  imgpel ****cImgSub   = s->p_curr_img_sub;
  int        otf_shift = ( p_Vid->p_Inp->OnTheFlyFractMCP == OTF_L1 ) ? (1) : (0) ;

  //  0  1  2  3
  //  4  5  6  7
  //  8  9 10 11
  // 12 13 14 15

  // p_curr_img_sub is 4-dimensional: 4 x 4 x Y x X.
  // The matrix above corresponds to the first two dimensions (4 x 4),
  // so every cImgSub[][] entry points to one complete picture (X x Y).

  //// INTEGER PEL POSITIONS ////

  // sub-image 0 [0][0]
  // simply copy the integer pels
  if (cImgSub[0][0][0] != s->p_curr_img[0])
  {
    getSubImageInteger( s, cImgSub[0][0], s->p_curr_img);
  }
  else
  {
    getSubImageInteger_s( s, cImgSub[0][0], s->p_curr_img);
  }

  //// HALF-PEL POSITIONS: SIX-TAP FILTER ////

  // sub-image 2 [0][2]
  // HOR interpolate (six-tap) sub-image [0][0]
  getHorSubImageSixTap( p_Vid, s, cImgSub[0][2>>otf_shift], cImgSub[0][0] );

  // sub-image 8 [2][0]
  // VER interpolate (six-tap) sub-image [0][0]
  getVerSubImageSixTap( p_Vid, s, cImgSub[2>>otf_shift][0], cImgSub[0][0]);

  // sub-image 10 [2][2]
  // VER interpolate (six-tap) sub-image [0][2]
  getVerSubImageSixTapTmp( p_Vid, s, cImgSub[2>>otf_shift][2>>otf_shift]);

  if( !p_Vid->p_Inp->OnTheFlyFractMCP )
  {
    //// QUARTER-PEL POSITIONS: BI-LINEAR INTERPOLATION ////

    // sub-image 1 [0][1]
    getSubImageBiLinear    ( s, cImgSub[0][1], cImgSub[0][0], cImgSub[0][2]);

    // sub-image 4 [1][0]
    getSubImageBiLinear    ( s, cImgSub[1][0], cImgSub[0][0], cImgSub[2][0]);

    // sub-image 5 [1][1]
    getSubImageBiLinear    ( s, cImgSub[1][1], cImgSub[0][2], cImgSub[2][0]);

    // sub-image 6 [1][2]
    getSubImageBiLinear    ( s, cImgSub[1][2], cImgSub[0][2], cImgSub[2][2]);

    // sub-image 9 [2][1]
    getSubImageBiLinear    ( s, cImgSub[2][1], cImgSub[2][0], cImgSub[2][2]);

    // sub-image 3  [0][3]
    getHorSubImageBiLinear ( s, cImgSub[0][3], cImgSub[0][2], cImgSub[0][0]);

    // sub-image 7  [1][3]
    getHorSubImageBiLinear ( s, cImgSub[1][3], cImgSub[0][2], cImgSub[2][0]);

    // sub-image 11 [2][3]
    getHorSubImageBiLinear ( s, cImgSub[2][3], cImgSub[2][2], cImgSub[2][0]);

    // sub-image 12 [3][0]
    getVerSubImageBiLinear ( s, cImgSub[3][0], cImgSub[2][0], cImgSub[0][0]);

    // sub-image 13 [3][1]
    getVerSubImageBiLinear ( s, cImgSub[3][1], cImgSub[2][0], cImgSub[0][2]);

    // sub-image 14 [3][2]
    getVerSubImageBiLinear ( s, cImgSub[3][2], cImgSub[2][2], cImgSub[0][2]);

    // sub-image 15 [3][3]
    getDiagSubImageBiLinear( s, cImgSub[3][3], cImgSub[0][2], cImgSub[2][0]);
  }
}
```
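The practical difference from JM8.6 is the storage layout: instead of one plane at 4x resolution, JM18.6 keeps 16 full-resolution sub-images, one per fractional phase. Under that layout a quarter-pel coordinate splits into a phase (low two bits) and an integer position (remaining bits). The sketch below illustrates the idea on a tiny fixed-size array for non-negative coordinates only; `fetch_quarter_pel`, `sub_image_index`, `SUB_H` and `SUB_W` are hypothetical, and the real encoder additionally deals with padding and clipping.

```c
#define SUB_H 2   /* toy picture height */
#define SUB_W 2   /* toy picture width  */

typedef unsigned char imgpel;

/* Index 0..15 of the sub-image that holds quarter-pel position (y4, x4),
 * numbered row by row as in the 4x4 matrix of the listing's comment. */
int sub_image_index(int y4, int x4)
{
    return ((y4 & 3) << 2) | (x4 & 3);
}

/* Fetch the sample at quarter-pel position (y4, x4):
 * the phase selects the sub-image, the integer part selects the pixel. */
imgpel fetch_quarter_pel(imgpel sub[4][4][SUB_H][SUB_W], int y4, int x4)
{
    return sub[y4 & 3][x4 & 3][y4 >> 2][x4 >> 2];
}
```

For example, the quarter-pel position (y4, x4) = (5, 2) has phase (1, 2) and integer position (1, 0), so it lives in sub-image 6. A block fetched at a fixed fractional MV reads contiguous samples from a single sub-image, instead of every fourth sample of an upsampled plane.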


Original article: http://www.cnblogs.com/TaigaCon/p/3859396.html