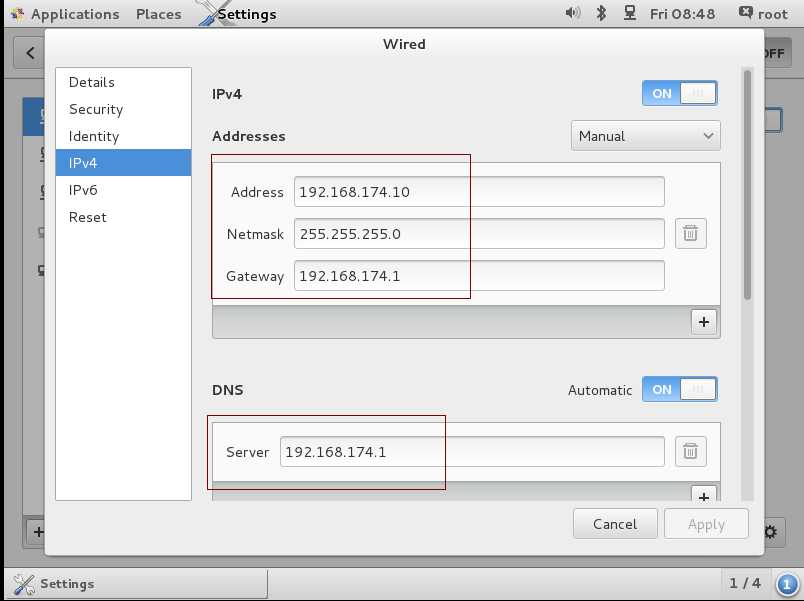

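Configure IP addresses:

Set the IP address and gateway on each machine. The screenshot below shows the settings for master; configure slave1 and slave2 the same way.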

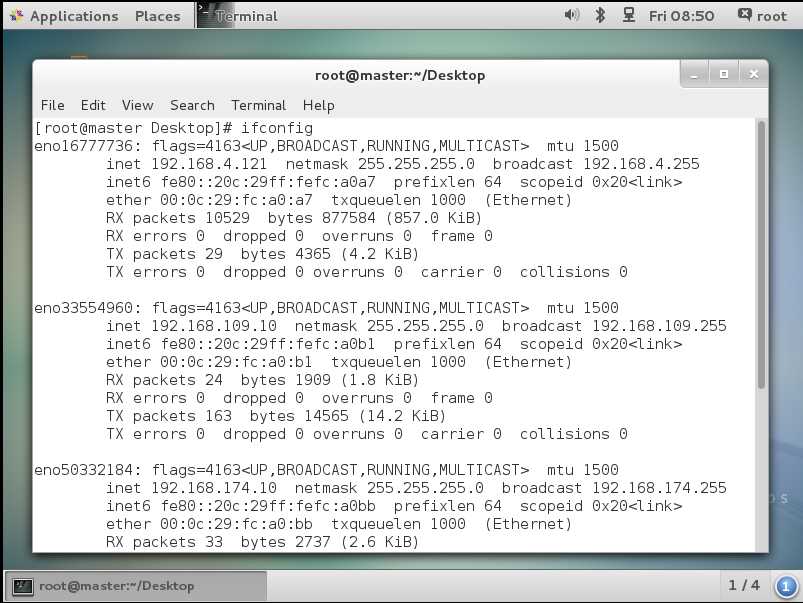

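When the configuration is done, restart networking with service network restart,

then check the IP address with ifconfig.

The cluster consists of three virtual machines: master, slave1, and slave2.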

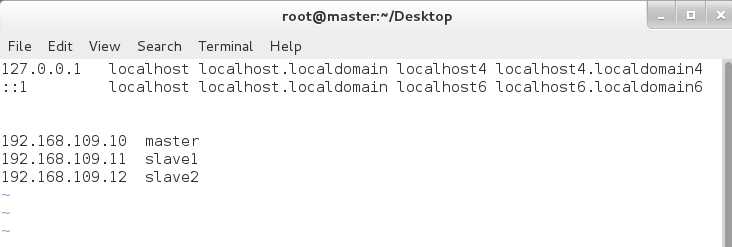

hosts mapping:

Edit the hosts file on each machine: vim /etc/hosts
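
The article does not show the entries themselves. A minimal sketch, assuming the addresses that appear in the ping and SSH output of this article (192.168.109.10 for master, 192.168.109.11 for slave1, 192.168.109.12 for slave2). The scratch file `hosts_snippet` is a hypothetical name, used so the lines can be reviewed before they are appended to /etc/hosts:

```shell
# Write the three name mappings to a scratch file first (assumed addresses,
# taken from the command output shown in this article).
hosts_snippet="$(mktemp)"
cat > "$hosts_snippet" <<'EOF'
192.168.109.10 master
192.168.109.11 slave1
192.168.109.12 slave2
EOF

# Review the result.
cat "$hosts_snippet"

# After review, append it as root on every node:
#   cat "$hosts_snippet" >> /etc/hosts
```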

Check that the mapping has taken effect

[root@master ~]# ping slave1

PING slave1 (192.168.109.11) 56(84) bytes of data.

64 bytes from slave1 (192.168.109.11): icmp_seq=1 ttl=64 time=1.87 ms

64 bytes from slave1 (192.168.109.11): icmp_seq=2 ttl=64 time=0.505 ms

64 bytes from slave1 (192.168.109.11): icmp_seq=3 ttl=64 time=0.462 ms

[root@slave1 ~]# ping master

PING master (192.168.109.10) 56(84) bytes of data.

64 bytes from master (192.168.109.10): icmp_seq=1 ttl=64 time=0.311 ms

64 bytes from master (192.168.109.10): icmp_seq=2 ttl=64 time=0.465 ms

64 bytes from master (192.168.109.10): icmp_seq=3 ttl=64 time=0.541 ms

[root@slave2 ~]# ping master

PING master (192.168.109.10) 56(84) bytes of data.

64 bytes from master (192.168.109.10): icmp_seq=1 ttl=64 time=1.08 ms

64 bytes from master (192.168.109.10): icmp_seq=2 ttl=64 time=0.463 ms

64 bytes from master (192.168.109.10): icmp_seq=3 ttl=64 time=0.443 ms

Ping every pair of machines in the same way.
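
The pairwise check can be scripted so that each node tests all host names in one pass. A small sketch; `ping_all` is a hypothetical helper name, and three packets per host is an arbitrary choice:

```shell
# Ping each host name given as an argument and report the result.
# Returns non-zero if at least one host did not answer.
ping_all() {
    failed=0
    for host in "$@"; do
        if ping -c 3 "$host" >/dev/null 2>&1; then
            echo "$host: reachable"
        else
            echo "$host: NOT reachable"
            failed=1
        fi
    done
    return "$failed"
}

# Run on each of the three nodes:
#   ping_all master slave1 slave2
```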

Disable the firewall:

Check the firewall status

[root@master ~]# service iptables status

Redirecting to /bin/systemctl status iptables.service

iptables.service

Loaded: not-found (Reason: No such file or directory)

Active: inactive (dead)

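My virtual machine does not have the iptables service installed, so no status is shown.

If it is installed, run the following as root to keep iptables from starting at boot (service iptables stop stops an instance that is already running):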

chkconfig iptables off
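
The "Redirecting to /bin/systemctl" line suggests a systemd-based system, where the active firewall is often firewalld rather than the iptables service. A hedged sketch, assuming firewalld is the installed firewall (the article does not confirm this); `disable_firewalld` is a hypothetical helper name:

```shell
# Stop firewalld now and keep it from starting at boot.
# Assumption: the node runs systemd and uses firewalld.
disable_firewalld() {
    if ! command -v systemctl >/dev/null 2>&1; then
        echo "systemctl not found; this host does not appear to use systemd" >&2
        return 1
    fi
    systemctl stop firewalld      # stop the running service
    systemctl disable firewalld   # do not start it at boot
}

# Run as root on each node, then confirm:
#   disable_firewalld
#   systemctl status firewalld
```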

Disable SELinux:

[root@master ~]# vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

# Changed to disabled:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

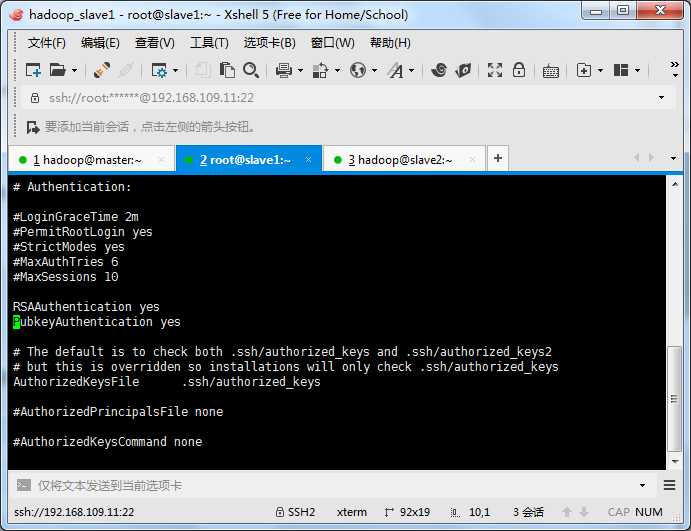
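
The same edit can be scripted. A minimal sketch, demonstrated on a scratch copy rather than the real /etc/selinux/config; `set_selinux_disabled` and `demo_cfg` are hypothetical names:

```shell
# Rewrite the SELINUX= line of the given config file to "disabled".
# The pattern does not touch SELINUXTYPE= because it requires "=" right
# after "SELINUX".
set_selinux_disabled() {
    cfg="$1"
    tmp="$(mktemp)"
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
    rm -f "$tmp"
}

# Demonstration on a scratch file.
demo_cfg="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
set_selinux_disabled "$demo_cfg"
cat "$demo_cfg"

# On each node, as root:
#   set_selinux_disabled /etc/selinux/config
```

The file is only read at boot, so the change takes effect after a restart; setenforce 0 switches a running system to permissive mode in the meantime.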

Configure SSH mutual trust:

As root, open the sshd_config file with vi /etc/ssh/sshd_config and enable the following three settings:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

Restart the service after the change

service sshd restart

Log in as the hadoop user on each of the three nodes and generate the private and public keys with the following command:

[hadoop@master ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

ee:da:84:e1:9a:e6:06:30:68:89:8e:24:5d:80:e2:de hadoop@master

The key's randomart image is:

+--[ RSA 2048]----+

| ... |

|o . |

|=... |

|*=. |

|Bo. . S |

|.o.E . + |

| . o o |

| oo + |

| ++ ..o |

+-----------------+

[hadoop@master ~]$

Merge the public keys into the authorized_keys file. On the master server, change to the /home/hadoop/.ssh directory and merge the keys over SSH:

cat id_rsa.pub>> authorized_keys

ssh hadoop@slave1 cat ~/.ssh/id_rsa.pub>> authorized_keys

ssh hadoop@slave2 cat ~/.ssh/id_rsa.pub>> authorized_keys

[hadoop@master ~]$ cd .ssh/

[hadoop@master .ssh]$ ls

id_rsa id_rsa.pub

[hadoop@master .ssh]$ cat id_rsa.pub>> authorized_keys

[hadoop@master .ssh]$ ls

authorized_keys id_rsa id_rsa.pub

[hadoop@master .ssh]$ ls

authorized_keys id_rsa id_rsa.pub known_hosts

[hadoop@master .ssh]$ ls -l

total 16

-rw-rw-r-- 1 hadoop hadoop 790 May 13 09:55 authorized_keys

-rw------- 1 hadoop hadoop 1679 May 13 09:57 id_rsa

-rw-r--r-- 1 hadoop hadoop 395 May 13 09:57 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 183 May 13 09:55 known_hosts

[hadoop@master .ssh]$ ssh hadoop@slave1 cat ~/.ssh/id_rsa.pub>> authorized_keys

hadoop@slave1's password:

[hadoop@master .ssh]$ ls -l

total 16

-rw-rw-r-- 1 hadoop hadoop 1185 May 13 09:57 authorized_keys

-rw------- 1 hadoop hadoop 1679 May 13 09:57 id_rsa

-rw-r--r-- 1 hadoop hadoop 395 May 13 09:57 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 183 May 13 09:55 known_hosts

[hadoop@master .ssh]$ ssh hadoop@slave2 cat ~/.ssh/id_rsa.pub>> authorized_keys

The authenticity of host 'slave2 (192.168.109.12)' can't be established.

ECDSA key fingerprint is 4e:f1:2c:3e:34:c1:36:75:ae:1c:a1:38:cf:45:bc:f8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave2,192.168.109.12' (ECDSA) to the list of known hosts.

hadoop@slave2's password:

[hadoop@master .ssh]$ ls -l

total 16

-rw-rw-r-- 1 hadoop hadoop 1580 May 13 09:58 authorized_keys

-rw------- 1 hadoop hadoop 1679 May 13 09:57 id_rsa

-rw-r--r-- 1 hadoop hadoop 395 May 13 09:57 id_rsa.pub

-rw-r--r-- 1 hadoop hadoop 366 May 13 09:58 known_hosts

[hadoop@master .ssh]$

Then distribute the authorized_keys file to the other nodes

[hadoop@master .ssh]$ scp authorized_keys hadoop@slave1:/home/hadoop/.ssh

hadoop@slave1's password:

authorized_keys 100% 1580 1.5KB/s 00:00

[hadoop@master .ssh]$ scp authorized_keys hadoop@slave2:/home/hadoop/.ssh

hadoop@slave2's password:

authorized_keys 100% 1580 1.5KB/s 00:00

[hadoop@master .ssh]$

On all three machines, restrict the permissions of authorized_keys as follows

chmod 400 authorized_keys
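
With its default StrictModes setting, sshd refuses key files that are writable by group or others, which is why the 664 mode seen in the ls -l output above has to be tightened. A sketch that also restricts the .ssh directory itself, run here against a scratch directory; `fix_ssh_perms` is a hypothetical helper, and 600 is used instead of 400 so the file stays editable by its owner:

```shell
# Restrict the .ssh directory and the authorized_keys file to the owner.
fix_ssh_perms() {
    ssh_dir="$1"
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Demonstration on a scratch directory with deliberately loose modes.
demo_dir="$(mktemp -d)"
touch "$demo_dir/authorized_keys"
chmod 775 "$demo_dir"
chmod 664 "$demo_dir/authorized_keys"
fix_ssh_perms "$demo_dir"
ls -ld "$demo_dir" "$demo_dir/authorized_keys"

# On each node, as the hadoop user:
#   fix_ssh_perms "$HOME/.ssh"
```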

Test whether passwordless SSH login works

[hadoop@master .ssh]$ ssh slave1

Last login: Fri May 13 10:23:09 2016 from master

[hadoop@slave1 ~]$ ssh slave2

Last login: Fri May 13 10:23:33 2016 from slave1

[hadoop@slave2 ~]$ ssh master

The authenticity of host 'master (192.168.109.10)' can't be established.

ECDSA key fingerprint is 4e:f1:2c:3e:34:c1:36:75:ae:1c:a1:38:cf:45:bc:f8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master,192.168.109.10' (ECDSA) to the list of known hosts.

Last login: Fri May 13 09:46:50 2016

[hadoop@master ~]$

The test passed.

Hadoop installation and configuration:

Extract the Hadoop archive

[root@master ~]# cd /tmp/

[root@master tmp]# ls

[root@master tmp]# tar -zxvf hadoop-2.4.1.tar.gz

[root@master tmp]# mv hadoop-2.4.1 /usr

[root@master tmp]# chown -R hadoop /usr/hadoop-2.4.1

Create subdirectories under the Hadoop directory:

[hadoop@master hadoop-2.4.1]$ mkdir -p tmp

[hadoop@master hadoop-2.4.1]$ mkdir -p name

[hadoop@master hadoop-2.4.1]$ mkdir -p data

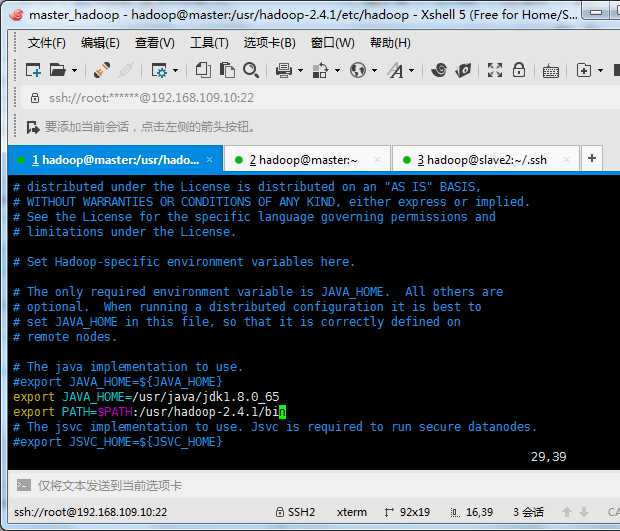

Configure the JDK and hadoop/bin paths for Hadoop

[hadoop@master ~]$ cd /usr/hadoop-2.4.1/etc/hadoop

[hadoop@master hadoop]$ vim hadoop-env.sh

Confirm that the settings take effect

[hadoop@master hadoop]$ source hadoop-env.sh

[hadoop@master hadoop]$ hadoop version

Hadoop 2.4.1

Subversion http://svn.apache.org/repos/asf/hadoop/common -r 1604318

Compiled by jenkins on 2014-06-21T05:43Z

Compiled with protoc 2.5.0

From source with checksum bb7ac0a3c73dc131f4844b873c74b630

This command was run using /usr/hadoop-2.4.1/share/hadoop/common/hadoop-common-2.4.1.jar

[hadoop@master hadoop]$

[hadoop@master hadoop-2.4.1]$ cd etc/hadoop/

[hadoop@master hadoop]$ vim core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/hadoop-2.4.1/tmp</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.groups</name>

<value>*</value>

</property>

</configuration>

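Environment script excerpt with JAVA_HOME and PATH set (the two export lines after the commented-out JAVA_HOME):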

# resolve links - $0 may be a softlink

export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_YARN_HOME/conf}"

# some Java parameters

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/usr/java/jdk1.8.0_65   # added

export PATH=$PATH:/usr/hadoop-2.4.1/bin   # added

if [ "$JAVA_HOME" != "" ]; then

#echo "run java in $JAVA_HOME"

JAVA_HOME=$JAVA_HOME

fi

if [ "$JAVA_HOME" = "" ]; then

echo "Error: JAVA_HOME is not set."

exit 1

fi

[hadoop@master hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/hadoop-2.4.1/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/hadoop-2.4.1/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
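
These values point to hdfs/name and hdfs/data, whereas the directories created earlier were name and data directly under the Hadoop directory. A sketch that creates the configured paths, assuming they should match this file; `make_hdfs_dirs` is a hypothetical helper, shown against a scratch base directory:

```shell
# Create the temp, NameNode, and DataNode directories under a base path,
# using the sub-paths configured in core-site.xml and hdfs-site.xml.
make_hdfs_dirs() {
    base="$1"
    mkdir -p "$base/tmp" "$base/hdfs/name" "$base/hdfs/data"
}

# Demonstration against a scratch directory.
demo_base="$(mktemp -d)"
make_hdfs_dirs "$demo_base"
find "$demo_base" -type d | sort

# On the cluster, as the hadoop user:
#   make_hdfs_dirs /usr/hadoop-2.4.1
```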

[hadoop@master hadoop-2.4.1]$ cd etc/hadoop/

[hadoop@master hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@master hadoop]$ vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

[hadoop@master hadoop]$ vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>

vi slaves

slave1

slave2

Create the directory, switch to root, and change its ownership; repeat these steps on every node

[hadoop@slave1 ~]$ mkdir -p /usr/hadoop-2.4.1

mkdir: cannot create directory ‘/usr/hadoop-2.4.1’: Permission denied

[hadoop@slave1 ~]$ su - root

Password:

Last login: Fri May 13 09:10:15 CST 2016 on pts/0

Last failed login: Fri May 13 11:51:04 CST 2016 from master on ssh:notty

There were 3 failed login attempts since the last successful login.

[root@slave1 ~]# mkdir -p /usr/hadoop-2.4.1

[root@slave1 ~]# chown -R hadoop /usr/hadoop-2.4.1/

[root@slave1 ~]#

scp -r /usr/hadoop-2.4.1 slave1:/usr/

scp -r /usr/hadoop-2.4.1 slave2:/usr/

[hadoop@master bin]$ ./hdfs namenode -format

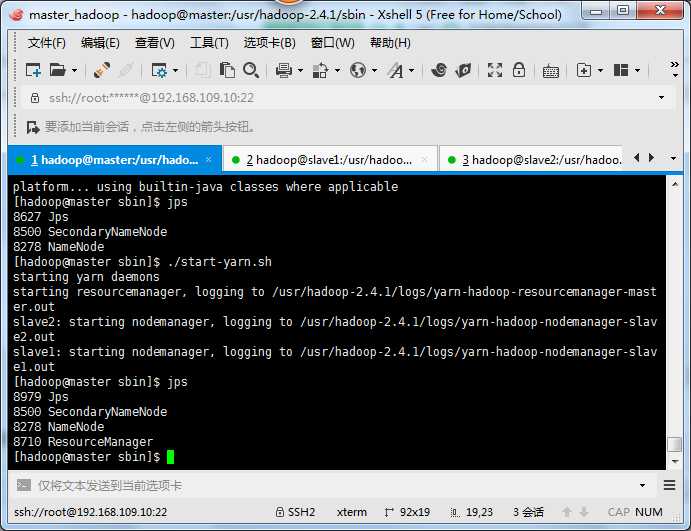

cd hadoop-2.4.1/sbin

./start-dfs.sh

./start-yarn.sh

Success.
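
One way to confirm that the daemons are running is the JDK's jps tool, which lists Java processes. A sketch, assuming the layout implied by the configuration (NameNode, SecondaryNameNode, and ResourceManager on master; DataNode and NodeManager on the slaves). `missing_daemons` is a hypothetical helper, and the sample process IDs are made up:

```shell
# Print every expected daemon name that does not appear in the given
# jps output (format: "<pid> <name>" per line).
missing_daemons() {
    jps_output="$1"
    shift
    for name in "$@"; do
        if ! printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qxF "$name"; then
            echo "$name"
        fi
    done
}

# Demonstration with made-up jps output in which ResourceManager is absent.
sample='2101 NameNode
2290 SecondaryNameNode
2610 Jps'
missing_daemons "$sample" NameNode SecondaryNameNode ResourceManager

# On master:  missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager
# On a slave: missing_daemons "$(jps)" DataNode NodeManager
```

Each name printed is a daemon that was not found; no output means all expected daemons are present.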

Problem:

[hadoop@master sbin]$ ./start-dfs.sh

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

Incorrect configuration: namenode address dfs.namenode.servicerpc-address or dfs.namenode.rpc-address is not configured.

Starting namenodes on [2016-05-13 13:39:48,187 WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable]

Error: Cannot find configuration directory: /etc/hadoop

Error: Cannot find configuration directory: /etc/hadoop

Starting secondary namenodes [2016-05-13 13:39:49,547 WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

0.0.0.0]

Error: Cannot find configuration directory: /etc/hadoop

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

Solution: export HADOOP_CONF_DIR=/usr/hadoop-2.4.1/etc/hadoop

Problem: after the computer is rebooted, the following appears

[hadoop@master hadoop]$ hadoop version

bash: hadoop: command not found...

[hadoop@master hadoop]$ vi ~/.bash_profile

PATH=$PATH:$HOME/.local/bin:$HOME/bin:/usr/hadoop-2.4.1/bin

export PATH

[hadoop@master hadoop]$ source ~/.bash_profile

[hadoop@master hadoop]$ hadoop version

Hadoop 2.4.1

Subversion http://svn.apache.org/repos/asf/hadoop/common -r 1604318

Compiled by jenkins on 2014-06-21T05:43Z

Compiled with protoc 2.5.0

From source with checksum bb7ac0a3c73dc131f4844b873c74b630

This command was run using /usr/hadoop-2.4.1/share/hadoop/common/hadoop-common-2.4.1.jar
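
The HADOOP_CONF_DIR export from the first problem can be made permanent in the same login script as PATH. A sketch that appends a line to a profile file only if it is not already there; `add_line_once` is a hypothetical helper, demonstrated on a scratch file instead of ~/.bash_profile:

```shell
# Append a line to a file unless an identical line already exists.
add_line_once() {
    file="$1"
    line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file; the repeated call adds nothing.
demo_profile="$(mktemp)"
add_line_once "$demo_profile" 'export HADOOP_CONF_DIR=/usr/hadoop-2.4.1/etc/hadoop'
add_line_once "$demo_profile" 'export PATH=$PATH:/usr/hadoop-2.4.1/bin'
add_line_once "$demo_profile" 'export PATH=$PATH:/usr/hadoop-2.4.1/bin'
cat "$demo_profile"

# For real use, target "$HOME/.bash_profile" and reload it afterwards:
#   source ~/.bash_profile
```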

Original article: http://www.cnblogs.com/xhwangspark/p/5489876.html