
From the command line, most configuration is done with crm.

This type is the global (cluster-wide) configuration. It mainly covers the following two settings, quorum and stonith:

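quorum is the minimum number of members required: the smallest number of active nodes Pacemaker needs in order to keep working. It equals (number of nodes)/2 + 1 with integer division, so it is 2 for a three-node cluster and also 2 for a two-node cluster.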

What happens when quorum cannot be reached? That is controlled by no-quorum-policy:

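ignore: keep running. In a two-node cluster, as soon as one node fails the cluster falls below quorum, so it must be set to ignore.

freeze: resources that are already running keep running, but no new resources can be started.

stop: all resources are stopped.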
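The quorum rule and the three policies can be summarized in a small simulation. This is only a sketch, assuming one vote per node and the integer division described above; quorum_needed and quorum_action are made-up helpers for illustration, not Pacemaker tools.

```shell
#!/usr/bin/env bash
# Sketch only: one vote per node, integer division, as described above.
# quorum_needed and quorum_action are hypothetical helpers, not Pacemaker tools.

# Minimum number of active nodes needed for quorum: nodes/2 + 1.
quorum_needed() {
  local total=$1
  echo $(( total / 2 + 1 ))
}

# What happens to resources for a given cluster size, active count and policy.
quorum_action() {
  local total=$1 active=$2 policy=$3
  if (( active >= $(quorum_needed "$total") )); then
    echo "run"                      # quorum held: normal operation
    return 0
  fi
  case "$policy" in
    ignore) echo "run" ;;           # carry on as if quorum were held
    freeze) echo "freeze" ;;        # keep running resources, start no new ones
    stop)   echo "stop" ;;          # stop every resource in this partition
    *)      echo "unknown policy: $policy" >&2; return 1 ;;
  esac
}

quorum_needed 3             # prints 2
quorum_needed 2             # prints 2: one failure already loses quorum
quorum_action 2 1 stop      # prints stop
quorum_action 2 1 ignore    # prints run
```

With two nodes, a single failure leaves 1 active node, fewer than the 2 required, which is why a two-node cluster is set to ignore.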

stonith stands for Shoot-The-Other-Node-In-The-Head: kill the other node with a single shot.

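When a node's heartbeat stops responding, that does not mean the machine has stopped accessing and writing data. Especially with DRBD, the unresponsive node may well write dirty data, so powering the machine off directly through a power management interface such as IPMI is the best way to protect the data.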

To set them:

crm configure property no-quorum-policy=ignore

crm configure property stonith-enabled=false

To view the configuration:

crm configure show

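Services such as IP addresses, apache and mysql are all cluster resources.

Resources are managed by resource agents.

There are several classes of resource agents; you can list them with the following command: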

# crm ra classes

lsb

ocf / heartbeat pacemaker redhat

service

stonith

upstart

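LSB stands for Linux Standard Base. These are the scripts provided by the operating system under /etc/init.d, implementing the start, stop, restart, reload, force-reload and status actions. However, these scripts depend on the operating system: each distribution implements them differently.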
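For an LSB resource, Pacemaker's monitor relies on the exit code of the script's status action, so the script must follow the LSB exit codes: 0 means running and 3 means not running. The sketch below only maps the status exit codes defined by the LSB specification to their meanings; lsb_status_meaning is a hypothetical helper, and /etc/init.d/cron in the comment is just an example path.

```shell
#!/usr/bin/env bash
# Meaning of an LSB init script's "status" exit code, per the LSB spec.
# lsb_status_meaning is a hypothetical helper for illustration.
lsb_status_meaning() {
  case "$1" in
    0) echo "running" ;;
    1) echo "dead, pid file exists" ;;
    2) echo "dead, lock file exists" ;;
    3) echo "not running" ;;
    4) echo "unknown" ;;
    *) echo "reserved or vendor-specific" ;;
  esac
}

# Example, assuming the script exists on this machine:
#   /etc/init.d/cron status >/dev/null 2>&1
#   lsb_status_meaning $?
```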

root@pacemaker01:/home/openstack# crm ra list lsb

acpid apache2 apparmor apport atd

console-setup corosync corosync-notifyd cron dbus

dns-clean friendly-recovery grub-common halt irqbalance

killprocs kmod logd networking ondemand

openhpid pacemaker pppd-dns procps rc

rc.local rcS reboot resolvconf rsync

rsyslog screen-cleanup sendsigs single ssh

sudo udev umountfs umountnfs.sh umountroot

unattended-upgrades urandom

root@pacemaker01:/home/openstack# ls /etc/init.d/

acpid corosync grub-common networking rc rsync ssh unattended-upgrades

apache2 corosync-notifyd halt ondemand rc.local rsyslog sudo urandom

apparmor cron irqbalance openhpid rcS screen-cleanup udev

apport dbus killprocs pacemaker README sendsigs umountfs

atd dns-clean kmod pppd-dns reboot single umountnfs.sh

console-setup friendly-recovery logd procps resolvconf skeleton umountroot

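OCF stands for Open Cluster Framework.

This kind of resource agent hides the differences between operating systems and provides a standard implementation. The agents live in /usr/lib/ocf/resource.d/<provider> (heartbeat, for example) and support the start, stop, status, monitor and meta-data actions.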
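To make the list of actions concrete, here is a minimal sketch of the shape of an OCF agent. It is hypothetical: the agent name demo, its state file and the trimmed meta-data XML are made up, status is omitted, and a real agent would source ocf-shellfuncs instead of defining the return codes itself (0, 1, 3 and 7 are the standard OCF values). Pacemaker passes the action name as the first argument; here the dispatch is wrapped in a function so it can be tried in an ordinary shell.

```shell
#!/usr/bin/env bash
# Hypothetical minimal OCF-style agent "demo": it only creates or removes a
# state file. Real agents get these codes from ocf-shellfuncs.
OCF_SUCCESS=0
OCF_ERR_GENERIC=1
OCF_ERR_UNIMPLEMENTED=3
OCF_NOT_RUNNING=7

STATE_FILE="${TMPDIR:-/tmp}/demo-agent.$$.state"

# Trimmed meta-data; real agents also describe parameters and add longdesc/shortdesc.
demo_meta_data() {
  cat <<'EOF'
<?xml version="1.0"?>
<resource-agent name="demo">
  <version>1.0</version>
  <parameters/>
  <actions>
    <action name="start" timeout="20s"/>
    <action name="stop" timeout="20s"/>
    <action name="monitor" timeout="20s" interval="10s"/>
    <action name="meta-data" timeout="5s"/>
  </actions>
</resource-agent>
EOF
}

demo_monitor() {
  [ -f "$STATE_FILE" ] && return $OCF_SUCCESS
  return $OCF_NOT_RUNNING
}

demo_start() {
  demo_monitor && return $OCF_SUCCESS    # already running: start is idempotent
  touch "$STATE_FILE" || return $OCF_ERR_GENERIC
  return $OCF_SUCCESS
}

demo_stop() {
  rm -f "$STATE_FILE" || return $OCF_ERR_GENERIC
  return $OCF_SUCCESS                    # stopping a stopped resource succeeds
}

# Dispatch on the action name (in a real agent: case "$1" at top level, then exit).
demo_agent() {
  case "$1" in
    start)     demo_start ;;
    stop)      demo_stop ;;
    monitor)   demo_monitor ;;
    meta-data) demo_meta_data ;;
    *)         return $OCF_ERR_UNIMPLEMENTED ;;
  esac
}
```

For example, demo_agent start followed by demo_agent monitor returns 0, and after demo_agent stop the monitor returns 7 (not running), which is what Pacemaker expects from a stopped resource.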

root@pacemaker01:/home/openstack# crm ra list ocf heartbeat

AoEtarget AudibleAlarm CTDB ClusterMon Delay Dummy

EvmsSCC Evmsd Filesystem ICP IPaddr IPaddr2

IPsrcaddr IPv6addr LVM LinuxSCSI MailTo ManageRAID

ManageVE Pure-FTPd Raid1 Route SAPDatabase SAPInstance

SendArp ServeRAID SphinxSearchDaemon Squid Stateful SysInfo

VIPArip VirtualDomain WAS WAS6 WinPopup Xen

Xinetd anything apache asterisk conntrackd db2

dhcpd drbd eDir88 ethmonitor exportfs fio

iSCSILogicalUnit iSCSITarget ids iscsi jboss ldirectord

lxc mysql mysql-proxy named nfsserver nginx

oracle oralsnr pgsql pingd portblock postfix

pound proftpd rsyncd rsyslog scsi2reservation sfex

slapd symlink syslog-ng tomcat varnish vmware

root@pacemaker01:/home/openstack# ls /usr/lib/ocf/resource.d/heartbeat/

anything Delay Filesystem iSCSILogicalUnit ManageVE pingd rsyslog Squid vmware

AoEtarget dhcpd fio iSCSITarget mysql portblock SAPDatabase Stateful WAS

apache drbd ICP jboss mysql-proxy postfix SAPInstance symlink WAS6

asterisk Dummy ids ldirectord named pound scsi2reservation SysInfo WinPopup

AudibleAlarm eDir88 IPaddr LinuxSCSI nfsserver proftpd SendArp syslog-ng Xen

ClusterMon ethmonitor IPaddr2 LVM nginx Pure-FTPd ServeRAID tomcat Xinetd

conntrackd Evmsd IPsrcaddr lxc oracle Raid1 sfex varnish

CTDB EvmsSCC IPv6addr MailTo oralsnr Route slapd VIPArip

db2 exportfs iscsi ManageRAID pgsql rsyncd SphinxSearchDaemon VirtualDomain

For example, let's look at IPaddr2, /usr/lib/ocf/resource.d/heartbeat/IPaddr2:

start calls ip_start

ip_start calls add_interface $OCF_RESKEY_ip $NETMASK $BRDCAST $NIC $IFLABEL

add_interface runs $IP2UTIL -f inet addr add $ipaddr/$netmask brd $broadcast dev $iface

Here $IP2UTIL is a shell variable. Where is it defined?

root@pacemaker01:/usr/lib/ocf/lib/heartbeat# ls

apache-conf.sh ocf-binaries ocf-rarun ocf-shellfuncs sapdb-nosha.sh

http-mon.sh ocf-directories ocf-returncodes ora-common.sh sapdb.sh

root@pacemaker01:/usr/lib/ocf/lib/heartbeat# grep -r "IP2UTIL" *

ocf-binaries:: ${IP2UTIL:=ip}

It is defined in ocf-binaries, with ip as the default, because the command may differ between operating systems.
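The line found by grep uses a common shell idiom: ":" is a no-op command, and ${IP2UTIL:=ip} assigns ip only if IP2UTIL is unset or empty, so a platform can set its own path before ocf-binaries is sourced. A small demonstration; the /sbin/ip path and the address values are only examples.

```shell
#!/usr/bin/env bash
# ":" does nothing; ${VAR:=default} assigns default only when VAR is unset
# or empty. Quoting the expansion avoids word splitting of the value.

unset IP2UTIL
: "${IP2UTIL:=ip}"
echo "$IP2UTIL"          # prints ip

IP2UTIL=/sbin/ip         # e.g. a platform that sets the full path beforehand
: "${IP2UTIL:=ip}"
echo "$IP2UTIL"          # prints /sbin/ip

# add_interface then expands to a command like this (example values):
ipaddr=192.168.100.100 netmask=24 broadcast=192.168.100.255 iface=eth0
echo "$IP2UTIL -f inet addr add $ipaddr/$netmask brd $broadcast dev $iface"
```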

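There are several types of resources: primitives, groups and clones (including stateful clones).

When configuring a primitive resource, you can look at the agent's help first:

crm ra info ocf:heartbeat:IPaddr2

It lists all the parameters you can set: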

Manages virtual IPv4 addresses (Linux specific version) (ocf:heartbeat:IPaddr2)

Parameters (* denotes required, [] the default):

ip* (string): IPv4 address

The IPv4 address to be configured in dotted quad notation, for example

"192.168.1.1".

cidr_netmask (string): CIDR netmask

broadcast (string): Broadcast address

mac (string): Cluster IP MAC address

Operations' defaults (advisory minimum):

start timeout=20s

stop timeout=20s

status timeout=20s interval=10s

monitor timeout=20s interval=10s

So we can configure the resource like this:

crm configure primitive myIP ocf:heartbeat:IPaddr2 params ip=127.0.0.99 op monitor interval=60s

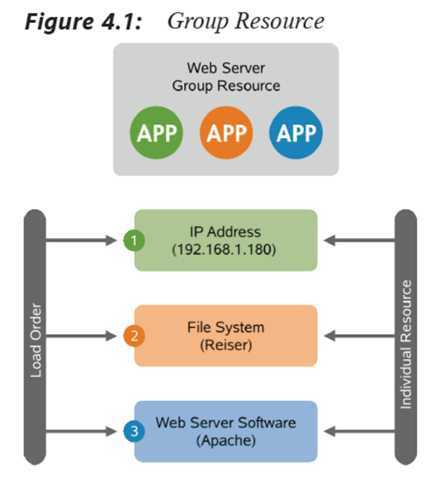

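Some resources are bound together: they either all run on the same node, or all run together on another node.

In the figure, Web Server is a group resource containing three child resources: IP Addr, Apache and Filesystem.

Groups have the following properties: the members run on the same node, start in the order they are listed, and stop in the reverse order.

To configure a group resource, first define the primitive resources: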

crm configure primitive Public-IP ocf:heartbeat:IPaddr2 params ip=1.2.3.4

crm configure primitive Email lsb:exim

Then create a group:

crm configure group mygroup Public-IP Email
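A group implies both colocation and ordering: all members run on the same node, they start in the order listed, and they stop in reverse order. The following simulation makes no cluster calls; group_start_order and group_stop_order are hypothetical helpers that just print the order Pacemaker would use for mygroup.

```shell
#!/usr/bin/env bash
# Simulation only: prints the start and stop order of a group's members.
# group_start_order / group_stop_order are hypothetical helpers.

# Members start in the order they are listed in the group.
group_start_order() {
  printf '%s\n' "$@"
}

# Members stop in the reverse order.
group_stop_order() {
  local i
  for (( i = $#; i >= 1; i-- )); do
    printf '%s\n' "${!i}"       # indirect expansion: the i-th argument
  done
}

group_start_order Public-IP Email   # Public-IP, then Email
group_stop_order  Public-IP Email   # Email, then Public-IP
```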

To change the group, for example to move Email in front of Public-IP, remove the member and add it back at the new position:

crm configure modgroup mygroup remove Email

crm configure modgroup mygroup add Email before Public-IP

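The purpose of a clone is to deploy multiple active-active copies of a resource, so that it runs on several machines at the same time.

There are three kinds of clones:

Anonymous Clone is the simplest kind: copies of the resource run in several places at once, every copy is identical, and each machine runs at most one active copy.

A read-only apache is a good example: because the content is read-only, the copies work side by side without conflicts.

For example, we create an apache resource without adding any constraint: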

crm configure primitive WebSite ocf:heartbeat:apache params configfile=/etc/apache2/apache2.conf statusurl="http://127.0.0.1/server-status" op monitor interval=1min

crm configure op_defaults timeout=240s

# crm_mon -1

Last updated: Sat Aug 2 13:24:46 2014

Last change: Sat Aug 2 13:24:38 2014 via cibadmin on pacemaker01

Stack: corosync

Current DC: pacemaker01 (1084777482) - partition with quorum

Version: 1.1.10-42f2063

3 Nodes configured

2 Resources configured

Online: [ pacemaker01 pacemaker02 pacemaker03 ]

ClusterIP (ocf::heartbeat:IPaddr2): Started pacemaker01

WebSite (ocf::heartbeat:apache): Started pacemaker03

At this point WebSite runs on pacemaker03. Let's run ps aux on each node:

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# ps aux | grep apache

root 32560 0.0 0.0 11744 900 pts/0 S+ 13:25 0:00 grep --color=auto apache

root@pacemaker02:/home/openstack# ps aux | grep apache

root 4504 0.0 0.0 11744 900 pts/0 S+ 13:25 0:00 grep --color=auto apache

root@pacemaker03:/home/openstack# ps aux | grep apache

root 4455 0.0 0.1 71300 2564 ? Ss 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4456 0.0 0.2 360464 4252 ? Sl 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4457 0.0 0.2 557136 4928 ? Sl 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

root 4592 0.0 0.0 11744 900 pts/0 S+ 13:25 0:00 grep --color=auto apache

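Now create apache-clone:

crm configure clone apache-clone WebSite

Here we rely on the defaults: clone-max defaults to the number of nodes in the cluster, and clone-node-max defaults to 1, i.e. at most one copy per node.

apache is now running on all three nodes: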

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# ps aux | grep apache

root 410 0.0 0.0 11744 900 pts/0 S+ 13:27 0:00 grep --color=auto apache

root 32754 0.0 0.1 71300 2560 ? Ss 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 32755 0.0 0.4 360464 8332 ? Sl 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 32756 0.0 0.4 491600 9008 ? Sl 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

root@pacemaker02:/home/openstack# ps aux | grep apache

root 4533 0.0 0.1 71300 2564 ? Ss 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4534 0.0 0.2 360464 4252 ? Sl 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4535 0.0 0.2 491600 4928 ? Sl 13:27 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

root 4647 0.0 0.0 11744 900 pts/0 S+ 13:27 0:00 grep --color=auto apache

root@pacemaker03:/home/openstack# ps aux | grep apache

root 4455 0.0 0.1 71300 2564 ? Ss 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4456 0.0 0.2 491600 4924 ? Sl 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

www-data 4457 0.0 0.2 622672 4928 ? Sl 13:24 0:00 /usr/sbin/apache2 -DSTATUS -f /etc/apache2/apache2.conf

root 4694 0.0 0.0 11744 900 pts/0 S+ 13:27 0:00 grep --color=auto apache

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm_mon -1

Last updated: Sat Aug 2 13:27:20 2014

Last change: Sat Aug 2 13:27:14 2014 via cibadmin on pacemaker01

Stack: corosync

Current DC: pacemaker01 (1084777482) - partition with quorum

Version: 1.1.10-42f2063

3 Nodes configured

4 Resources configured

Online: [ pacemaker01 pacemaker02 pacemaker03 ]

ClusterIP (ocf::heartbeat:IPaddr2): Started pacemaker01

Clone Set: apache-clone [WebSite]

Started: [ pacemaker01 pacemaker02 pacemaker03 ]

Moving the IPaddr2 resource ClusterIP across the three nodes shows that every node is running apache:

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker01</body>

</html>

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm resource move ClusterIP pacemaker02

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker02</body>

</html>

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm resource move ClusterIP pacemaker03

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

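Of course, we could also create three IPaddr2 resources, put each of them in a group with one of the three apache instances, and place haproxy in front for load balancing.

Any resource agent works for an anonymous clone; no special handling is needed, as long as the resource can be started.

The second kind is Globally Unique Clones.

With this kind, a resource is cloned into several copies, but each copy is different. For example, three apache instances might serve financial news, political news and entertainment news respectively.

To support globally unique clones you have to write a suitable resource agent yourself; LSB scripts, at least, cannot do it.

Copies of a clone are identified by appending a colon and a numerical offset, e.g. apache:2. This number is called the clone id.

The resource agent has to behave differently depending on the clone id.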
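As a sketch of what "behave differently depending on the clone id" can mean: Pacemaker passes the clone id to the agent as OCF_RESKEY_CRM_meta_clone, so an agent for the news example could pick a different configuration per instance. The site names, the config paths and site_for_instance are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical agent fragment: choose a per-instance config from the clone id.
# Site names and paths are invented for the news example.
SITES=(finance politics entertainment)

site_for_instance() {
  local id=${OCF_RESKEY_CRM_meta_clone:-0}
  if (( id < 0 || id >= ${#SITES[@]} )); then
    echo "no site configured for clone id $id" >&2
    return 1
  fi
  echo "/etc/apache2/sites/${SITES[$id]}.conf"
}

OCF_RESKEY_CRM_meta_clone=2 site_for_instance   # prints /etc/apache2/sites/entertainment.conf
```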

A resource with globally-unique='true' can do the following two things:

Among the stock resource agents, IPaddr2 implements this kind of clone.

In IPaddr2 we find the following code:

$IPTABLES -I INPUT -d $OCF_RESKEY_ip -i $NIC -j CLUSTERIP \

--new \

--clustermac $IF_MAC \

--total-nodes $IP_INC_GLOBAL \

--local-node $IP_INC_NO \

--hashmode $IP_CIP_HASH

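This uses the CLUSTERIP target of iptables.

Normally an IP address on a network is unique and owned by a single machine: when an ARP request asks for the IP, only one machine replies.

To implement load balancing, CLUSTERIP lets several machines own the same IP, all sharing one multicast cluster MAC address (clustermac). ARP replies for the IP carry this clustermac, so every node receives every packet sent to the IP. Each node then hashes the packet, by default on source IP and source port (hashmode sourceip-sourceport); total-nodes is the number of nodes sharing the IP, and local-node is this node's number. A node only handles the packets whose hash falls into its own bucket and silently drops the rest, so exactly one host answers each connection. Besides the iptables rule, the module also creates /proc/net/ipt_CLUSTERIP/VIP_ADDRESS, named after the virtual IP.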
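The per-packet decision can be simulated in a few lines. This only illustrates the scheme: the kernel hashes the fields chosen by hashmode with its own hash function (jhash, seeded with hash_init), while this sketch uses cksum as a stand-in, so the bucket a given connection lands in will not match a real cluster. clusterip_bucket and clusterip_accepts are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Simulation of CLUSTERIP's per-packet decision, hashmode=sourceip-sourceport.
# cksum stands in for the kernel's hash; only the scheme is the same.

# Bucket (1..total_nodes) for a packet from src_ip:src_port.
clusterip_bucket() {
  local src_ip=$1 src_port=$2 total_nodes=$3 crc
  crc=$(printf '%s:%s' "$src_ip" "$src_port" | cksum | cut -d' ' -f1)
  echo $(( crc % total_nodes + 1 ))
}

# A node accepts the packet only if the bucket equals its local_node number;
# the other nodes, which received the same frame via the shared MAC, drop it.
clusterip_accepts() {
  local src_ip=$1 src_port=$2 total_nodes=$3 local_node=$4
  [ "$(clusterip_bucket "$src_ip" "$src_port" "$total_nodes")" -eq "$local_node" ]
}

# With total_nodes=2, exactly one of local_node=1 and local_node=2 accepts
# each connection.
for port in 40001 40002 40003; do
  for node in 1 2; do
    clusterip_accepts 192.168.100.11 "$port" 2 "$node" && echo "port $port -> node $node"
  done
done
```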

First we configure clone-vip:

root@pacemaker01:~# crm configure clone clone-vip ClusterIP meta clone-max='2' clone-node-max='1' globally-unique='true'

root@pacemaker01:~# crm_mon -1

Last updated: Sat Aug 2 18:46:40 2014

Last change: Sat Aug 2 18:46:31 2014 via cibadmin on pacemaker01

Stack: corosync

Current DC: pacemaker01 (1084777482) - partition with quorum

Version: 1.1.10-42f2063

3 Nodes configured

3 Resources configured

Online: [ pacemaker01 pacemaker02 pacemaker03 ]

WebSite (ocf::heartbeat:apache): Started pacemaker02

Clone Set: clone-vip [ClusterIP] (unique)

ClusterIP:0 (ocf::heartbeat:IPaddr2): Started pacemaker01

ClusterIP:1 (ocf::heartbeat:IPaddr2): Started pacemaker03

As you can see, unlike apache, two distinct ClusterIP instances are configured, ClusterIP:0 and ClusterIP:1, each with its clone id as a suffix.

Let's look at pacemaker01 first:

root@pacemaker01:~# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:9b:d5:11 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.10/24 brd 192.168.100.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.100.100/24 brd 192.168.100.255 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe9b:d511/64 scope link

valid_lft forever preferred_lft forever

root@pacemaker01:~# iptables -nvL

Chain INPUT (policy ACCEPT 489 packets, 47504 bytes)

pkts bytes target prot opt in out source destination

0 0 CLUSTERIP all -- eth0 * 0.0.0.0/0 192.168.100.100 CLUSTERIP hashmode=sourceip-sourceport clustermac=31:B3:55:6F:CE:87 total_nodes=2 local_node=1 hash_init=0

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 708 packets, 87757 bytes)

pkts bytes target prot opt in out source destination

Then look at pacemaker03:

root@pacemaker03:/home/openstack# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:9b:d5:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.12/24 brd 192.168.100.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.100.100/24 brd 192.168.100.255 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe9b:d533/64 scope link

valid_lft forever preferred_lft forever

root@pacemaker03:/home/openstack# iptables -nvL

Chain INPUT (policy ACCEPT 610 packets, 78996 bytes)

pkts bytes target prot opt in out source destination

0 0 CLUSTERIP all -- eth0 * 0.0.0.0/0 192.168.100.100 CLUSTERIP hashmode=sourceip-sourceport clustermac=31:B3:55:6F:CE:87 total_nodes=2 local_node=2 hash_init=0

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 390 packets, 44182 bytes)

pkts bytes target prot opt in out source destination

Comparing the two iptables rules, the only difference is local_node.
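Why only local_node? In the IPaddr2 versions of this era, the agent derives --total-nodes from the clone-max meta attribute and --local-node from the clone id plus one (the IP_INC_GLOBAL and IP_INC_NO variables in the code quoted earlier). The sketch below restates that calculation; check the IPaddr2 file on your own system, since details vary between resource-agents releases. clusterip_numbers is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Restatement of IPaddr2's IP_INC_GLOBAL / IP_INC_NO calculation; verify
# against the IPaddr2 shipped with your resource-agents version.
# clusterip_numbers is a hypothetical helper.
clusterip_numbers() {
  local total=${OCF_RESKEY_CRM_meta_clone_max:-1}              # -> --total-nodes
  local local_no=$(( ${OCF_RESKEY_CRM_meta_clone:-0} + 1 ))    # -> --local-node
  echo "total_nodes=$total local_node=$local_no"
}

# The two instances of clone-vip (clone-max=2):
OCF_RESKEY_CRM_meta_clone_max=2 OCF_RESKEY_CRM_meta_clone=0 clusterip_numbers  # total_nodes=2 local_node=1
OCF_RESKEY_CRM_meta_clone_max=2 OCF_RESKEY_CRM_meta_clone=1 clusterip_numbers  # total_nodes=2 local_node=2
```

This matches the output above: ClusterIP:0 on pacemaker01 has local_node=1, and ClusterIP:1 on pacemaker03 has local_node=2.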

root@pacemaker03:/home/openstack# crm configure clone clone-apache WebSite

root@pacemaker03:/home/openstack# crm_mon -1

Last updated: Sat Aug 2 18:49:40 2014

Last change: Sat Aug 2 18:49:33 2014 via cibadmin on pacemaker03

Stack: corosync

Current DC: pacemaker01 (1084777482) - partition with quorum

Version: 1.1.10-42f2063

3 Nodes configured

5 Resources configured

Online: [ pacemaker01 pacemaker02 pacemaker03 ]

Clone Set: clone-vip [ClusterIP] (unique)

ClusterIP:0 (ocf::heartbeat:IPaddr2): Started pacemaker01

ClusterIP:1 (ocf::heartbeat:IPaddr2): Started pacemaker03

Clone Set: clone-apache [WebSite]

Started: [ pacemaker01 pacemaker02 pacemaker03 ]

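Now when we access the apache site, the reply sometimes comes from pacemaker01 and sometimes from pacemaker03, the two nodes that run a ClusterIP instance, even though apache runs on all three nodes: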

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker01</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker01</body>

</html>

root@pacemaker02:/home/openstack# curl http://192.168.100.100

<html>

<body>My Test Site - pacemaker03</body>

</html>

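The last kind of clone is the stateful clone.

Here every copy has a state. There are mainly two states, active (master) and passive (slave); unlike ordinary active/passive setups, there can be more than one active copy.

master-max: How many copies of the resource can be promoted to master status; default 1.

To support stateful clones, the resource agent needs the promote and demote actions.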
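A minimal sketch of what promote and demote add to an agent: besides start and stop, monitor must report the role, returning 0 for a running slave and 8 (OCF_RUNNING_MASTER) for a running master. This is hypothetical: the role lives in a shell variable instead of a real service. The Stateful agent in the ocf:heartbeat list above is the packaged example of this pattern.

```shell
#!/usr/bin/env bash
# Hypothetical stateful agent fragment; the role is kept in a variable.
OCF_SUCCESS=0
OCF_ERR_GENERIC=1
OCF_NOT_RUNNING=7
OCF_RUNNING_MASTER=8

ROLE=stopped   # stopped | slave | master

ms_start()   { ROLE=slave;   return $OCF_SUCCESS; }   # always start as a slave
ms_stop()    { ROLE=stopped; return $OCF_SUCCESS; }

ms_promote() {
  [ "$ROLE" = stopped ] && return $OCF_ERR_GENERIC    # must be running first
  ROLE=master
  return $OCF_SUCCESS
}

ms_demote() {
  [ "$ROLE" = stopped ] && return $OCF_ERR_GENERIC
  ROLE=slave
  return $OCF_SUCCESS
}

# monitor reports the role: 0 for a slave, 8 for a master, 7 when stopped.
ms_monitor() {
  case "$ROLE" in
    master) return $OCF_RUNNING_MASTER ;;
    slave)  return $OCF_SUCCESS ;;
    *)      return $OCF_NOT_RUNNING ;;
  esac
}
```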

A good example of a stateful resource is mysql.

The mysql resource agent builds on MySQL replication: some mysql instances are masters and others are slaves.

In the file /usr/lib/ocf/resource.d/heartbeat/mysql:

start) mysql_start

The agent's start action calls mysql_start.

mysql_start runs the following command:

${OCF_RESKEY_binary} --defaults-file=$OCF_RESKEY_config \

--pid-file=$OCF_RESKEY_pid \

--socket=$OCF_RESKEY_socket \

--datadir=$OCF_RESKEY_datadir \

--user=$OCF_RESKEY_user $OCF_RESKEY_additional_parameters \

$mysql_extra_params >/dev/null 2>&1 &

rc=$?

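Here OCF_RESKEY_binary_default="/usr/local/bin/mysqld_safe" is the command that launches the mysql process.

After launching the mysql process, the agent waits for a while and then checks ocf_is_ms, i.e. whether the resource runs in master/slave mode.

If it does, the agent first makes the local mysql read-only (set_read_only on): it does not know whether a master is already running, so it starts as a slave first.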

master_host=`echo $OCF_RESKEY_CRM_meta_notify_master_uname|tr -d " "`

if [ "$master_host" -a "$master_host" != ${HOSTNAME} ]; then

ocf_log info "Changing MySQL configuration to replicate from $master_host."

set_master

start_slave

if [ $? -ne 0 ]; then

ocf_log err "Failed to start slave"

return $OCF_ERR_GENERIC

fi

else

ocf_log info "No MySQL master present - clearing replication state"

unset_master

fi

Next, look at OCF_RESKEY_CRM_meta_notify_master_uname. It comes from Pacemaker's notify mechanism:

http://www.linux-ha.org/doc/dev-guides/_literal_notify_literal_action.html

$OCF_RESKEY_CRM_meta_notify_master_uname: node name of the node where the resource currently is in the Master role

$OCF_RESKEY_CRM_meta_notify_promote_uname: node name of the node where the resource currently is being promoted to the Master role (promote notifications only)

$OCF_RESKEY_CRM_meta_notify_demote_uname: node name of the node where the resource currently is being demoted to the Slave role (demote notifications only)

When another mysql instance has been elected master, set_master is called, which runs:

ocf_run $MYSQL $MYSQL_OPTIONS_REPL \

-e "CHANGE MASTER TO MASTER_HOST='$new_master', \

MASTER_USER='$OCF_RESKEY_replication_user', \

MASTER_PASSWORD='$OCF_RESKEY_replication_passwd' $master_params"

This points the slave at the master. start_slave then runs:

start_slave() {

ocf_run $MYSQL $MYSQL_OPTIONS_REPL \

-e "START SLAVE"

}

If there is no master yet, unset_master is called:

# Now, stop all slave activity and unset the master host

ocf_run $MYSQL $MYSQL_OPTIONS_REPL \

-e "STOP SLAVE"

if [ $? -gt 0 ]; then

ocf_log err "Error stopping rest slave threads"

exit $OCF_ERR_GENERIC

fi

ocf_run $MYSQL $MYSQL_OPTIONS_REPL \

-e "RESET SLAVE;"

if [ $? -gt 0 ]; then

ocf_log err "Failed to reset slave"

exit $OCF_ERR_GENERIC

fi

The else branch also covers the case "$master_host" == "${HOSTNAME}": that means this node itself has been chosen as master.
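The branch in mysql_start quoted above can be restated as a pure function to make the three cases explicit. This is a simplified sketch, not the agent itself: replication_action is hypothetical and only prints which of the agent's functions would be called.

```shell
#!/usr/bin/env bash
# Simplified restatement of the mysql_start branch (no mysql calls).
# Arguments: the notify master uname value and this host's name.
replication_action() {
  local master_host me=$2
  master_host=$(echo "$1" | tr -d " ")   # same whitespace stripping as the agent
  if [ -n "$master_host" ] && [ "$master_host" != "$me" ]; then
    echo "set_master $master_host; start_slave"   # another node is master
  else
    echo "unset_master"                           # no master, or this node is it
  fi
}

replication_action " pacemaker01 " pacemaker02   # replicate from pacemaker01
replication_action "" pacemaker02                # no master yet
replication_action "pacemaker02" pacemaker02     # this node is the master
```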

Finally, the agent runs $CRM_MASTER -v 1, which gives this instance a promotion score of 1. CRM_MASTER is defined as:

CRM_MASTER="${HA_SBIN_DIR}/crm_master -l reboot "

crm_master - A convenience wrapper for crm_attribute: set, update or delete a resource's promotion score

-l, --lifetime=value

Until when should the setting take affect. Valid values: reboot, forever

When this node is chosen to be master, Pacemaker calls its promote action.

The promote action calls mysql_promote, which first runs STOP SLAVE to end its role as a slave:

ocf_run $MYSQL $MYSQL_OPTIONS_REPL \

-e "STOP SLAVE"

# Set Master Info in CIB, cluster level attribute

update_data_master_status

master_info="$(get_local_ip)|$(get_master_status File)|$(get_master_status Position)"

${CRM_ATTR_REPL_INFO} -v "$master_info"

Since this node is about to become master, update_data_master_status does the following:

update_data_master_status() {

master_status_file="${HA_RSCTMP}/master_status.${OCF_RESOURCE_INSTANCE}"

$MYSQL $MYSQL_OPTIONS_REPL -e "SHOW MASTER STATUS\G" > $master_status_file

}

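This saves the master's status to a file.

CRM_ATTR_REPL_INFO="${HA_SBIN_DIR}/crm_attribute --type crm_config --name ${INSTANCE_ATTR_NAME}_REPL_INFO -s mysql_replication"

This writes the master information (IP address, binlog file and position) into the CIB.

set_read_only off

With read-only turned off, this node is now formally the master.

$CRM_MASTER -v $((${OCF_RESKEY_max_slave_lag}+1))

This gives the current master a higher promotion score, so that when the old master comes back the cluster does not switch back to it.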

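When the other slaves are notified that a new master has been promoted, they immediately start replicating from it.

In the mysql_notify function, the post-promote notification runs unset_master, set_master and start_slave.

If you want to define several resources with similar configuration, you can use resource templates: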

crm configure rsc_template BigVM ocf:heartbeat:Xen params allow_mem_management="true" op monitor timeout=60s interval=15s op stop timeout=10m op start timeout=10m

We can create a resource based on it:

crm configure primitive MyVM1 @BigVM params xmfile="/etc/xen/shared-vm/MyVM1" name="MyVM1"

Parameters from the template can also be overridden in the primitive.

A resource has the following kinds of attributes:

The first kind is Resource Options (meta attributes). In a definition they are usually set with meta; inside a resource agent they mostly appear as OCF_RESKEY_CRM_meta_XXX.
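The naming rule is mechanical: the attribute name gets the prefix OCF_RESKEY_CRM_meta_ and dashes become underscores, so clone-max arrives as OCF_RESKEY_CRM_meta_clone_max (the notify variables used by the mysql agent follow the same pattern). A small sketch; meta_env_name and meta_value are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Environment variable name under which an agent sees a meta attribute.
# meta_env_name and meta_value are hypothetical helpers.
meta_env_name() {
  echo "OCF_RESKEY_CRM_meta_${1//-/_}"   # replace every "-" with "_"
}

# Read a meta attribute inside an agent, falling back to a default.
meta_value() {
  local var
  var=$(meta_env_name "$1")
  echo "${!var:-$2}"                     # indirect expansion with default
}

meta_env_name clone-max                                          # prints OCF_RESKEY_CRM_meta_clone_max
OCF_RESKEY_CRM_meta_clone_node_max=2 meta_value clone-node-max 1   # prints 2
```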

Resources can be managed with crm_resource:

List the configured resources:

# crm_resource --list

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm_resource --list

Clone Set: clone-vip [ClusterIP] (unique)

ClusterIP:0 (ocf::heartbeat:IPaddr2): Started

ClusterIP:1 (ocf::heartbeat:IPaddr2): Started

Clone Set: clone-apache [WebSite]

Started: [ pacemaker01 pacemaker02 pacemaker03 ]

List the available OCF agents:

# crm_resource --list-agents ocf

List the available OCF agents from the linux-ha project:

# crm_resource --list-agents ocf:heartbeat

Display the current location of 'myResource':

# crm_resource --resource myResource --locate

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm_resource --resource clone-apache --locate

resource clone-apache is running on: pacemaker03

resource clone-apache is running on: pacemaker01

resource clone-apache is running on: pacemaker02

root@pacemaker01:/usr/lib/ocf/resource.d/heartbeat# crm_resource --resource clone-vip --locate

resource clone-vip is running on: pacemaker01

resource clone-vip is running on: pacemaker03

Move 'myResource' to another machine:

# crm_resource --resource myResource --move

Move 'myResource' to a specific machine:

# crm_resource --resource myResource --move --node altNode

Allow (but not force) 'myResource' to move back to its original location:

# crm_resource --resource myResource --un-move

Tell the cluster that 'myResource' failed:

# crm_resource --resource myResource --fail

Stop 'myResource' (and anything that depends on it):

# crm_resource --resource myResource --set-parameter target-role --meta --parameter-value Stopped

Tell the cluster not to manage 'myResource':

The cluster will not attempt to start or stop the resource under any circumstances.

Useful when performing maintenance tasks on a resource.

# crm_resource --resource myResource --set-parameter is-managed --meta --parameter-value false

Erase the operation history of 'myResource' on 'aNode':

The cluster will 'forget' the existing resource state (including any errors) and attempt to recover the resource.

Useful when a resource had failed permanently and has been repaired by an administrator.

# crm_resource --resource myResource --cleanup --node aNode

For example, to set meta attributes on Email:

crm_resource --meta --resource Email --set-parameter priority --property-value 100

crm_resource --meta --resource Email --set-parameter multiple-active --property-value block

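The second kind is Instance Attributes (Parameters), usually given with params. crm ra info IPaddr2 shows all of them. These parameters are passed to the resource agent as environment variables prefixed with OCF_RESKEY_,

for example OCF_RESKEY_cidr_netmask. They can also be set with crm_resource: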

crm_resource --resource Public-IP --set-parameter ip --property-value 1.2.3.4
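On the agent side, each instance attribute arrives as OCF_RESKEY_<name>; after the command above, the agent would see OCF_RESKEY_ip=1.2.3.4. A minimal sketch of reading and validating such parameters: validate_ip_params is hypothetical, and the fallback netmask of 24 is an assumption for illustration, not taken from IPaddr2. The return code 6 is the standard OCF_ERR_CONFIGURED.

```shell
#!/usr/bin/env bash
# Hypothetical agent fragment: instance attributes arrive as OCF_RESKEY_<name>.
OCF_SUCCESS=0
OCF_ERR_CONFIGURED=6

validate_ip_params() {
  # "ip" is required (marked with * in crm ra info).
  if [ -z "${OCF_RESKEY_ip:-}" ]; then
    echo "parameter 'ip' is required" >&2
    return $OCF_ERR_CONFIGURED
  fi
  # Optional parameter with an illustrative fallback value.
  local netmask=${OCF_RESKEY_cidr_netmask:-24}
  echo "$OCF_RESKEY_ip/$netmask"
  return $OCF_SUCCESS
}

OCF_RESKEY_ip=1.2.3.4 validate_ip_params                             # prints 1.2.3.4/24
OCF_RESKEY_ip=1.2.3.4 OCF_RESKEY_cidr_netmask=16 validate_ip_params  # prints 1.2.3.4/16
```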

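The third kind is Resource Operations, usually given with op. The action is typically monitor, start or stop, and the usual settings are interval, timeout and so on: every interval, the cluster calls the resource agent's monitor action to check the resource's status, and a start or stop must complete within its timeout.

requires: the condition that must hold before the operation runs: nothing, quorum or fencing.

on-fail: what to do when the operation fails: ignore, stop, restart, fence or standby.

role: run the operation only when the resource is in this role: Stopped, Started or Master. For example:

op monitor interval="300s" role="Stopped" timeout="10s"

op monitor interval="30s" timeout="10s"

This means the resource is monitored every 30s while it is running and every 300s while it is stopped.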
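A small simulation of the two monitor operations above: which interval applies depends on the role the resource is in on a given node. A role="Stopped" monitor is how Pacemaker checks that the resource has not been started on a node where it should not run. monitor_interval_for_role is a hypothetical helper; the 30s and 300s values come from the example.

```shell
#!/usr/bin/env bash
# Which monitor interval (seconds) applies, given the resource's role on a node.
# monitor_interval_for_role is a hypothetical helper.
monitor_interval_for_role() {
  case "$1" in
    Stopped) echo 300 ;;   # op monitor interval="300s" role="Stopped"
    *)       echo 30 ;;    # op monitor interval="30s" (running)
  esac
}

# Checks per hour in each case.
for role in Started Stopped; do
  echo "$role: $(( 3600 / $(monitor_interval_for_role "$role") )) checks per hour"
done
# prints "Started: 120 checks per hour" and "Stopped: 12 checks per hour"
```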

Setting Global Defaults for Operations

crm_attribute --type op_defaults --attr-name timeout --attr-value 20s

When Resources Take a Long Time to Start/Stop

There are a number of implicit operations that the cluster will always perform - start, stop and a non-recurring monitor operation (used at startup to check the resource isn't already active). If one of these is taking too long, then you can create an entry for them and simply specify a new value.

<primitive id="Public-IP" class="ocf" type="IPaddr" provider="heartbeat">

<operations>

<op id="public-ip-startup" name="monitor" interval="0" timeout="90s"/>

<op id="public-ip-start" name="start" interval="0" timeout="180s"/>

<op id="public-ip-stop" name="stop" interval="0" timeout="15min"/>

</operations>

<instance_attributes id="params-public-ip">

<nvpair id="public-ip-addr" name="ip" value="1.2.3.4"/>

</instance_attributes>

</primitive>

Multiple Monitor Operations

To tell the resource agent what kind of check to perform, you need to provide each monitor with a different value for a common parameter. The OCF standard creates a special parameter called OCF_CHECK_LEVEL for this purpose and dictates that it is made available to the resource agent without the normal OCF_RESKEY_ prefix.
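On the agent side, a monitor action can read OCF_CHECK_LEVEL (note: no OCF_RESKEY_ prefix) and do more work at higher levels, such as the 10 and 20 configured in the XML that follows. This is a hypothetical sketch: ip_monitor only echoes placeholder descriptions of each check instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical monitor that deepens its checks with OCF_CHECK_LEVEL.
# The checks are placeholders, echoed rather than executed.
OCF_SUCCESS=0

ip_monitor() {
  local level=${OCF_CHECK_LEVEL:-0}
  echo "check: address configured on interface"   # level 0: cheap check
  if [ "$level" -ge 10 ]; then
    echo "check: address answers ARP/ping"         # level 10: deeper
  fi
  if [ "$level" -ge 20 ]; then
    echo "check: end-to-end service test"          # level 20: deepest
  fi
  return $OCF_SUCCESS
}

OCF_CHECK_LEVEL=10 ip_monitor   # runs the level-0 and level-10 checks
```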

<primitive id="Public-IP" class="ocf" type="IPaddr" provider="heartbeat">

<operations>

<op id="public-ip-health-60" name="monitor" interval="60">

<instance_attributes id="params-public-ip-depth-60">

<nvpair id="public-ip-depth-60" name="OCF_CHECK_LEVEL" value="10"/>

</instance_attributes>

</op>

<op id="public-ip-health-300" name="monitor" interval="300">

<instance_attributes id="params-public-ip-depth-300">

<nvpair id="public-ip-depth-300" name="OCF_CHECK_LEVEL" value="20"/>

</instance_attributes>

</op>

</operations>

<instance_attributes id="params-public-ip">

<nvpair id="public-ip-level" name="ip" value="1.2.3.4"/>

</instance_attributes>

</primitive>

High Availability Handbook (3): Configuration


Original article: http://www.cnblogs.com/popsuper1982/p/3887611.html