
Introduction

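When running Hadoop or Spark (for example, when accessing HDFS), the warning "Unable to load native-hadoop library for your platform" keeps appearing. It means Hadoop could not load its native library, so it falls back to the built-in Java classes.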

Solutions

1. Check that the environment variables are set (if they are set and the warning persists, go to step 2):

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
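The post does not say where these exports should live. A minimal sketch, assuming bash with ~/.bashrc as the startup file ($HADOOP_HOME/etc/hadoop/hadoop-env.sh is another common place); persist_native_env is a hypothetical helper that appends each line only if it is missing:

```shell
#!/usr/bin/env bash
# Sketch: persist the two native-library exports in a shell startup file.
# Assumption: bash, with ~/.bashrc as the default target file.
persist_native_env() {
  local rc="${1:-$HOME/.bashrc}" line
  # Single quotes keep $HADOOP_HOME unexpanded, so it is resolved at login time.
  local lines=(
    'export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native'
    'export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"'
  )
  touch "$rc"
  for line in "${lines[@]}"; do
    # -q quiet, -x match the whole line, -F treat the line as a fixed string.
    grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
  done
}
```

Usage: `persist_native_env ~/.bashrc && source ~/.bashrc`. On Hadoop versions that ship the `checknative` subcommand, `hadoop checknative -a` then reports whether libhadoop was loaded.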

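2. Check whether the bundled libhadoop.so.1.0.0 (in lib/native) is 32-bit while the machine is 64-bit. If so, you need to compile Hadoop yourself and replace the files in lib/native.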
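One way to check the library's word size without extra tools is to read its ELF header: bytes 0 to 3 are the magic 7f 45 4c 46, and byte 4 (EI_CLASS) is 01 for 32-bit and 02 for 64-bit. A minimal sketch using only od and tr; elf_class is a hypothetical helper, and the library path is the default one from step 2:

```shell
#!/usr/bin/env bash
# Sketch: report whether a shared library is a 32- or 64-bit ELF file.
elf_class() {
  local lib="$1" magic class
  # First four bytes as hex, with od's spacing and newline removed.
  magic=$(od -An -tx1 -N4 "$lib" | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "not-elf"
    return 1
  fi
  # One byte at offset 4 is EI_CLASS.
  class=$(od -An -tx1 -j4 -N1 "$lib" | tr -d ' \n')
  case "$class" in
    01) echo "32-bit" ;;
    02) echo "64-bit" ;;
    *)  echo "unknown" ;;
  esac
}

# Compare with the machine architecture (x86_64 means a 64-bit machine).
lib="${HADOOP_HOME:-}/lib/native/libhadoop.so.1.0.0"
if [ -f "$lib" ]; then
  echo "libhadoop: $(elf_class "$lib"), machine: $(uname -m)"
fi
```

If the `file` utility is installed, `file $HADOOP_HOME/lib/native/libhadoop.so.1.0.0` gives the same information ("ELF 32-bit" or "ELF 64-bit").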

Build options (run from the root of the Hadoop source tree):

mvn clean package -Pdist -Dtar -Dmaven.javadoc.skip=true -DskipTests --fail-at-end -Pnative

If the build reports that it needs 'libprotoc 2.5.0', install protobuf 2.5.0 from source:

sudo apt-get install -y gcc g++ make maven cmake zlib1g-dev libcurl4-openssl-dev
curl -# -O https://protobuf.googlecode.com/files/protobuf-2.5.0.tar.gz
gunzip protobuf-2.5.0.tar.gz
tar -xvf protobuf-2.5.0.tar
cd protobuf-2.5.0
./configure --prefix=/usr
make
sudo make install
sudo ldconfig

On Ubuntu 14.04, if you get a libtool error, replace libtool in the file with /usr/bin/libtool, as shown below:

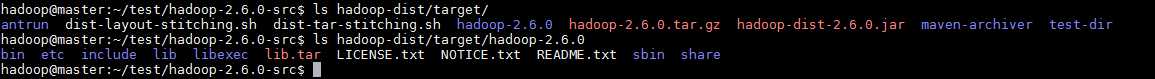
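Before rerunning mvn, it is worth confirming that the protoc on PATH is the 2.5.0 build just installed, since that is the version the build asked for. A small sketch, assuming `protoc --version` prints a single line of the form `libprotoc X.Y.Z`; protoc_version_ok is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: check that protoc reports exactly the expected version (default 2.5.0).
protoc_version_ok() {
  local out="$1" want="${2:-2.5.0}"
  [ "$out" = "libprotoc $want" ]
}

if command -v protoc >/dev/null 2>&1; then
  found=$(protoc --version)
  if protoc_version_ok "$found"; then
    echo "protoc OK: $found"
  else
    echo "found '$found', expected 'libprotoc 2.5.0'" >&2
  fi
else
  echo "protoc not found on PATH" >&2
fi
```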

The compiled files:

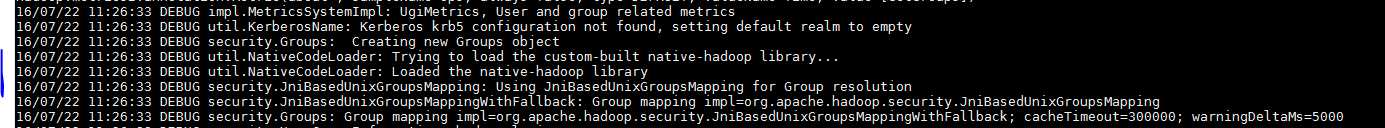
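The built libraries then replace the ones in $HADOOP_HOME/lib/native. A minimal sketch, assuming the usual Maven dist layout `<source root>/hadoop-dist/target/hadoop-<version>/lib/native`; install_native is a hypothetical helper, and backing up the old lib/native first is advisable:

```shell
#!/usr/bin/env bash
# Sketch: copy freshly built native libraries into an existing Hadoop install.
# Assumes the dist layout <src>/hadoop-dist/target/hadoop-<version>/lib/native.
install_native() {
  local src_root="$1" dest="${2:-${HADOOP_HOME:-}}" built
  if [ -z "$dest" ]; then
    echo "destination not given and HADOOP_HOME is unset" >&2
    return 1
  fi
  # Uses the first match; an unmatched glob stays literal and fails the -d test.
  for built in "$src_root"/hadoop-dist/target/hadoop-*/lib/native; do
    [ -d "$built" ] || continue
    mkdir -p "$dest/lib/native"
    # "/." copies the directory contents; -a preserves the .so symlinks.
    cp -a "$built"/. "$dest/lib/native/"
    echo "installed $built -> $dest/lib/native"
    return 0
  done
  echo "no built lib/native under $src_root/hadoop-dist/target" >&2
  return 1
}
```

Usage: `install_native ~/hadoop-src "$HADOOP_HOME"`, where ~/hadoop-src stands for wherever the source tree was built.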

Set Hadoop's log level to DEBUG to see more detail:

export HADOOP_ROOT_LOGGER=DEBUG,console
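Instead of exporting the variable for the whole session, it can be set for a single command and the output filtered down to NativeCodeLoader, the class named in the warning. A sketch; native_debug is a hypothetical helper, and it assumes the load failure details are logged by that class:

```shell
#!/usr/bin/env bash
# Sketch: run one command with Hadoop's root logger at DEBUG on the console
# and keep only the NativeCodeLoader lines.
native_debug() {
  # The prefix assignment sets HADOOP_ROOT_LOGGER for this command only.
  HADOOP_ROOT_LOGGER=DEBUG,console "$@" 2>&1 | grep NativeCodeLoader
}
```

Usage: `native_debug hadoop fs -ls /`.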

Result

References:

http://stackoverflow.com/questions/30702686/building-apache-hadoop-2-6-0-throwing-maven-error

http://stackoverflow.com/questions/19943766/hadoop-unable-to-load-native-hadoop-library-for-your-platform-warning

http://stackoverflow.com/questions/19556253/trunk-doesnt-compile-because-libprotoc-is-old-when-working-with-hadoop-under-ec

Hadoop - Unable to load native-hadoop library for your platform


Original post: http://www.cnblogs.com/loadofleaf/p/5694567.html