
Deep learning framework Torch 7: troubleshooting notes

1. Trying out the Torch version of a first CNN. The code is as follows:

```lua
-- We now have 5 steps left to do in training our first torch neural network
-- 1. Load and normalize data
-- 2. Define Neural Network
-- 3. Define Loss function
-- 4. Train network on training data
-- 5. Test network on test data.




-- 1. Load and normalize data
require 'paths'
require 'image';
if (not paths.filep("cifar10torchsmall.zip")) then
    os.execute('wget -c https://s3.amazonaws.com/torch7/data/cifar10torchsmall.zip')
    os.execute('unzip cifar10torchsmall.zip')
end
trainset = torch.load('cifar10-train.t7')
testset = torch.load('cifar10-test.t7')
classes = {'airplane', 'automobile', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck'}

print(trainset)
print(#trainset.data)

itorch.image(trainset.data[100]) -- display the 100-th image in dataset
print(classes[trainset.label[100]])

-- ignore setmetatable for now, it is a feature beyond the scope of this tutorial.
-- It sets the index operator
setmetatable(trainset,
    {__index = function(t, i)
                    return {t.data[i], t.label[i]}
                end}
);
trainset.data = trainset.data:double() -- convert the data from a ByteTensor to a DoubleTensor.

function trainset:size()
    return self.data:size(1)
end

print(trainset:size())
print(trainset[33])
itorch.image(trainset[33][1]) -- element 1 is the image, element 2 is the label

redChannel = trainset.data[{ {}, {1}, {}, {} }] -- this picks {all images, 1st channel, all vertical pixels, all horizontal pixels}
print(#redChannel)

-- TODO:fill
mean = {}
stdv = {}
for i = 1,3 do
    mean[i] = trainset.data[{ {}, {i}, {}, {} }]:mean() -- mean estimation
    print('Channel ' .. i .. ' , Mean: ' .. mean[i])
    trainset.data[{ {}, {i}, {}, {} }]:add(-mean[i]) -- mean subtraction

    stdv[i] = trainset.data[{ {}, {i}, {}, {} }]:std() -- std estimation
    print('Channel ' .. i .. ' , Standard Deviation: ' .. stdv[i])
    trainset.data[{ {}, {i}, {}, {} }]:div(stdv[i]) -- std scaling
end



-- 2. Define Neural Network
net = nn.Sequential()
net:add(nn.SpatialConvolution(3, 6, 5, 5)) -- 3 input image channels, 6 output channels, 5x5 convolution kernel
net:add(nn.ReLU())                         -- non-linearity
net:add(nn.SpatialMaxPooling(2,2,2,2))     -- A max-pooling operation that looks at 2x2 windows and finds the max.
net:add(nn.SpatialConvolution(6, 16, 5, 5))
net:add(nn.ReLU())                         -- non-linearity
net:add(nn.SpatialMaxPooling(2,2,2,2))
net:add(nn.View(16*5*5))                   -- reshapes from a 3D tensor of 16x5x5 into 1D tensor of 16*5*5
net:add(nn.Linear(16*5*5, 120))            -- fully connected layer (matrix multiplication between input and weights)
net:add(nn.ReLU())                         -- non-linearity
net:add(nn.Linear(120, 84))
net:add(nn.ReLU())                         -- non-linearity
net:add(nn.Linear(84, 10))                 -- 10 is the number of outputs of the network (in this case, 10 classes)
net:add(nn.LogSoftMax())                   -- converts the output to a log-probability. Useful for classification problems


-- 3. Let us define the Loss function
criterion = nn.ClassNLLCriterion()



-- 4. Train the neural network
trainer = nn.StochasticGradient(net, criterion)
trainer.learningRate = 0.001
trainer.maxIteration = 5 -- just do 5 epochs of training.
trainer:train(trainset)



-- 5. Test the network, print accuracy
print(classes[testset.label[100]])
itorch.image(testset.data[100])

testset.data = testset.data:double() -- convert from Byte tensor to Double tensor
for i=1,3 do -- over each image channel
    testset.data[{ {}, {i}, {}, {} }]:add(-mean[i]) -- mean subtraction
    testset.data[{ {}, {i}, {}, {} }]:div(stdv[i]) -- std scaling
end

-- for fun, print the mean and standard-deviation of example-100
horse = testset.data[100]
print(horse:mean(), horse:std())

print(classes[testset.label[100]])
itorch.image(testset.data[100])
predicted = net:forward(testset.data[100])

-- the output of the network is Log-Probabilities. To convert them to probabilities, you have to take e^x
print(predicted:exp())


for i=1,predicted:size(1) do
    print(classes[i], predicted[i])
end


-- test the accuracy
correct = 0
for i=1,10000 do
    local groundtruth = testset.label[i]
    local prediction = net:forward(testset.data[i])
    local confidences, indices = torch.sort(prediction, true) -- true means sort in descending order
    if groundtruth == indices[1] then
        correct = correct + 1
    end
end


print(correct, 100*correct/10000 .. ' % ')

class_performance = {0, 0, 0, 0, 0, 0, 0, 0, 0, 0}
for i=1,10000 do
    local groundtruth = testset.label[i]
    local prediction = net:forward(testset.data[i])
    local confidences, indices = torch.sort(prediction, true) -- true means sort in descending order
    if groundtruth == indices[1] then
        class_performance[groundtruth] = class_performance[groundtruth] + 1
    end
end


for i=1,#classes do
    print(classes[i], 100*class_performance[i]/1000 .. ' %')
end

require 'cunn';
net = net:cuda()
criterion = criterion:cuda()
trainset.data = trainset.data:cuda()
trainset.label = trainset.label:cuda()

trainer = nn.StochasticGradient(net, criterion)
trainer.learningRate = 0.001
trainer.maxIteration = 5 -- just do 5 epochs of training.


trainer:train(trainset)
```

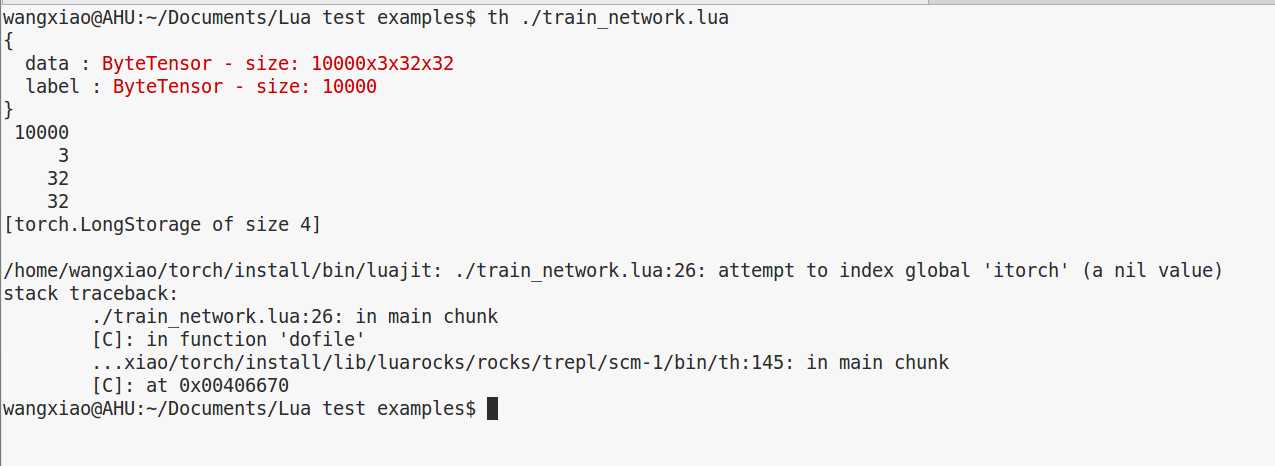

However, running it produces the following problem:

(1).

```
/home/wangxiao/torch/install/bin/luajit: ./train_network.lua:26: attempt to index global 'itorch' (a nil value)
stack traceback:
        ./train_network.lua:26: in main chunk
        [C]: in function 'dofile'
        ...xiao/torch/install/lib/luarocks/rocks/trepl/scm-1/bin/th:145: in main chunk
        [C]: at 0x00406670
wangxiao@AHU:~/Documents/Lua test examples$
```

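The main issue is `itorch`: the script was run with `th` outside an iTorch notebook, so the global `itorch` is nil and the call `itorch.image(trainset.data[100])` on line 26 fails. In addition, `require 'nn'` has to be added to fix `nn` not being recognized.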

For now, I simply commented out every line that uses `itorch`.
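As an alternative to commenting the lines out, the two fixes above can be sketched as a small guard. This is only a minimal sketch under stated assumptions: `show_image` is a hypothetical helper name (it is not part of Torch or iTorch), and the code assumes that `itorch` is simply an undefined global when the script is started with `th`, as the error message suggests.

```lua
-- Load nn explicitly; a standalone script does not get it for free.
-- pcall is used only so this sketch also runs on a machine without Torch.
local has_nn = pcall(require, 'nn')

-- show_image is a hypothetical helper, not a Torch or iTorch function.
-- It displays the image when the itorch global exists and skips it otherwise.
-- Returns true if the image was displayed, false if the call was skipped.
local function show_image(img)
  if itorch ~= nil then
    itorch.image(img)
    return true
  end
  print('itorch is not available; skipping image display')
  return false
end
```

With this in place, each `itorch.image(x)` call in the script would become `show_image(x)`, so the same file could run both under `th` and inside a notebook.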


Original post: http://www.cnblogs.com/wangxiaocvpr/p/5701358.html