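Pacemaker is a cluster resource manager. It achieves maximum availability for cluster services (also called resources) by detecting and recovering from node-level and resource-level failures, using the messaging and membership capabilities provided by your preferred cluster infrastructure (OpenAIS or Heartbeat).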

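It can handle clusters of practically any size and comes with a powerful dependency model that lets administrators express the relationships between cluster resources precisely (including ordering and location). Practically anything that can be scripted can be managed as part of a Pacemaker cluster.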

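Pacemaker features:

Detection of and recovery from host-level and application-level failures

Support for practically any redundancy configuration

Support for several cluster configuration modes at the same time

Configurable policies for handling quorum loss (when multiple machines fail)

Support for application startup/shutdown ordering

Support for applications that must or must not run on the same machine

Support for applications with multiple modes (such as master/slave)

The cluster's response to any failure or cluster state can be tested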

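Resource types:

primitive (native): a basic, primitive resource

group: a resource group

clone: a clone resource (can run on several nodes at the same time); it must first be defined as a primitive before it can be cloned. Typical uses are STONITH and cluster filesystems

master/slave: a master/slave resource, such as DRBD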

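Resource agent classes:

lsb: Linux Standard Base; service scripts that accept arguments such as start|stop|status, usually located under /etc/rc.d/init.d/, are all LSB resources

ocf: Open Cluster Framework

heartbeat: resource scripts from Heartbeat V1

stonith: used specifically for configuring STONITH devices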

172.25.85.2 server2.example.com(1024)

172.25.85.3 server3.example.com(1024)

1. server2/3:

/etc/init.d/ldirectord stop

chkconfig ldirectord off

yum install pacemaker -y

rpm -q corosync

server2:

cd /etc/corosync

cp corosync.conf.example corosync.conf

vim /etc/corosync/corosync.conf

bindnetaddr: 172.25.85.0

service {

name: pacemaker

ver: 0

}

scp /etc/corosync/corosync.conf root@172.25.85.3:/etc/corosync/
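Note that `bindnetaddr` is the network address of the interface corosync binds to, not the address of a host. As a rough sketch, it can be derived from a node address and a prefix length. The helper name `network_addr` is hypothetical, and the /24 prefix in the usage line is an assumption, since the post does not state the netmask.

```shell
# Hypothetical helper: print the IPv4 network address for ADDRESS and PREFIX.
network_addr() {
    ip=$1
    prefix=$2
    # Split the dotted quad into four positional parameters.
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    # Build the netmask from the prefix length and clear the host bits.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    net=$(( addr & mask ))
    echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))"
}

# Usage (the /24 prefix is an assumption):
#   network_addr 172.25.85.2 24
```

With a /24 prefix, both server2 and server3 yield the same value, which is why the same corosync.conf can be copied to the second node unchanged.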

/etc/init.d/corosync start

crm_verify -LV ## check whether the cluster configuration is valid

server3:

/etc/init.d/corosync start

tail -f /var/log/messages

2. server2:

yum install crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm

crm

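crm(live)configure# property no-quorum-policy=ignore ## a two-node cluster loses quorum as soon as one node fails, so ignore quorum loss

crm(live)configure# commit ## sync the change to the other nodes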
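The same property can probably be set without entering the interactive shell, since crmsh accepts a full command on its command line. A minimal sketch follows; the function name and the `CRM` override variable (for a dry run with `CRM=echo`) are illustrative conventions, not part of crmsh.

```shell
# CRM defaults to the real crm binary; set CRM=echo for a dry run (assumed convention).
CRM=${CRM:-crm}

# Set the cluster-wide no-quorum-policy property to the given value.
set_no_quorum_policy() {
    $CRM configure property no-quorum-policy="$1"
}

# Usage on a live cluster node:
#   set_no_quorum_policy ignore
```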

vim /etc/httpd/conf/httpd.conf

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>
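The ocf:heartbeat:apache agent checks Apache's health through the status page, so this block has to be present on every node that may run the website resource. A quick check might look like the sketch below; `has_server_status` is a hypothetical helper, and it only looks for the handler line rather than validating the whole block.

```shell
# Hypothetical helper: succeed if the given httpd config enables the server-status handler.
has_server_status() {
    grep -q 'SetHandler[[:space:]][[:space:]]*server-status' "$1"
}

# Usage:
#   has_server_status /etc/httpd/conf/httpd.conf && echo "status handler found"
```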

crm

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.85.100 cidr_netmask=32 op monitor interval=30s

crm(live)configure# primitive website ocf:heartbeat:apache params configfile=/etc/httpd/conf/httpd.conf op monitor interval=60s

crm(live)configure# commit

crm(live)configure# colocation website-with-ip inf: website vip ## keep these two resources on the same host

crm(live)configure# commit

crm(live)configure# delete website-with-ip

crm(live)configure# commit

crm(live)configure# group apache vip website ## create a resource group named apache

crm(live)configure# commit
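The resource definitions in this step could also be kept in a script instead of being typed interactively. The sketch below only prints the statements used in this post. `web_cluster_config` is a hypothetical helper name, and feeding its output to `crm configure` on standard input is an assumption about how you would apply it, so review the output first.

```shell
# Hypothetical helper: print crm configure statements for the VIP, the website and their group.
web_cluster_config() {
    vip_addr=$1
    httpd_conf=$2
    printf '%s\n' \
        "primitive vip ocf:heartbeat:IPaddr2 params ip=$vip_addr cidr_netmask=32 op monitor interval=30s" \
        "primitive website ocf:heartbeat:apache params configfile=$httpd_conf op monitor interval=60s" \
        "group apache vip website" \
        "commit"
}

# Usage (review the output before applying it):
#   web_cluster_config 172.25.85.100 /etc/httpd/conf/httpd.conf
```

A group already implies that its members run on the same node and start in the listed order, which is why the separate colocation constraint is deleted before the group is created.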

crm(live)node# show

server2.example.com: normal

server3.example.com: normal

crm(live)node# standby server2.example.com

crm(live)resource# stop apache

crm(live)resource# show

Resource Group: apache

vip (ocf::heartbeat:IPaddr2): Stopped

website (ocf::heartbeat:apache): Stopped

crm(live)configure# delete apache

crm(live)configure# delete website

crm(live)configure# commit

[Note]

To delete a resource, stop it first and then delete it:

crm(live)resource# stop website

crm(live)configure# delete website
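The two steps can be wrapped in a small function so the stop is never forgotten. This is only a sketch: `delete_resource` and the `CRM` override variable (for a dry run with `CRM=echo`) are hypothetical names, not part of crmsh.

```shell
# CRM defaults to the real crm binary; set CRM=echo for a dry run (assumed convention).
CRM=${CRM:-crm}

# Stop the named resource, then remove it from the configuration.
delete_resource() {
    $CRM resource stop "$1" || return 1
    $CRM configure delete "$1"
}

# Usage on a live cluster node:
#   delete_resource website
```

Stopping a resource is asynchronous, so on a real cluster the delete may still be refused if the resource has not finished stopping; checking crm_mon between the two steps is the safer route.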

server3:

yum install crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm

vim /etc/httpd/conf/httpd.conf

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>

crm_mon ## monitor the cluster status

3. On the physical host:

systemctl start fence_virtd.service

server2:

stonith_admin -I

stonith_admin -M -a fence_xvm

crm

crm(live)configure# property stonith-enabled=true

primitive vmfence stonith:fence_xvm params pcmk_host_map="server2.example.com:ricci1;server3.example.com:heartbeat1" op monitor interval=1min

primitive sqldata ocf:linbit:drbd params drbd_resource=sqldata op monitor interval=30s

ms sqldataclone sqldata meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

crm(live)configure# commit
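In the vmfence definition, `pcmk_host_map` pairs each cluster node name with the name the fence device knows it by (here, the virtual machine name), with pairs separated by semicolons. A minimal sketch for assembling that string from individual pairs; `build_host_map` is a hypothetical helper.

```shell
# Hypothetical helper: join node:vmname pairs with ';' for use as pcmk_host_map.
build_host_map() {
    map=""
    for pair in "$@"; do
        # Prefix with the existing map and a ';' only when the map is non-empty.
        map="${map:+$map;}$pair"
    done
    echo "$map"
}

# Usage with the pairs from this post:
#   build_host_map server2.example.com:ricci1 server3.example.com:heartbeat1
```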

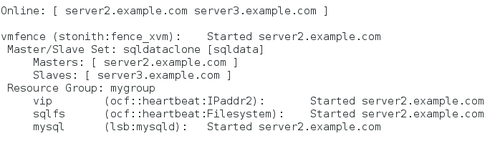

crm_mon

primitive sqlfs ocf:heartbeat:Filesystem params device=/dev/drbd1 directory=/var/lib/mysql fstype=ext4

colocation sqlfs_on_drbd inf: sqlfs sqldataclone:Master

order sqlfs-after-sqldata inf: sqldataclone:promote sqlfs:start

crm(live)configure# commit

crm_mon

crm(live)configure# primitive mysql lsb:mysqld op monitor interval=30s

crm(live)configure# group mygroup vip sqlfs mysql

INFO: resource references in colocation:sqlfs_on_drbd updated

INFO: resource references in order:sqlfs-after-sqldata updated

crm(live)configure# commit

crm_mon

crm(live)resource# refresh mygroup

server3:

crm_mon ## monitor the node status

Original article: http://11713145.blog.51cto.com/11703145/1834577