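crawler4j is an open-source web crawler implemented in Java. It offers a simple, easy-to-use interface that lets you set up a multithreaded web crawler in a few minutes. The example below, combined with jsoup, collects news headlines from Sohu News (http://news.sohu.com/).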

The whole process takes only two steps:

Step 1: Build the core of the crawler

    /**
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     *
     * Author:eliteqing@foxmail.com
     *
     */
    package edu.uci.ics.crawler4j.tests;

    import java.util.Set;
    import java.util.regex.Pattern;

    import edu.uci.ics.crawler4j.crawler.Page;
    import edu.uci.ics.crawler4j.crawler.WebCrawler;
    import edu.uci.ics.crawler4j.parser.HtmlParseData;
    import edu.uci.ics.crawler4j.url.WebURL;

    /**
     * @date 2016-08-20 11:52:13
     * @since JDK 1.8
     */
    public class MyCrawler extends WebCrawler {

        // URL filter: skip links to static resources (stylesheets, scripts, images, audio, archives).
        private static final Pattern FILTERS =
                Pattern.compile(".*(\\.(css|js|gif|jpg|png|mp3|zip|gz))$");

        /**
         * Decides whether a discovered URL should be crawled: it must not be
         * a filtered resource and must belong to http://news.sohu.com/.
         */
        @Override
        public boolean shouldVisit(Page referringPage, WebURL url) {
            String href = url.getURL().toLowerCase();
            return !FILTERS.matcher(href).matches() && href.startsWith("http://news.sohu.com/");
        }

        /**
         * This function is called when a page is fetched and ready to be processed
         * by your program.
         */
        @Override
        public void visit(Page page) {
            String url = page.getWebURL().getURL();
            logger.info("URL: " + url);

            if (page.getParseData() instanceof HtmlParseData) {
                HtmlParseData htmlParseData = (HtmlParseData) page.getParseData();
                String text = htmlParseData.getText();
                String html = htmlParseData.getHtml();
                Set<WebURL> links = htmlParseData.getOutgoingUrls();

                logger.debug("Text length: " + text.length());
                logger.debug("Html length: " + html.length());
                logger.debug("Number of outgoing links: " + links.size());
                // The page title is the news headline we are collecting.
                logger.info("Title: " + htmlParseData.getTitle());
            }
        }

    }
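To check which links the `shouldVisit` rule lets through, the same two conditions can be tried outside crawler4j. The following is a minimal standalone sketch using only `java.util.regex`; the class name `UrlFilterDemo` and the sample URLs are hypothetical and exist only for illustration, and the method takes a plain `String` rather than a `WebURL`.

```java
import java.util.regex.Pattern;

// Hypothetical helper class: reproduces the filter logic of MyCrawler.shouldVisit
// on plain strings so it can be tried without crawler4j on the classpath.
final class UrlFilterDemo {

    // Same extension filter as in MyCrawler: matches URLs that end in a static-resource suffix.
    private static final Pattern FILTERS =
            Pattern.compile(".*(\\.(css|js|gif|jpg|png|mp3|zip|gz))$");

    private static final String ALLOWED_PREFIX = "http://news.sohu.com/";

    // Returns true when the URL is on the allowed site and is not a filtered resource.
    static boolean shouldVisit(String url) {
        String href = url.toLowerCase();
        return !FILTERS.matcher(href).matches() && href.startsWith(ALLOWED_PREFIX);
    }

    public static void main(String[] args) {
        // Sample URLs are made up for the demonstration.
        String[] samples = {
            "http://news.sohu.com/index.shtml",
            "http://news.sohu.com/css/style.css",
            "http://sports.sohu.com/index.shtml",
            "http://news.sohu.com/style.css?v=1"
        };
        for (String sample : samples) {
            System.out.println(sample + " -> " + shouldVisit(sample));
        }
    }
}
```

Because the pattern is anchored at the end of the string, a resource URL that carries a query string (the last sample) is not caught by the extension filter and is still accepted.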

Step 2: Build the crawler controller

    /**
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     *
     * Author:eliteqing@foxmail.com
     *
     */
    package edu.uci.ics.crawler4j.tests;

    import edu.uci.ics.crawler4j.crawler.CrawlConfig;
    import edu.uci.ics.crawler4j.crawler.CrawlController;
    import edu.uci.ics.crawler4j.fetcher.PageFetcher;
    import edu.uci.ics.crawler4j.robotstxt.RobotstxtConfig;
    import edu.uci.ics.crawler4j.robotstxt.RobotstxtServer;

    /**
     * @date 2016-08-20 11:55:56
     * @since JDK 1.8
     */
    public class MyController {

        /**
         * @param args
         * @since JDK 1.8
         */
        public static void main(String[] args) {
            // Folder for the crawler's local embedded database (Berkeley DB).
            String crawlStorageFolder = "data/crawl/root";
            // Number of crawler threads to run concurrently.
            int numberOfCrawlers = 3;

            CrawlConfig config = new CrawlConfig();
            config.setCrawlStorageFolder(crawlStorageFolder);

            /*
             * Instantiate the controller for this crawl.
             */
            PageFetcher pageFetcher = new PageFetcher(config);
            RobotstxtConfig robotstxtConfig = new RobotstxtConfig();
            RobotstxtServer robotstxtServer = new RobotstxtServer(robotstxtConfig, pageFetcher);
            CrawlController controller;
            try {
                controller = new CrawlController(config, pageFetcher, robotstxtServer);

                /*
                 * For each crawl, you need to add some seed urls. These are the
                 * first URLs that are fetched and then the crawler starts following
                 * links which are found in these pages
                 */
                controller.addSeed("http://news.sohu.com/");

                /*
                 * Start the crawl. This is a blocking operation, meaning that your
                 * code will reach the line after this only when crawling is
                 * finished.
                 */
                controller.start(MyCrawler.class, numberOfCrawlers);
            } catch (Exception e) {
                e.printStackTrace();
            }

        }

    }
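The comments in the controller describe the crawl as "fetch the seed URLs first, then follow the links found in those pages". The idea can be sketched in plain Java as a breadth-first walk over an in-memory link graph. This is only a conceptual illustration under stated assumptions, not how crawler4j is implemented internally: the class `SeedCrawlSketch`, the `crawl` method, and the link map are all hypothetical, and no page is actually downloaded.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of seed-driven crawling over a fake, in-memory "web".
final class SeedCrawlSketch {

    // Visits pages breadth-first from the seed, following only links that start
    // with allowedPrefix and visiting each URL at most once.
    static List<String> crawl(String seed, Map<String, List<String>> links, String allowedPrefix) {
        List<String> visited = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Deque<String> frontier = new ArrayDeque<>();
        frontier.add(seed);
        seen.add(seed);
        while (!frontier.isEmpty()) {
            String url = frontier.poll();
            visited.add(url); // stands in for fetching and processing the page
            for (String next : links.getOrDefault(url, Collections.<String>emptyList())) {
                // Set.add returns false for URLs that were already queued.
                if (next.startsWith(allowedPrefix) && seen.add(next)) {
                    frontier.add(next);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        // Made-up link graph: page URL -> outgoing links found on that page.
        Map<String, List<String>> links = new HashMap<>();
        links.put("http://news.sohu.com/",
                Arrays.asList("http://news.sohu.com/a.shtml", "http://www.example.com/"));
        links.put("http://news.sohu.com/a.shtml",
                Arrays.asList("http://news.sohu.com/", "http://news.sohu.com/b.shtml"));
        System.out.println(crawl("http://news.sohu.com/", links, "http://news.sohu.com/"));
    }
}
```

In the real crawler the prefix check corresponds to `shouldVisit`, and the frontier is the part that crawler4j persists under `crawlStorageFolder`.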

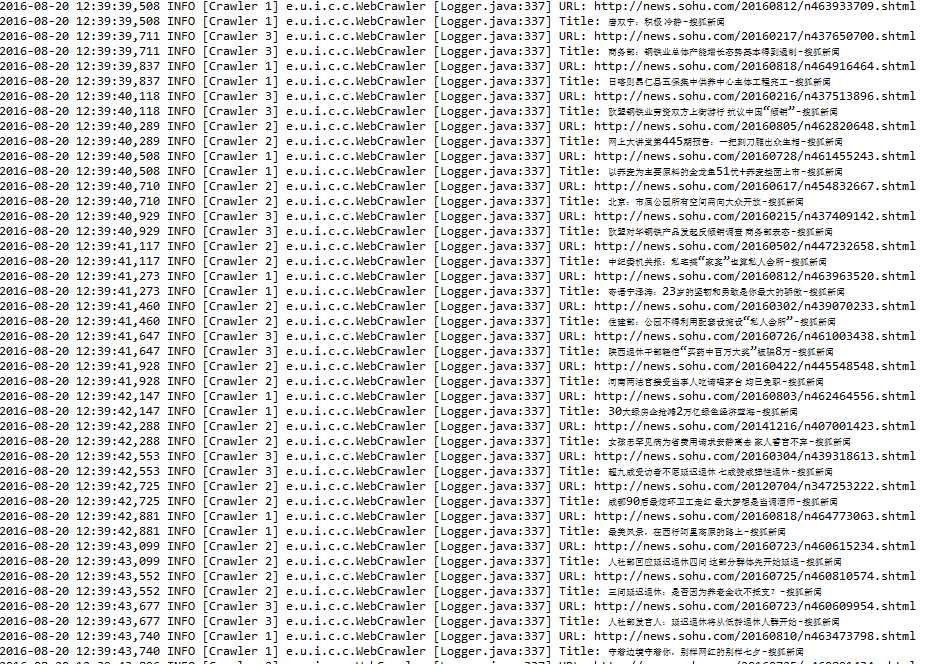

采集结果展示:

crawler4j Source Code Study (1): A Crawler for Sohu News Headlines

Original article: http://www.cnblogs.com/liinux/p/5790207.html