
Building a RAID5 array

Step 1: Add four disks, boot the machine, and check that they are detected (omitted here)

Step 2: Partition each of the four disks with fdisk; the result is shown in the figure below:

Step 3: Create the RAID5 array with mdadm

[root@localhost ~]# mdadm -Cv /dev/md5 -l 5 -n 3 -x 1 /dev/sd[b-e]1

Note: -n 3 builds the array from three active disks, and -x 1 adds one hot spare (RAID5 needs at least three disks).
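As a rough check on the numbers mdadm reports, the usable size of a RAID5 array is the per-member size times one less than the number of active members, because one member's worth of space holds parity; the hot spare adds nothing until it replaces a failed disk. The sketch below only does that arithmetic. The function name raid5_usable_kib is made up for illustration, and the per-member size is the value mdadm prints when it creates this array (size set to 20954112K).

```shell
# Usable RAID5 capacity: (active members - 1) * per-member size.
# Hot spares are not counted; they hold no data until they take over.
raid5_usable_kib() {
    members=$1        # number of active members (the -n value)
    member_kib=$2     # usable size of one member, in KiB
    if [ "$members" -lt 3 ]; then
        echo "this sketch expects at least 3 active members" >&2
        return 1
    fi
    echo $(( (members - 1) * member_kib ))
}

# Three active members of 20954112 KiB each, as in this walkthrough
raid5_usable_kib 3 20954112
```

This prints 41908224, which matches the block count that /proc/mdstat shows for md5.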

[root@localhost ~]# watch cat /proc/mdstat # view the array status

[root@localhost ~]# mdadm -Cv /dev/md5 -l 5 -n 3 -x 1 /dev/sd[b-e]1

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20954112K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[======>..............] recovery = 31.9% (6700756/20954112) finish=2.7min speed=85916K/sec

unused devices: <none>
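Instead of watching the progress bar by eye, the percentage can be pulled out of the /proc/mdstat text. The following is a minimal sketch, assuming the line format shown above; mdstat_progress is a hypothetical helper name, and it reads the text from standard input so it can be tried on a saved sample.

```shell
# Print the rebuild percentage found in /proc/mdstat-style text on stdin.
# Prints nothing when no recovery or resync line is present.
mdstat_progress() {
    sed -n -E 's/.*(recovery|resync) *= *([0-9.]+)%.*/\2/p'
}

# Sample taken from the output above
mdstat_progress <<'EOF'
md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]
      41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]
      [======>..............]  recovery = 31.9% (6700756/20954112) finish=2.7min speed=85916K/sec
EOF
```

For the sample this prints 31.9. On a live system the input would be /proc/mdstat itself: mdstat_progress < /proc/mdstat.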

[root@localhost ~]# watch cat !$

watch cat /proc/mdstat

[root@localhost ~]# mdadm -Dsv >> /etc/mdadm.conf

[root@localhost ~]# cat !$

cat /etc/mdadm.conf

ARRAY /dev/md5 level=raid5 num-devices=3 metadata=1.2 spares=2 name=localhost.localdomain:5 UUID=5f73c597:481d2f9e:df90d7de:1392d60a

devices=/dev/sdb1,/dev/sdc1,/dev/sdd1,/dev/sde1
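The ARRAY line above records spares=2, although the array was created with a single hot spare (-x 1). The most likely reason is that the file was written while the initial build was still running, when mdadm -D also reports /dev/sdd1 as "spare rebuilding". A minimal sketch for comparing the recorded value with the (S) markers in /proc/mdstat follows; both function names are hypothetical, and both read their input from stdin.

```shell
# Count members flagged (S), i.e. hot spares, in /proc/mdstat-style text.
# Fed the whole of /proc/mdstat, it counts spares across all arrays.
mdstat_spare_count() {
    grep -o '(S)' | wc -l | tr -d ' '
}

# Extract the spares=N value from an mdadm.conf ARRAY line.
conf_spare_count() {
    sed -n 's/.*spares=\([0-9][0-9]*\).*/\1/p'
}

# Samples taken from the output above
echo 'md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]' | mdstat_spare_count
echo 'ARRAY /dev/md5 level=raid5 num-devices=3 metadata=1.2 spares=2' | conf_spare_count
```

The samples print 1 and 2. If the two numbers still differ once the rebuild has finished, regenerating the ARRAY line or correcting spares= by hand is probably the simplest fix.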

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:09:54 2016

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 68% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 11

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 spare rebuilding /dev/sdd1

3 8 65 - spare /dev/sde1

[root@localhost ~]#

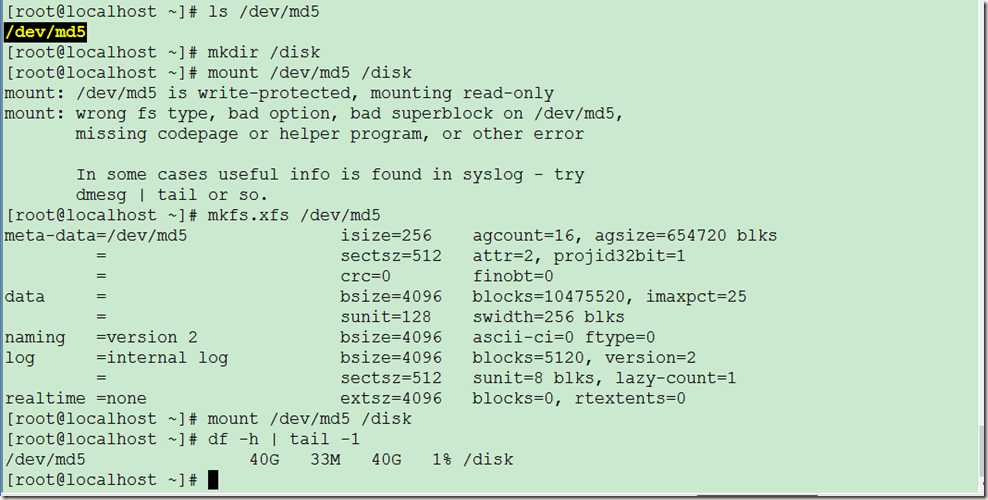

Step 4: Format and mount

[root@localhost ~]# ls /dev/md5

[root@localhost ~]# mkdir /disk

[root@localhost ~]# mount /dev/md5 /disk # fails because the array has not been formatted yet

[root@localhost ~]# mkfs.xfs /dev/md5

[root@localhost ~]# mount /dev/md5 /disk

[root@localhost ~]# df -h | tail -1

Step 5: Mount automatically at boot

Same procedure as for RAID1.
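The RAID1 part of this series is not reproduced here. A common way to mount at boot is an entry in /etc/fstab, and the sketch below assumes that approach. add_fstab_entry is a hypothetical helper, and the "defaults 0 0" fields are an assumption rather than the author's exact settings. The fstab path is a parameter so that the function can be tried on a copy first.

```shell
# Append an fstab entry unless the mount point is already listed.
# $1 = fstab file, $2 = device, $3 = mount point, $4 = filesystem type
add_fstab_entry() {
    fstab=$1; dev=$2; mnt=$3; fstype=$4
    # awk exits 0 when a non-comment line already uses this mount point
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
        echo "$mnt is already listed in $fstab"
    else
        printf '%s %s %s defaults 0 0\n' "$dev" "$mnt" "$fstype" >> "$fstab"
    fi
}

# Example for this walkthrough (run as root):
#   add_fstab_entry /etc/fstab /dev/md5 /disk xfs
```

Running mount -a afterwards confirms that the new entry mounts cleanly before the next reboot.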

Step 6: Simulating failures and repairing them

Repairing after one disk fails:

[root@localhost ~]# echo "hello world! " >> /disk/a.txt # write a test file

[root@localhost ~]# cat !$

cat /disk/a.txt

hello world!

[root@localhost ~]# mdadm /dev/md5 -f /dev/sdb1 # simulate a disk failure

mdadm: set /dev/sdb1 faulty in /dev/md5

[root@localhost ~]# mdadm -D /dev/md5 # check the array status

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:17:34 2016

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 2% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 21

Number Major Minor RaidDevice State

3 8 65 0 spare rebuilding /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[=>...................] recovery = 5.9% (1236864/20954112) finish=7.1min speed=45809K/sec

unused devices: <none>

[root@localhost ~]# cat /disk/a.txt # verify that the data is still intact

hello world!

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[=========>...........] recovery = 48.8% (10229276/20954112) finish=4.4min speed=40192K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:26:30 2016

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 48

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

[root@localhost ~]# df | grep disk

/dev/md5 41881600 33316 41848284 1% /disk

[root@localhost ~]# umount /disk

[root@localhost ~]# mdadm -S /dev/md5

mdadm: stopped /dev/md5

[root@localhost ~]# mdadm -zero-superblock /dev/sdb1 # typo: the long option needs two dashes

mdadm: -z does not set the mode, and so cannot be the first option.

[root@localhost ~]# mdadm --zero-superblock /dev/sdb1

[root@localhost ~]# mdadm -As /dev/md5

mdadm: /dev/md5 has been started with 3 drives.

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:30:34 2016

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 48

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

[root@localhost ~]# mdadm /dev/md5 -a /dev/sdb1 # a disk can be added back only once the rebuild has finished

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:31:40 2016

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 49

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

5 8 17 - spare /dev/sdb1
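The repair above stops the array, wipes the old superblock, and reassembles before adding the disk back. mdadm's manage mode also has a --remove (-r) option that detaches a faulty member while the array stays online, so a hedged alternative looks like the sketch below. The run and replace_member helpers are hypothetical, and by default the script only prints the commands; it runs them only when DRY_RUN=0 is set.

```shell
# Print each command instead of running it unless DRY_RUN is set to 0,
# so the sequence can be reviewed before it touches a real array.
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# Swap a faulty member back in without stopping the array.
# $1 = md device, $2 = member partition already marked faulty
replace_member() {
    md=$1; part=$2
    run mdadm "$md" --remove "$part" || return 1      # detach the faulty member
    run mdadm --zero-superblock "$part" || return 1   # wipe its old RAID metadata
    run mdadm "$md" --add "$part" || return 1         # return it as a hot spare
}

replace_member /dev/md5 /dev/sdb1
```

In dry-run mode this prints the three mdadm commands in order. Whether to keep the array online during the swap is a judgment call; stopping it first, as the walkthrough does, is the more conservative route.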

Simulating two disk failures

[root@localhost ~]# mdadm /dev/md5 -f /dev/sdc1

mdadm: set /dev/sdc1 faulty in /dev/md5

[root@localhost ~]# mount -a

[root@localhost ~]# cat /disk/a.txt

hello world!

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:32:59 2016

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 4% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 53

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

5 8 17 1 spare rebuilding /dev/sdb1

4 8 49 2 active sync /dev/sdd1

1 8 33 - faulty /dev/sdc1

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdb1[5] sde1[3] sdd1[4] sdc1[1](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [U_U]

[=>...................] recovery = 5.2% (1091216/20954112) finish=10.8min speed=30344K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdb1[5] sde1[3] sdd1[4] sdc1[1](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [U_U]

[=============>.......] recovery = 66.8% (13998080/20954112) finish=3.9min speed=29308K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdb1[5] sde1[3] sdd1[4] sdc1[1](F)

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mdadm /dev/md5 -f /dev/sde1

mdadm: set /dev/sde1 faulty in /dev/md5

[root@localhost ~]# cat /disk/a.txt

hello world!

[root@localhost ~]# clear

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:45:57 2016

State : clean, degraded

Active Devices : 2

Working Devices : 2

Failed Devices : 2

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 72

Number Major Minor RaidDevice State

0 0 0 0 removed

5 8 17 1 active sync /dev/sdb1

4 8 49 2 active sync /dev/sdd1

1 8 33 - faulty /dev/sdc1

3 8 65 - faulty /dev/sde1

[root@localhost ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel-root 10475520 3307104 7168416 32% /

devtmpfs 485144 0 485144 0% /dev

tmpfs 500680 88 500592 1% /dev/shm

tmpfs 500680 13528 487152 3% /run

tmpfs 500680 0 500680 0% /sys/fs/cgroup

/dev/sr0 3947824 3947824 0 100% /mnt

/dev/sda1 201388 127728 73660 64% /boot

tmpfs 100136 16 100120 1% /run/user/0

/dev/md5 41881600 33316 41848284 1% /disk

[root@localhost ~]# umount /disk

[root@localhost ~]# mdadm -S /dev/md5

mdadm: stopped /dev/md5

[root@localhost ~]# mdadm --zero-superblock /dev/sdc1 /dev/sde1

[root@localhost ~]# mdadm -As /dev/md5

mdadm: /dev/md5 has been started with 2 drives (out of 3).

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:46:45 2016

State : clean, degraded

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 74

Number Major Minor RaidDevice State

0 0 0 0 removed

5 8 17 1 active sync /dev/sdb1

4 8 49 2 active sync /dev/sdd1

[root@localhost ~]# mdadm /dev/md5 -a /dev/sdc1

mdadm: added /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:47:47 2016

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 0% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 76

Number Major Minor RaidDevice State

3 8 33 0 spare rebuilding /dev/sdc1

5 8 17 1 active sync /dev/sdb1

4 8 49 2 active sync /dev/sdd1

[root@localhost ~]# mdadm /dev/md5 -a /dev/sde1

mdadm: /dev/md5 has failed so using --add cannot work and might destroy

mdadm: data on /dev/sde1. You should stop the array and re-assemble it.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdc1[3] sdb1[5] sdd1[4]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[>....................] recovery = 2.7% (571648/20954112) finish=10.8min speed=31424K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdc1[3] sdb1[5] sdd1[4]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[========>............] recovery = 43.0% (9018880/20954112) finish=6.1min speed=32130K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdc1[3] sdb1[5] sdd1[4]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[================>....] recovery = 80.7% (16911360/20954112) finish=1.8min speed=35542K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdc1[3] sdb1[5] sdd1[4]

41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mdadm /dev/md5 -a /dev/sde1

mdadm: added /dev/sde1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:59:26 2016

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 95

Number Major Minor RaidDevice State

3 8 33 0 active sync /dev/sdc1

5 8 17 1 active sync /dev/sdb1

4 8 49 2 active sync /dev/sdd1

6 8 65 - spare /dev/sde1

[root@localhost ~]#

Simulating three disk failures

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 02:59:26 2016

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 95

Number Major Minor RaidDevice State

3 8 33 0 active sync /dev/sdc1

5 8 17 1 active sync /dev/sdb1

4 8 49 2 active sync /dev/sdd1

6 8 65 - spare /dev/sde1

[root@localhost ~]# mdadm -f /dev/sdc1

mdadm: /dev/sdc1 does not appear to be an md device

[root@localhost ~]# mdadm /dev/md5 -f /dev/sdc1

mdadm: set /dev/sdc1 faulty in /dev/md5

[root@localhost ~]# mdadm /dev/md5 -f /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md5

[root@localhost ~]# mdadm /dev/md5 -f /dev/sde1

mdadm: set /dev/sde1 faulty in /dev/md5

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 21 02:07:09 2016

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 21 03:05:40 2016

State : clean, FAILED

Active Devices : 1

Working Devices : 1

Failed Devices : 3

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 5f73c597:481d2f9e:df90d7de:1392d60a

Events : 4245

Number Major Minor RaidDevice State

0 0 0 0 removed

2 0 0 2 removed

4 8 49 2 active sync /dev/sdd1

3 8 33 - faulty /dev/sdc1

5 8 17 - faulty /dev/sdb1

6 8 65 - faulty /dev/sde1

[root@localhost ~]# mount /dev/md5 /disk # with three disks failed, the data can no longer be read

mount: /dev/md5: can't read superblock

[root@localhost ~]#
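The three experiments follow from how RAID5 stores parity: it keeps one member's worth, so the array survives with one member missing but not two. In the two-disk test the failures happened one after another, with the spare rebuilt in between, which is why the file stayed readable. A minimal sketch that classifies an array from the [total/active] counter in /proc/mdstat follows; raid5_health is a hypothetical name, and the input is read from stdin.

```shell
# Classify a RAID5 array from the "[total/active]" counter in
# /proc/mdstat-style text on stdin. Only the first counter is used.
raid5_health() {
    counter=$(sed -n 's#.*\[\([0-9][0-9]*\)/\([0-9][0-9]*\)].*#\1 \2#p' | head -n 1)
    [ -n "$counter" ] || { echo unknown; return 1; }
    set -- $counter                 # $1 = total members, $2 = active members
    missing=$(( $1 - $2 ))
    case $missing in
        0) echo healthy ;;
        1) echo degraded ;;         # still readable, no redundancy left
        *) echo failed ;;           # more members lost than parity covers
    esac
}

# Sample line from the rebuild output earlier in this walkthrough
echo '41908224 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]' | raid5_health
```

The sample prints degraded. A check like this could be run from cron against /proc/mdstat so that a degraded array is noticed before a second disk fails.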

1-15-2-RAID5 Building enterprise-grade RAID disk arrays (RAID1, RAID5, RAID10)

Original article: http://www.cnblogs.com/xiaogan/p/5792050.html