File I/O

Introduction

We’ll start our discussion of the UNIX System by describing the functions available for file I/O: open a file, read a file, write a file, and so on. Most file I/O on a UNIX system can be performed using only five functions: open, read, write, lseek, and close. We then examine the effect of various buffer sizes on the read and write functions.

The functions described in this chapter are often referred to as unbuffered I/O, in contrast to the standard I/O routines, which we describe in Chapter 5. The term unbuffered means that each read or write invokes a system call in the kernel. These unbuffered I/O functions are not part of ISO C, but are part of POSIX.1 and the Single UNIX Specification.

Whenever we describe the sharing of resources among multiple processes, the concept of an atomic operation becomes important. We examine this concept with regard to file I/O and the arguments to the open function. This leads to a discussion of how files are shared among multiple processes and which kernel data structures are involved. After describing these features, we describe the dup, fcntl, sync, fsync, and ioctl functions.

File Descriptors

To the kernel, all open files are referred to by file descriptors. A file descriptor is a non-negative integer. When we open an existing file or create a new file, the kernel returns a file descriptor to the process. When we want to read or write a file, we identify the file with the file descriptor that was returned by open or creat as an argument to either read or write.

By convention, UNIX System shells associate file descriptor 0 with the standard input of a process, file descriptor 1 with the standard output, and file descriptor 2 with the standard error. This convention is used by the shells and many applications; it is not a feature of the UNIX kernel. Nevertheless, many applications would break if these associations weren’t followed.

Although their values are standardized by POSIX.1, the magic numbers 0, 1, and 2 should be replaced in POSIX-compliant applications with the symbolic constants STDIN_FILENO, STDOUT_FILENO, and STDERR_FILENO to improve readability. These constants are defined in the <unistd.h> header.

File descriptors range from 0 through OPEN_MAX - 1. (Recall Figure 2.11.) Early historical implementations of the UNIX System had an upper limit of 19, allowing a maximum of 20 open files per process, but many systems subsequently increased this limit to 63.

With FreeBSD 8.0, Linux 3.2.0, Mac OS X 10.6.8, and Solaris 10, the limit is essentially infinite, bounded by the amount of memory on the system, the size of an integer, and any hard and soft limits configured by the system administrator.
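Because the limit depends on the system and on per-process settings rather than on a fixed constant, a program that needs it can ask at run time. The sketch below assumes a POSIX system: `sysconf(_SC_OPEN_MAX)` and `getrlimit(RLIMIT_NOFILE, ...)` are standard interfaces, while the helper name `max_open_files` is hypothetical.

```c
#define _XOPEN_SOURCE 700
#include <sys/resource.h>
#include <unistd.h>

/*
 * Hypothetical helper: return the per-process limit on open file
 * descriptors, so valid descriptors run from 0 through the result - 1.
 * Returns -1 if the limit cannot be determined.
 */
long max_open_files(void)
{
    struct rlimit rl;
    long n = sysconf(_SC_OPEN_MAX);   /* -1 if indeterminate */

    if (n != -1)
        return n;

    /* Fall back to the soft limit configured for this process. */
    if (getrlimit(RLIMIT_NOFILE, &rl) == 0 && rl.rlim_cur != RLIM_INFINITY)
        return (long)rl.rlim_cur;
    return -1;
}
```

On many systems sysconf simply reports the soft RLIMIT_NOFILE value, so the fallback is rarely needed, but it costs little.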

open and openat Functions

A file is opened or created by calling either the open function or the openat function.

```c
#include <fcntl.h>

int open(const char *path, int oflag, ... /* mode_t mode */ );

int openat(int fd, const char *path, int oflag, ... /* mode_t mode */ );
```

Both return: file descriptor if OK, -1 on error

The oflag argument is formed by ORing together one or more of the following constants from the <fcntl.h> header.

| Flag | Description |
|---|---|
| O_RDONLY | Open for reading only. |
| O_WRONLY | Open for writing only. |
| O_RDWR | Open for reading and writing. |
| O_EXEC | Open for execute only. |
| O_SEARCH | Open for search only (applies to directories). |

Most implementations define O_RDONLY as 0, O_WRONLY as 1, and O_RDWR as 2, for compatibility with older programs.

The purpose of the O_SEARCH constant is to evaluate search permissions at the time a directory is opened. Further operations using the directory’s file descriptor will not reevaluate permission to search the directory. None of the versions of the operating systems covered in this book support O_SEARCH yet.

One and only one of the previous five constants must be specified. The following constants are optional:

| Flag | Description |
|---|---|
| O_APPEND | Append to the end of file on each write. We describe this option in detail in Section 3.11. |
| O_CLOEXEC | Set the FD_CLOEXEC file descriptor flag. We discuss file descriptor flags in Section 3.14. |
| O_CREAT | Create the file if it doesn’t exist. This option requires a third argument to the open function (a fourth argument to the openat function): the mode, which specifies the access permission bits of the new file. (When we describe a file’s access permission bits in Section 4.5, we’ll see how to specify the mode and how it can be modified by the umask value of a process.) |
| O_DIRECTORY | Generate an error if path doesn’t refer to a directory. |
| O_EXCL | Generate an error if O_CREAT is also specified and the file already exists. The test for whether the file already exists and the creation of the file if it doesn’t exist are performed as a single atomic operation. We describe atomic operations in more detail in Section 3.11. |
| O_NOCTTY | If path refers to a terminal device, do not allocate the device as the controlling terminal for this process. We talk about controlling terminals in Section 9.6. |
| O_NOFOLLOW | Generate an error if path refers to a symbolic link. We discuss symbolic links in Section 4.17. |
| O_NONBLOCK | If path refers to a FIFO, a block special file, or a character special file, this option sets the nonblocking mode for both the opening of the file and subsequent I/O. We describe this mode in Section 14.2. |
| O_SYNC | Have each write wait for physical I/O to complete, including I/O necessary to update file attributes modified as a result of the write. We use this option in Section 3.14. |
| O_TRUNC | If the file exists and if it is successfully opened for either write-only or read-write, truncate its length to 0. |
| O_TTY_INIT | When opening a terminal device that is not already open, set the nonstandard termios parameters to values that result in behavior that conforms to the Single UNIX Specification. We discuss the termios structure when we discuss terminal I/O in Chapter 18. |

In earlier releases of System V, the O_NDELAY (no delay) flag was introduced. This option is similar to the O_NONBLOCK (nonblocking) option, but an ambiguity was introduced in the return value from a read operation. The no-delay option causes a read operation to return 0 if there is no data to be read from a pipe, FIFO, or device, but this conflicts with a return value of 0 indicating end of file. SVR4-based systems still support the no-delay option, with the old semantics, but new applications should use the nonblocking option instead.

The following two flags are also optional. They are part of the synchronized input and output option of the Single UNIX Specification (and thus POSIX.1).

| Flag | Description |
|---|---|
| O_DSYNC | Have each write wait for physical I/O to complete, but don’t wait for file attributes to be updated if they don’t affect the ability to read the data just written. |
| O_RSYNC | Have each read operation on the file descriptor wait until any pending writes for the same portion of the file are complete. |

The O_DSYNC and O_SYNC flags are similar, but subtly different. The O_DSYNC flag affects a file’s attributes only when they need to be updated to reflect a change in the file’s data (for example, update the file’s size to reflect more data). With the O_SYNC flag, data and attributes are always updated synchronously. When overwriting an existing part of a file opened with the O_DSYNC flag, the file times wouldn’t be updated synchronously. In contrast, if we had opened the file with the O_SYNC flag, every write to the file would update the file’s times before the write returns, regardless of whether we were writing over existing bytes or appending to the file.

Solaris 10 supports all three synchronization flags. Historically, FreeBSD (and thus Mac OS X) has used the O_FSYNC flag, which has the same behavior as O_SYNC. Because the two flags are equivalent, they define the flags to have the same value. FreeBSD 8.0 doesn’t support the O_DSYNC or O_RSYNC flags. Mac OS X doesn’t support the O_RSYNC flag, but defines the O_DSYNC flag, treating it the same as the O_SYNC flag. Linux 3.2.0 supports the O_DSYNC flag, but treats the O_RSYNC flag the same as O_SYNC.
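The O_CREAT and O_EXCL combination described above is the usual way to create a file only if nobody else has, without a separate existence check that could race with another process. A minimal sketch, assuming a POSIX system; `create_exclusive` is a hypothetical name, and the caller supplies the mode (Section 4.5).

```c
#define _XOPEN_SOURCE 700
#include <errno.h>      /* EEXIST, checked by callers */
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper: create path for reading and writing, but only if
 * it does not already exist. Returns a descriptor, or -1 with errno set
 * (EEXIST if the file was already there).
 */
int create_exclusive(const char *path, mode_t mode)
{
    /* O_CREAT | O_EXCL: the existence test and the creation are one atomic step. */
    return open(path, O_RDWR | O_CREAT | O_EXCL, mode);
}
```

A second call with the same path fails with EEXIST, which is how a caller learns that someone else won the race.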

The file descriptor returned by open and openat is guaranteed to be the lowest-numbered unused descriptor. This fact is used by some applications to open a new file on standard input, standard output, or standard error. For example, an application might close standard output (normally file descriptor 1) and then open another file, knowing that it will be opened on file descriptor 1. We’ll see a better way to guarantee that a file is open on a given descriptor in Section 3.12, when we explore the dup2 function.
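The lowest-numbered-descriptor rule can be seen directly. In this sketch the helper name `reopen_on` is hypothetical. The new descriptor equals the closed one only if every lower-numbered descriptor is still open; in a multithreaded program another thread could also claim the slot between the close and the open, which is part of why dup2 is the better tool.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper: close descriptor fd, then open path. Because open
 * returns the lowest-numbered unused descriptor, the result is fd itself
 * provided every lower-numbered descriptor is still in use.
 * Returns the new descriptor, or -1 on error.
 */
int reopen_on(int fd, const char *path, int oflag, mode_t mode)
{
    if (close(fd) == -1)
        return -1;
    return open(path, oflag, mode);   /* mode is used only with O_CREAT */
}
```

For example, `reopen_on(STDOUT_FILENO, "out.txt", O_WRONLY | O_CREAT | O_TRUNC, 0644)` would leave standard output writing to out.txt, assuming descriptor 0 is still open.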

The fd parameter distinguishes the openat function from the open function. There are three possibilities:

1) The path parameter specifies an absolute pathname. In this case, the fd parameter is ignored and the openat function behaves like the open function.

2) The path parameter specifies a relative pathname and the fd parameter is a file descriptor that specifies the starting location in the file system where the relative pathname is to be evaluated. The fd parameter is obtained by opening the directory where the relative pathname is to be evaluated.

3) The path parameter specifies a relative pathname and the fd parameter has the special value AT_FDCWD. In this case, the pathname is evaluated starting in the current working directory and the openat function behaves like the open function.
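Case 2 looks like this in practice. The sketch assumes POSIX.1-2008 (`openat` and `O_DIRECTORY`); `open_in_dir` is a hypothetical helper. Passing `AT_FDCWD` as the first argument of openat instead would give case 3.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/*
 * Hypothetical helper: open name relative to directory dirpath (case 2)
 * without touching the process's current working directory.
 * Returns a descriptor, or -1 with errno set.
 */
int open_in_dir(const char *dirpath, const char *name, int oflag)
{
    int dir_fd, fd, saved_errno;

    /* Obtain the fd argument by opening the directory itself. */
    if ((dir_fd = open(dirpath, O_RDONLY | O_DIRECTORY)) == -1)
        return -1;

    fd = openat(dir_fd, name, oflag);  /* relative name starts at dir_fd */
    saved_errno = errno;               /* keep openat's errno across close */
    close(dir_fd);                     /* fd stays valid after dir_fd is closed */
    errno = saved_errno;
    return fd;
}
```

In a long-running program the directory descriptor would more likely be opened once and reused for many openat calls.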

The openat function is one of a class of functions added to the latest version of POSIX.1 to address two problems. First, it gives threads a way to use relative pathnames to open files in directories other than the current working directory. As we’ll see in Chapter 11, all threads in the same process share the same current working directory, so this makes it difficult for multiple threads in the same process to work in different directories at the same time. Second, it provides a way to avoid time-of-check-to-time-of-use (TOCTTOU) errors.

The basic idea behind TOCTTOU errors is that a program is vulnerable if it makes two file-based function calls where the second call depends on the results of the first call. Because the two calls are not atomic, the file can change between the two calls, thereby invalidating the results of the first call, leading to a program error. TOCTTOU errors in the file system namespace generally deal with attempts to subvert file system permissions by tricking a privileged program into either reducing permissions on a privileged file or modifying a privileged file to open up a security hole. Wei and Pu [2005] discuss TOCTTOU weaknesses in the UNIX file system interface.

Filename and Pathname Truncation

What happens if NAME_MAX is 14 and we try to create a new file in the current directory with a filename containing 15 characters? Traditionally, early releases of System V, such as SVR2, allowed this to happen, silently truncating the filename beyond the 14th character. BSD-derived systems, in contrast, returned an error status, with errno set to ENAMETOOLONG. Silently truncating the filename presents a problem that affects more than simply the creation of new files. If NAME_MAX is 14 and a file exists whose name is exactly 14 characters, any function that accepts a pathname argument, such as open or stat, has no way to determine what the original name of the file was, as the original name might have been truncated.

With POSIX.1, the constant _POSIX_NO_TRUNC determines whether long filenames and long components of pathnames are truncated or an error is returned. As we saw in Chapter 2, this value can vary based on the type of the file system, and we can use fpathconf or pathconf to query a directory to see which behavior is supported.

Whether an error is returned is largely historical. For example, SVR4-based systems do not generate an error for the traditional System V file system, S5. For the BSD-style file system (known as UFS), however, SVR4-based systems do generate an error. Figure 2.20 illustrates another example: Solaris will return an error for UFS, but not for PCFS, the DOS-compatible file system, as DOS silently truncates filenames that don’t fit in an 8.3 format. BSD-derived systems and Linux always return an error.

If _POSIX_NO_TRUNC is in effect, errno is set to ENAMETOOLONG, and an error status is returned if any filename component of the pathname exceeds NAME_MAX.
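To learn at run time which behavior a particular directory’s file system has, a program can call pathconf, as mentioned above. A sketch assuming POSIX `pathconf` with `_PC_NO_TRUNC` and `_PC_NAME_MAX`; both helper names are hypothetical. It treats a -1 return with errno unchanged as “option not in effect,” which is how POSIX reports an unsupported option. Some implementations may answer `_PC_NO_TRUNC` without examining the path at all.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <unistd.h>

/*
 * Hypothetical helper: ask whether over-long filename components in
 * directory dir are rejected with ENAMETOOLONG rather than truncated.
 * Returns 1 if _POSIX_NO_TRUNC is in effect, 0 if it is not, and -1 if
 * pathconf itself reported an error.
 */
int no_trunc_in_effect(const char *dir)
{
    long r;

    errno = 0;
    r = pathconf(dir, _PC_NO_TRUNC);
    if (r == -1)
        return errno == 0 ? 0 : -1;   /* -1 with errno untouched: not in effect */
    return 1;
}

/* Hypothetical helper: longest filename component allowed in dir, or -1. */
long name_max(const char *dir)
{
    return pathconf(dir, _PC_NAME_MAX);
}
```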

Most modern file systems support a maximum of 255 characters for filenames. Because filenames are usually shorter than this limit, this constraint tends not to present problems for most applications.

creat Function

A new file can also be created by calling the creat function.

```c
#include <fcntl.h>

int creat(const char *path, mode_t mode);
```

Returns: file descriptor opened for write-only if OK, -1 on error

Note that this function is equivalent to

```c
open(path, O_WRONLY | O_CREAT | O_TRUNC, mode);
```

Historically, in early versions of the UNIX System, the second argument to open could be only 0, 1, or 2. There was no way to open a file that didn’t already exist. Therefore, a separate system call, creat, was needed to create new files. With the O_CREAT and O_TRUNC options now provided by open, a separate creat function is no longer needed.

We’ll show how to specify mode in Section 4.5 when we describe a file’s access permissions in detail.

One deficiency with creat is that the file is opened only for writing. Before the new version of open was provided, if we were creating a temporary file that we wanted to write and then read back, we had to call creat, close, and then open. A better way is to use the open function, as in

```c
open(path, O_RDWR | O_CREAT | O_TRUNC, mode);
```
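The write-then-read-back pattern that creat could not support takes a single open call with O_RDWR. A sketch, assuming permission bits 0600 (owner read and write) and a hypothetical helper name `write_then_read`; it uses lseek, described later in this chapter, to rewind before reading.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/*
 * Hypothetical helper: create (or truncate) path, write msg to it, then
 * read it back through the same descriptor. creat alone could not do
 * this, because it opens the file write-only.
 * Returns the number of bytes read back, or -1 on error.
 */
ssize_t write_then_read(const char *path, const char *msg,
                        char *buf, size_t bufsize)
{
    size_t  len = strlen(msg);
    ssize_t n;
    int     fd = open(path, O_RDWR | O_CREAT | O_TRUNC, 0600);

    if (fd == -1)
        return -1;
    if (write(fd, msg, len) != (ssize_t)len ||
        lseek(fd, 0, SEEK_SET) == -1) {     /* rewind to the beginning */
        close(fd);
        return -1;
    }
    n = read(fd, buf, bufsize);
    close(fd);
    return n;
}
```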

close Function

An open file is closed by calling the close function.

```c
#include <unistd.h>

int close(int fd);
```

Returns: 0 if OK, -1 on error

Closing a file also releases any record locks that the process may have on the file. We’ll discuss this point further in Section 14.3.

When a process terminates, all of its open files are closed automatically by the kernel. Many programs take advantage of this fact and don’t explicitly close open files. See the program in Figure 1.4, for example.

lseek Function

Every open file has an associated “current file offset,” normally a non-negative integer that measures the number of bytes from the beginning of the file. (We describe some exceptions to the “non-negative” qualifier later in this section.) Read and write operations normally start at the current file offset and cause the offset to be incremented by the number of bytes read or written. By default, this offset is initialized to 0 when a file is opened, unless the O_APPEND option is specified.

An open file’s offset can be set explicitly by calling lseek.

```c
#include <unistd.h>

off_t lseek(int fd, off_t offset, int whence);
```

Returns: new file offset if OK, -1 on error

The interpretation of the offset depends on the value of the whence argument.

- If whence is SEEK_SET, the file’s offset is set to offset bytes from the beginning of the file.

- If whence is SEEK_CUR, the file’s offset is set to its current value plus the offset. The offset can be positive or negative.

- If whence is SEEK_END, the file’s offset is set to the size of the file plus the offset. The offset can be positive or negative.
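Combining the three whence values gives a common idiom: find a file’s size by seeking to SEEK_END with an offset of 0, then restore the original offset with SEEK_SET. A sketch with a hypothetical helper `file_size`; it assumes fd refers to something seekable and reports -1 otherwise (for example, for a pipe).

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>       /* open and the O_* flags, for callers */
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper: return the size of the file open on fd, leaving
 * the current offset where it was. Returns -1 on error (ESPIPE for a
 * pipe, FIFO, or socket).
 */
off_t file_size(int fd)
{
    off_t cur, end;

    if ((cur = lseek(fd, 0, SEEK_CUR)) == -1)   /* remember the offset */
        return -1;
    if ((end = lseek(fd, 0, SEEK_END)) == -1)   /* size of file + 0 */
        return -1;
    if (lseek(fd, cur, SEEK_SET) == -1)         /* put the offset back */
        return -1;
    return end;
}
```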

Because a successful call to lseek returns the new file offset, we can seek zero bytes from the current position to determine the current offset:

```c
off_t currpos;

currpos = lseek(fd, 0, SEEK_CUR);
```

This technique can also be used to determine if a file is capable of seeking. If the file descriptor refers to a pipe, FIFO, or socket, lseek sets errno to ESPIPE and returns -1.

The three symbolic constants SEEK_SET, SEEK_CUR, and SEEK_END were introduced with System V. Prior to this, whence was specified as 0 (absolute), 1 (relative to the current offset), or 2 (relative to the end of file). Much software still exists with these numbers hard coded.

The character l in the name lseek means “long integer.” Before the introduction of the off_t data type, the offset argument and the return value were long integers. lseek was introduced with Version 7 when long integers were added to C. (Similar functionality was provided in Version 6 by the functions seek and tell.)

Example

The program in Figure 3.1 tests its standard input to see whether it is capable of seeking.

```c
/*
 * File:    fileio/seek.c
 * Purpose: test whether standard input is capable of seeking
 * Date:    Tue Aug 23 16:03:00 CST 2016
 * Author:  firewaywei
 */
#include "apue.h"

int
main(void)
{
    if (lseek(STDIN_FILENO, 0, SEEK_CUR) == -1)
    {
        printf("cannot seek\n");
    }
    else
    {
        printf("seek OK\n");
    }
    exit(0);
}
```

Normally, a file’s current offset must be a non-negative integer. It is possible, however, that certain devices could allow negative offsets. But for regular files, the offset must be non-negative. Because negative offsets are possible, we should be careful to compare the return value from lseek as being equal to or not equal to -1, rather than testing whether it is less than 0.

The /dev/kmem device on FreeBSD for the Intel x86 processor supports negative offsets. Because the offset (off_t) is a signed data type (Figure 2.21), we lose a factor of 2 in the maximum file size. If off_t is a 32-bit integer, the maximum file size is 2^31 - 1 bytes.

lseek only records the current file offset within the kernel; it does not cause any I/O to take place. This offset is then used by the next read or write operation.

The file’s offset can be greater than the file’s current size, in which case the next write to the file will extend the file. This is referred to as creating a hole in a file and is allowed. Any bytes in a file that have not been written are read back as 0.

A hole in a file isn’t required to have storage backing it on disk. Depending on the file system implementation, when you write after seeking past the end of a file, new disk blocks might be allocated to store the data, but there is no need to allocate disk blocks for the data between the old end of file and the location where you start writing.

```c
/*
 * File:    fileio/hole.c
 * Purpose: create a file with a hole in it
 * Date:    Tue Aug 23 16:03:00 CST 2016
 * Author:  firewaywei
 */
#include "apue.h"
#include <fcntl.h>

char buf1[] = "abcdefghij";
char buf2[] = "ABCDEFGHIJ";

int
main(void)
{
    int fd;

    if ((fd = creat("file.hole", FILE_MODE)) < 0)
    {
        err_sys("creat error");
    }

    if (write(fd, buf1, 10) != 10)
    {
        err_sys("buf1 write error");
    }
    /* offset now = 10 */

    if (lseek(fd, 16384, SEEK_SET) == -1)
    {
        err_sys("lseek error");
    }
    /* offset now = 16384 */

    if (write(fd, buf2, 10) != 10)
    {
        err_sys("buf2 write error");
    }
    /* offset now = 16394 */

    exit(0);
}
```

Running this program gives us

```
$ ./hole
$ ls -l file.hole
-rw-r--r-- 1 fireway fireway 16394 Aug 23 16:18 file.hole
$ od -c file.hole
0000000 a b c d e f g h i j \0 \0 \0 \0 \0 \0
0000020 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0 \0
*
0040000 A B C D E F G H I J
0040012
$
```

We use the od(1) command to look at the contents of the file. The -c flag tells it to print the contents as characters. We can see that the unwritten bytes in the middle are read back as zero. The seven-digit number at the beginning of each line is the byte offset in octal.

To prove that there is really a hole in the file, let’s compare the file we just created with a file of the same size, but without holes:

```
$ ls -ls file.hole file.nohole      # compare sizes
 8 -rw-r--r-- 1 fireway fireway 16394 Aug 23 16:18 file.hole
20 -rw-r--r-- 1 fireway fireway 16394 Aug 23 16:37 file.nohole
```

Although both ?les are the same size, the ?le without holes consumes 20 disk blocks, whereas the ?le with holes consumes only 8 blocks.

In this example, we call the write function (Section 3.8). We’ll have more to say about ?les with holes in Section 4.12.

Because the offset address that lseek uses is represented by an off_t, implementations are allowed to support whatever size is appropriate on their particular platform. Most platforms today provide two sets of interfaces to manipulate file offsets: one set that uses 32-bit file offsets and another set that uses 64-bit file offsets.

The Single UNIX Specification provides a way for applications to determine which environments are supported through the sysconf function (Section 2.5.4). Figure 3.3 summarizes the sysconf constants that are defined.

| Name of option | Description | name argument |
|---|---|---|
| _POSIX_V7_ILP32_OFF32 | int, long, pointer, and off_t types are 32 bits. | _SC_V7_ILP32_OFF32 |
| _POSIX_V7_ILP32_OFFBIG | int, long, and pointer types are 32 bits; off_t types are at least 64 bits. | _SC_V7_ILP32_OFFBIG |
| _POSIX_V7_LP64_OFF64 | int types are 32 bits; long, pointer, and off_t types are 64 bits. | _SC_V7_LP64_OFF64 |
| _POSIX_V7_LPBIG_OFFBIG | int types are at least 32 bits; long, pointer, and off_t types are at least 64 bits. | _SC_V7_LPBIG_OFFBIG |

Figure 3.3 Data size options and name arguments to sysconf
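A program can ask sysconf about one of these environments directly. The sketch below (hypothetical helper `lp64_off64_supported`) checks the LP64/OFF64 model, falling back to the older `_SC_V6_` name when the `_SC_V7_` one is not defined, since not every system has caught up to the latest standard.

```c
#define _XOPEN_SOURCE 700
#include <unistd.h>

/*
 * Hypothetical helper: return 1 if sysconf reports that the environment
 * with 32-bit int and 64-bit long, pointer, and off_t is supported,
 * 0 otherwise (including when neither sysconf name is defined).
 */
int lp64_off64_supported(void)
{
#if defined(_SC_V7_LP64_OFF64)
    return sysconf(_SC_V7_LP64_OFF64) > 0;
#elif defined(_SC_V6_LP64_OFF64)
    return sysconf(_SC_V6_LP64_OFF64) > 0;   /* previous SUS version */
#else
    return 0;                                /* no way to ask */
#endif
}
```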

The c99 compiler requires that we use the getconf(1) command to map the desired data size model to the flags necessary to compile and link our programs. Different flags and libraries might be needed, depending on the environments supported by each platform.

Unfortunately, this is one area in which implementations haven’t caught up to the standards. If your system does not match the latest version of the standard, the system might support the option names from the previous version of the Single UNIX Specification: _POSIX_V6_ILP32_OFF32, _POSIX_V6_ILP32_OFFBIG, _POSIX_V6_LP64_OFF64, and _POSIX_V6_LPBIG_OFFBIG.

To get around this, applications can set the _FILE_OFFSET_BITS constant to 64 to enable 64-bit offsets. Doing so changes the definition of off_t to be a 64-bit signed integer. Setting _FILE_OFFSET_BITS to 32 enables 32-bit file offsets. Be aware, however, that although all four platforms discussed in this text support both 32-bit and 64-bit file offsets, setting _FILE_OFFSET_BITS is not guaranteed to be portable and might not have the desired effect.
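A minimal sketch of the _FILE_OFFSET_BITS approach, assuming a glibc-style implementation in which the macro must be defined before any system header is included (equivalently, with -D_FILE_OFFSET_BITS=64 on the compiler command line). On such systems we would expect `off_t_size` to return 8 even in a 32-bit build, but as noted above, this is not guaranteed to be portable. The helper name is hypothetical.

```c
/* Must come before any #include, or it has no effect on off_t. */
#define _FILE_OFFSET_BITS 64
#define _XOPEN_SOURCE 700
#include <sys/types.h>

/* Hypothetical helper: size in bytes of off_t in this translation unit. */
size_t off_t_size(void)
{
    return sizeof(off_t);
}
```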

Figure 3.4 summarizes the size in bytes of the off_t data type for the platforms covered in this book when an application doesn’t define _FILE_OFFSET_BITS, as well as the size when an application defines _FILE_OFFSET_BITS to have a value of either 32 or 64.

| Operating system | CPU architecture | _FILE_OFFSET_BITS undefined | _FILE_OFFSET_BITS = 32 | _FILE_OFFSET_BITS = 64 |
|---|---|---|---|---|
| FreeBSD 8.0 | x86 32-bit | 8 | 8 | 8 |
| Linux 3.2.0 | x86 64-bit | 8 | 8 | 8 |
| Mac OS X 10.6.8 | x86 64-bit | 8 | 8 | 8 |
| Solaris 10 | SPARC 64-bit | 8 | 4 | 8 |

Figure 3.4 Size in bytes of off_t for different platforms

Note that even though you might enable 64-bit file offsets, your ability to create a file larger than 2 GB (2^31 - 1 bytes) depends on the underlying file system type.

read Function

Data is read from an open file with the read function.

```c
#include <unistd.h>

ssize_t read(int fd, void *buf, size_t nbytes);
```

Returns: number of bytes read, 0 if end of file, -1 on error

If the read is successful, the number of bytes read is returned. If the end of file is encountered, 0 is returned.

There are several cases in which the number of bytes actually read is less than the amount requested:

- When reading from a regular file, if the end of file is reached before the requested number of bytes has been read. For example, if 30 bytes remain until the end of file and we try to read 100 bytes, read returns 30. The next time we call read, it will return 0 (end of file).

- When reading from a terminal device. Normally, up to one line is read at a time. (We’ll see how to change this default in Chapter 18.)

- When reading from a network. Buffering within the network may cause less than the requested amount to be returned.

- When reading from a pipe or FIFO. If the pipe contains fewer bytes than requested, read will return only what is available.

- When reading from a record-oriented device. Some record-oriented devices, such as magnetic tape, can return up to a single record at a time.

- When interrupted by a signal and a partial amount of data has already been read. We discuss this further in Section 10.5.
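Because any of these cases can return fewer bytes than requested, code that needs exactly nbytes usually loops. A sketch, with the hypothetical helper name `read_full`; it restarts a read that failed with EINTR (interrupted before any data arrived) and stops early only at end of file.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <unistd.h>

/*
 * Hypothetical helper: call read until nbytes have arrived, end of file
 * is reached, or an error occurs. Returns the number of bytes read
 * (less than nbytes only at end of file), or -1 on error.
 */
ssize_t read_full(int fd, void *buf, size_t nbytes)
{
    char  *p = buf;
    size_t left = nbytes;

    while (left > 0) {
        ssize_t n = read(fd, p, left);
        if (n == -1) {
            if (errno == EINTR)
                continue;        /* interrupted before any data: retry */
            return -1;
        }
        if (n == 0)
            break;               /* end of file */
        p += n;                  /* short read: ask for the rest */
        left -= (size_t)n;
    }
    return (ssize_t)(nbytes - left);
}
```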

The read operation starts at the file’s current offset. Before a successful return, the offset is incremented by the number of bytes actually read.

POSIX.1 changed the prototype for this function in several ways. The classic definition is

```c
int read(int fd, char *buf, unsigned nbytes);
```

- First, the second argument was changed from char * to void * to be consistent with ISO C: the type void * is used for generic pointers.

- Next, the return value was required to be a signed integer (ssize_t) to return a positive byte count, 0 (for end of file), or -1 (for an error).

- Finally, the third argument historically has been an unsigned integer, to allow a 16-bit implementation to read or write up to 65,534 bytes at a time. With the 1990 POSIX.1 standard, the primitive system data type ssize_t was introduced to provide the signed return value, and the unsigned size_t was used for the third argument. (Recall the SSIZE_MAX constant from Section 2.5.2.)

write Function

Data is written to an open file with the write function.

```c
#include <unistd.h>

ssize_t write(int fd, const void *buf, size_t nbytes);
```

Returns: number of bytes written if OK, -1 on error

The return value is usually equal to the nbytes argument; otherwise, an error has occurred. A common cause for a write error is either filling up a disk or exceeding the file size limit for a given process (Section 7.11 and Exercise 10.11).

For a regular file, the write operation starts at the file’s current offset. If the O_APPEND option was specified when the file was opened, the file’s offset is set to the current end of file before each write operation. After a successful write, the file’s offset is incremented by the number of bytes actually written.
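For descriptors other than regular files, such as pipes and sockets, or when a signal interrupts a partially completed transfer, write can return less than nbytes without an error. A common defensive pattern loops until everything is written; this sketch uses the hypothetical helper name `write_all`.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <unistd.h>

/*
 * Hypothetical helper: write all nbytes from buf, looping over short
 * writes and restarting after EINTR. Returns nbytes on success or -1 on
 * error (including EAGAIN on a nonblocking descriptor).
 */
ssize_t write_all(int fd, const void *buf, size_t nbytes)
{
    const char *p = buf;
    size_t      left = nbytes;

    while (left > 0) {
        ssize_t n = write(fd, p, left);
        if (n == -1) {
            if (errno == EINTR)
                continue;        /* interrupted before any data: retry */
            return -1;
        }
        p += n;                  /* short write: send the remainder */
        left -= (size_t)n;
    }
    return (ssize_t)nbytes;
}
```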

I/O Efficiency

The program in Figure 3.5 copies a file, using only the read and write functions.

```c
/*
 * File:    fileio/mycat.c
 * Purpose: copy a file using only the read and write functions
 * Date:    Thu Aug 25 11:00:18 CST 2016
 * Author:  firewaywei
 * Usage:   ./a.out < infile > outfile
 */
#include "apue.h"

#define BUFFSIZE 4096

int
main(void)
{
    int  n;
    char buf[BUFFSIZE];

    while ((n = read(STDIN_FILENO, buf, BUFFSIZE)) > 0)
    {
        if (write(STDOUT_FILENO, buf, n) != n)
        {
            err_sys("write error");
        }
    }

    if (n < 0)
    {
        err_sys("read error");
    }

    exit(0);
}
```

Figure 3.5 Copy standard input to standard output

The following caveats apply to this program.

- It reads from standard input and writes to standard output, assuming that these have been set up by the shell before this program is executed. Indeed, all normal UNIX system shells provide a way to open a file for reading on standard input and to create (or rewrite) a file on standard output. This saves the program from having to open the input and output files, and allows the user to take advantage of the shell’s I/O redirection facilities.

- The program doesn’t close the input file or output file. Instead, the program uses the feature of the UNIX kernel that closes all open file descriptors in a process when that process terminates.

- This example works for both text files and binary files, since there is no difference between the two to the UNIX kernel.

One question we haven’t answered, however, is how we chose the BUFFSIZE value. Before answering that, let’s run the program using different values for BUFFSIZE. Figure 3.6 shows the results for reading a 516,581,760-byte file, using 20 different buffer sizes.

| BUFFSIZE | User CPU (seconds) | System CPU (seconds) | Clock time (seconds) | Number of loops |
|---|---|---|---|---|
| 1 | 20.03 | 117.50 | 138.73 | 516,581,760 |
| 2 | 9.69 | 58.76 | 68.60 | 258,290,880 |
| 4 | 4.60 | 36.47 | 41.27 | 129,145,440 |
| 8 | 2.47 | 15.44 | 18.38 | 64,572,720 |
| 16 | 1.07 | 7.93 | 9.38 | 32,286,360 |
| 32 | 0.56 | 4.51 | 8.82 | 16,143,180 |
| 64 | 0.34 | 2.72 | 8.66 | 8,071,590 |
| 128 | 0.34 | 1.84 | 8.69 | 4,035,795 |
| 256 | 0.15 | 1.30 | 8.69 | 2,017,898 |
| 512 | 0.09 | 0.95 | 8.63 | 1,008,949 |
| 1,024 | 0.02 | 0.78 | 8.58 | 504,475 |
| 2,048 | 0.04 | 0.66 | 8.68 | 252,238 |
| 4,096 | 0.03 | 0.58 | 8.62 | 126,119 |
| 8,192 | 0.00 | 0.54 | 8.52 | 63,060 |
| 16,384 | 0.01 | 0.56 | 8.69 | 31,530 |
| 32,768 | 0.00 | 0.56 | 8.51 | 15,765 |
| 65,536 | 0.01 | 0.56 | 9.12 | 7,883 |
| 131,072 | 0.00 | 0.58 | 9.08 | 3,942 |
| 262,144 | 0.00 | 0.60 | 8.70 | 1,971 |
| 524,288 | 0.01 | 0.58 | 8.58 | 986 |

Figure 3.6 Timing results for reading with different buffer sizes on Linux

The file was read using the program shown in Figure 3.5, with standard output redirected to /dev/null. The file system used for this test was the Linux ext4 file system with 4,096-byte blocks. (The st_blksize value, which we describe in Section 4.12, is 4,096.) This accounts for the minimum in the system time occurring at the few timing measurements starting around a BUFFSIZE of 4,096. Increasing the buffer size beyond this limit has little positive effect.

Most file systems support some kind of read-ahead to improve performance. When sequential reads are detected, the system tries to read in more data than an application requests, assuming that the application will read it shortly. The effect of read-ahead can be seen in Figure 3.6, where the elapsed time for buffer sizes as small as 32 bytes is as good as the elapsed time for larger buffer sizes.

We’ll return to this timing example later in the text. In Section 3.14, we show the effect of synchronous writes; in Section 5.8, we compare these unbuffered I/O times with the standard I/O library.

Beware when trying to measure the performance of programs that read and write files. The operating system will try to cache the file incore, so if you measure the performance of the program repeatedly, the successive timings will likely be better than the first. This improvement occurs because the first run causes the file to be entered into the system’s cache, and successive runs access the file from the system’s cache instead of from the disk. (The term incore means in main memory. Back in the day, a computer’s main memory was built out of ferrite core. This is where the phrase “core dump” comes from: the main memory image of a program stored in a file on disk for diagnosis.)

In the tests reported in Figure 3.6, each run with a different buffer size was made using a different copy of the file so that the current run didn’t find the data in the cache from the previous run. The files are large enough that they don’t all remain in the cache (the test system was configured with 6 GB of RAM).

File Sharing

The UNIX System supports the sharing of open files among different processes. Before describing the dup function, we need to describe this sharing. To do this, we’ll examine the data structures used by the kernel for all I/O.

The following description is conceptual; it may or may not match a particular implementation. Refer to Bach [1986] for a discussion of these structures in System V. McKusick et al. [1996] describe these structures in 4.4BSD. McKusick and Neville-Neil [2005] cover FreeBSD 5.2. For a similar discussion of Solaris, see McDougall and Mauro [2007]. The Linux 2.6 kernel architecture is discussed in Bovet and Cesati [2006].

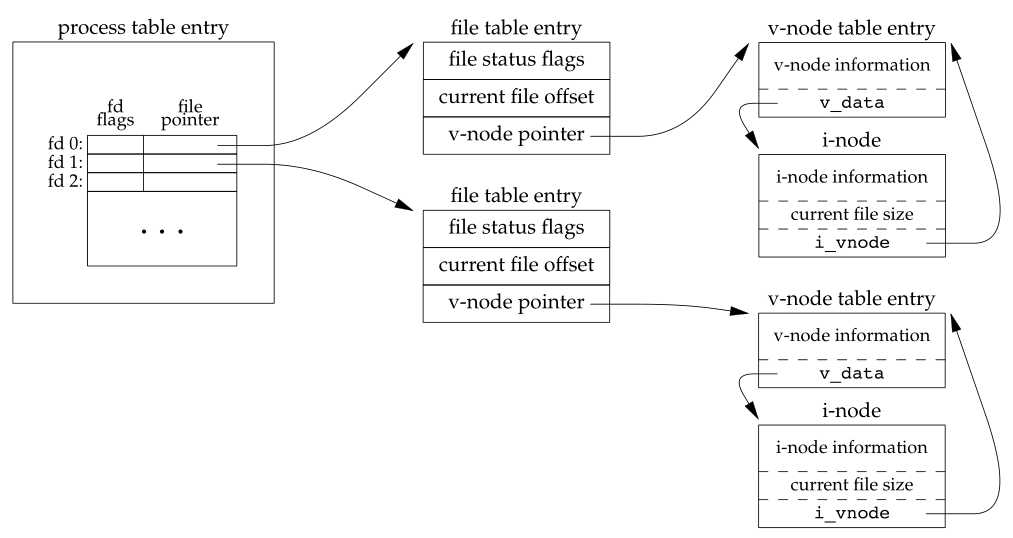

The kernel uses three data structures to represent an open file, and the relationships among them determine the effect one process has on another with regard to file sharing.

1) Every process has an entry in the process table. Within each process table entry is a table of open file descriptors, which we can think of as a vector, with one entry per descriptor. Associated with each file descriptor are

- The file descriptor flags (close-on-exec; refer to Figure 3.7 and Section 3.14)

- A pointer to a file table entry

2) The kernel maintains a file table for all open files. Each file table entry contains

- The file status flags for the file, such as read, write, append, sync, and nonblocking; more on these in Section 3.14

- The current file offset

- A pointer to the v-node table entry for the file

3) Each open file (or device) has a v-node structure that contains information about the type of file and pointers to functions that operate on the file. For most files, the v-node also contains the i-node for the file. This information is read from disk when the file is opened, so that all the pertinent information about the file is available. For example, the i-node contains the owner of the file, the size of the file, pointers to where the actual data blocks for the file are located on disk, and so on. (We talk more about i-nodes in Section 4.14 when we describe the typical UNIX file system in more detail.)
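To make the three levels concrete, here is a conceptual model in C. Every type and field name is hypothetical and heavily simplified; real kernels differ, as the notes that follow explain. The point is the pointer chain: descriptor slot, then file table entry (which holds the offset and status flags), then v-node.

```c
#include <stddef.h>
#include <sys/types.h>

/* Conceptual model only: names do not match any real kernel. */

struct vnode {                    /* one per open file or device */
    int   v_type;                 /* type of file */
    off_t i_size;                 /* from the i-node: file size */
    uid_t i_uid;                  /* from the i-node: owner */
    /* ... function pointers, data block locations, and so on */
};

struct file_entry {               /* kernel file table entry */
    int           status_flags;   /* read, write, append, nonblocking, ... */
    off_t         offset;         /* current file offset */
    struct vnode *vnode;          /* v-node for this file */
};

struct fd_slot {                  /* one per descriptor in a process */
    int                fd_flags;  /* close-on-exec */
    struct file_entry *file;      /* NULL if the descriptor is unused */
};

#define MODEL_NFD 20              /* hypothetical table size */

struct proc_entry {               /* process table entry */
    struct fd_slot fds[MODEL_NFD];
};

/* Follow descriptor fd down to its current offset; -1 if not open. */
off_t model_offset(const struct proc_entry *p, int fd)
{
    if (fd < 0 || fd >= MODEL_NFD || p->fds[fd].file == NULL)
        return -1;
    return p->fds[fd].file->offset;
}
```

Because the offset lives in the file table entry rather than in the v-node, two descriptors that point at different file table entries for the same v-node can have different offsets.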

Linux has no v-node. Instead, a generic i-node structure is used. Although the implementations differ, the v-node is conceptually the same as a generic i-node. Both point to an i-node structure specific to the file system.

We’re ignoring some implementation details that don’t affect our discussion. For example, the table of open file descriptors can be stored in the user area (a separate per-process structure that can be paged out) instead of the process table. Also, these tables can be implemented in numerous ways: they need not be arrays, and one alternative implementation is a linked list of structures. Regardless of the implementation details, the general concepts remain the same.

Figure 3.7 shows a pictorial arrangement of these three tables for a single process that has two different files open: one file is open on standard input (file descriptor 0), and the other is open on standard output (file descriptor 1).

Figure 3.7 Kernel data structures for open files

The arrangement of these three tables has existed since the early versions of the UNIX System [Thompson 1978]. This arrangement is critical to the way files are shared among processes. We’ll return to this figure in later chapters, when we describe additional ways that files are shared.

The v-node was invented to provide support for multiple file system types on a single computer system. This work was done independently by Peter Weinberger (Bell Laboratories) and Bill Joy (Sun Microsystems). Sun called this the Virtual File System and called the file system–independent portion of the i-node the v-node [Kleiman 1986]. The v-node propagated through various vendor implementations as support for Sun’s Network File System (NFS) was added. The first release from Berkeley to provide v-nodes was the 4.3BSD Reno release, when NFS was added.

In SVR4, the v-node replaced the file system–independent i-node of SVR3. Solaris is derived from SVR4 and, therefore, uses v-nodes.

Instead of splitting the data structures into a v-node and an i-node, Linux uses a file system–independent i-node and a file system–dependent i-node.

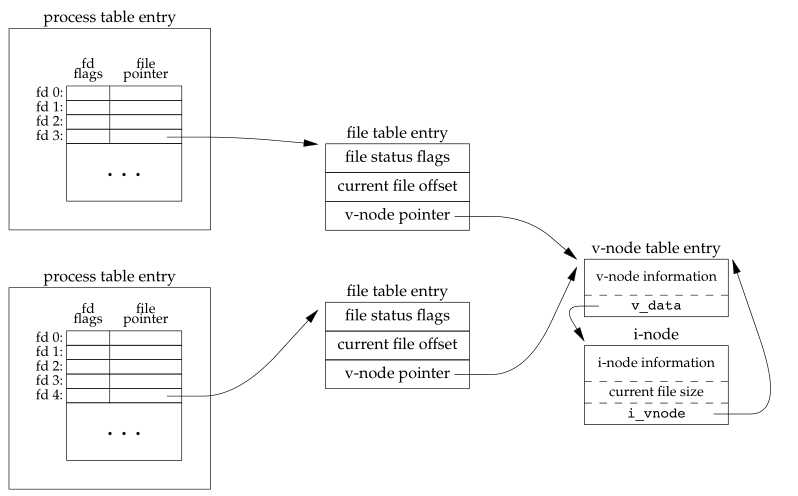

If two independent processes have the same file open, we could have the arrangement shown in Figure 3.8.

Figure 3.8 Two independent processes with the same file open

We assume here that the first process has the file open on descriptor 3 and that the second process has that same file open on descriptor 4. Each process that opens the file gets its own file table entry, but only a single v-node table entry is required for a given file. One reason each process gets its own file table entry is so that each process has its own current offset for the file.
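A quick way to observe separate file table entries is to open the same file twice. Within a single process, two `open` calls produce the same kernel arrangement as Figure 3.8: two file table entries, each with its own current offset, both pointing to one v-node. The sketch below assumes a writable `/tmp`; the function name `independent_offsets` and the temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/*
 * Opens one file twice, reads 5 bytes through the first descriptor, and
 * reports both descriptors' offsets. Each open() creates its own file table
 * entry, so the read moves only the first offset.
 * Returns 0 on success, -1 on error.
 */
int independent_offsets(off_t *off1, off_t *off2)
{
    char path[] = "/tmp/share-XXXXXX";   /* hypothetical temporary file name */
    char buf[5];
    int rc = -1;

    int tmp = mkstemp(path);
    if (tmp < 0)
        return -1;
    int ok = write(tmp, "hello, world", 12) == 12;
    close(tmp);

    int fd1 = open(path, O_RDONLY);       /* first file table entry */
    int fd2 = open(path, O_RDONLY);       /* second, independent entry */
    unlink(path);                         /* data remains until the last close */

    if (ok && fd1 >= 0 && fd2 >= 0 &&
        read(fd1, buf, sizeof buf) == (ssize_t)sizeof buf) {
        *off1 = lseek(fd1, 0, SEEK_CUR);  /* advanced by the read */
        *off2 = lseek(fd2, 0, SEEK_CUR);  /* untouched */
        rc = 0;
    }
    if (fd1 >= 0)
        close(fd1);
    if (fd2 >= 0)
        close(fd2);
    return rc;
}
```

After a successful call, `*off1` is 5 and `*off2` is still 0, which is exactly why each process in Figure 3.8 can read the file at its own pace.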

Given these data structures, we now need to be more specific about what happens with certain operations that we’ve already described.

- After each write is complete, the current file offset in the file table entry is incremented by the number of bytes written. If this causes the current file offset to exceed the current file size, the current file size in the i-node table entry is set to the current file offset (that is, the file is extended).

- If a file is opened with the O_APPEND flag, a corresponding flag is set in the file status flags of the file table entry. Each time a write is performed for a file with this append flag set, the current file offset in the file table entry is first set to the current file size from the i-node table entry. This forces every write to be appended to the current end of file.

- If a file is positioned to its current end of file using lseek, all that happens is the current file offset in the file table entry is set to the current file size from the i-node table entry. (Note that this is not the same as if the file was opened with the O_APPEND flag, as we will see in Section 3.11.)

- The lseek function modifies only the current file offset in the file table entry. No I/O takes place.
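To make these rules concrete, here is a minimal sketch that models the file table entry and v-node as plain C structs. All struct and function names (`struct vnode`, `struct file`, `struct fd_slot`, `model_write`, `model_lseek_end`) are hypothetical; no kernel exposes them, and real implementations carry many more fields. The functions perform only the offset and size bookkeeping described in the list above, with no data transfer.

```c
#include <fcntl.h>       /* O_APPEND */
#include <stddef.h>      /* size_t */
#include <sys/types.h>   /* off_t */

/* Hypothetical, simplified model of the kernel tables; not a real API. */
struct vnode {                    /* one per open file */
    off_t size;                   /* current file size, from the i-node */
};

struct file {                     /* file table entry */
    int           status_flags;   /* O_APPEND, O_NONBLOCK, ... */
    off_t         offset;         /* current file offset */
    struct vnode *vnode;          /* shared by all entries for the same file */
};

struct fd_slot {                  /* one slot in a process's descriptor vector */
    int          fd_flags;        /* FD_CLOEXEC */
    struct file *file;            /* file table entry this descriptor uses */
};

/* Bookkeeping for a write of nbytes; the data transfer itself is omitted. */
void model_write(struct file *f, size_t nbytes)
{
    if (f->status_flags & O_APPEND)   /* append: start at the current EOF */
        f->offset = f->vnode->size;
    f->offset += (off_t)nbytes;       /* advance by the bytes written */
    if (f->offset > f->vnode->size)   /* past EOF: the file is extended */
        f->vnode->size = f->offset;
}

/* lseek(fd, 0, SEEK_END): changes only the offset; no I/O takes place. */
off_t model_lseek_end(struct file *f)
{
    f->offset = f->vnode->size;
    return f->offset;
}
```

Replaying the interleaving from the Appending to a File discussion (both processes seek to 1,500, then B writes 100 bytes, then A writes 100 bytes) leaves the modeled file at 1,600 bytes without `O_APPEND`, because A's write lands on top of B's, and at 1,700 bytes when both entries have `O_APPEND` set.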

It is possible for more than one file descriptor entry to point to the same file table entry, as we’ll see when we discuss the dup function in Section 3.12. This also happens after a fork when the parent and the child share the same file table entry for each open descriptor (Section 8.3).
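The `fork` case can be observed directly: a read in the child moves the offset that the parent sees, because both descriptors point to one file table entry. This is a sketch ahead of the full treatment of `fork` in Section 8.3; the function name and temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Writes 10 bytes, rewinds, forks, and lets the child read 4 bytes.
 * Returns the offset the parent observes afterward, or -1 on error.
 */
off_t offset_after_child_read(void)
{
    char path[] = "/tmp/fork-XXXXXX";   /* hypothetical temporary file name */
    off_t off = -1;
    int status;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                        /* the name is no longer needed */
    if (write(fd, "0123456789", 10) != 10 || lseek(fd, 0, SEEK_SET) != 0) {
        close(fd);
        return -1;
    }

    pid_t pid = fork();
    if (pid == 0) {                      /* child: advance the shared offset */
        char buf[4];
        _exit(read(fd, buf, sizeof buf) == (ssize_t)sizeof buf ? 0 : 1);
    }
    if (pid > 0 && waitpid(pid, &status, 0) == pid &&
        WIFEXITED(status) && WEXITSTATUS(status) == 0)
        off = lseek(fd, 0, SEEK_CUR);    /* parent sees the child's read */
    close(fd);
    return off;
}
```

The parent never reads, yet the function returns 4. Had the child opened the file itself instead of inheriting the descriptor, the parent's offset would have stayed at 0.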

Note the difference in scope between the file descriptor flags and the file status flags. The former apply only to a single descriptor in a single process, whereas the latter apply to all descriptors in any process that point to the given file table entry. When we describe the fcntl function in Section 3.14, we’ll see how to fetch and modify both the file descriptor flags and the file status flags.
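The difference in scope can be checked on two descriptors that share one file table entry. This sketch uses `dup` (Section 3.12) and `fcntl` (Section 3.14) ahead of their formal descriptions: it sets `FD_CLOEXEC` and `O_APPEND` through the original descriptor, then inspects the duplicate. The function name `flag_scope` and the temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/*
 * *cloexec_on_dup: whether FD_CLOEXEC (a descriptor flag) shows on the dup
 * *append_on_dup:  whether O_APPEND (a status flag), set through the original
 *                  descriptor after the dup, shows on the dup
 * Returns 0 on success, -1 on error.
 */
int flag_scope(int *cloexec_on_dup, int *append_on_dup)
{
    char path[] = "/tmp/flags-XXXXXX";   /* hypothetical temporary file name */
    int rc = -1, dupfd = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    if (fcntl(fd, F_SETFD, FD_CLOEXEC) == 0 &&      /* affects fd only */
        (dupfd = dup(fd)) >= 0) {
        int fl = fcntl(fd, F_GETFL);
        if (fl >= 0 && fcntl(fd, F_SETFL, fl | O_APPEND) == 0) {
            int fdflags = fcntl(dupfd, F_GETFD);   /* per-descriptor flags */
            int stflags = fcntl(dupfd, F_GETFL);   /* shared status flags */
            if (fdflags >= 0 && stflags >= 0) {
                *cloexec_on_dup = (fdflags & FD_CLOEXEC) != 0;
                *append_on_dup = (stflags & O_APPEND) != 0;
                rc = 0;
            }
        }
    }
    if (dupfd >= 0)
        close(dupfd);
    close(fd);
    return rc;
}
```

The expected result is `*cloexec_on_dup == 0` and `*append_on_dup == 1`: the close-on-exec flag stayed with the original descriptor, while `O_APPEND`, stored in the shared file table entry, is visible through both.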

Everything that we’ve described so far in this section works fine for multiple processes that are reading the same file. Each process has its own file table entry with its own current file offset. Unexpected results can arise, however, when multiple processes write to the same file. To see how to avoid some surprises, we need to understand the concept of atomic operations.

Atomic Operations

Appending to a File

Consider a single process that wants to append to the end of a file. Older versions of the UNIX System didn’t support the O_APPEND option to open, so the program was coded as follows:

    if (lseek(fd, 0L, 2) < 0)          /* position to EOF */
        err_sys("lseek error");
    if (write(fd, buf, 100) != 100)    /* and write */
        err_sys("write error");

Assume that two independent processes, A and B, are appending to the same file. Each has opened the file but without the O_APPEND flag. This gives us the same picture as Figure 3.8. Each process has its own file table entry, but they share a single v-node table entry. Assume that process A does the lseek and that this sets the current offset for the file for process A to byte offset 1,500 (the current end of file). Then the kernel switches processes, and B continues running. Process B then does the lseek, which sets the current offset for the file for process B to byte offset 1,500 also (the current end of file). Then B calls write, which increments B’s current file offset for the file to 1,600. Because the file’s size has been extended, the kernel also updates the current file size in the v-node to 1,600. Then the kernel switches processes and A resumes. When A calls write, the data is written starting at the current file offset for A, which is byte offset 1,500. This overwrites the data that B wrote to the file.

The problem here is that our logical operation of ‘‘position to the end of file and write’’ requires two separate function calls (as we’ve shown it). The solution is to have the positioning to the current end of file and the write be an atomic operation with regard to other processes. Any operation that requires more than one function call cannot be atomic, as there is always the possibility that the kernel might temporarily suspend the process between the two function calls (as we assumed previously).

The UNIX System provides an atomic way to do this operation if we set the O_APPEND flag when a file is opened. As we described in the previous section, this causes the kernel to position the file to its current end of file before each write. We no longer have to call lseek before each write.
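The following sketch shows the `O_APPEND` approach under concurrency. Several child processes each open the file independently with `O_APPEND`, so each has its own file table entry as in Figure 3.8, and each appends fixed-size records. Because the kernel positions every write at the current end of file, no record overwrites another and the final size is exactly the sum of all writes. The constants, function name, and temporary-file template are hypothetical choices for illustration.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <fcntl.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define NWRITERS 2     /* hypothetical: number of appending processes */
#define NRECORDS 200   /* hypothetical: records per process */
#define RECLEN   10    /* hypothetical: bytes per record */

/* Returns the final file size, or -1 on error. */
off_t concurrent_append(void)
{
    char path[] = "/tmp/append-XXXXXX";   /* hypothetical temporary file name */
    pid_t pids[NWRITERS];
    int started = 0, ok = 1;
    struct stat st;
    off_t size = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    close(fd);

    for (int i = 0; i < NWRITERS; i++) {
        pid_t pid = fork();
        if (pid < 0) {
            ok = 0;
            break;
        }
        if (pid == 0) {                   /* child: its own file table entry */
            char rec[RECLEN];
            int afd = open(path, O_WRONLY | O_APPEND);
            if (afd < 0)
                _exit(1);
            for (int j = 0; j < RECLEN; j++)
                rec[j] = (char)('a' + i);
            for (int r = 0; r < NRECORDS; r++)
                if (write(afd, rec, RECLEN) != RECLEN)  /* seek+write, atomically */
                    _exit(1);
            _exit(0);
        }
        pids[started++] = pid;
    }

    for (int i = 0; i < started; i++) {   /* reap exactly the children we made */
        int status;
        if (waitpid(pids[i], &status, 0) != pids[i] ||
            !WIFEXITED(status) || WEXITSTATUS(status) != 0)
            ok = 0;
    }
    if (ok && stat(path, &st) == 0)
        size = st.st_size;
    unlink(path);
    return size;
}
```

With the constants above, the function should return 4,000. Replacing `O_APPEND` with the `lseek`-then-`write` sequence can lose records, although whether a given run does depends on scheduling. This assumes a local file system; the Linux open(2) manual page warns that `O_APPEND` may not be reliable over NFS.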

pread and pwrite Functions

The Single UNIX Specification includes two functions that allow applications to seek and perform I/O atomically: pread and pwrite.

#include <unistd.h>

ssize_t pread(int fd, void *buf, size_t nbytes, off_t offset);

Returns: number of bytes read, 0 if end of file, -1 on error

ssize_t pwrite(int fd, const void *buf, size_t nbytes, off_t offset);

Returns: number of bytes written if OK, -1 on error

Calling pread is equivalent to calling lseek followed by a call to read, with the following exceptions.

- There is no way to interrupt the two operations that occur when we call pread.

- The current file offset is not updated.

Calling pwrite is equivalent to calling lseek followed by a call to write, with similar exceptions.
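A minimal sketch of the second exception: `pwrite` and `pread` take the position as an argument and leave the current file offset untouched. The function name `positional_io` and the temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp, pread, and pwrite */
#include <stdlib.h>
#include <unistd.h>

/*
 * pwrites "HELLO" at offset 10 and preads it back into out (5 bytes, not
 * NUL-terminated). Returns the descriptor's current offset afterward,
 * or -1 on error.
 */
off_t positional_io(char out[5])
{
    char path[] = "/tmp/pio-XXXXXX";     /* hypothetical temporary file name */
    off_t cur = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    if (pwrite(fd, "HELLO", 5, 10) == 5 &&   /* bytes 0-9 become a hole */
        pread(fd, out, 5, 10) == 5)
        cur = lseek(fd, 0, SEEK_CUR);        /* not moved by pread/pwrite */
    close(fd);
    return cur;
}
```

The function returns 0: both calls worked at offset 10, but the file table entry's offset never moved. With `lseek` plus `write`, the offset would end at 15.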

Creating a File

We saw another example of an atomic operation when we described the O_CREAT and O_EXCL options for the open function. When both of these options are specified, the open will fail if the file already exists. We also said that the check for the existence of the file and the creation of the file was performed as an atomic operation. If we didn’t have this atomic operation, we might try

    if ((fd = open(path, O_WRONLY)) < 0) {
        if (errno == ENOENT) {
            if ((fd = creat(path, mode)) < 0)
                err_sys("creat error");
        } else {
            err_sys("open error");
        }
    }

The problem occurs if the file is created by another process between the open and the creat. If the file is created by another process between these two function calls, and if that other process writes something to the file, that data is erased when this creat is executed. Combining the test for existence and the creation into a single atomic operation avoids this problem.
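With `O_CREAT | O_EXCL`, the existence test and the creation happen in one call, so a second creator gets an error instead of truncating the file as `creat` would. The sketch below wraps that in a hypothetical helper `create_exclusive` and demonstrates it with `second_create_fails`; the permission bits and temporary-file template are illustrative choices.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Creates path only if it does not already exist, as one atomic open call. */
int create_exclusive(const char *path)
{
    return open(path, O_WRONLY | O_CREAT | O_EXCL, S_IRUSR | S_IWUSR);
}

/*
 * Returns 1 if the first exclusive create succeeds and the second fails with
 * EEXIST, 0 otherwise, -1 on setup error.
 */
int second_create_fails(void)
{
    char path[] = "/tmp/excl-XXXXXX";   /* hypothetical temporary file name */
    int tmp = mkstemp(path);             /* used only to obtain a unique name */
    if (tmp < 0)
        return -1;
    close(tmp);
    unlink(path);                        /* start with no file at path */

    int first = create_exclusive(path);  /* file absent: created */
    int second = create_exclusive(path); /* file present: fails */
    int err = errno;                     /* errno from the second call */
    int result = first >= 0 && second < 0 && err == EEXIST;

    if (first >= 0)
        close(first);
    if (second >= 0)
        close(second);
    unlink(path);
    return result;
}
```

A caller that receives `EEXIST` knows some other process created the file first and can decide what to do, rather than silently erasing that process's data.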

In general, the term atomic operation refers to an operation that might be composed of multiple steps. If the operation is performed atomically, either all the steps are performed (on success) or none are performed (on failure). It must not be possible for only a subset of the steps to be performed. We’ll return to the topic of atomic operations when we describe the link function (Section 4.15) and record locking (Section 14.3).

dup and dup2 Functions

An existing file descriptor is duplicated by either of the following functions:

#include <unistd.h>

int dup(int fd);

int dup2(int fd, int fd2);

Both return: new file descriptor if OK, -1 on error

The new file descriptor returned by dup is guaranteed to be the lowest-numbered available file descriptor. With dup2, we specify the value of the new descriptor with the fd2 argument. If fd2 is already open, it is first closed. If fd equals fd2, then dup2 returns fd2 without closing it. Otherwise, the FD_CLOEXEC file descriptor flag is cleared for fd2, so that fd2 is left open if the process calls exec.
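A common use of `dup2` is redirection: make a well-known descriptor such as `STDOUT_FILENO` refer to another file. The sketch below saves standard output with `dup`, points descriptor 1 at a temporary file with `dup2`, writes through descriptor 1, and then restores the original. The function name `capture_stdout_write` and the temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <stdlib.h>
#include <unistd.h>

/*
 * Writes msg to STDOUT_FILENO while standard output is redirected to a
 * temporary file. Returns how many bytes reached the file, or -1 on error.
 */
off_t capture_stdout_write(const char *msg, size_t len)
{
    char path[] = "/tmp/dup2-XXXXXX";   /* hypothetical temporary file name */
    off_t size = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    int saved = dup(STDOUT_FILENO);      /* keep the real standard output */
    if (saved >= 0 && dup2(fd, STDOUT_FILENO) == STDOUT_FILENO) {
        /* fd 1 was closed, then made to share fd's file table entry */
        if (write(STDOUT_FILENO, msg, len) == (ssize_t)len)
            size = lseek(fd, 0, SEEK_CUR);  /* shared offset moved via fd 1 */
        dup2(saved, STDOUT_FILENO);      /* restore the original stdout */
    }
    if (saved >= 0)
        close(saved);
    close(fd);
    return size;
}
```

This mirrors what a shell does for `command > file`, except that a shell typically performs the `dup2` in the child just before `exec`, so no restore step is needed.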

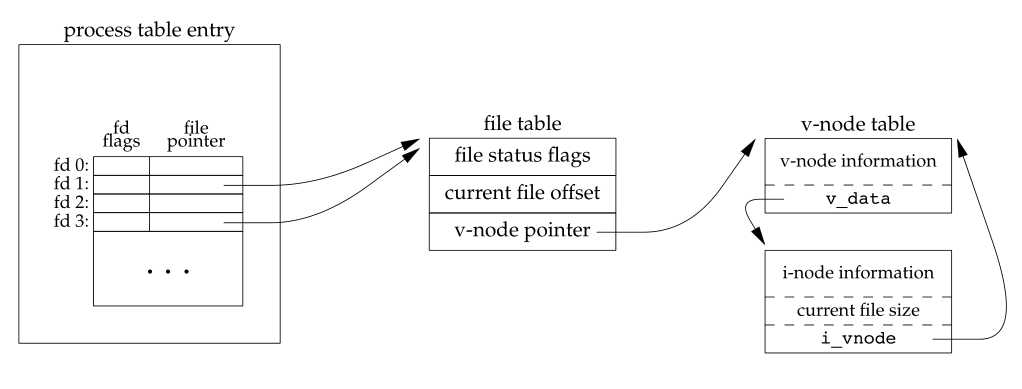

The new file descriptor that is returned as the value of the functions shares the same file table entry as the fd argument. We show this in Figure 3.9.

Figure 3.9 Kernel data structures after dup(1)

In this figure, we assume that when it’s started, the process executes

newfd = dup(1);

We assume that the next available descriptor is 3 (which it probably is, since 0, 1, and 2 are opened by the shell). Because both descriptors point to the same file table entry, they share the same file status flags—read, write, append, and so on—and the same current file offset.

Each descriptor has its own set of file descriptor flags. As we describe in Section 3.14, the close-on-exec file descriptor flag for the new descriptor is always cleared by the dup functions.
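The arrangement in Figure 3.9 can be verified with a short sketch: a write through the original descriptor advances the offset seen through the duplicate, and closing the original leaves the duplicate usable because the file table entry is still referenced. The function name and temporary-file template are hypothetical.

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp and other POSIX/XSI declarations */
#include <stdlib.h>
#include <unistd.h>

/* Returns the offset seen through newfd = dup(fd), or -1 on error. */
off_t offset_through_dup(void)
{
    char path[] = "/tmp/dup-XXXXXX";     /* hypothetical temporary file name */
    off_t off = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    int newfd = dup(fd);                  /* lowest-numbered unused descriptor */
    int ok = newfd >= 0 && write(fd, "abcdef", 6) == 6;
    close(fd);                            /* newfd still refers to the entry */
    if (ok)
        off = lseek(newfd, 0, SEEK_CUR);  /* advanced by the write through fd */
    if (newfd >= 0)
        close(newfd);
    return off;
}
```

The function returns 6 even though nothing was written through `newfd`, because there is only one current file offset, stored in the shared file table entry.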

Another way to duplicate a descriptor is with the fcntl function, which we describe in Section 3.14. Indeed, the call

dup(fd);

is equivalent to

fcntl(fd, F_DUPFD, 0);

Similarly, the call

dup2(fd, fd2);

is equivalent to

close(fd2);

fcntl(fd, F_DUPFD, fd2);

In this last case, the dup2 is not exactly the same as a close followed by an fcntl. The differences are as follows:

- dup2 is an atomic operation, whereas the alternate form involves two function calls. It is possible in the latter case to have a signal catcher called between the close and the fcntl that could modify the file descriptors. (We describe signals in Chapter 10.) The same problem could occur if a different thread changes the file descriptors. (We describe threads in Chapter 11.)

- There are some errno differences between dup2 and fcntl.
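For comparison, here is the two-call form written out as a hypothetical helper, `dup2_via_fcntl`. It mimics two documented `dup2` behaviors (reject an invalid `fd` before closing anything, and return `fd2` unchanged when `fd == fd2`), but it is not atomic: if a signal handler or another thread opens a descriptor between the `close` and the `fcntl`, `F_DUPFD` returns the lowest free descriptor greater than or equal to `fd2`, which may then be larger than `fd2`.

```c
#define _XOPEN_SOURCE 700   /* expose POSIX/XSI declarations */
#include <fcntl.h>
#include <unistd.h>

/* Hypothetical non-atomic stand-in for dup2(fd, fd2); callers should check
 * that the result equals fd2. */
int dup2_via_fcntl(int fd, int fd2)
{
    if (fcntl(fd, F_GETFD) < 0)         /* invalid fd: fail, leave fd2 alone */
        return -1;
    if (fd == fd2)                      /* nothing to close */
        return fd2;
    close(fd2);                         /* fd2 may not be open; ignore errors */
    return fcntl(fd, F_DUPFD, fd2);     /* lowest free >= fd2, FD_CLOEXEC clear */
}
```

In a single-threaded program with no signal handlers that open files, the result equals `fd2`, and the new descriptor has its close-on-exec flag cleared, just as with `dup2`.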

The dup2 system call originated with Version 7 and propagated through the BSD releases. The fcntl method for duplicating file descriptors appeared with System III and continued with System V. SVR3.2 picked up the dup2 function, and 4.2BSD picked up the fcntl function and the F_DUPFD functionality. POSIX.1 requires both dup2 and the F_DUPFD feature of fcntl.

sync, fsync, and fdatasync Functions

Traditional implementations of the UNIX System have a buffer cache or page cache in the kernel through which most disk I/O passes. When we write data to a file, the data is normally copied by the kernel into one of its buffers and queued for writing to disk at some later time. This is called delayed write. (Chapter 3 of Bach [1986] discusses this buffer cache in detail.)

The kernel eventually writes all the delayed-write blocks to disk, normally when it needs to reuse the buffer for some other disk block. To ensure consistency of the file system on disk with the contents of the buffer cache, the sync, fsync, and fdatasync functions are provided.

#include <unistd.h>

int fsync(int fd);

int fdatasync(int fd);

Returns: 0 if OK, -1 on error

void sync(void);

The sync function simply queues all the modified block buffers for writing and returns; it does not wait for the disk writes to take place.

The function sync is normally called periodically (usually every 30 seconds) from a system daemon, often called update. This guarantees regular flushing of the kernel’s block buffers. The command sync(1) also calls the sync function.

The function fsync refers only to a single file, specified by the file descriptor fd, and waits for the disk writes to complete before returning. This function is used when an application, such as a database, needs to be sure that the modified blocks have been written to the disk.

The fdatasync function is similar to fsync, but it affects only the data portions of a file. With fsync, the file’s attributes are also updated synchronously.
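The pattern described for a database can be sketched as a helper that writes a buffer and does not report success until `fsync` (or `fdatasync`) returns. Unlike `sync`, which only queues the buffers, these calls wait for the writes to complete. The function name `save_durably`, its `data_only` parameter, and the permission bits are hypothetical choices.

```c
#define _XOPEN_SOURCE 700   /* expose fsync and fdatasync */
#include <errno.h>
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>

/*
 * Writes buf to path (truncating it) and flushes it to disk before returning.
 * data_only != 0 uses fdatasync, which need not flush attribute-only changes.
 * Returns 0 on success, -1 on error.
 */
int save_durably(const char *path, const void *buf, size_t len, int data_only)
{
    const char *p = buf;
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, S_IRUSR | S_IWUSR);
    if (fd < 0)
        return -1;

    while (len > 0) {                    /* write may transfer fewer bytes */
        ssize_t n = write(fd, p, len);
        if (n < 0 && errno == EINTR)
            continue;                    /* interrupted by a signal: retry */
        if (n < 0) {
            close(fd);
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }

    int rc = data_only ? fdatasync(fd) : fsync(fd);  /* waits for the disk */
    if (close(fd) < 0)
        rc = -1;
    return rc;
}
```

Checking the return value of `fsync` matters: an error there may be the only report that delayed-write data never reached the disk.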

fcntl Function

ioctl Function

/dev/fd

Summary

References