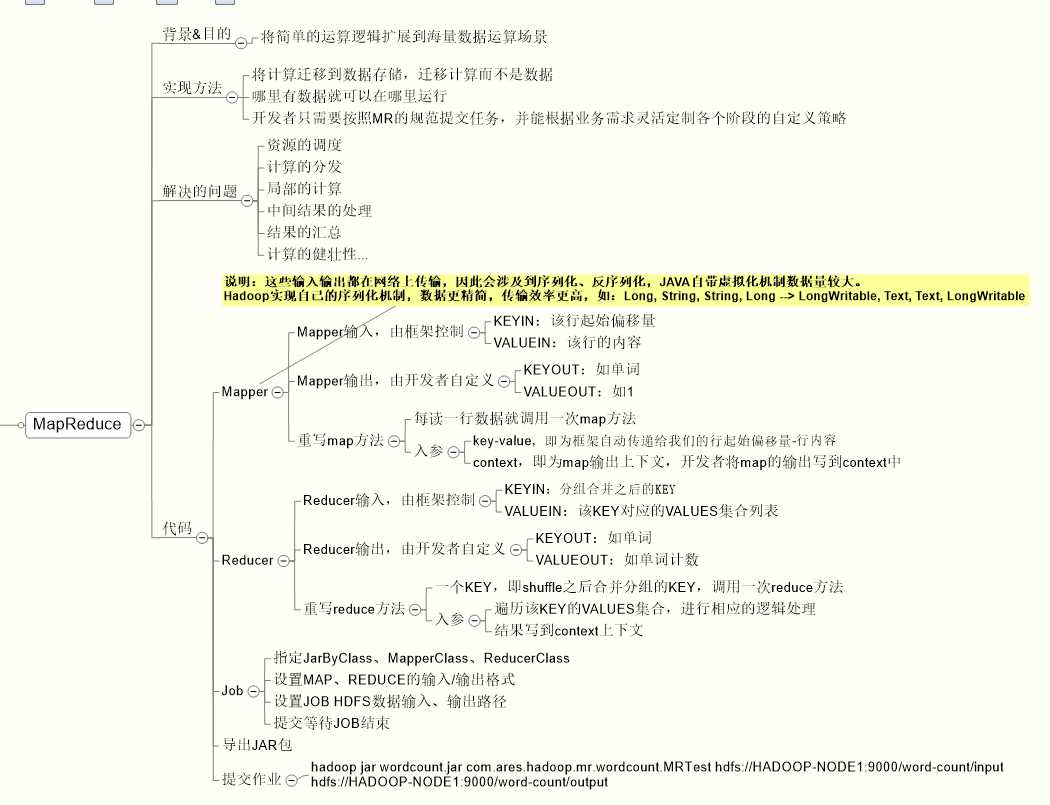

1. Basic Concepts

2. Mapper

    package com.ares.hadoop.mr.wordcount;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    // The Java types (Long, String, String, Long) correspond to the
    // Hadoop types (LongWritable, Text, Text, LongWritable).
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        private final static LongWritable ONE = new LongWritable(1L);
        private Text word = new Text();

        @Override
        protected void map(LongWritable key, Text value,
                Mapper<LongWritable, Text, Text, LongWritable>.Context context)
                throws IOException, InterruptedException {
            // Split the input line on the space character only.
            StringTokenizer itr = new StringTokenizer(value.toString(), " ");
            while (itr.hasMoreTokens()) {
                // Reuse the same Text and LongWritable objects. Allocating new
                // ones for every token, as in
                //   context.write(new Text(itr.nextToken()), new LongWritable(1L));
                // is less efficient.
                word.set(itr.nextToken());
                context.write(word, ONE);
            }
        }
    }
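The mapper passes `" "` as the delimiter to `StringTokenizer`, so it splits on the space character only. The following is a minimal sketch in plain Java (no Hadoop required) that compares this with the tokenizer's default delimiter set. The class `TokenizerSketch` and its method names are hypothetical and do not appear in the original post.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical helper class for illustration only.
class TokenizerSketch {
    // Splits on the space character only, as the mapper above does.
    static List<String> splitOnSpace(String line) {
        List<String> tokens = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line, " ");
        while (itr.hasMoreTokens()) {
            tokens.add(itr.nextToken());
        }
        return tokens;
    }

    // Splits on StringTokenizer's default delimiters:
    // space, tab, newline, carriage return, and form feed.
    static List<String> splitOnDefaultDelimiters(String line) {
        List<String> tokens = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            tokens.add(itr.nextToken());
        }
        return tokens;
    }

    public static void main(String[] args) {
        // Two spaces between the first two words, a tab before the third.
        String line = "hello  world\thadoop";
        System.out.println(splitOnSpace(line).size());             // 2 tokens
        System.out.println(splitOnDefaultDelimiters(line).size()); // 3 tokens
    }
}
```

Consecutive spaces do not produce empty tokens, but a tab-separated pair such as `world\thadoop` is counted as a single word when the delimiter is `" "`. Whether that matters depends on the input data.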

3. Reducer

    package com.ares.hadoop.mr.wordcount;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {
        // Reused for every key to avoid allocating a new object per output record.
        private LongWritable result = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values,
                Reducer<Text, LongWritable, Text, LongWritable>.Context context)
                throws IOException, InterruptedException {
            // Sum all counts emitted by the mappers for this word.
            long sum = 0;
            for (LongWritable value : values) {
                sum += value.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }
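To see how the mapper and reducer fit together, the data flow can be imitated in memory with plain Java collections. This is only a sketch under simplifying assumptions: a `TreeMap` stands in for the framework's shuffle and sort, everything runs in one process, and the class `LocalWordCountSketch` and its methods are hypothetical names, not part of the original post or of Hadoop.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical in-memory imitation of the word count job, for illustration only.
class LocalWordCountSketch {
    // Map step plus a stand-in for the shuffle: emit (word, 1) for each
    // token and group the emitted values by word, sorted by key.
    static Map<String, List<Long>> mapAndShuffle(List<String> lines) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line, " ");
            while (itr.hasMoreTokens()) {
                grouped.computeIfAbsent(itr.nextToken(), k -> new ArrayList<>()).add(1L);
            }
        }
        return grouped;
    }

    // Reduce step: sum the grouped values for each word.
    static Map<String, Long> reduce(Map<String, List<Long>> grouped) {
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> entry : grouped.entrySet()) {
            long sum = 0;
            for (long value : entry.getValue()) {
                sum += value;
            }
            counts.put(entry.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello world", "hello hadoop");
        // Prints {hadoop=1, hello=2, world=1}
        System.out.println(reduce(mapAndShuffle(lines)));
    }
}
```

In the real job the grouping happens across machines between the map and reduce phases, so the reducer only ever sees one key together with all of its values.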

4. JobRunner

    package com.ares.hadoop.mr.wordcount;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.log4j.Logger;

    public class MRTest {
        private static final Logger LOGGER = Logger.getLogger(MRTest.class);

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            LOGGER.debug("MRTest: MRTest STARTED...");

            // Expect exactly two arguments: the input path and the output path.
            if (args.length != 2) {
                LOGGER.error("MRTest: ARGUMENTS ERROR");
                System.exit(-1);
            }

            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // Job name
            job.setJobName("wordcount");

            // Job JAR, mapper, and reducer
            job.setJarByClass(MRTest.class);
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            // Output types of the job (the reducer output)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // Output types of the mapper
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);

            // Job input and output paths
            // Alternative: FileInputFormat.addInputPath(job, new Path(args[0]));
            FileInputFormat.setInputPaths(job, args[0]);
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // Submit the job and wait for it to finish; true enables verbose progress output.
            if (job.waitForCompletion(true)) {
                LOGGER.debug("MRTest: MRTest SUCCEEDED...");
            } else {
                LOGGER.error("MRTest: MRTest FAILED...");
            }
            LOGGER.debug("MRTest: MRTest COMPLETED...");
        }
    }

5. Submitting the Job JAR to YARN

    hadoop jar wordcount.jar com.ares.hadoop.mr.wordcount.MRTest \
        hdfs://HADOOP-NODE1:9000/word-count/input \
        hdfs://HADOOP-NODE1:9000/word-count/output

Original article: http://www.cnblogs.com/junneyang/p/5844480.html