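Preface

Spark can be built with either SBT or Maven, and a deployment package can then be generated with the make-distribution.sh script.

Building with SBT requires git to be installed, while building with Maven requires Maven. Both methods need a network connection.

Although Maven is the build method recommended on the Spark website, sbt compiles faster, so for Spark developers sbt may be the better choice. Because the sbt build is also driven by the Maven POM files, sbt accepts the same build parameters as Maven.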
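Since both tools read the same POM profiles, one set of flags can be passed to either. The sketch below only prints the two command lines and does not run them. The profile values (`-Pyarn`, `-Phadoop-2.4`, `-Dhadoop.version=2.4.0`) are example values in the style of the Spark 1.6 build documentation, not settings from this article, and the variable and function names are made up for illustration.

```shell
#!/bin/sh
# Shared build flags: assumed example values, adjust to your Hadoop version.
BUILD_FLAGS="-Pyarn -Phadoop-2.4 -Dhadoop.version=2.4.0"

# Print (not run) the Maven command line for these flags.
maven_cmd() {
  echo "build/mvn $BUILD_FLAGS -DskipTests clean package"
}

# Print (not run) the sbt command line for the same flags.
sbt_cmd() {
  echo "build/sbt $BUILD_FLAGS assembly"
}

maven_cmd
sbt_cmd
```

Keeping the flags in one variable makes it harder for the Maven and sbt builds to drift apart.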

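Reflections

If you have the time, compile the source code yourself. Anyone who wants to become an expert in the big data field has to go through this early hardship.

Whether you are compiling the Spark source or the Hadoop source, a first build will hit many problems and may take a day or even several days. That is normal, so keep the right attitude. Errors are welcome, because solving them is the best way to practice and improve.

Do not underestimate these errors either. Running into them is lucky, and understanding them well enough to know why they happen is a blessing.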

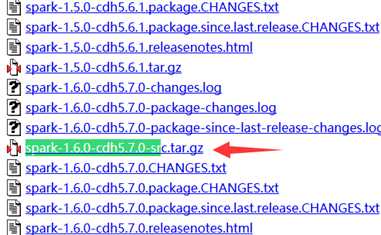

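These three are the mainstream distributions, although there are actually nine major ones.

Cloudera Manager (CM) for CDH is a paid product, although the prebuilt CDH packages are free.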

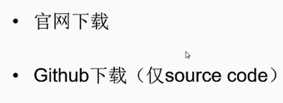

以下是从官网下载:

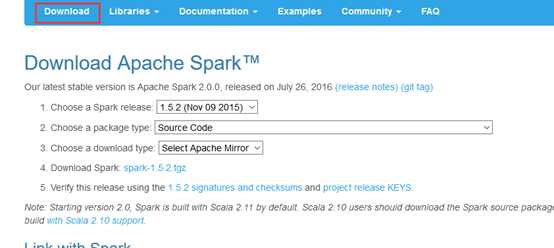

以下是Github下载(仅source code)

http://archive-primary.cloudera.com/cdh5/cdh/5/

http://zh.hortonworks.com/products/

********************************************************************************

I will use GitHub as the example here.

Prepare the Linux system environment (for example, CentOS 6.5).

Outline of the steps:

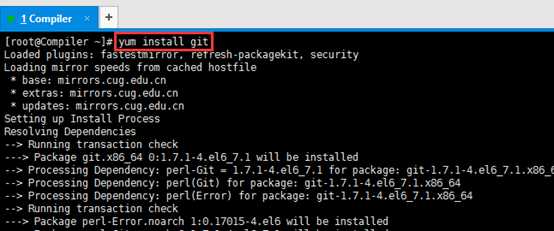

Step 1: Install git online

Step 2: Create a directory to hold the cloned Spark source code (mkdir -p /root/projects/opensource)

Step 3: Switch branches

Step 4: Install JDK 1.7+

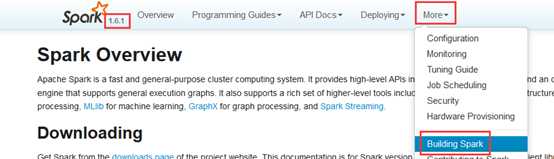

You can also refer to the documentation on the official website:

http://spark.apache.org/docs/1.6.1/building-spark.html

Step 1: Install git online

yum install git (as the root user)

or

sudo yum install git (as a regular user)
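The choice between the two forms can be scripted. This is a minimal sketch, assuming a non-root user has sudo rights; `git_install_cmd` is a hypothetical helper name, and `-y` is added so that yum does not stop at its "Is this ok [y/N]" prompts. The script only prints the command it would use.

```shell
#!/bin/sh
# Print the install command appropriate for a given numeric user id.
# uid 0 is root; any other user is assumed to have sudo rights.
git_install_cmd() {
  if [ "$1" -eq 0 ]; then
    echo "yum install -y git"
  else
    echo "sudo yum install -y git"
  fi
}

# Report what would be run for the current user, or skip if git is present.
if command -v git >/dev/null 2>&1; then
  echo "git already installed: $(git --version)"
else
  echo "would run: $(git_install_cmd "$(id -u)")"
fi
```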

[root@Compiler ~]# yum install git

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.cug.edu.cn

* extras: mirrors.cug.edu.cn

* updates: mirrors.cug.edu.cn

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package git.x86_64 0:1.7.1-4.el6_7.1 will be installed

--> Processing Dependency: perl-Git = 1.7.1-4.el6_7.1 for package: git-1.7.1-4.el6_7.1.x86_64

--> Processing Dependency: perl(Git) for package: git-1.7.1-4.el6_7.1.x86_64

--> Processing Dependency: perl(Error) for package: git-1.7.1-4.el6_7.1.x86_64

--> Running transaction check

---> Package perl-Error.noarch 1:0.17015-4.el6 will be installed

---> Package perl-Git.noarch 0:1.7.1-4.el6_7.1 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===============================================================================================================================================================================================

Package Arch Version Repository Size

===============================================================================================================================================================================================

Installing:

git x86_64 1.7.1-4.el6_7.1 base 4.6 M

Installing for dependencies:

perl-Error noarch 1:0.17015-4.el6 base 29 k

perl-Git noarch 1.7.1-4.el6_7.1 base 28 k

Transaction Summary

===============================================================================================================================================================================================

Install 3 Package(s)

Total download size: 4.7 M

Installed size: 15 M

Is this ok [y/N]: y

Downloading Packages:

(1/3): git-1.7.1-4.el6_7.1.x86_64.rpm | 4.6 MB 00:01

(2/3): perl-Error-0.17015-4.el6.noarch.rpm | 29 kB 00:00

(3/3): perl-Git-1.7.1-4.el6_7.1.noarch.rpm | 28 kB 00:00

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 683 kB/s | 4.7 MB 00:06

warning: rpmts_HdrFromFdno: Header V3 RSA/SHA1 Signature, key ID c105b9de: NOKEY

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Importing GPG key 0xC105B9DE:

Userid : CentOS-6 Key (CentOS 6 Official Signing Key) <centos-6-key@centos.org>

Package: centos-release-6-5.el6.centos.11.1.x86_64 (@anaconda-CentOS-201311272149.x86_64/6.5)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Is this ok [y/N]: y

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Installing : 1:perl-Error-0.17015-4.el6.noarch 1/3

Installing : git-1.7.1-4.el6_7.1.x86_64 2/3

Installing : perl-Git-1.7.1-4.el6_7.1.noarch 3/3

Verifying : perl-Git-1.7.1-4.el6_7.1.noarch 1/3

Verifying : 1:perl-Error-0.17015-4.el6.noarch 2/3

Verifying : git-1.7.1-4.el6_7.1.x86_64 3/3

Installed:

git.x86_64 0:1.7.1-4.el6_7.1

Dependency Installed:

perl-Error.noarch 1:0.17015-4.el6 perl-Git.noarch 0:1.7.1-4.el6_7.1

Complete!

[root@Compiler ~]#

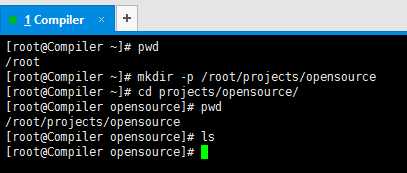

Step 2: Create a directory and clone the Spark source code

mkdir -p /root/projects/opensource

cd /root/projects/opensource

git clone https://github.com/apache/spark.git

[root@Compiler ~]# pwd

/root

[root@Compiler ~]# mkdir -p /root/projects/opensource

[root@Compiler ~]# cd projects/opensource/

[root@Compiler opensource]# pwd

/root/projects/opensource

[root@Compiler opensource]# ls

[root@Compiler opensource]#

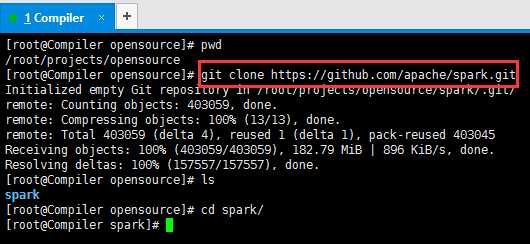

[root@Compiler opensource]# pwd

/root/projects/opensource

[root@Compiler opensource]# git clone https://github.com/apache/spark.git

Initialized empty Git repository in /root/projects/opensource/spark/.git/

remote: Counting objects: 403059, done.

remote: Compressing objects: 100% (13/13), done.

remote: Total 403059 (delta 4), reused 1 (delta 1), pack-reused 403045

Receiving objects: 100% (403059/403059), 182.79 MiB | 896 KiB/s, done.

Resolving deltas: 100% (157557/157557), done.

[root@Compiler opensource]# ls

spark

[root@Compiler opensource]# cd spark/

[root@Compiler spark]#
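The clone above downloads roughly 180 MiB, so it is worth not repeating by accident. The following is a sketch of a guarded clone; `clone_once` is a hypothetical helper, and treating an existing `.git` directory as proof of a finished clone is a simplifying assumption.

```shell
#!/bin/sh
# Clone a repository into dest only if dest does not already hold a checkout.
clone_once() {
  url="$1"
  dest="$2"
  if [ -d "$dest/.git" ]; then
    echo "skip: $dest already cloned"
  else
    # Create the parent directory first, then clone into dest.
    mkdir -p "$(dirname "$dest")" && git clone "$url" "$dest"
  fi
}

# Usage (commented out because it downloads the full repository):
# clone_once https://github.com/apache/spark.git /root/projects/opensource/spark
```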

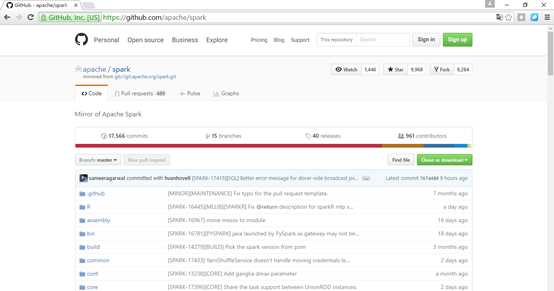

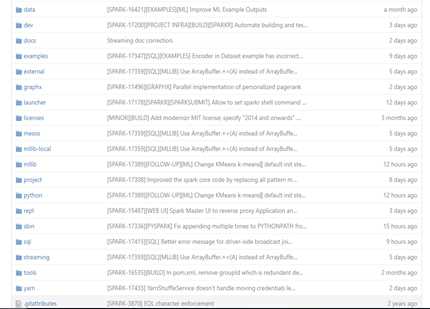

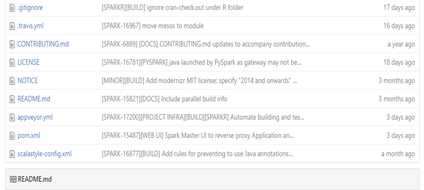

This corresponds to the following web page:

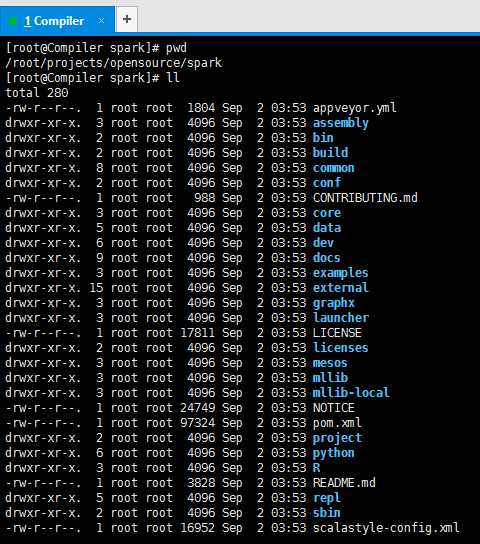

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# ll

total 280

-rw-r--r--. 1 root root 1804 Sep 2 03:53 appveyor.yml

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 assembly

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 bin

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 build

drwxr-xr-x. 8 root root 4096 Sep 2 03:53 common

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 conf

-rw-r--r--. 1 root root 988 Sep 2 03:53 CONTRIBUTING.md

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 core

drwxr-xr-x. 5 root root 4096 Sep 2 03:53 data

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 dev

drwxr-xr-x. 9 root root 4096 Sep 2 03:53 docs

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 examples

drwxr-xr-x. 15 root root 4096 Sep 2 03:53 external

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 graphx

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 launcher

-rw-r--r--. 1 root root 17811 Sep 2 03:53 LICENSE

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 licenses

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mesos

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mllib

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 mllib-local

-rw-r--r--. 1 root root 24749 Sep 2 03:53 NOTICE

-rw-r--r--. 1 root root 97324 Sep 2 03:53 pom.xml

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 project

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 python

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 R

-rw-r--r--. 1 root root 3828 Sep 2 03:53 README.md

drwxr-xr-x. 5 root root 4096 Sep 2 03:53 repl

drwxr-xr-x. 2 root root 4096 Sep 2 03:53 sbin

-rw-r--r--. 1 root root 16952 Sep 2 03:53 scalastyle-config.xml

drwxr-xr-x. 6 root root 4096 Sep 2 03:53 sql

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 streaming

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 tools

drwxr-xr-x. 3 root root 4096 Sep 2 03:53 yarn

[root@Compiler spark]#

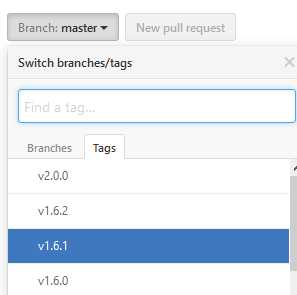

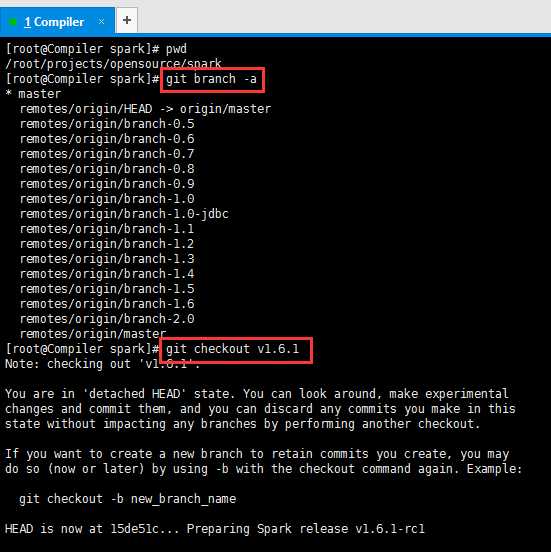

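Step 3: Switch branches

git checkout v1.6.1 (run inside the spark directory; v1.6.1 is a release tag rather than a branch, which is why git reports a detached HEAD afterwards)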

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# git branch -a

* master

remotes/origin/HEAD -> origin/master

remotes/origin/branch-0.5

remotes/origin/branch-0.6

remotes/origin/branch-0.7

remotes/origin/branch-0.8

remotes/origin/branch-0.9

remotes/origin/branch-1.0

remotes/origin/branch-1.0-jdbc

remotes/origin/branch-1.1

remotes/origin/branch-1.2

remotes/origin/branch-1.3

remotes/origin/branch-1.4

remotes/origin/branch-1.5

remotes/origin/branch-1.6

remotes/origin/branch-2.0

remotes/origin/master

[root@Compiler spark]# git checkout v1.6.1

Note: checking out 'v1.6.1'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -b with the checkout command again. Example:

git checkout -b new_branch_name

HEAD is now at 15de51c... Preparing Spark release v1.6.1-rc1

[root@Compiler spark]#
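As the git message above suggests, `-b` can attach the checkout to a local branch. Here is a small sketch of that variant; the `build-<tag>` branch naming and the `checkout_release` helper are assumptions made for illustration, not part of the original steps.

```shell
#!/bin/sh
# Check out a release tag onto a local branch named build-<tag>,
# so that HEAD is attached to a branch instead of being detached.
checkout_release() {
  tag="$1"
  git checkout -q -b "build-$tag" "$tag"
}

# Usage, inside the spark directory:
#   git tag -l 'v1.6*'        # list the 1.6.x release tags
#   checkout_release v1.6.1
#   git describe --tags       # confirm which tag HEAD is on
```

Plain `git checkout v1.6.1` is enough for a one-off build; a named branch mainly helps if you plan to commit local patches.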

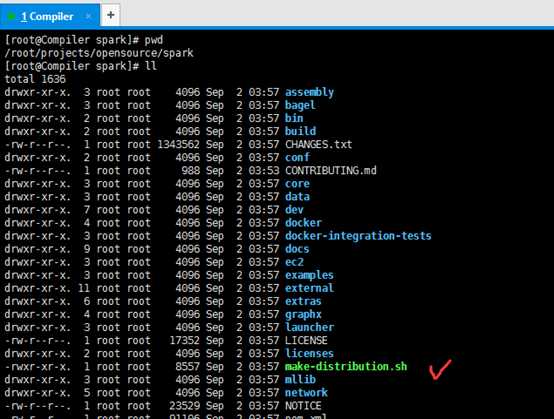

After the checkout, make-distribution.sh is now present in the spark directory:

[root@Compiler spark]# pwd

/root/projects/opensource/spark

[root@Compiler spark]# ll

total 1636

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 assembly

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 bagel

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 bin

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 build

-rw-r--r--. 1 root root 1343562 Sep 2 03:57 CHANGES.txt

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 conf

-rw-r--r--. 1 root root 988 Sep 2 03:53 CONTRIBUTING.md

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 core

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 data

drwxr-xr-x. 7 root root 4096 Sep 2 03:57 dev

drwxr-xr-x. 4 root root 4096 Sep 2 03:57 docker

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 docker-integration-tests

drwxr-xr-x. 9 root root 4096 Sep 2 03:57 docs

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 ec2

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 examples

drwxr-xr-x. 11 root root 4096 Sep 2 03:57 external

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 extras

drwxr-xr-x. 4 root root 4096 Sep 2 03:57 graphx

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 launcher

-rw-r--r--. 1 root root 17352 Sep 2 03:57 LICENSE

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 licenses

-rwxr-xr-x. 1 root root 8557 Sep 2 03:57 make-distribution.sh

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 mllib

drwxr-xr-x. 5 root root 4096 Sep 2 03:57 network

-rw-r--r--. 1 root root 23529 Sep 2 03:57 NOTICE

-rw-r--r--. 1 root root 91106 Sep 2 03:57 pom.xml

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 project

-rw-r--r--. 1 root root 13991 Sep 2 03:57 pylintrc

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 python

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 R

-rw-r--r--. 1 root root 3359 Sep 2 03:57 README.md

drwxr-xr-x. 5 root root 4096 Sep 2 03:57 repl

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 sbin

drwxr-xr-x. 2 root root 4096 Sep 2 03:57 sbt

-rw-r--r--. 1 root root 13191 Sep 2 03:57 scalastyle-config.xml

drwxr-xr-x. 6 root root 4096 Sep 2 03:57 sql

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 streaming

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 tags

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 tools

-rw-r--r--. 1 root root 848 Sep 2 03:57 tox.ini

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 unsafe

drwxr-xr-x. 3 root root 4096 Sep 2 03:57 yarn

[root@Compiler spark]#
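make-distribution.sh shows up in this listing but not in the earlier listing taken on master, so it is worth checking for it before trying to package. The sketch below prints a candidate command line without running it; the `--name`, `--tgz` and profile flags follow the example in the Spark 1.6 build documentation, and `custom-spark` and `dist_cmd` are placeholder names.

```shell
#!/bin/sh
# Build the make-distribution.sh command line for a given package name.
dist_cmd() {
  echo "./make-distribution.sh --name $1 --tgz -Phadoop-2.4 -Pyarn"
}

# Only report the command if the script is present and executable here.
if [ -x ./make-distribution.sh ]; then
  echo "ready: $(dist_cmd custom-spark)"
else
  echo "make-distribution.sh not found; run this from the spark directory on v1.6.1"
fi
```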

This corresponds to the page below.

Follow the steps at http://spark.apache.org/docs/1.6.1/building-spark.html.

● Install JDK 7+
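Before installing anything, you can check whether the machine already has a suitable JDK. This is a rough sketch that mirrors only the "JDK 7+" requirement stated above; `jdk_at_least_7` is a hypothetical helper, and it assumes `java -version` prints the version as a quoted string such as "1.7.0_79".

```shell
#!/bin/sh
# Succeed if a Java version string such as "1.7.0_79" or "11.0.2" is 7 or newer.
jdk_at_least_7() {
  ver="$1"
  major="${ver%%.*}"
  rest="${ver#*.}"
  minor="${rest%%.*}"
  if [ "$major" = "1" ]; then
    [ "$minor" -ge 7 ] 2>/dev/null   # legacy scheme: 1.7, 1.8
  else
    [ "$major" -ge 7 ] 2>/dev/null   # newer scheme: 9, 11, ...
  fi
}

# Read the installed version, if any, from the quoted field of `java -version`.
if command -v java >/dev/null 2>&1; then
  installed="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  if jdk_at_least_7 "$installed"; then
    echo "JDK $installed is new enough"
  else
    echo "JDK $installed is too old"
  fi
else
  echo "java not found on PATH"
fi
```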

Original article: http://www.cnblogs.com/zlslch/p/5865707.html