docker k8s + flannel

Kubernetes is Google's open-source solution for managing clusters of Docker containers.

Project site:

http://kubernetes.io/

Test environment:

node-1: 10.6.0.140

node-2: 10.6.0.187

node-3: 10.6.0.188

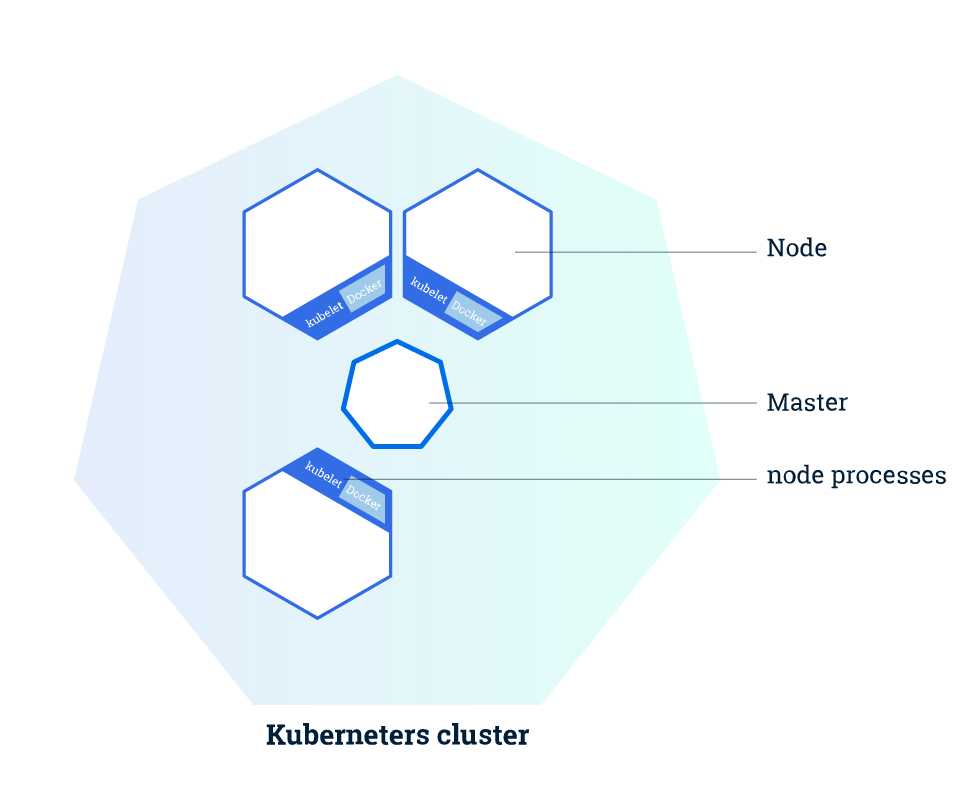

kubernetes 集群,包含 master 节点,与 node 节点。

关系图:

hostnamectl --static set-hostname hostname

10.6.0.140 - k8s-master

10.6.0.187 - k8s-node-1

10.6.0.188 - k8s-node-2
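As a small sketch of the mapping above, the helper below returns the hostname for a given IP so the hostnamectl command can be generated instead of typed by hand. The name hostname_for_ip is hypothetical and not part of any tool; the loop only prints the commands and does not run them.

```shell
# hostname_for_ip IP: illustrative helper that encodes the table above.
hostname_for_ip() {
  case "$1" in
    10.6.0.140) echo "k8s-master" ;;
    10.6.0.187) echo "k8s-node-1" ;;
    10.6.0.188) echo "k8s-node-2" ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
}

# Print (not run) the command for each machine in the test environment.
for ip in 10.6.0.140 10.6.0.187 10.6.0.188; do
  echo "$ip: hostnamectl --static set-hostname $(hostname_for_ip "$ip")"
done
```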

Deployment:

1: First, install etcd, a foundational component of a k8s cluster.

Install etcd on each of the three machines:

yum -y install etcd

Edit the configuration file /etc/etcd/etcd.conf and set the following parameters:

    ETCD_NAME=etcd1
    ETCD_DATA_DIR="/var/lib/etcd/etcd1.etcd"
    ETCD_LISTEN_PEER_URLS="http://10.6.0.140:2380"
    ETCD_LISTEN_CLIENT_URLS="http://10.6.0.140:2379,http://127.0.0.1:2379"
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.6.0.140:2380"
    ETCD_INITIAL_CLUSTER="etcd1=http://10.6.0.140:2380,etcd2=http://10.6.0.187:2380,etcd3=http://10.6.0.188:2380"
    ETCD_INITIAL_CLUSTER_STATE="new"
    ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
    ETCD_ADVERTISE_CLIENT_URLS="http://10.6.0.140:2379"

On the other etcd members, change ETCD_NAME and the IP addresses to match that machine.
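Since only the member name and IP differ between machines, the per-member file can be generated from a template. This is a minimal sketch: render_etcd_conf is a hypothetical helper, and naming the data directory after the member (as etcd1.etcd is for etcd1) is an assumption carried over to the other members.

```shell
# render_etcd_conf NAME IP: print an etcd.conf for one cluster member.
# Illustrative helper; the cluster list is the one from this walkthrough.
render_etcd_conf() {
  name="$1"
  ip="$2"
  cat <<EOF
ETCD_NAME=${name}
ETCD_DATA_DIR="/var/lib/etcd/${name}.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://127.0.0.1:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_INITIAL_CLUSTER="etcd1=http://10.6.0.140:2380,etcd2=http://10.6.0.187:2380,etcd3=http://10.6.0.188:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
EOF
}

# Example: the file contents for the second member.
render_etcd_conf etcd2 10.6.0.187
```

Review the output before redirecting it to /etc/etcd/etcd.conf on the target machine.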

Modify the etcd unit file /usr/lib/systemd/system/etcd.service:

sed -i 's/\\\"${ETCD_LISTEN_CLIENT_URLS}\\\"/\\\"${ETCD_LISTEN_CLIENT_URLS}\\\" --listen-client-urls=\\\"${ETCD_LISTEN_CLIENT_URLS}\\\" --advertise-client-urls=\\\"${ETCD_ADVERTISE_CLIENT_URLS}\\\" --initial-cluster-token=\\\"${ETCD_INITIAL_CLUSTER_TOKEN}\\\" --initial-cluster=\\\"${ETCD_INITIAL_CLUSTER}\\\" --initial-cluster-state=\\\"${ETCD_INITIAL_CLUSTER_STATE}\\\"/g' /usr/lib/systemd/system/etcd.service
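One way to confirm that the sed edit took effect is to look for the added flags in the unit file. The sketch below assumes nothing beyond grep; missing_flags is a hypothetical helper, and the flag list is taken from the sed command above. If the unit file changed while systemd was already running, systemctl daemon-reload is generally needed before the new ExecStart is used.

```shell
# missing_flags FILE FLAG...: print every flag that FILE does not mention.
missing_flags() {
  file="$1"
  shift
  for flag in "$@"; do
    # -F: match a fixed string; -e: allow the pattern to start with "--".
    grep -qF -e "$flag" "$file" || echo "$flag"
  done
}

unit=/usr/lib/systemd/system/etcd.service
if [ -f "$unit" ]; then
  # No output means every flag was found in the unit file.
  missing_flags "$unit" \
    --advertise-client-urls= --initial-cluster-token= \
    --initial-cluster= --initial-cluster-state=
fi
```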

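Start the etcd service on every node:

systemctl enable etcd

systemctl start etcd

Check that the service started:

systemctl status etcd

Check the etcd cluster health:

etcdctl cluster-health

The message cluster is healthy means the cluster formed successfully.

List the etcd cluster members: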

etcdctl member list
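For scripted checks, the health test can be reduced to an exit code. This is a sketch only: etcd_is_healthy is a hypothetical helper that looks for the cluster is healthy summary line mentioned above, and the usage part runs only when etcdctl is installed.

```shell
# etcd_is_healthy: read `etcdctl cluster-health` output on stdin and succeed
# only if the summary line "cluster is healthy" is present.
etcd_is_healthy() {
  grep -q 'cluster is healthy'
}

# Usage on a machine that has etcdctl installed.
if command -v etcdctl >/dev/null 2>&1; then
  if etcdctl cluster-health | etcd_is_healthy; then
    echo "etcd OK"
  else
    echo "etcd not healthy" >&2
  fi
fi
```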

2: Deploy flannel, the k8s network layer

Edit /etc/hosts so the machines can reach each other by hostname.

Run vi /etc/hosts and add:

10.6.0.140 k8s-master

10.6.0.187 k8s-node-1

10.6.0.188 k8s-node-2

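Install flannel:

yum -y install flannel

Clean up any leftover Docker network interfaces (docker0, flannel0, and so on). List the interfaces:

ifconfig

If any of them exist, delete them to avoid hard-to-diagnose errors: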

ip link delete docker0

....

Set the IP range that flannel will use:

etcdctl --endpoint http://10.6.0.140:2379 set /flannel/network/config '{"Network":"10.10.0.0/16","SubnetLen":25,"Backend":{"Type":"vxlan","VNI":1}}'
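The Network and SubnetLen values decide how large the overlay is: each flannel host leases one /25 out of 10.10.0.0/16. The arithmetic can be sketched as follows; the function names are illustrative only.

```shell
# subnet_count NETWORK_PREFIX SUBNET_LEN: number of per-host subnets available.
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

# addresses_per_subnet SUBNET_LEN: addresses in each per-host subnet,
# counting the network and broadcast addresses.
addresses_per_subnet() {
  echo $(( 1 << (32 - $1) ))
}

echo "subnets: $(subnet_count 16 25)"              # subnets: 512
echo "addresses each: $(addresses_per_subnet 25)"  # addresses each: 128
```

With three machines this range is far larger than needed; a longer SubnetLen gives more hosts with fewer addresses each.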

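Next, edit the flannel configuration file:

vim /etc/sysconfig/flanneld

    # Change to the etcd cluster addresses
    FLANNEL_ETCD="http://10.6.0.140:2379,http://10.6.0.187:2379,http://10.6.0.188:2379"
    # Change to /flannel/network, the prefix of the key written above (flanneld appends /config itself)
    FLANNEL_ETCD_KEY="/flannel/network"
    # Change to the name of this machine's physical network interface
    FLANNEL_OPTIONS="--iface=em1"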
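The --iface value must name the interface that carries this machine's cluster IP, and em1 will not be right on every host. A hedged sketch for looking it up from the output of ip -o -4 addr show (iface_for_ip is a hypothetical helper, not a flannel or iproute2 command):

```shell
# iface_for_ip IP: read `ip -o -4 addr show` output on stdin and print the
# name of the interface that holds IP.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}

# Usage on the master (requires the iproute2 `ip` command).
if command -v ip >/dev/null 2>&1; then
  ip -o -4 addr show | iface_for_ip 10.6.0.140
fi
```

An empty result means no interface on this machine holds that address.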

Start flannel:

systemctl enable flanneld

systemctl start flanneld

Next, modify the Docker unit file /usr/lib/systemd/system/docker.service.

In the ExecStart line, append $DOCKER_NETWORK_OPTIONS after dockerd:

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

Reload the systemd configuration and start Docker:

systemctl daemon-reload

systemctl start docker

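Check that flannel has taken over the network:

ifconfig

docker0 and flannel.1 should now have addresses inside the IP range configured above, which means the setup succeeded.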
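Checking the range by eye is easy to get wrong, so here is a minimal sketch that tests whether an address falls inside 10.10.0.0/16. The helper names are hypothetical, and the addresses in the usage lines are made-up examples rather than values from a real host.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# in_cidr IP NETWORK PREFIX_LEN: succeed if IP lies inside NETWORK/PREFIX_LEN.
in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example addresses (illustrative only).
in_cidr 10.10.37.129 10.10.0.0 16 && echo "10.10.37.129 is inside the flannel range"
in_cidr 172.17.0.1 10.10.0.0 16 || echo "172.17.0.1 is outside the flannel range"
```

If docker0 still shows an address outside the range, Docker most likely started without $DOCKER_NETWORK_OPTIONS.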

3: Install k8s

Install the master first.

Download the rpm package built by a friend:

http://upyun.mritd.me/kubernetes/kubernetes-1.3.8-1.x86_64.rpm

rpm -ivh kubernetes-1.3.8-1.x86_64.rpm

Because Google is blocked in mainland China, someone has already uploaded the images to Docker Hub, so pull them from there:

    docker pull chasontang/kube-proxy-amd64:v1.4.0
    docker pull chasontang/kube-discovery-amd64:1.0
    docker pull chasontang/kubedns-amd64:1.7
    docker pull chasontang/kube-scheduler-amd64:v1.4.0
    docker pull chasontang/kube-controller-manager-amd64:v1.4.0
    docker pull chasontang/kube-apiserver-amd64:v1.4.0
    docker pull chasontang/etcd-amd64:2.2.5
    docker pull chasontang/kube-dnsmasq-amd64:1.3
    docker pull chasontang/exechealthz-amd64:1.1
    docker pull chasontang/pause-amd64:3.0

After pulling, use docker tag to alias the images under gcr.io/google_containers:

    docker tag chasontang/kube-proxy-amd64:v1.4.0 gcr.io/google_containers/kube-proxy-amd64:v1.4.0
    docker tag chasontang/kube-discovery-amd64:1.0 gcr.io/google_containers/kube-discovery-amd64:1.0
    docker tag chasontang/kubedns-amd64:1.7 gcr.io/google_containers/kubedns-amd64:1.7
    docker tag chasontang/kube-scheduler-amd64:v1.4.0 gcr.io/google_containers/kube-scheduler-amd64:v1.4.0
    docker tag chasontang/kube-controller-manager-amd64:v1.4.0 gcr.io/google_containers/kube-controller-manager-amd64:v1.4.0
    docker tag chasontang/kube-apiserver-amd64:v1.4.0 gcr.io/google_containers/kube-apiserver-amd64:v1.4.0
    docker tag chasontang/etcd-amd64:2.2.5 gcr.io/google_containers/etcd-amd64:2.2.5
    docker tag chasontang/kube-dnsmasq-amd64:1.3 gcr.io/google_containers/kube-dnsmasq-amd64:1.3
    docker tag chasontang/exechealthz-amd64:1.1 gcr.io/google_containers/exechealthz-amd64:1.1
    docker tag chasontang/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

Then remove the originally pulled images:

    docker rmi chasontang/kube-proxy-amd64:v1.4.0
    docker rmi chasontang/kube-discovery-amd64:1.0
    docker rmi chasontang/kubedns-amd64:1.7
    docker rmi chasontang/kube-scheduler-amd64:v1.4.0
    docker rmi chasontang/kube-controller-manager-amd64:v1.4.0
    docker rmi chasontang/kube-apiserver-amd64:v1.4.0
    docker rmi chasontang/etcd-amd64:2.2.5
    docker rmi chasontang/kube-dnsmasq-amd64:1.3
    docker rmi chasontang/exechealthz-amd64:1.1
    docker rmi chasontang/pause-amd64:3.0
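The same pull, tag, and remove sequence can be written as one loop over the image list. This is a sketch, not the author's script: print_retag_commands is a hypothetical helper that only prints the docker commands so they can be reviewed first.

```shell
# The ten images and tags used in this walkthrough.
images="kube-proxy-amd64:v1.4.0 kube-discovery-amd64:1.0 kubedns-amd64:1.7
kube-scheduler-amd64:v1.4.0 kube-controller-manager-amd64:v1.4.0
kube-apiserver-amd64:v1.4.0 etcd-amd64:2.2.5 kube-dnsmasq-amd64:1.3
exechealthz-amd64:1.1 pause-amd64:3.0"

# print_retag_commands: emit pull, tag, and rmi commands for every image.
print_retag_commands() {
  for image in $images; do
    echo "docker pull chasontang/$image"
    echo "docker tag chasontang/$image gcr.io/google_containers/$image"
    echo "docker rmi chasontang/$image"
  done
}

print_retag_commands
```

Once the printed commands look right, piping the output to sh executes them in order.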

With kubernetes installed, configure the apiserver.

Edit the configuration file:

vim /etc/kubernetes/apiserver

    ###
    # kubernetes system config
    #
    # The following values are used to configure the kube-apiserver
    #

    # The address on the local server to listen to.
    KUBE_API_ADDRESS="--insecure-bind-address=10.6.0.140"

    # The port on the local server to listen on.
    KUBE_API_PORT="--port=8080"

    # Port minions listen on
    KUBELET_PORT="--kubelet-port=10250"

    # Comma separated list of nodes in the etcd cluster
    KUBE_ETCD_SERVERS="--etcd-servers=http://10.6.0.140:2379,http://10.6.0.187:2379,http://10.6.0.188:2379"

    # Address range to use for services
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

    # default admission control policies
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

    # Add your own!
    KUBE_API_ARGS=""

Once the configuration is done, start all the services:

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

systemctl enable kube-apiserver

systemctl enable kube-controller-manager

systemctl enable kube-scheduler

systemctl status kube-apiserver

systemctl status kube-controller-manager

systemctl status kube-scheduler
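The enable, start, and status steps repeat for every service, on the master here and for kubelet and kube-proxy on the nodes later. A minimal sketch of a loop that covers both cases follows; run and enable_and_start are hypothetical helpers, and DRY_RUN is an assumed variable that defaults to printing the commands instead of running them.

```shell
# run CMD...: print the command when DRY_RUN=1 (the default here), else run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# enable_and_start SERVICE...: enable, start, and show status for each service.
enable_and_start() {
  for svc in "$@"; do
    run systemctl enable "$svc"
    run systemctl start "$svc"
    run systemctl --no-pager status "$svc"
  done
}

# Master services; on a node, pass kubelet and kube-proxy instead.
enable_and_start kube-apiserver kube-controller-manager kube-scheduler
```

Setting DRY_RUN=0 before the call makes the helper execute the commands instead of printing them.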

Next, install on the node machines:

wget http://upyun.mritd.me/kubernetes/kubernetes-1.3.8-1.x86_64.rpm

rpm -ivh kubernetes-1.3.8-1.x86_64.rpm

Configure the kubelet.

Edit the configuration file:

vim /etc/kubernetes/kubelet

    ###
    # kubernetes kubelet (minion) config

    # The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
    KUBELET_ADDRESS="--address=10.6.0.187"

    # The port for the info server to serve on
    KUBELET_PORT="--port=10250"

    # You may leave this blank to use the actual hostname
    KUBELET_HOSTNAME="--hostname-override=k8s-node-1"

    # location of the api-server
    KUBELET_API_SERVER="--api-servers=http://10.6.0.140:8080"

    # Add your own!
    KUBELET_ARGS="--pod-infra-container-image=docker.io/kubernetes/pause:latest"

Note: KUBELET_HOSTNAME sets the hostname under which the node is displayed in the cluster. The name must be reachable by ping, which is why /etc/hosts was configured earlier.
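The file above is written for k8s-node-1. Assuming that only the listen address and the hostname override differ on the other node (an inference from the host table, not something the original states), the per-node file could be generated like this; render_kubelet_conf is a hypothetical helper.

```shell
# render_kubelet_conf IP HOSTNAME: print /etc/kubernetes/kubelet settings for one node.
# Illustrative helper; the apiserver URL and pause image are the ones used above.
render_kubelet_conf() {
  ip="$1"
  host="$2"
  cat <<EOF
KUBELET_ADDRESS="--address=${ip}"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${host}"
KUBELET_API_SERVER="--api-servers=http://10.6.0.140:8080"
KUBELET_ARGS="--pod-infra-container-image=docker.io/kubernetes/pause:latest"
EOF
}

# Example: settings for the second node.
render_kubelet_conf 10.6.0.188 k8s-node-2
```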

Next, edit the shared kubernetes config file:

vim /etc/kubernetes/config

    ###
    # kubernetes system config
    #
    # The following values are used to configure various aspects of all
    # kubernetes services, including
    #
    #   kube-apiserver.service
    #   kube-controller-manager.service
    #   kube-scheduler.service
    #   kubelet.service
    #   kube-proxy.service

    # logging to stderr means we get it in the systemd journal
    KUBE_LOGTOSTDERR="--logtostderr=true"

    # journal message level, 0 is debug
    KUBE_LOG_LEVEL="--v=0"

    # Should this cluster be allowed to run privileged docker containers
    KUBE_ALLOW_PRIV="--allow-privileged=false"

    # How the controller-manager, scheduler, and proxy find the apiserver
    KUBE_MASTER="--master=http://10.6.0.140:8080"

Finally, start all the services:

systemctl start kubelet

systemctl start kube-proxy

systemctl enable kubelet

systemctl enable kube-proxy

systemctl status kubelet

systemctl status kube-proxy

On the master, check that the nodes have registered and are healthy:

    [root@k8s-master ~]# kubectl --server="http://10.6.0.140:8080" get node
    NAME         STATUS     AGE
    k8s-master   NotReady   50m
    k8s-node-1   Ready      1m
    k8s-node-2   Ready      57s
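To pick out problem nodes from that listing automatically, the STATUS column can be filtered. The sketch below feeds the sample output shown above through a hypothetical helper, not_ready_nodes; a status such as Ready,SchedulingDisabled would also be reported, because the comparison is an exact match.

```shell
# not_ready_nodes: read `kubectl get node` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input copied from the listing above.
not_ready_nodes <<'EOF'
NAME         STATUS     AGE
k8s-master   NotReady   50m
k8s-node-1   Ready      1m
k8s-node-2   Ready      57s
EOF
# prints: k8s-master
```

Against a live cluster the input would come from kubectl --server="http://10.6.0.140:8080" get node piped into the helper.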

The cluster is now fully set up; all that remains is to test it with pods.

Original article: http://www.cnblogs.com/jicki/p/5941658.html