Tags: through, sata, block size, hard disk, osd, read, sam, 2.0, contain

Configuration:

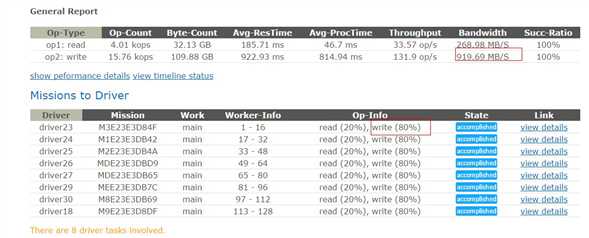

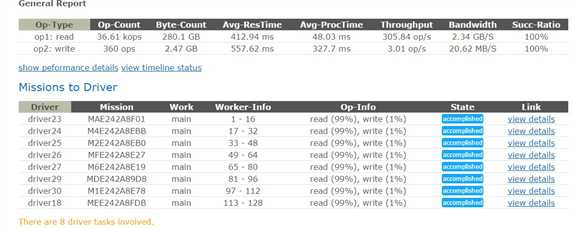

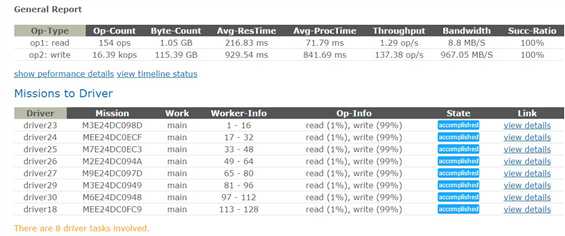

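The setup uses two RGW hosts with 10 GbE NICs, with OSPF providing high availability and load balancing. The Ceph OSD cluster has 21 nodes (each with a 10 GbE NIC and 12 × 4 TB SATA HDDs).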

Test VM configuration:

Eight VMs (4 cores, 8 GB RAM each) inside a VPC network built on VXLAN serve as cosbench drivers. 128 cosbench workers run the test concurrently, with object sizes between 4 MB and 10 MB.

Test scenarios and results:

| operation | ratio | throughput | ratio | throughput | ratio | throughput | ratio | throughput |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| read | 80% | 2.04 GB/s | 20% | 268.98 MB/s | 99% | 2.34 GB/s | 1% | 8.8 MB/s |
| write | 20% | 511.94 MB/s | 80% | 919.69 MB/s | 1% | 20.62 MB/s | 99% | 967.05 MB/s |

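Conclusion: RGW reads can saturate the 10 GbE NICs of the two RGW hosts. RGW writes reach about 1 GB/s, which is the sequential-write ceiling of this RGW cluster (adding RGW hosts, or running multiple RGW processes per host, might help improve write throughput).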
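As a rough check of the read claim, the measured read throughput can be compared with the combined line rate of the two RGW hosts. The sketch below is only an estimate: it assumes decimal units (1 GB = 1000 MB), one 10 Gbit/s link per RGW host, and no protocol overhead. The function and constant names are illustrative and do not come from the original test.

```python
# Assumptions (not stated in the original test): decimal units,
# one 10 Gbit/s link per RGW host, protocol overhead ignored.
RGW_HOSTS = 2
LINK_GBIT_PER_S = 10.0


def line_rate_mb_per_s(hosts: int, gbit_per_s: float) -> float:
    """Combined line rate of all hosts in MB/s (8 bits per byte)."""
    return hosts * gbit_per_s * 1000 / 8


def utilization(throughput_mb_per_s: float,
                hosts: int = RGW_HOSTS,
                gbit_per_s: float = LINK_GBIT_PER_S) -> float:
    """Fraction of the combined line rate used by the given throughput."""
    return throughput_mb_per_s / line_rate_mb_per_s(hosts, gbit_per_s)


# Read throughput from the results table, converted to MB/s.
read_results = {"80% read": 2040.0, "99% read": 2340.0}

for label, mb_per_s in read_results.items():
    print(f"{label}: {utilization(mb_per_s):.1%} of combined line rate")
```

Under these assumptions the 99% read scenario sits close to the 2500 MB/s combined line rate, which is consistent with the conclusion that reads are limited by the RGW NICs rather than by the OSD cluster.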

s3workload.xml configuration file:

    <?xml version="1.0" encoding="UTF-8" ?>
    <workload name="s3-sample" description="sample benchmark for s3">
      <storage type="s3" config="accesskey=ak;secretkey=sk;proxyhost=;proxyport=;endpoint=http://rgw-host-ip" />
      <workflow>
        <workstage name="init">
          <work type="init" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />
        </workstage>
        <workstage name="prepare">
          <work type="prepare" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,10);sizes=u(4,10)MB" />
        </workstage>
        <workstage name="main">
          <work name="main" workers="128" runtime="120">
            <operation type="read" ratio="99" config="cprefix=s3testqwer;containers=u(1,2);objects=u(1,10)" />
            <operation type="write" ratio="1" config="cprefix=s3testqwer;containers=u(1,2);objects=u(11,32);sizes=u(4,10)MB" />
          </work>
        </workstage>
        <workstage name="cleanup">
          <work type="cleanup" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,32)" />
        </workstage>
        <workstage name="dispose">
          <work type="dispose" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />
        </workstage>
      </workflow>
    </workload>
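The file above covers only the 99% read / 1% write scenario. The other scenarios in the results table presumably differ only in the two `ratio` values, so the same workload could be generated for each mix. The following is a minimal sketch of that idea using Python's standard `xml.etree.ElementTree`. The helper names `add_stage` and `build_workload` are hypothetical, and the credentials and endpoint are the placeholders from the file above.

```python
import xml.etree.ElementTree as ET

# Placeholders copied from s3workload.xml; replace with real values.
STORAGE = "accesskey=ak;secretkey=sk;proxyhost=;proxyport=;endpoint=http://rgw-host-ip"
PREFIX = "cprefix=s3testqwer"


def add_stage(workflow, name, config):
    """Append a single-worker stage whose work type equals the stage name."""
    stage = ET.SubElement(workflow, "workstage", name=name)
    ET.SubElement(stage, "work", type=name, workers="1", config=config)


def build_workload(read_ratio, workers=128, runtime=120):
    """Return the workload XML as a string for a given read percentage."""
    if not 0 < read_ratio < 100:
        raise ValueError("read_ratio must be between 1 and 99")
    root = ET.Element("workload", name="s3-sample",
                      description="sample benchmark for s3")
    ET.SubElement(root, "storage", type="s3", config=STORAGE)
    workflow = ET.SubElement(root, "workflow")

    add_stage(workflow, "init", f"{PREFIX};containers=r(1,2)")
    add_stage(workflow, "prepare",
              f"{PREFIX};containers=r(1,2);objects=r(1,10);sizes=u(4,10)MB")

    main = ET.SubElement(workflow, "workstage", name="main")
    work = ET.SubElement(main, "work", name="main",
                         workers=str(workers), runtime=str(runtime))
    ET.SubElement(work, "operation", type="read", ratio=str(read_ratio),
                  config=f"{PREFIX};containers=u(1,2);objects=u(1,10)")
    ET.SubElement(work, "operation", type="write", ratio=str(100 - read_ratio),
                  config=f"{PREFIX};containers=u(1,2);objects=u(11,32);sizes=u(4,10)MB")

    add_stage(workflow, "cleanup", f"{PREFIX};containers=r(1,2);objects=r(1,32)")
    add_stage(workflow, "dispose", f"{PREFIX};containers=r(1,2)")

    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Read percentages of the four scenarios in the results table.
    for ratio in (80, 20, 99, 1):
        print(f"<!-- read {ratio}% / write {100 - ratio}% -->")
        print(build_workload(ratio))
```

Each generated document can be saved to its own file and submitted to cosbench in the same way as the hand-written s3workload.xml.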

Cosbench test screenshots:


Original article: http://www.cnblogs.com/bodhitree/p/6149581.html