Tags: arp, file, interval, DIY, crawler, uid, foreach, size, html

Use case

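A crawler that scrapes a site too often gets blocked by the site's anti-crawling measures. Some sites also restrict what a single IP address can do, for example allowing each IP only one operation. In these cases you need to send requests through proxies. Demand for this is high, and commercial providers sell proxy access. If you don't want to pay, you can build a proxy pool yourself.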

Tools used

O/RM: Entity Framework

HTML parsing: HtmlAgilityPack

Task scheduling: Quartz.NET

How it works

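Some websites publish free proxy IPs, for example xicidaili.com and proxy360.cn. They list many free proxies, but some are of poor quality. The program therefore has to remove low-quality proxies from the pool promptly and keep adding good ones.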

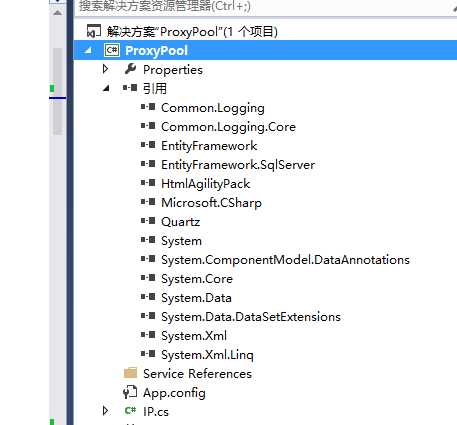

Building the solution

Create a console application named ProxyPool. Use NuGet to add the Entity Framework, HtmlAgilityPack and Quartz.NET packages.

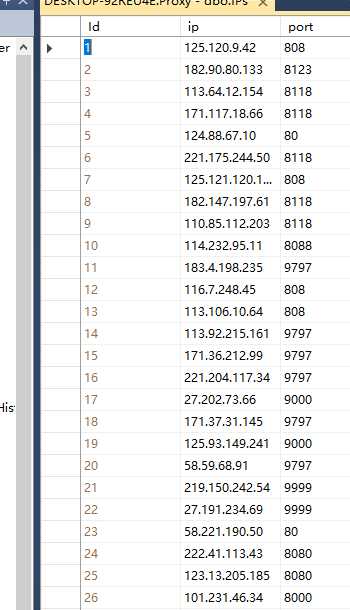

Create an IP class:

```csharp
public class IP
{
    public int Id { get; set; }
    public string ip { get; set; }
    public int port { get; set; }
}
```

Create the database context class:

```csharp
using System.Data.Entity;

public class ProxyEntity : DbContext
{
    public DbSet<IP> IPS { get; set; }
}
```

Edit the configuration file (App.config) and add:

```xml
<connectionStrings>
  <add name="ProxyEntity" connectionString="server=.;database=Proxy;uid=sa;pwd=000000" providerName="System.Data.SqlClient" />
</connectionStrings>
```

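Create a PoolManage class to hold the pool logic. Its constructor creates the database context:

```csharp
private ProxyEntity Database;

public PoolManage()
{
    Database = new ProxyEntity();
}
```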

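Next, wrap the logic for adding a proxy IP. The method first queries the database to see whether the IP is already in the pool and inserts it only if it is not, so the pool contains no duplicates.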

```csharp
public void Add(IP ip)
{
    var ips = Database.IPS;
    // Any() only asks whether a match exists, instead of counting every match
    if (!ips.Any(i => i.ip == ip.ip))
    {
        ips.Add(ip);
        Database.SaveChanges();
    }
}
```
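The check-before-insert idea can also be sketched without a database by using a `HashSet`. This sketch is illustrative only and is not the author's code. `ProxyKeySet` is a hypothetical name. Unlike the `Add` method above, it assumes a proxy is identified by both its address and its port.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for the IPS table, used only to illustrate de-duplication.
public class ProxyKeySet
{
    // "ip:port" keys already in the pool; HashSet lookups are O(1) on average.
    private readonly HashSet<string> _keys = new HashSet<string>(StringComparer.OrdinalIgnoreCase);

    // Returns true if the proxy was new and has been added, false if it was already present.
    public bool TryAdd(string ip, int port)
    {
        string key = ip.Trim() + ":" + port;
        return _keys.Add(key); // HashSet.Add returns false for a duplicate key
    }

    public int Count => _keys.Count;
}
```

With a real database, a unique index on the address column is a more reliable guard. Two threads can both pass the existence check before either one saves.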

Wrap a method that downloads a page:

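```csharp
public string DownloadHtml(string url)
{
    try
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
        request.UserAgent = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:49.0) Gecko/20100101 Firefox/49.0";
        request.Timeout = 10000; // milliseconds (an arbitrary limit) so a slow site can't hang the crawler
        // dispose the response as well as the stream so the connection is released
        using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
        using (Stream dataStream = response.GetResponseStream())
        using (StreamReader reader = new StreamReader(dataStream, Encoding.UTF8))
        {
            return reader.ReadToEnd();
        }
    }
    catch (Exception)
    {
        // network error, timeout or error status: treat it as an empty page
        return "";
    }
}
```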

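```csharp
public void Downloadxicidaili() // download and parse the xicidaili.com page
{
    string url = "http://www.xicidaili.com/";
    string html = DownloadHtml(url);
    HtmlDocument doc = new HtmlAgilityPack.HtmlDocument();
    doc.LoadHtml(html);
    HtmlNode node = doc.DocumentNode;
    string xpathstring = "//tr[@class='odd']";
    HtmlNodeCollection collection = node.SelectNodes(xpathstring);
    if (collection == null) return; // SelectNodes returns null when nothing matches, e.g. the download failed
    foreach (var item in collection)
    {
        IP ip = new IP();
        string xpath = "td[2]"; // second cell: IP address
        ip.ip = item.SelectSingleNode(xpath).InnerText.Trim();
        xpath = "td[3]"; // third cell: port
        if (!int.TryParse(item.SelectSingleNode(xpath).InnerText.Trim(), out int port))
            continue; // skip rows whose port is not a number
        ip.port = port;
        Add(ip);
    }
}
```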
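For a simple table layout, the same extraction can be sketched with regular expressions instead of HtmlAgilityPack. This illustration rests on several assumptions. `ProxyTableParser` is a hypothetical name. It assumes the second and third `<td>` of each row hold the address and port, matching the `td[2]`/`td[3]` XPath above. Regex-based HTML parsing also breaks easily whenever the markup changes.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper: pulls (ip, port) pairs out of <tr> rows whose 2nd and 3rd <td> cells
// hold the address and the port.
public static class ProxyTableParser
{
    private static readonly Regex RowRegex =
        new Regex(@"<tr[^>]*>(.*?)</tr>", RegexOptions.Singleline | RegexOptions.IgnoreCase);
    private static readonly Regex CellRegex =
        new Regex(@"<td[^>]*>(.*?)</td>", RegexOptions.Singleline | RegexOptions.IgnoreCase);

    public static List<(string Ip, int Port)> Parse(string html)
    {
        var result = new List<(string Ip, int Port)>();
        foreach (Match row in RowRegex.Matches(html))
        {
            MatchCollection cells = CellRegex.Matches(row.Groups[1].Value);
            if (cells.Count < 3) continue; // header row (<th> cells) or malformed row
            string ip = cells[1].Groups[1].Value.Trim();
            // skip rows whose port cell is not a number instead of throwing like int.Parse
            if (int.TryParse(cells[2].Groups[1].Value.Trim(), out int port))
                result.Add((ip, port));
        }
        return result;
    }
}
```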

Scraping proxy360:

```csharp
public void Downloadproxy360() // download and parse the proxy360 page
{
    string url = "http://www.proxy360.cn/default.aspx";
    string html = DownloadHtml(url);
    HtmlDocument doc = new HtmlAgilityPack.HtmlDocument();
    doc.LoadHtml(html);
    string xpathstring = "//div[@class='proxylistitem']";
    HtmlNode node = doc.DocumentNode;
    HtmlNodeCollection collection = node.SelectNodes(xpathstring);
    if (collection == null) return; // nothing matched, e.g. the download failed
    foreach (var item in collection)
    {
        IP ip = new IP();
        var childnode = item.ChildNodes[1];
        xpathstring = "span[1]"; // first span: IP address
        ip.ip = childnode.SelectSingleNode(xpathstring).InnerText.Trim();
        xpathstring = "span[2]"; // second span: port
        if (!int.TryParse(childnode.SelectSingleNode(xpathstring).InnerText.Trim(), out int port))
            continue; // skip entries whose port is not a number
        ip.port = port;
        Add(ip);
    }
}
```
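Scraped values are not always clean, so it can help to validate them before calling `Add`. The following is a minimal sketch that assumes only IPv4 proxies are wanted. `ProxyValidator` is a hypothetical name.

```csharp
using System.Net;
using System.Net.Sockets;

// Hypothetical guard to run before Add(ip): rejects values that are not a dotted IPv4
// address or whose port is outside the valid TCP range.
public static class ProxyValidator
{
    public static bool IsValid(string ip, int port)
    {
        if (port < 1 || port > 65535) return false;
        // IPAddress.TryParse also accepts shorthand such as "1.2.3", so require four dotted parts
        return IPAddress.TryParse(ip, out IPAddress addr)
            && addr.AddressFamily == AddressFamily.InterNetwork
            && ip.Split('.').Length == 4;
    }
}
```

On its own, `IPAddress.TryParse` accepts shorthand forms such as `1.2.3`. That is why the sketch also counts the dotted parts.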

Adding proxies to the pool is only half the job. Bad proxies also have to be pulled out promptly.

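Proxy checking

A proxy counts as usable if a request to Baidu sent through it returns a page containing "百度" (Baidu):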

```csharp
public static bool IsAvailable(IP ip)
{
    try
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create("https://www.baidu.com/");
        request.UserAgent = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:49.0) Gecko/20100101 Firefox/49.0";
        request.Proxy = new WebProxy(ip.ip, ip.port); // route the request through the proxy under test
        request.Timeout = 5000; // milliseconds (an arbitrary limit); slow proxies count as unavailable
        using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
        using (Stream dataStream = response.GetResponseStream())
        using (StreamReader reader = new StreamReader(dataStream, Encoding.UTF8))
        {
            // a real Baidu page contains "百度"; a proxy's own error page does not
            return reader.ReadToEnd().Contains("百度");
        }
    }
    catch
    {
        return false;
    }
}
```
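The key detail in the check is assigning the proxy to the request. Without that step, the request goes out directly and only tests your own connection. With the newer `HttpClient` API, the equivalent setup looks roughly like the sketch below. `ProxyClientFactory` is a hypothetical name, and the 10-second timeout is an arbitrary assumption.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Sketch: an HttpClient whose traffic goes through a single pool entry.
public static class ProxyClientFactory
{
    public static HttpClientHandler CreateHandler(string host, int port)
    {
        return new HttpClientHandler
        {
            Proxy = new WebProxy(host, port), // proxy address becomes http://host:port/
            UseProxy = true
        };
    }

    public static HttpClient CreateClient(string host, int port)
    {
        var client = new HttpClient(CreateHandler(host, port))
        {
            Timeout = TimeSpan.FromSeconds(10) // assumed limit; tune it for your proxies
        };
        client.DefaultRequestHeaders.UserAgent.ParseAdd(
            "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:49.0) Gecko/20100101 Firefox/49.0");
        return client;
    }
}
```

A check would then call `GetStringAsync("https://www.baidu.com/")` on the client, treat exceptions and timeouts as failure, and dispose the client afterwards.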

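Checking proxies one at a time is slow. The check is therefore split into pages of 10, and each page is tested on its own thread:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class TextThread
{
    private ProxyEntity Database; // one context per thread: DbContext is not thread-safe
    private int PageNum;
    private int pageSize = 10;

    public TextThread(int _PageNum)
    {
        Database = new ProxyEntity();
        PageNum = _PageNum;
    }

    // Checks one page of proxies and deletes the ones that fail
    public void test()
    {
        List<IP> Ips = Database.IPS.OrderBy(i => i.Id).Skip(pageSize * PageNum).Take(pageSize).ToList();
        Console.WriteLine();
        foreach (var item in Ips)
        {
            if (!PoolManage.IsAvailable(item))
            {
                Database.IPS.Remove(item);
                Database.SaveChanges();
                Console.WriteLine("Removed one");
                continue; // move on to the next proxy on this page
            }
            Console.WriteLine("Passed");
        }
    }
}
```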
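The page check can be separated from the database work. That way, one failure does not stop the loop, and deletions can be saved once per page. The sketch below works under those assumptions. `PageChecker.FindDead` is hypothetical, and `isAvailable` stands in for `PoolManage.IsAvailable`.

```csharp
using System;
using System.Collections.Generic;

public static class PageChecker
{
    // Checks every item on a page and returns the ones that failed.
    // There is no early return, so every proxy on the page is tested.
    public static List<T> FindDead<T>(IEnumerable<T> page, Func<T, bool> isAvailable)
    {
        var dead = new List<T>();
        foreach (var item in page)
        {
            if (!isAvailable(item))
                dead.Add(item);
        }
        return dead;
    }
}
```

With Entity Framework 6, the returned list could then be passed to `Database.IPS.RemoveRange(dead)`, followed by a single `SaveChanges()`.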

Back in PoolManage:

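```csharp
public void TextAllIps()
{
    var ips = Database.IPS;
    int pageSize = 10; // must match TextThread.pageSize
    // cast before dividing: int / int truncates, so Math.Ceiling would never round up
    double s = (double)ips.Count() / pageSize;
    int PageCount = (int)Math.Ceiling(s);
    for (int i = 0; i < PageCount; i++)
    {
        TextThread test = new TextThread(i);
        Thread thread = new Thread(test.test);
        thread.IsBackground = true; // background threads don't keep the process alive
        thread.Start();
    }
}
```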
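Page counting is an easy place to slip on integer division. In C#, `25 / 10` is `2`, and `Math.Ceiling` cannot recover a remainder that has already been dropped. Here is a small integer-only sketch (`Paging.PageCount` is a hypothetical helper):

```csharp
using System;

public static class Paging
{
    // Number of pages needed for 'count' rows: integer ceiling without floating point.
    public static int PageCount(int count, int pageSize)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException(nameof(pageSize));
        return (count + pageSize - 1) / pageSize;
    }
}
```

For 25 proxies and a page size of 10, it gives (25 + 9) / 10 = 3 pages, so the last 5 proxies get their own thread.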

Each run has to scrape proxies and then check them, so wrap both steps in one method:

```csharp
public void Initial()
{
    Downloadxicidaili();
    Downloadproxy360();
    TextAllIps();
}
```

Try it out by adding the following to Main:

```csharp
PoolManage manager = new PoolManage();
manager.Initial();
Console.ReadLine();
```

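Many proxies eventually die and go from good to bad, so the program can't simply stop after one pass. It has to keep checking and keep scraping. Here Quartz is used to run the work on a schedule.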

First create the job class:

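```csharp
using Quartz;

public class TotalJob : IJob
{
    // Called by the scheduler on every trigger: scrape new proxies, then check the pool
    public void Execute(IJobExecutionContext context)
    {
        PoolManage manager = new PoolManage();
        manager.Initial();
    }
}
```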

Then set up the scheduler so that the job runs every 5 minutes:

```csharp
private static void Run()
{
    try
    {
        // Quartz.NET 2.x API; in 3.x these scheduler calls return Tasks and must be awaited
        StdSchedulerFactory factory = new StdSchedulerFactory();
        IScheduler scheduler = factory.GetScheduler();
        scheduler.Start();

        IJobDetail job = JobBuilder.Create<TotalJob>().WithIdentity("job1", "group1").Build();

        ITrigger trigger = TriggerBuilder.Create()
            .WithIdentity("trigger1", "group1")
            .StartNow()
            .WithSimpleSchedule(x => x
                .WithIntervalInMinutes(5) // run every 5 minutes
                .RepeatForever())
            .Build();

        scheduler.ScheduleJob(job, trigger);

        //Thread.Sleep(TimeSpan.FromSeconds(60));
        //scheduler.Shutdown();
    }
    catch (SchedulerException se)
    {
        Console.WriteLine(se);
    }
}
```
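If you would rather not depend on Quartz.NET, a `System.Threading.Timer` can drive the same periodic run. This is a swapped-in technique, not Quartz, and `NonOverlappingTimer` is a hypothetical name. It also skips a tick while the previous run is still busy, so slow runs do not pile up.

```csharp
using System;
using System.Threading;

// Stdlib alternative to a Quartz trigger: runs 'work' every 'period',
// skipping a tick if the previous run has not finished yet.
public sealed class NonOverlappingTimer : IDisposable
{
    private readonly Action _work;
    private readonly Timer _timer;
    private int _running; // 0 = idle, 1 = a run is in progress

    public NonOverlappingTimer(Action work, TimeSpan period)
    {
        _work = work;
        // due time zero: first run immediately, similar to StartNow()
        _timer = new Timer(_ => Tick(), null, TimeSpan.Zero, period);
    }

    private void Tick()
    {
        // Only one caller can switch 0 -> 1; any tick that finds 1 is skipped.
        if (Interlocked.CompareExchange(ref _running, 1, 0) != 0) return;
        try { _work(); }
        finally { Interlocked.Exchange(ref _running, 0); }
    }

    public void Dispose() => _timer.Dispose();
}
```

Because `Initial()` starts background threads and returns right away, this guard only covers the scraping part. The checking threads it starts can still outlive a tick.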

Add this code to Main:

```csharp
Run();
Console.WriteLine("Press any key to close the application");
Console.ReadKey(); // keep Main from returning while the scheduled job runs
```

The pool can now manage itself, but its proxies are meant to be used. Wrap a method that returns a random proxy from the pool:

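```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Pool
{
    private static readonly Random random = new Random();
    private static readonly object randomLock = new object(); // Random is not thread-safe

    // Returns a random proxy from the pool, or null if the pool is empty
    public static IP GetIP()
    {
        using (ProxyEntity Database = new ProxyEntity())
        {
            List<IP> list = Database.IPS.ToList();
            if (list.Count == 0)
            {
                return null;
            }
            lock (randomLock)
            {
                return list[random.Next(list.Count)];
            }
        }
    }
}
```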
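Picking a random element can be factored into a small generic helper that also handles the empty case. The sketch below uses a hypothetical `RandomPicker` class. It shares one `Random` instance because, on .NET Framework, several `Random` objects created in quick succession can receive the same time-based seed and then return the same sequence.

```csharp
using System;
using System.Collections.Generic;

public static class RandomPicker
{
    private static readonly Random Rng = new Random(); // one shared instance
    private static readonly object Gate = new object(); // Random is not thread-safe

    // Returns a random element, or default(T) (null for reference types) when the list is empty.
    public static T PickOrDefault<T>(IReadOnlyList<T> items)
    {
        if (items == null || items.Count == 0) return default(T);
        lock (Gate)
        {
            return items[Rng.Next(items.Count)];
        }
    }
}
```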

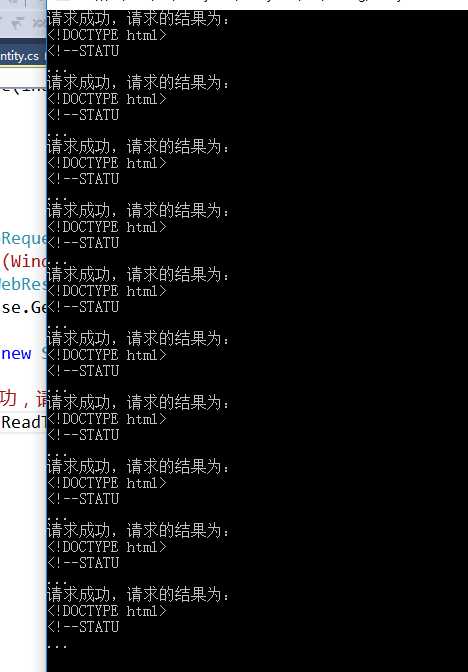

Let's test the quality of the proxy pool:

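```csharp
public static void Test()
{
    IP ip = Pool.GetIP();
    if (ip == null)
    {
        Console.WriteLine("The pool is empty");
        return;
    }
    try
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create("https://www.baidu.com/");
        request.UserAgent = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:49.0) Gecko/20100101 Firefox/49.0";
        request.Proxy = new WebProxy(ip.ip, ip.port); // send the request through the pooled proxy
        request.Timeout = 5000; // milliseconds (an arbitrary limit)
        using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
        using (Stream dataStream = response.GetResponseStream())
        using (StreamReader reader = new StreamReader(dataStream, Encoding.UTF8))
        {
            string body = reader.ReadToEnd();
            Console.WriteLine("Request succeeded, the result is:");
            Console.WriteLine(body.Substring(0, Math.Min(25, body.Length))); // avoid throwing on short responses
            Console.WriteLine("...");
        }
    }
    catch (Exception)
    {
        Console.WriteLine("Failed");
    }
}
```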
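A single request says little about the whole pool. A rough measure is the success rate over several attempts. In this sketch, `PoolQuality.SuccessRate` is hypothetical, and `attempt` is a placeholder for one request through a proxy taken from `Pool.GetIP()`. Exceptions are counted as failures.

```csharp
using System;

public static class PoolQuality
{
    // Runs 'attempt' n times and returns the fraction that succeeded (0.0 to 1.0).
    public static double SuccessRate(Func<bool> attempt, int n)
    {
        if (n <= 0) throw new ArgumentOutOfRangeException(nameof(n));
        int ok = 0;
        for (int i = 0; i < n; i++)
        {
            try
            {
                if (attempt()) ok++;
            }
            catch (Exception)
            {
                // a thrown exception counts as a failed request
            }
        }
        return (double)ok / n;
    }
}
```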

Test results

The quality is acceptable.


Original article: http://www.cnblogs.com/zuin/p/6217421.html