

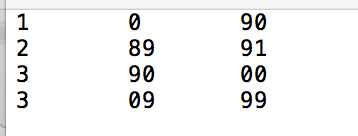

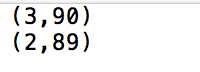

    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    // Maximum value per key
    object MaxTemperaturer {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("MaxGroup").setMaster("local")
        val sc = new SparkContext(conf)
        sc.textFile("/Users/lihu/Desktop/crawle/maxforgroup.txt")
          .map(_.split("\t"))                        // tab-separated fields: key, value
          .filter(_(1) != "0")                       // skip records whose value is "0"
          .map(rec => (rec(0).toInt, rec(1).toInt))
          .reduceByKey(Math.max(_, _))               // keep the largest value for each key
          .saveAsTextFile("/Users/lihu/Desktop/crawle/MaxTemperatureLogsss")
        sc.stop()
      }
    }

    // The 8 most frequent words
    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object TopSearchKeyWords {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("TopSearchKeyWords").setMaster("local")
        val sc = new SparkContext(conf)
        val src = sc.textFile("/Users/lihu/Desktop/crawle/wahah.txt")
        // Each input line is treated as one word; lowercase it so counting ignores case
        val countData = src.map(line => (line.toLowerCase(), 1)).reduceByKey(_ + _)
        // Swap to (count, word) so sortByKey can order by count, descending
        val sortedData = countData.map { case (k, v) => (v, k) }.sortByKey(false)
        // Take the top 8 and swap back to (word, count)
        val topData = sortedData.take(8).map { case (v, k) => (k, v) }
        topData.foreach(println)
        sc.stop()
      }
    }

    // Count words, ignoring case
    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object TopSearchKeyWords {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("TopSearchKeyWords").setMaster("local")
        val sc = new SparkContext(conf)
        val src = sc.textFile("/Users/lihu/Desktop/crawle/wahah.txt")

        // Option 1: countByKey is an action that returns a Map of counts to the driver
        src.map(line => (line.toLowerCase(), 1)).countByKey().foreach(println)

        // Option 2: reduceByKey aggregates on the cluster; collect() then brings the result back
        src.map(line => (line.toLowerCase(), 1)).reduceByKey(_ + _).collect().foreach(println)

        sc.stop()
      }
    }


Original post: http://www.cnblogs.com/sunyaxue/p/6368554.html