标签:启动 1.2 其他 spark slave mit code div core

====================================================

<dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-core_2.10</artifactId> <version>2.1.0</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-hive_2.10</artifactId> <version>2.1.0</version> </dependency> <dependency> <groupId>org.elasticsearch</groupId> <artifactId>elasticsearch-spark-20_2.11</artifactId> <version>5.1.2</version> </dependency>

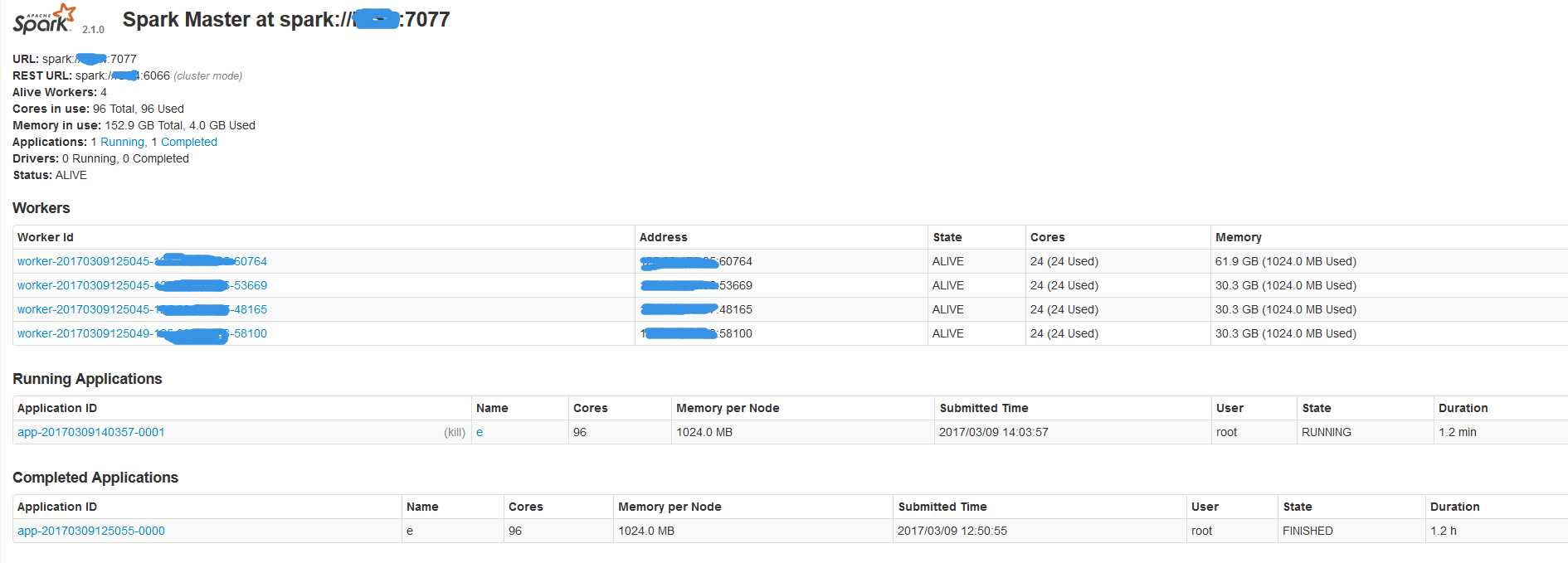

SparkConf conf = new SparkConf().setAppName("e").setMaster("spark://主节点:7077");

conf.set("es.nodes", "elasticsearchIP");

conf.set("es.port", "9200");

JavaSparkContext jsc = new JavaSparkContext(conf); JavaRDD<Map<String, Object>> esRDD = JavaEsSpark.esRDD(jsc, "logstash-spark_test/spark_test", "?q=selpwd").values();

JavaEsSpark.saveToEs(inJPRDD.values(), "logstash-spark_test/spark_test");

标签:启动 1.2 其他 spark slave mit code div core

原文地址:http://www.cnblogs.com/blair-chen/p/6526416.html