标签:运行 dir 删除 [] mapr except reduce test image

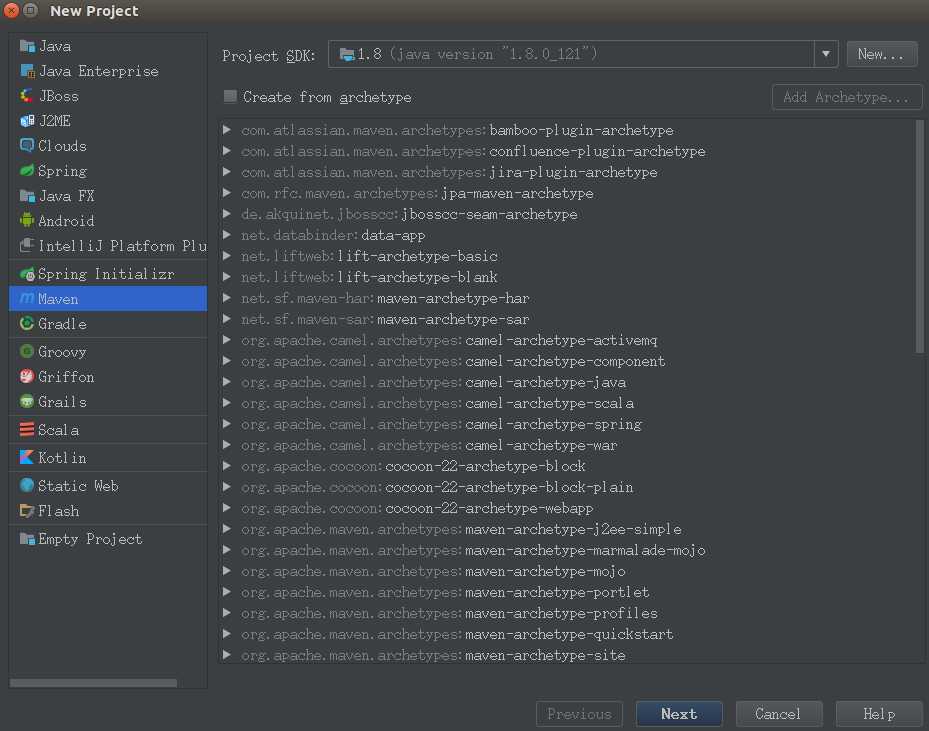

1.在IDEA下新建工程,选择from Mevan

GroupId:WordCount

ArtifactId:com.hadoop.1st

Project name:WordCount

2.pom.xml文件

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>WordCount</groupId>

<artifactId>com.hadoop.1st</artifactId>

<version>1.0-SNAPSHOT</version>

<repositories>

<repository>

<id>apache</id>

<url>http://maven.apache.org</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

<version>1.2.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.1</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<artifactId>maven-dependency-plugin</artifactId>

<configuration>

<excludeTransitive>false</excludeTransitive>

<stripVersion>true</stripVersion>

<outputDirectory>./lib</outputDirectory>

</configuration>

</plugin>

</plugins>

</build>

</project>

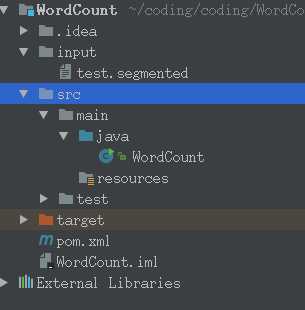

3.main/java目录下新建WordCount.java文件

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import java.io.IOException;

import java.util.StringTokenizer;

/**

* Created by common on 17-3-26.

*/

public class WordCount {

public static class WordCountMap extends

Mapper<LongWritable, Text, Text, IntWritable> {

private final IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String line = value.toString();

StringTokenizer token = new StringTokenizer(line);

while (token.hasMoreTokens()) {

word.set(token.nextToken());

context.write(word, one);

}

}

}

public static class WordCountReduce extends

Reducer<Text, IntWritable, Text, IntWritable> {

public void reduce(Text key, Iterable<IntWritable> values,

Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

context.write(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = new Job(conf);

job.setJarByClass(WordCount.class);

job.setJobName("wordcount");

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setMapperClass(WordCountMap.class);

job.setReducerClass(WordCountReduce.class);

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.waitForCompletion(true);

}

}

4.在src同级目录下新建input目录,以及下面的test.segmented文件

test.segmented文件内容

aa bb cc dd aa cc ee ff ff gg hh aa

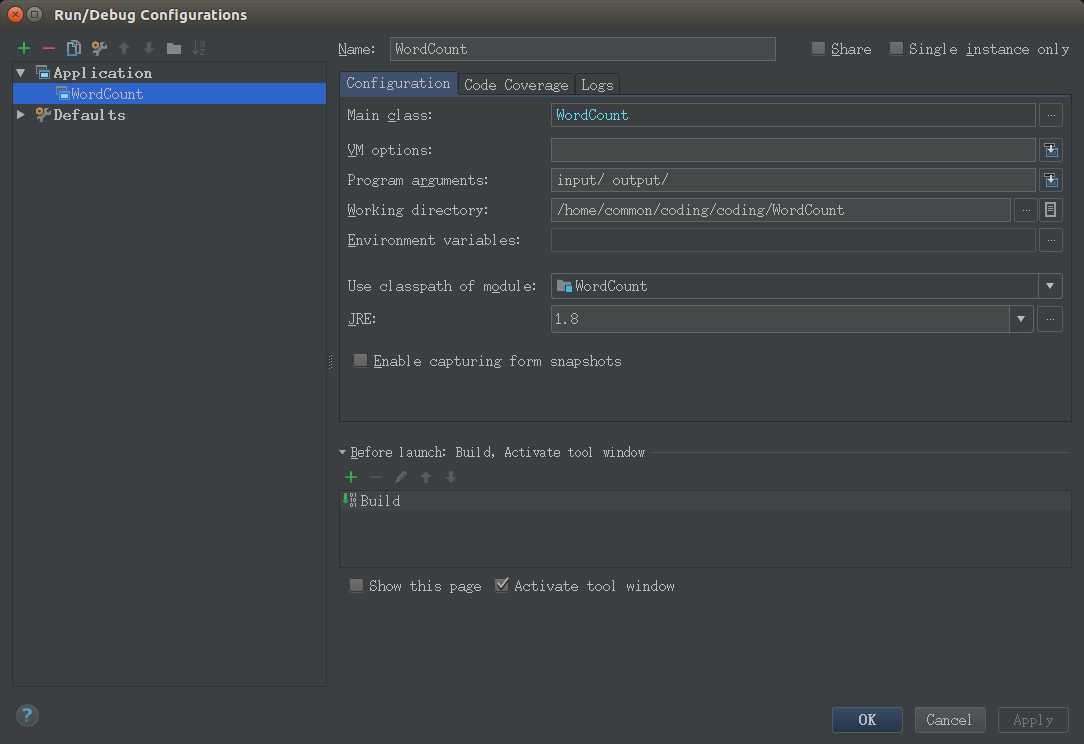

4.在run configuration下设置运行方式为Application

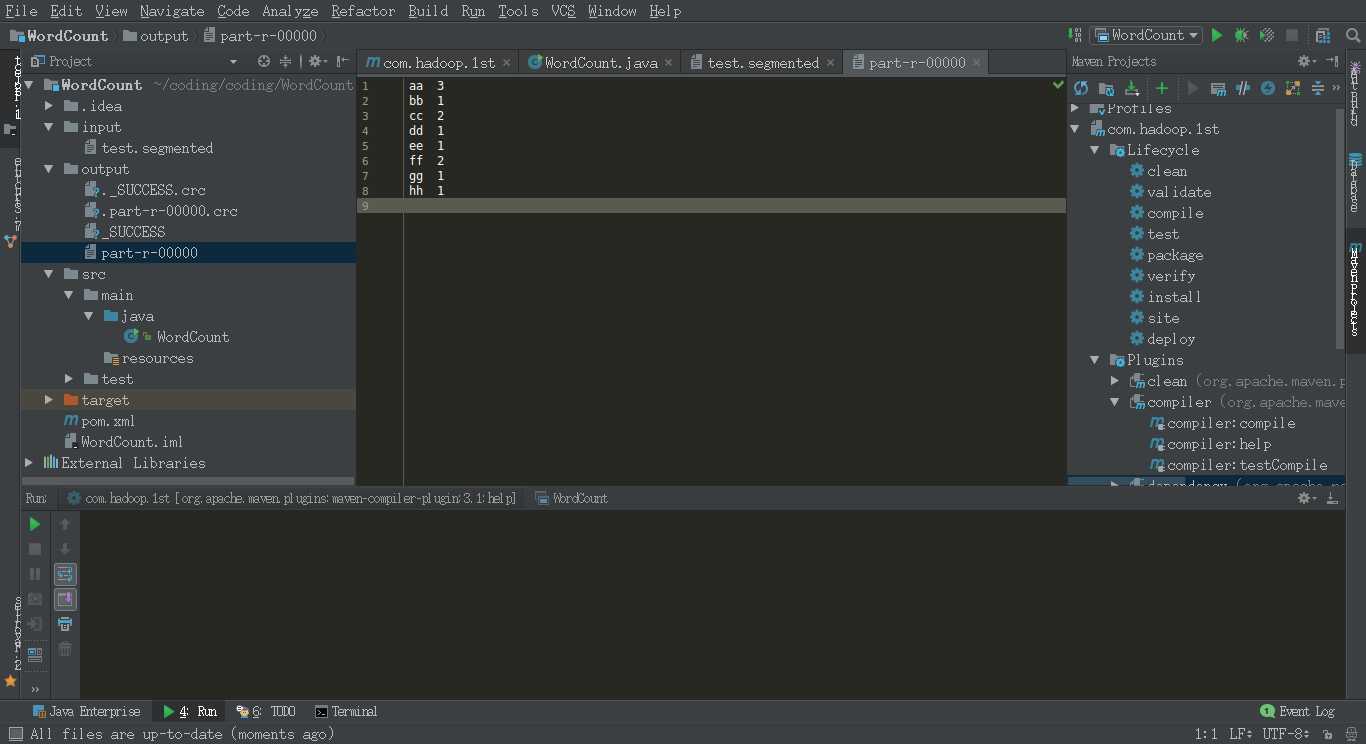

5.运行java文件,将会生成output目录,part-r-00000为运行的结果,下次运行必须删除output目录,否则会报错

标签:运行 dir 删除 [] mapr except reduce test image

原文地址:http://www.cnblogs.com/tonglin0325/p/6623566.html