Tags: ace traversal ase loss == info image infer reduce

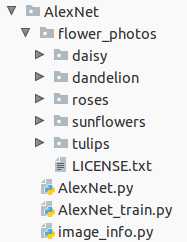

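This installment combines the modules from the previous two sections and adds forward propagation and backpropagation training. The result is a classification network with training, validation and testing stages.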

image_info.py: image loading

```python
import glob
import os.path
import random

import numpy as np
import tensorflow as tf


def creat_image_lists(validation_percentage, testing_percentage, INPUT_DATA):
    '''
    Store image information (bare file names, without paths) in a dictionary.
    :param validation_percentage: percentage of data used for validation
    :param testing_percentage: percentage of data used for testing
    :param INPUT_DATA: top-level data path (the directory directly above the class folders)
    :return: dict {label: {'dir': str, 'training': [], 'validation': [], 'testing': []}, ...}
    '''
    result = {}
    sub_dirs = [x[0] for x in os.walk(INPUT_DATA)]
    # The first entry returned by os.walk() is INPUT_DATA itself, so skip it
    is_root_dir = True
    # Iterate over the per-label folders
    for sub_dir in sub_dirs:
        if is_root_dir:
            is_root_dir = False
            continue

        extensions = ['jpg', 'jpeg', 'JPG', 'JPEG']
        file_list = []
        dir_name = os.path.basename(sub_dir)
        # Try every possible file extension
        for extension in extensions:
            file_glob = os.path.join(sub_dir, '*.' + extension)
            file_list.extend(glob.glob(file_glob))  # collect the matching paths and file names
        if not file_list:
            continue

        label_name = dir_name.lower()  # the label is simply the lower-cased folder name

        # Per-split file name lists
        training_images = []
        testing_images = []
        validation_images = []

        for file_name in file_list:
            base_name = os.path.basename(file_name)  # strip the path, keep only the file name
            # Randomly assign the image to validation, testing or training
            chance = np.random.randint(100)
            if chance < validation_percentage:
                validation_images.append(base_name)
            elif chance < (validation_percentage + testing_percentage):
                testing_images.append(base_name)
            else:
                training_images.append(base_name)
        # Dictionary entry for this label
        result[label_name] = {
            'dir': dir_name,
            'training': training_images,
            'testing': testing_images,
            'validation': validation_images,
        }
    return result


def get_image_path(image_lists, image_dir, label_name, index, category):
    '''
    Return the storage path of a single image.
    :param image_lists: dictionary of all images
    :param image_dir: outer folder (it contains the label folders)
    :param label_name: label name
    :param index: (random) index
    :param category: 'training', 'validation' or 'testing'
    :return: full path of the image file
    '''
    label_lists = image_lists[label_name]
    category_list = label_lists[category]   # image list of the requested category
    mod_index = index % len(category_list)  # wrap the index into the list range
    base_name = category_list[mod_index]    # file name at that index
    return os.path.join(image_dir, label_lists['dir'], base_name)


# Decode/resize sub-graphs are built once per (shape, method) and reused, so repeated
# calls do not keep adding new ops to the (single) default graph
_DECODE_OPS = {}


def _decode_and_resize(sess, image_raw, shape, method):
    key = (tuple(shape), method)
    if key not in _DECODE_OPS:
        raw_holder = tf.placeholder(tf.string)
        image = tf.image.decode_jpeg(raw_holder, channels=3)  # force 3 (RGB) channels
        _DECODE_OPS[key] = (raw_holder, tf.image.resize_images(image, shape, method=method))
    raw_holder, resized = _DECODE_OPS[key]
    return sess.run(resized, feed_dict={raw_holder: image_raw})


def get_random_cached_inputs(sess, n_class, image_lists, batch, category, INPUT_DATA, shape=[299, 299]):
    '''
    Randomly draw one batch of images and resize them to the input-layer size.
    Calls get_image_path.
    :param sess: session handle
    :param n_class: number of classes
    :param image_lists: image dictionary
    :param batch: batch size
    :param category: 'training' or 'validation'
    :param INPUT_DATA: top-level image folder
    :param shape: input-layer size
    :return: lists of images and one-hot labels
    '''
    images = []
    labels = []
    label_names = list(image_lists.keys())
    for i in range(batch):
        label_index = random.randrange(n_class)  # random label index
        label_name = label_names[label_index]    # label name
        image_index = random.randrange(65536)    # random image index within the label

        image_path = get_image_path(image_lists, INPUT_DATA, label_name, image_index, category)
        image_raw = tf.gfile.FastGFile(image_path, 'rb').read()
        image_data = _decode_and_resize(sess, image_raw, shape, random.randint(0, 3))

        ground_truth = np.zeros(n_class, dtype=np.float32)
        ground_truth[label_index] = 1.0  # one-hot label, e.g. [0, 0, 1, 0, ...]
        # Collect the image and its label
        images.append(image_data)
        labels.append(ground_truth)
    return images, labels


def get_test_images(sess, image_lists, n_class, shape=[299, 299], INPUT_DATA='./flower_photos'):
    '''
    Load all test data.
    Calls get_image_path.
    :param sess: session handle
    :param image_lists: image dictionary
    :param n_class: number of classes
    :param shape: input-layer size
    :param INPUT_DATA: top-level image folder
    :return: lists of test images and one-hot labels
    '''
    test_images = []
    ground_truths = []
    label_name_list = list(image_lists.keys())
    category = 'testing'
    for label_index, label_name in enumerate(label_name_list):
        for image_index, unused_base_name in enumerate(image_lists[label_name][category]):  # index, file name
            image_path = get_image_path(image_lists, INPUT_DATA, label_name, image_index, category)
            image_raw = tf.gfile.FastGFile(image_path, 'rb').read()
            image_data = _decode_and_resize(sess, image_raw, shape, random.randint(0, 3))

            ground_truth = np.zeros(n_class, dtype=np.float32)
            ground_truth[label_index] = 1.0
            test_images.append(image_data)
            ground_truths.append(ground_truth)
    return test_images, ground_truths


if __name__ == '__main__':

    INPUT_DATA = './flower_photos'  # data folder
    VALIDATION_PERCENTAGE = 10      # percentage of data used for validation
    TEST_PERCENTAGE = 10            # percentage of data used for testing
    BATCH_SIZE = 128                # batch size
    INPUT_SIZE = [224, 224]         # standard input size

    image_dict = creat_image_lists(VALIDATION_PERCENTAGE, TEST_PERCENTAGE, INPUT_DATA)
    n_class = len(image_dict.keys())
    sess = tf.Session()
    category = 'training'
    image_list, label_list = get_random_cached_inputs(
        sess, n_class, image_dict, BATCH_SIZE, category, INPUT_DATA, INPUT_SIZE)
```
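The split in `creat_image_lists` draws one integer in [0, 100) per image and compares it with the two percentages. A minimal stdlib sketch of the same threshold rule follows. The `split_files` helper is hypothetical, and the seeded `random.Random` is an assumption: the original calls `np.random.randint` without a seed, so its split changes on every run.

```python
import random


def split_files(file_names, validation_percentage, testing_percentage, seed=0):
    """Hypothetical helper: assign each file to validation/testing/training
    using the same threshold rule as creat_image_lists."""
    rng = random.Random(seed)  # seeded so the split is reproducible
    split = {'training': [], 'validation': [], 'testing': []}
    for name in file_names:
        chance = rng.randrange(100)  # uniform integer in [0, 100)
        if chance < validation_percentage:
            split['validation'].append(name)
        elif chance < validation_percentage + testing_percentage:
            split['testing'].append(name)
        else:
            split['training'].append(name)
    return split


files = ['img_%04d.jpg' % i for i in range(1000)]
parts = split_files(files, validation_percentage=10, testing_percentage=10)
print({k: len(v) for k, v in parts.items()})
```

Each image is assigned independently, so the bucket sizes are only approximately 80/10/10 percent of the data, and a small class can end up with an empty validation or testing list.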

AlexNet.py: the network backbone

Optimization:

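The L2 regularization terms are collected into the 'losses' collection, so the training script must add them to the loss when it computes it.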
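`inference()` puts one L2 term per fully connected weight matrix into the `'losses'` collection. The total loss is therefore the mean cross-entropy plus those terms. `tf.contrib.layers.l2_regularizer(scale)` computes `scale * tf.nn.l2_loss(W)`, which is `scale * sum(W**2) / 2`.

The pure-Python sketch below shows that arithmetic. The helpers `l2_term`, `softmax_cross_entropy` and `total_loss` are hypothetical, and the logits and weights are made-up toy values.

```python
import math


def l2_term(weights, scale):
    """scale * sum(w^2) / 2: the value an L2 regularizer adds for one weight matrix."""
    return scale * sum(w * w for row in weights for w in row) / 2


def softmax_cross_entropy(logits, label_index):
    """Cross-entropy of one example: logsumexp(logits) - logits[label]."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label_index]


def total_loss(batch_logits, batch_labels, fc_weights, scale):
    # Mean cross-entropy over the batch (the 'cross_entropy' term)
    ce = sum(softmax_cross_entropy(z, y) for z, y in zip(batch_logits, batch_labels))
    ce /= len(batch_logits)
    # Add every collected L2 term, like tf.add_n(tf.get_collection('losses'))
    return ce + sum(l2_term(w, scale) for w in fc_weights)


logits = [[2.0, 0.0], [0.0, 0.0]]
labels = [0, 1]                    # class indices, i.e. tf.argmax of the one-hot labels
weights = [[[1.0, 2.0]], [[3.0]]]  # two toy weight matrices
print(total_loss(logits, labels, weights, scale=0.0001))
```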

Update:

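Training and validation feed one batch at a time, while testing feeds all the test data at once. The fc1 layer code has therefore been changed so that the network no longer requires a fixed number of input images.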
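A variable batch size works because only the per-image feature size of `pool5` has to be known when the graph is built. The batch axis can be left to `tf.reshape(pool5, [-1, dim])`.

The sketch below reproduces TensorFlow's output-size rules for the layers of this network and derives `dim`:
- `SAME` padding: `ceil(n / stride)`
- `VALID` padding: `ceil((n - k + 1) / stride)`

The helper names are hypothetical.

```python
def same_out(n, stride):
    # padding='SAME': output = ceil(n / stride)
    return -(-n // stride)


def valid_out(n, k, stride):
    # padding='VALID': output = ceil((n - k + 1) / stride)
    return -(-(n - k + 1) // stride)


def alexnet_flatten_dim(size):
    """Spatial size after each layer of inference(), then the fc1 input width."""
    n = same_out(size, 4)   # conv1: 11x11, stride 4, SAME
    n = valid_out(n, 3, 2)  # pool1: 3x3, stride 2, VALID
    n = same_out(n, 1)      # conv2: stride 1, SAME
    n = valid_out(n, 3, 2)  # pool2: 3x3, stride 2, VALID
    n = same_out(n, 1)      # conv3, conv4, conv5: stride 1, SAME, size unchanged
    n = valid_out(n, 3, 2)  # pool5: 3x3, stride 2, VALID
    return n * n * 256      # pool5 has 256 channels


print(alexnet_flatten_dim(224))  # 9216
```

At 224×224, each image contributes 6 × 6 × 256 = 9216 features whatever the batch size. At the function's default 299×299, it contributes 8 × 8 × 256 = 16384.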

```python
import tensorflow as tf

'''
Main body of the AlexNet network. It takes a batch of images and returns the class logits,
applies L2 regularization to the fully connected weights and collects those terms into
the 'losses' collection.
'''


def print_activations(t):
    """
    Print the name and shape of a layer output.
    :param t: tensor
    """
    print(t.op.name, ' ', t.get_shape().as_list())


def inference(images, regularizer, N_CLASS=10, train=True):
    '''
    Main body of AlexNet
    :param images: image batch, 4-D array [batch, height, width, 3]
    :param regularizer: regularization function, e.g. regularizer = tf.contrib.layers.l2_regularizer(0.0001)
    :param N_CLASS: number of classes
    :param train: True or False, whether the network is being trained (enables dropout in the FC layers)
    :return: logits, unnormalized class scores of shape [batch, N_CLASS];
             parameters, all trainable parameters (a legacy return value, rarely needed)
    '''
    parameters = []

    # conv1
    with tf.name_scope('conv1') as scope:
        kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64], dtype=tf.float32,
                                                 stddev=1e-1), name='Weights')
        conv = tf.nn.conv2d(images, kernel, [1, 4, 4, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(bias, name=scope)
        print_activations(conv1)
        parameters += [kernel, biases]

    # pool1
    pool1 = tf.nn.max_pool(conv1,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool1')
    print_activations(pool1)

    # conv2
    with tf.name_scope('conv2') as scope:
        kernel = tf.Variable(tf.truncated_normal([5, 5, 64, 192], dtype=tf.float32,
                                                 stddev=1e-1), name='Weights')
        conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[192], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv2)

    # pool2
    pool2 = tf.nn.max_pool(conv2,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool2')
    print_activations(pool2)

    # conv3
    with tf.name_scope('conv3') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 192, 384],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='Weights')
        conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[384], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv3 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv3)

    # conv4
    with tf.name_scope('conv4') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='Weights')
        conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv4 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv4)

    # conv5
    with tf.name_scope('conv5') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='Weights')
        conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv5 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv5)

    # pool5
    pool5 = tf.nn.max_pool(conv5,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool5')
    print_activations(pool5)

    # fc1
    with tf.name_scope('fc1') as scope:
        # Flatten with the static per-image feature size and let -1 infer the batch size,
        # so the graph accepts any number of images (e.g. a placeholder of shape [None, ...])
        dim = 1
        for d in pool5.get_shape().as_list()[1:]:
            dim *= d
        reshape = tf.reshape(pool5, [-1, dim])
        Weight = tf.Variable(tf.truncated_normal([dim, 4096], stddev=0.01), name='Weights')
        bias = tf.Variable(tf.constant(0.1, shape=[4096]), name='biases')
        fc1 = tf.nn.relu(tf.matmul(reshape, Weight) + bias, name=scope)
        tf.add_to_collection('losses', regularizer(Weight))
        if train:
            fc1 = tf.nn.dropout(fc1, 0.5)  # keep_prob = 0.5
        parameters += [Weight, bias]
        print_activations(fc1)

    # fc2
    with tf.name_scope('fc2') as scope:
        Weight = tf.Variable(tf.truncated_normal([4096, 4096], stddev=0.01), name='Weights')
        bias = tf.Variable(tf.constant(0.1, shape=[4096]), name='biases')
        fc2 = tf.nn.relu(tf.matmul(fc1, Weight) + bias, name=scope)
        tf.add_to_collection('losses', regularizer(Weight))
        if train:
            fc2 = tf.nn.dropout(fc2, 0.5)
        parameters += [Weight, bias]
        print_activations(fc2)

    # fc3
    with tf.name_scope('fc3') as scope:
        Weight = tf.Variable(tf.truncated_normal([4096, N_CLASS], stddev=0.01), name='Weights')
        bias = tf.Variable(tf.constant(0.1, shape=[N_CLASS]), name='biases')
        logits = tf.add(tf.matmul(fc2, Weight), bias, name=scope)
        tf.add_to_collection('losses', regularizer(Weight))
        parameters += [Weight, bias]
        print_activations(logits)

    return logits, parameters
```
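Counting the parameters defined in `inference()` shows how heavily the fully connected layers dominate the network. The sketch below makes two assumptions:
- Inputs are 224×224, so pool5 is 6 × 6 × 256 and fc1 has 9216 inputs.
- `N_CLASS` is 5, the number of classes in `flower_photos`.

The layer shapes are transcribed from the code above; `LAYERS` and `count_params` are illustrative names.

```python
# (name, weight shape) for every layer in inference(); each layer also has one bias per output unit
LAYERS = [
    ('conv1', (11, 11, 3, 64)),
    ('conv2', (5, 5, 64, 192)),
    ('conv3', (3, 3, 192, 384)),
    ('conv4', (3, 3, 384, 256)),
    ('conv5', (3, 3, 256, 256)),
    ('fc1', (9216, 4096)),   # 6 * 6 * 256 inputs at 224x224
    ('fc2', (4096, 4096)),
    ('fc3', (4096, 5)),      # assumed N_CLASS = 5 (flower_photos)
]


def count_params(shape):
    weights = 1
    for d in shape:
        weights *= d
    return weights + shape[-1]  # weights + biases


counts = {name: count_params(shape) for name, shape in LAYERS}
total = sum(counts.values())
fc_share = (counts['fc1'] + counts['fc2'] + counts['fc3']) / total
for name, n in counts.items():
    print('%-6s %10d' % (name, n))
print('total  %10d  (FC layers: %.1f%%)' % (total, 100 * fc_share))
```

Under these assumptions, more than 95% of the parameters sit in the three fully connected layers, which are the layers whose weights receive the L2 terms.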

AlexNet_train.py: the training script

It adds the L2 regularization terms collected by the network into the training loss.
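With plain gradient descent, adding `scale * sum(W**2) / 2` to the loss adds `scale * W` to each weight's gradient. Every step therefore also shrinks the regularized weights slightly, an effect usually called weight decay.

The pure-Python sketch below shows one such update. The defaults mirror the script's learning rate `1e-3` and regularization scale `0.0001`. The helper `sgd_step_with_l2` and the weights and gradients are made up for illustration.

```python
def sgd_step_with_l2(weights, grads, learning_rate=1e-3, scale=1e-4):
    """One gradient-descent update on loss + scale * sum(w^2) / 2.
    The derivative of scale * w^2 / 2 is scale * w, which is added to each gradient."""
    return [w - learning_rate * (g + scale * w) for w, g in zip(weights, grads)]


w = [1.0, -2.0]
g = [0.0, 0.0]  # with a zero data gradient, only the L2 term acts
for _ in range(3):
    w = sgd_step_with_l2(w, g)
print(w)  # each step multiplies every weight by (1 - learning_rate * scale)
```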

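Data is fetched in two ways: training and validation each draw random batches of images, while testing loads the whole test set in one pass.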
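The two access patterns behave differently:
- **Training and validation batches:** each item picks a random label, then a random index wrapped with `%` into that label's list. Images can repeat, and classes are drawn uniformly rather than in proportion to their size.
- **Test pass:** every test file is visited exactly once.

The stdlib sketch below uses a toy dictionary shaped like the output of `creat_image_lists`. The file names are made up, and `sample_batch`, `all_test_items` and `one_hot` are hypothetical helpers.

```python
import random


def one_hot(index, n_class):
    label = [0.0] * n_class
    label[index] = 1.0
    return label


def sample_batch(image_lists, batch, category, rng):
    """Random label, then a random index wrapped into that label's list."""
    label_names = list(image_lists.keys())
    items = []
    for _ in range(batch):
        label_index = rng.randrange(len(label_names))
        file_list = image_lists[label_names[label_index]][category]
        name = file_list[rng.randrange(65536) % len(file_list)]
        items.append((name, one_hot(label_index, len(label_names))))
    return items


def all_test_items(image_lists):
    """Every 'testing' file exactly once, in label order."""
    label_names = list(image_lists.keys())
    return [(name, one_hot(i, len(label_names)))
            for i, label in enumerate(label_names)
            for name in image_lists[label]['testing']]


toy = {
    'daisy': {'training': ['d1.jpg', 'd2.jpg'], 'testing': ['d9.jpg']},
    'roses': {'training': ['r1.jpg'], 'testing': ['r8.jpg', 'r9.jpg']},
}
print(sample_batch(toy, 4, 'training', random.Random(0)))
print(all_test_items(toy))
```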

```python
import tensorflow as tf

import AlexNet as Net
import image_info as img_io


'''Training parameters'''

INPUT_DATA = './flower_photos'  # data folder
VALIDATION_PERCENTAGE = 10      # percentage of data used for validation
TEST_PERCENTAGE = 10            # percentage of data used for testing
BATCH_SIZE = 128                # batch size
INPUT_SIZE = [224, 224]         # standard input size
MAX_STEP = 5000                 # maximum number of training iterations


def loss(logits, labels):
    """
    Add the mean cross-entropy to the 'losses' collection, which already holds
    the L2 terms of the fully connected weights, and return the sum of all terms.
    Args:
      logits: Logits from inference().
      labels: One-hot labels, 2-D tensor of shape [batch_size, n_class].
    Returns:
      Loss tensor of type float.
    """
    cross_entropy_mean = tf.reduce_mean(
        tf.nn.sparse_softmax_cross_entropy_with_logits(
            labels=tf.argmax(labels, 1), logits=logits), name='cross_entropy')
    tf.add_to_collection('losses', cross_entropy_mean)
    return tf.add_n(tf.get_collection('losses'), name='total_loss')


if __name__ == '__main__':

    image_dict = img_io.creat_image_lists(VALIDATION_PERCENTAGE, TEST_PERCENTAGE, INPUT_DATA)
    n_class = len(image_dict.keys())

    # The batch dimension is None so the same graph accepts training batches and the full test set
    image_holder = tf.placeholder(tf.float32, [None, INPUT_SIZE[0], INPUT_SIZE[1], 3])
    label_holder = tf.placeholder(tf.float32, [None, n_class])

    # Choose the regularizer and run the forward pass
    regularizer = tf.contrib.layers.l2_regularizer(0.0001)
    logits, _ = Net.inference(image_holder, regularizer, n_class)

    # Compute the loss and backpropagate
    total_loss = loss(logits, label_holder)
    train_step = tf.train.GradientDescentOptimizer(1e-3).minimize(total_loss)

    # Accuracy. inference() is called with its default train=True, so dropout is
    # also active when this op is evaluated on validation and test data
    with tf.name_scope('evaluation'):
        correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(label_holder, 1))
        evaluation_step = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    with tf.Session() as sess:

        # Run the initializer only after all variables have been created
        tf.global_variables_initializer().run()

        for step in range(MAX_STEP):
            print(step)
            # Training
            image_batch, label_batch = img_io.get_random_cached_inputs(
                sess, n_class, image_dict, BATCH_SIZE, 'training', INPUT_DATA, INPUT_SIZE)
            sess.run(train_step, feed_dict={
                image_holder: image_batch, label_holder: label_batch})

            # Validation
            if (step % 100 == 0) or (step + 1 == MAX_STEP):
                image_batch_val, label_batch_val = img_io.get_random_cached_inputs(
                    sess, n_class, image_dict, BATCH_SIZE, 'validation', INPUT_DATA, INPUT_SIZE)
                validation_accuracy = sess.run(evaluation_step, feed_dict={
                    image_holder: image_batch_val, label_holder: label_batch_val})
                print('Step %d: Validation accuracy on random sampled %d examples = %.1f%%' %
                      (step, BATCH_SIZE, validation_accuracy * 100))

        # Testing
        image_batch_test, label_batch_test = img_io.get_test_images(
            sess, image_dict, n_class, INPUT_SIZE, INPUT_DATA)
        test_accuracy = sess.run(evaluation_step, feed_dict={
            image_holder: image_batch_test, label_holder: label_batch_test})
        print('Final test accuracy = %.1f%%' % (test_accuracy * 100))
```
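`evaluation_step` compares the arg-max of the logits with the arg-max of the one-hot labels and averages the matches. The pure-Python equivalent below makes it easy to check what the printed percentage means. The numbers are made up, and `argmax` and `accuracy` are illustrative helpers, not part of the scripts.

```python
def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(logits, one_hot_labels):
    """Fraction of rows whose highest logit sits at the position of the 1 in the label."""
    correct = [argmax(z) == argmax(y) for z, y in zip(logits, one_hot_labels)]
    return sum(correct) / len(correct)


logits = [[0.1, 2.0, -1.0], [1.5, 0.2, 0.3], [0.0, 0.1, 0.9], [3.0, 1.0, 0.0]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [1, 0, 0]]
print('accuracy = %.1f%%' % (accuracy(logits, labels) * 100))  # accuracy = 75.0%
```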

『TensorFlow』Building a Gundam by Hand_Unit-01_Adding the Training Module and Integrating into a Usable Classification Network


Original post: http://www.cnblogs.com/hellcat/p/6919861.html