
1. Probabilistic clustering model

2. Gaussian distribution

A 1-D Gaussian is fully specified by its mean $\mu$ and variance $\sigma^2$.

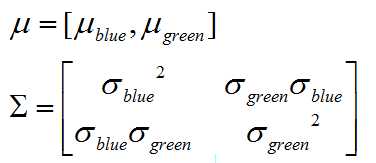
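For reference, the 1-D Gaussian density is $\mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$. Below is a minimal Python sketch of this formula that uses only the standard library. The name `gaussian_pdf` is chosen here for illustration.

```python
import math


def gaussian_pdf(x: float, mu: float, var: float) -> float:
    """Density of the 1-D Gaussian N(x | mu, var), where var = sigma^2 > 0."""
    if var <= 0:
        raise ValueError("variance must be positive")
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


# The density peaks at the mean; for mu = 0, var = 1 the peak is 1/sqrt(2*pi).
print(round(gaussian_pdf(0.0, 0.0, 1.0), 4))  # 0.3989
```

Two numbers are enough to pin down the whole curve: $\mu$ shifts it and $\sigma^2$ widens or narrows it.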

A 2-D Gaussian is fully specified by its mean vector $\mu$ and covariance matrix $\Sigma$.
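In $d$ dimensions the density is $\mathcal{N}(x \mid \mu, \Sigma) = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left(-\tfrac{1}{2}(x-\mu)^T \Sigma^{-1} (x-\mu)\right)$. For $d = 2$ this becomes simple enough to write without a linear-algebra library. The sketch below assumes tuples for vectors and a symmetric 2x2 covariance. The name `gaussian_pdf_2d` is hypothetical.

```python
import math


def gaussian_pdf_2d(x, mu, cov):
    """Density of a 2-D Gaussian N(x | mu, cov).

    x, mu: (x1, x2) tuples; cov: ((a, b), (b, d)), symmetric positive definite.
    """
    (a, b), (c, d) = cov
    det = a * d - b * c
    # For a symmetric 2x2 matrix, a > 0 and det > 0 together mean positive definite.
    if a <= 0 or det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    # Inverse of a 2x2 matrix: (1/det) * [[d, -b], [-c, a]]
    inv = ((d / det, -b / det), (-c / det, a / det))
    dx = (x[0] - mu[0], x[1] - mu[1])
    # Quadratic form (x - mu)^T cov^{-1} (x - mu)
    q = (dx[0] * (inv[0][0] * dx[0] + inv[0][1] * dx[1])
         + dx[1] * (inv[1][0] * dx[0] + inv[1][1] * dx[1]))
    # (2*pi)^(d/2) = 2*pi when d = 2
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(det))


# Correlated example: off-diagonal 0.8 tilts the elliptical contours.
print(gaussian_pdf_2d((0.5, 0.5), (0.0, 0.0), ((1.0, 0.8), (0.8, 1.0))))
```

When $\Sigma$ is diagonal, the density factorizes into a product of two 1-D Gaussians. The off-diagonal entries are what let a cluster be tilted rather than axis-aligned.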

Thus a mixture of Gaussians is defined by the parameters

$\{\pi_k, \mu_k, \Sigma_k\}$, for $k = 1, \dots, K$.
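With these parameters the mixture density is $p(x) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k)$, where $\pi_k \ge 0$ and $\sum_k \pi_k = 1$. Below is a 1-D sketch in which each variance $\sigma_k^2$ stands in for $\Sigma_k$. The helper `mixture_pdf` and the example parameters are hypothetical.

```python
import math


def gaussian_pdf(x, mu, var):
    # 1-D Gaussian density N(x | mu, var)
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def mixture_pdf(x, weights, means, variances):
    """p(x) = sum_k pi_k * N(x | mu_k, sigma_k^2) for a 1-D Gaussian mixture."""
    if abs(sum(weights) - 1.0) > 1e-9 or min(weights) < 0:
        raise ValueError("mixture weights must be non-negative and sum to 1")
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))


# Hypothetical two-cluster model: 30% of the mass near -2, 70% near 3.
weights, means, variances = [0.3, 0.7], [-2.0, 3.0], [1.0, 0.5]
print(mixture_pdf(0.0, weights, means, variances))
```

Because the weights sum to 1 and each component integrates to 1, the mixture is itself a valid density.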

3. EM (Expectation Maximization)

What if we knew the cluster parameters $\{\pi_k, \mu_k, \Sigma_k\}$?

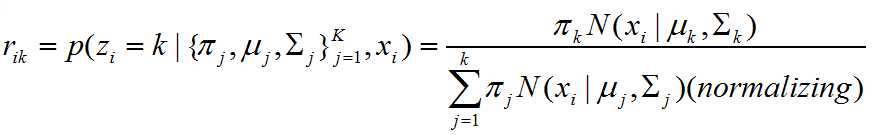

Compute the responsibilities:

$r_{ik}$ is the responsibility that cluster $k$ takes for observation $i$.

$p$ is the probability that observation $i$ is assigned to cluster $k$, given the model parameters and the observed value $x_i$.

$\pi_k$ is the initial (prior) probability that an observation comes from cluster $k$.

$\mathcal{N}$ is the Gaussian density.

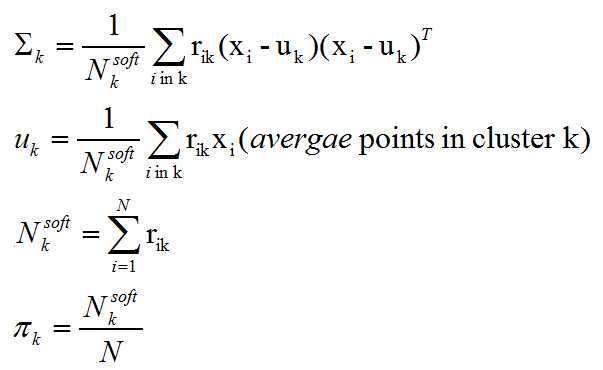
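Written out, the responsibility is Bayes' rule applied to the cluster assignment: $r_{ik} = \frac{\pi_k \, \mathcal{N}(x_i \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(x_i \mid \mu_j, \Sigma_j)}$. The following is a minimal 1-D sketch of this E-step. It uses variances in place of $\Sigma_k$, and `e_step` is a hypothetical helper name.

```python
import math


def gaussian_pdf(x, mu, var):
    # 1-D Gaussian density N(x | mu, var)
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def e_step(xs, weights, means, variances):
    """Return r[i][k]: the responsibility cluster k takes for observation xs[i]."""
    resp = []
    for x in xs:
        # Unnormalized scores pi_k * N(x | mu_k, sigma_k^2)
        scores = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
        total = sum(scores)  # the denominator: sum over all clusters j
        resp.append([s / total for s in scores])
    return resp


xs = [-2.1, 0.4, 2.9]
r = e_step(xs, [0.5, 0.5], [-2.0, 3.0], [1.0, 1.0])
for x, row in zip(xs, r):
    print(x, [round(v, 3) for v in row])
```

Each row sums to 1. A point between the two clusters receives a split, soft assignment instead of a hard label. For observations far from every cluster the densities can underflow to 0, so practical implementations usually compute this step in log space.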

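What if we knew the cluster soft assignments $r_{ik}$? Then we could estimate the cluster parameters by maximum likelihood, weighting each observation by its responsibility.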
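Given soft assignments, the parameter updates are weighted maximum-likelihood estimates. Let $N_k^{soft} = \sum_{i=1}^{N} r_{ik}$. Then $\hat{\pi}_k = N_k^{soft}/N$, $\hat{\mu}_k = \frac{1}{N_k^{soft}} \sum_i r_{ik} x_i$, and $\hat{\Sigma}_k = \frac{1}{N_k^{soft}} \sum_i r_{ik} (x_i - \hat{\mu}_k)(x_i - \hat{\mu}_k)^T$. Below is a 1-D sketch in which the variance replaces $\hat{\Sigma}_k$. The name `m_step` is hypothetical.

```python
def m_step(xs, resp):
    """Weighted maximum-likelihood estimates for a 1-D Gaussian mixture.

    resp[i][k] is the soft assignment of observation i to cluster k.
    Returns (weights, means, variances).
    """
    n, num_clusters = len(xs), len(resp[0])
    weights, means, variances = [], [], []
    for k in range(num_clusters):
        # N_k^soft: effective number of observations in cluster k
        n_k = sum(resp[i][k] for i in range(n))
        if n_k == 0:
            raise ValueError(f"cluster {k} has no responsibility mass")
        mu = sum(resp[i][k] * xs[i] for i in range(n)) / n_k
        var = sum(resp[i][k] * (xs[i] - mu) ** 2 for i in range(n)) / n_k
        weights.append(n_k / n)
        means.append(mu)
        variances.append(var)
    return weights, means, variances


# Hard 0/1 assignments as a special case of soft ones
xs = [1.0, 2.0, 3.0, 10.0, 12.0]
resp = [[1.0, 0.0]] * 3 + [[0.0, 1.0]] * 2
print(m_step(xs, resp))
```

With hard 0/1 assignments these updates reduce to each cluster's share of the points, its sample mean, and its divide-by-$N_k$ variance. Soft assignments simply let every point contribute fractionally to every cluster.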

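The iterative algorithm proceeds as follows:

1. Initialize the parameters $\{\pi_k, \mu_k, \Sigma_k\}$.

2. Estimate the cluster responsibilities given the current parameter estimates (E-step).

3. Maximize the likelihood given the soft assignments, i.e. re-estimate the parameters (M-step).

Repeat steps 2 and 3 until convergence.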
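Putting the three steps together gives a complete, if minimal, EM loop for a 1-D mixture. Several choices here are assumptions made for illustration: the initialization (random observations as means, the overall variance for every cluster, uniform weights), the stopping tolerance `tol`, and the synthetic data. The loop also records the log-likelihood $\sum_i \log \sum_k \pi_k \, \mathcal{N}(x_i \mid \mu_k, \sigma_k^2)$ after each iteration.

```python
import math
import random


def gaussian_pdf(x, mu, var):
    # 1-D Gaussian density N(x | mu, var)
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(xs, weights, means, variances):
    # sum_i log( sum_k pi_k * N(x_i | mu_k, sigma_k^2) )
    return sum(
        math.log(sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)))
        for x in xs
    )


def em_1d(xs, k, n_iter=500, tol=1e-8, seed=0):
    """EM for a 1-D Gaussian mixture; returns parameters and log-likelihood history."""
    rng = random.Random(seed)
    n = len(xs)
    # Step 1, initialize: k random observations as means, the overall
    # variance for every cluster, and uniform weights.
    means = rng.sample(xs, k)
    mean_all = sum(xs) / n
    variances = [sum((x - mean_all) ** 2 for x in xs) / n] * k
    weights = [1.0 / k] * k
    history = [log_likelihood(xs, weights, means, variances)]
    for _ in range(n_iter):
        # Step 2, E-step: responsibilities r_ik under the current parameters.
        resp = []
        for x in xs:
            scores = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(scores)
            resp.append([s / total for s in scores])
        # Step 3, M-step: weighted maximum-likelihood estimates.
        weights, means, variances = [], [], []
        for j in range(k):
            n_k = sum(r[j] for r in resp)
            mu = sum(r[j] * x for r, x in zip(resp, xs)) / n_k
            var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, xs)) / n_k
            weights.append(n_k / n)
            means.append(mu)
            variances.append(var)
        history.append(log_likelihood(xs, weights, means, variances))
        # Stop once an iteration no longer improves the log-likelihood noticeably.
        if history[-1] - history[-2] < tol:
            break
    return weights, means, variances, history


# Synthetic data (an assumption for illustration): two clusters around -3 and 4.
data_rng = random.Random(1)
xs = [data_rng.gauss(-3.0, 1.0) for _ in range(200)] + [data_rng.gauss(4.0, 1.0) for _ in range(200)]
weights, means, variances, history = em_1d(xs, k=2)
print([round(m, 2) for m in sorted(means)], len(history) - 1, "iterations")
```

The recorded log-likelihood should never decrease from one iteration to the next. Checking this is a cheap way to catch bugs in an EM implementation.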

Notes:

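EM is a coordinate-ascent algorithm: the E-step and M-step alternately maximize a single objective, so the likelihood never decreases from one iteration to the next.

EM converges to a local mode of the likelihood, not necessarily the global maximum.

There are many ways to initialize the EM algorithm, and the choice matters for both the convergence rate and the quality of the local mode found.

Prevent overfitting: if a cluster collapses onto a single observation, its variance goes to 0 and the likelihood goes to infinity. A simple fix is to add a small amount to the diagonal of each covariance estimate.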
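The notes on initialization, local modes, and overfitting can be combined in one sketch. It runs EM from several random initializations and keeps the run with the highest log-likelihood, since any single run only reaches a local mode. This random-restart strategy is one possible approach, not the only one. The sketch also adds a small constant `eps` to every variance estimate, which is the 1-D analogue of adding a small amount to the covariance diagonal. Both `n_restarts` and `eps` are assumed values. The E-step uses log-sum-exp, a standard numerical safeguard that goes beyond the notes above. Because of `eps`, the M-step is no longer an exact maximization, so the loop simply stops when the log-likelihood stops improving.

```python
import math
import random


def log_gaussian(x, mu, var):
    # log N(x | mu, var), evaluated in log space so far-away points do not underflow
    return -0.5 * math.log(2.0 * math.pi * var) - (x - mu) ** 2 / (2.0 * var)


def logsumexp(vals):
    # Numerically stable log(sum(exp(v) for v in vals))
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))


def log_likelihood(xs, weights, means, variances):
    return sum(
        logsumexp([math.log(w) + log_gaussian(x, m, v) for w, m, v in zip(weights, means, variances)])
        for x in xs
    )


def em_1d(xs, k, seed, n_iter=200, tol=1e-8, eps=1e-3):
    """One EM run from a random start; eps is added to every variance estimate."""
    rng = random.Random(seed)
    n = len(xs)
    means = rng.sample(xs, k)
    mean_all = sum(xs) / n
    variances = [sum((x - mean_all) ** 2 for x in xs) / n + eps] * k
    weights = [1.0 / k] * k
    prev_ll = -math.inf
    for _ in range(n_iter):
        # E-step in log space: r_ik = exp(log(pi_k N_ik) - logsumexp_j log(pi_j N_ij))
        resp, ll = [], 0.0
        for x in xs:
            logs = [math.log(w) + log_gaussian(x, m, v) for w, m, v in zip(weights, means, variances)]
            norm = logsumexp(logs)
            ll += norm
            resp.append([math.exp(lv - norm) for lv in logs])
        if ll - prev_ll < tol:
            break
        prev_ll = ll
        # M-step; adding eps keeps every variance >= eps, so no cluster collapses onto one point
        weights, means, variances = [], [], []
        for j in range(k):
            n_k = sum(r[j] for r in resp)
            mu = sum(r[j] * x for r, x in zip(resp, xs)) / n_k
            var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, xs)) / n_k
            weights.append(n_k / n)
            means.append(mu)
            variances.append(var + eps)
    return weights, means, variances, log_likelihood(xs, weights, means, variances)


def em_with_restarts(xs, k, n_restarts=5, eps=1e-3):
    """Run EM from several seeds and keep the run with the highest log-likelihood."""
    runs = [em_1d(xs, k, seed, eps=eps) for seed in range(n_restarts)]
    return max(runs, key=lambda run: run[3])


# Synthetic data (an assumption for illustration)
data_rng = random.Random(42)
xs = [data_rng.gauss(-3.0, 1.0) for _ in range(150)] + [data_rng.gauss(4.0, 1.0) for _ in range(150)]
weights, means, variances, ll = em_with_restarts(xs, k=2)
print([round(m, 2) for m in sorted(means)], round(ll, 2))
```

More restarts raise the chance of finding a good local mode at a proportional cost in run time. A larger `eps` guards more strongly against collapse but biases every variance upward.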

Machine learning notes (Washington University) - Clustering Specialization - week four


Original post: http://www.cnblogs.com/climberclimb/p/6931296.html