Introduction to DPDK: Architecture and Principles | DPDK概论:体系结构与实现原理

Linux network stack performance has become increasingly relevant over the past few years. This is perfectly understandable: the amount of data that can be transferred over a network and the corresponding workload has been growing not by the day, but by the hour.

这几年以来,Linux网络栈的性能变得越来越重要。这很好理解,因为可以通过网络来传输的数据量和对应的工作负载随着时间的推移而大幅度地增长。

Not even the widespread use of 10 GE network cards has resolved this issue; this is because a lot of bottlenecks that prevent packets from being quickly processed are found in the Linux kernel itself.

即便广泛使用10GbE网卡也解决不了这一性能问题(然并卵),因为在Linux内核中,存在着许多阻止数据包被快速地处理的瓶颈。

There have been many attempts to circumvent these bottlenecks with techniques called kernel bypasses (a short description can be found here). They let you process packets without involving the Linux network stack, so that an application running in user space communicates directly with the networking device. We’d like to discuss one of these solutions, the Intel DPDK (Data Plane Development Kit), in today’s article.

尝试绕过这些瓶颈的技术有很多,统称为kernel bypass(简短的描述戳这里)。kernel bypass技术让编程人员处理数据包,而不卷入Linux网络栈,在用户空间中运行的应用程序能够直接与网络设备打交道。在本文中,我们将讨论众多的kernel bypass解决方案中的一种,那就是Intel的DPDK(数据平面开发套件)。

A lot of posts have already been published about the DPDK and in a variety of languages. Although many of these are fairly informative, they don’t answer the most important questions: How does the DPDK process packets and what route does the packet take from the network device to the user?

来自多国语言的与有关DPDK的文章很多。虽然信息量已经很丰富了,但是并没有回答两个重要的问题。问题一:DPDK是如何处理数据包的?问题二:从网络设备到用户程序,数据包使用的路由是什么?

Finding the answers to these questions was not easy; since we couldn’t find everything we needed in the official documentation, we had to look through a myriad of additional materials and thoroughly review their sources. But first things first: before talking about the DPDK and the issues it can help resolve, we should review how packets are processed in Linux.

找到上面的两个问题的答案不是一件容易的事情。由于我们无法在官方文档中找到我们所需要的所有东西,我们不得不查阅大量的额外资料,并彻底审查资料来源。但是最为首要的是:在谈论DPDK可以帮助我们解决问题之前,我们应该审阅一下数据包在Linux中是如何被处理的。

Processing Packets in Linux: Main Stages

Linux中的数据包处理的主要几个阶段

When a network card first receives a packet, it sends it to a receive queue, or RX. From there, it gets copied to the main memory via the DMA (Direct Memory Access) mechanism.

当网卡接收数据包之后,首先将其发送到接收队列(RX)。在那里,数据包被复制到内存中,通过直接内存访问(DMA)机制。

Afterwards, the system needs to be notified of the new packet and pass the data onto a specially allocated buffer (Linux allocates these buffers for every packet). To do this, Linux uses an interrupt mechanism: an interrupt is generated several times when a new packet enters the system. The packet then needs to be transferred to the user space.

接下来,系统需要被通知到,有新的数据包来了,然后系统将数据传递到一个专门分配的缓冲区中去(Linux为每一个数据包都分配这样的特别缓冲区)。为了做到这一点,Linux使用了中断机制:当一个新的数据包进入系统时,中断多次生成。然后,该数据包需要被转移到用户空间中去。

One bottleneck is already apparent: as more packets have to be processed, more resources are consumed, which negatively affects the overall system performance.

在这里,存在着一个很明显的瓶颈:伴随着更多的数据包需要被处理,更多的资源将被消耗掉,这无疑对整个系统的性能将产生负面的影响。

As we’ve already said, these packets are saved to specially allocated buffers - more specifically, the sk_buff struct. This struct is allocated for each packet and becomes free when a packet enters the user space. This operation consumes a lot of bus cycles (i.e. cycles that transfer data from the CPU to the main memory).

我们在前面已经说过,这些数据包被保存在专门分配的缓冲区中-更具体地说就是sk_buff结构体。系统给每一个数据包都分配一个这样的结构体,一但数据包到达用户空间,该结构体就被系统给释放掉。这种操作消耗大量的总线周期(bus cycle即是把数据从CPU挪到内存的周期)。

There is another problem with the sk_buff struct: the Linux network stack was originally designed to be compatible with as many protocols as possible. As such, metadata for all of these protocols is included in the sk_buff struct, but that’s simply not necessary for processing specific packets. Because of this overly complicated struct, processing is slower than it could be.

与sk_buff struct密切相关的另一个问题是:设计Linux网络协议栈的初衷是尽可能地兼容更多的协议。因此,所有协议的元数据都包含在sk_buff struct中,但是,处理特定的数据包的时候这些(与特定数据包无关的协议元数据)根本不需要。因而处理速度就肯定比较慢,由于这个结构体过于复杂。

Another factor that negatively affects performance is context switching. When an application in the user space needs to send or receive a packet, it executes a system call. The context is switched to kernel mode and then back to user mode. This consumes a significant amount of system resources.

对性能产生负面影响的另外一个因素就是上下文切换。当用户空间的应用程序需要发送或接收一个数据包的时候,执行一个系统调用。这个上下文切换就是从用户态切换到内核态,(系统调用在内核的活干完后)再返回到用户态。这无疑消耗了大量的系统资源。

To solve some of these problems, all Linux kernels since version 2.6 have included NAPI (New API), which combines interrupts with polling. Let’s take a quick look at how this works.

为了解决上面提及的所有问题,Linux内核从2.6版本开始包括了NAPI,将请求和中断予以合并。我们解析来快速地看一看这是如何工作的。

The network card first works in interrupt mode, but as soon as a packet enters the network interface, it registers itself in a poll queue and disables the interrupt. The system periodically checks the queue for new devices and gathers packets for further processing. As soon as the packets are processed, the card will be deleted from the queue and interrupts are again enabled.

首先网卡是在中断模式下工作。但是,一旦有数据包进入网络接口,网卡就会去轮询队列中注册,并将中断禁用掉。系统周期性地检查新设备队列,收集数据包以便做进一步地处理。一旦数据包被系统处理了,系统就将对应的网卡从轮询队列中删除掉,并再次启用该网卡的中断(即将网卡恢复到中断模式下去工作)。
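
The interrupt-then-poll cycle described above can be sketched as a small simulation. This is only a toy model under stated assumptions: the class names `Nic` and `NapiScheduler` and the `budget` parameter are hypothetical, and nothing here is kernel code.

```python
from collections import deque


class Nic:
    """Toy network card: an RX queue plus an interrupt-enable flag."""

    def __init__(self, name):
        self.name = name
        self.rx_queue = deque()
        self.irq_enabled = True


class NapiScheduler:
    """Hypothetical model of the poll queue described in the text."""

    def __init__(self, budget=4):
        self.poll_list = deque()  # cards waiting to be polled
        self.budget = budget      # max packets handled per poll (assumed)
        self.delivered = []

    def packet_arrives(self, nic, packet):
        nic.rx_queue.append(packet)
        if nic.irq_enabled:
            # First packet: the "interrupt" fires, the card registers
            # itself in the poll queue and further interrupts are disabled.
            nic.irq_enabled = False
            self.poll_list.append(nic)

    def poll_once(self):
        """Poll one registered card and return how many packets it yielded."""
        if not self.poll_list:
            return 0
        nic = self.poll_list.popleft()
        handled = 0
        while nic.rx_queue and handled < self.budget:
            self.delivered.append(nic.rx_queue.popleft())
            handled += 1
        if nic.rx_queue:
            # Still has work: stay in the poll queue.
            self.poll_list.append(nic)
        else:
            # Drained: leave the poll queue and re-enable interrupts.
            nic.irq_enabled = True
        return handled


nic = Nic("eth0")
napi = NapiScheduler(budget=4)
for i in range(6):
    napi.packet_arrives(nic, f"pkt{i}")
first = napi.poll_once()
second = napi.poll_once()
print(first, second, nic.irq_enabled)
```

Six packets arrive but only the first one triggers an interrupt; the remaining five are picked up by polling, which is the saving NAPI is after.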

This has been just a cursory description of how packets are processed. A more detailed look at this process can be found in an article series from Private Internet Access. However, even a quick glance is enough to see the problems slowing down packet processing. In the next section, we’ll describe how these problems are solved using DPDK.

这只是对数据包如何被处理的粗略的描述。有关数据包处理过程的详细描述请参见Private Internet Access的系列文章。然而,就是这么一个快速一瞥也足以让我们看到数据包处理被减缓的问题。在下一节中,我们将描述使用DPDK后,这些问题是如何被解决掉的。

DPDK: How It Works | DPDK 是如何工作的

General Features | 一般特性

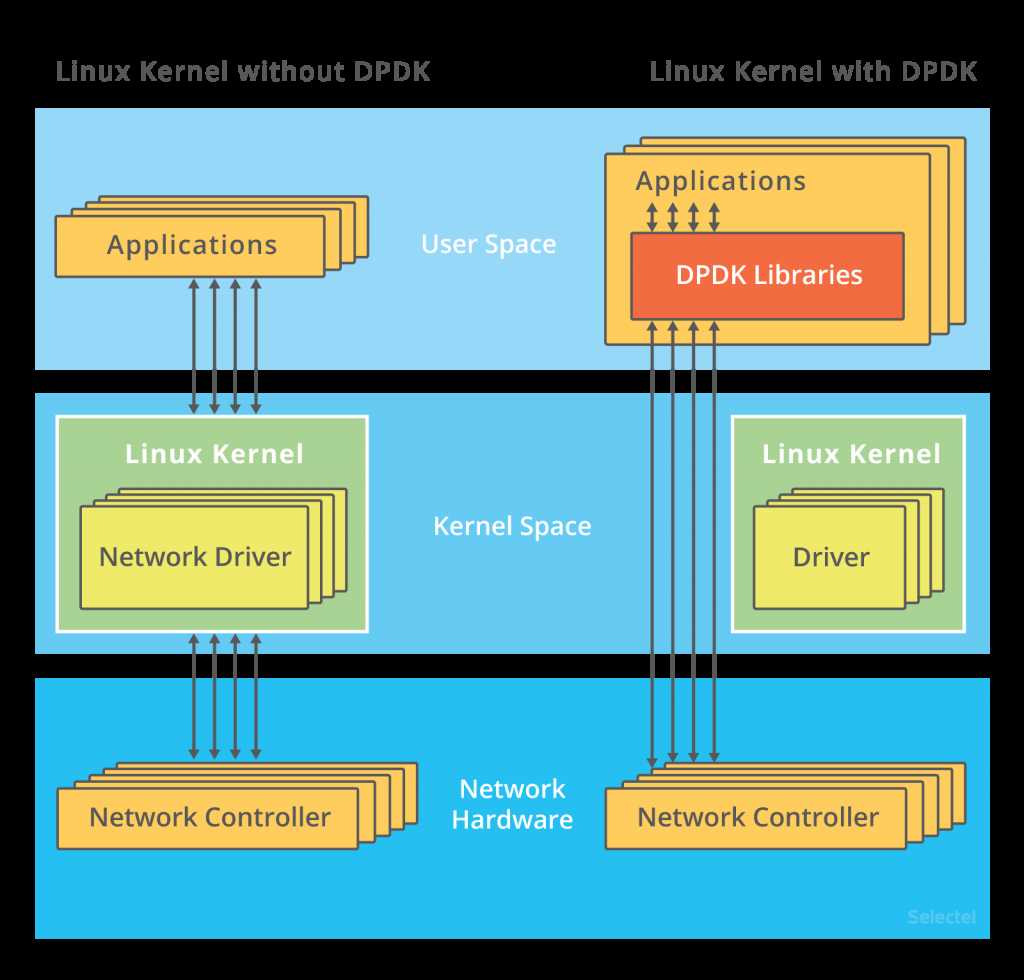

Let’s look at the following illustration: 让我们来看看下面的插图

On the left you see the traditional way packets are processed, and on the right - with DPDK. As we can see, the kernel in the second example doesn’t step in at all: interactions with the network card are performed via special drivers and libraries.

如图所示,在左边的是传统的数据包处理方式,在右边的则是使用了DPDK之后的数据包处理方式。正如我们看到的一样,右边的例子中,内核根本不需要介入,与网卡的交互是通过特殊的驱动和库函数来进行的。

If you’ve already read about DPDK or have ever used it, then you know that the ports receiving incoming traffic on network cards need to be unbound from Linux (the kernel driver). This is done using the dpdk_nic_bind (or dpdk-devbind) command, or ./dpdk_nic_bind.py in earlier versions.

如果你已经读过DPDK或者已经使用过DPDK,那么你肯定知道网卡接收数据传入的网口需要从Linux内核驱动上去除绑定(松棒)。用dpdk_nic_bind(或dpdk-devbind)命令就可以完成松绑,早期的版本中使用dpdk_nic_bind.py。

How are ports then managed by DPDK? Every driver in Linux has bind and unbind files. That includes network card drivers:

    ls /sys/bus/pci/drivers/ixgbe
    bind  module  new_id  remove_id  uevent  unbind

To unbind a device from a driver, the device’s PCI address (its bus ID, for example 0000:01:00.0) needs to be written to the unbind file. Similarly, to bind a device to another driver, the same address needs to be written to that driver’s bind file. More detailed information about this can be found here.
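
As a rough sketch of that mechanism, the helper below writes a device identifier to the old driver's unbind file and then to the new driver's bind file. The function name `rebind`, the `sysfs_root` parameter, and the address 0000:01:00.0 are assumptions made for illustration; the demo runs against a temporary directory standing in for /sys, so it touches no real devices. On a real system this needs root privileges and usually a few extra steps, which is what the DPDK bind script takes care of.

```python
import os
import tempfile


def rebind(pci_addr, old_driver, new_driver, sysfs_root="/sys"):
    """Detach a device from old_driver and attach it to new_driver.

    Hypothetical helper: it only performs the two writes described in
    the text and does no error handling.
    """
    drivers = os.path.join(sysfs_root, "bus", "pci", "drivers")
    with open(os.path.join(drivers, old_driver, "unbind"), "w") as f:
        f.write(pci_addr)
    with open(os.path.join(drivers, new_driver, "bind"), "w") as f:
        f.write(pci_addr)


# Demo against a fake sysfs tree so that nothing real is modified.
with tempfile.TemporaryDirectory() as root:
    for drv in ("ixgbe", "vfio-pci"):
        os.makedirs(os.path.join(root, "bus", "pci", "drivers", drv))
    rebind("0000:01:00.0", "ixgbe", "vfio-pci", sysfs_root=root)
    bind_path = os.path.join(root, "bus", "pci", "drivers", "vfio-pci", "bind")
    with open(bind_path) as f:
        written = f.read()
print(written)
```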

The DPDK installation instructions tell us that our ports need to be managed by the vfio_pci, igb_uio, or uio_pci_generic driver. (We won’t be getting into details here, but we suggest interested readers look at the following articles on kernel.org: 1 and 2.)

These drivers make it possible to interact with devices in the user space. Of course they include a kernel module, but that’s just to initialize devices and assign the PCI interface.

All further communication between the application and network card is organized by the DPDK poll mode driver (PMD). DPDK has poll mode drivers for all supported network cards and virtual devices.

The DPDK also requires that hugepages be configured. They are needed for allocating large chunks of memory and writing data to them. We can say that hugepages do the same job in DPDK that DMA does in traditional packet processing.

We’ll discuss all of its nuances in more detail, but for now, let’s go over the main stages of packet processing with the DPDK:

Let’s take a closer look at the DPDK’s internal structure.

EAL: Environment Abstraction | 环境抽象层

The EAL, or Environment Abstraction Layer, is the main concept behind the DPDK.

The EAL is a set of programming tools that let the DPDK work in a specific hardware environment and under a specific operating system. In the official DPDK repository, libraries and drivers that are part of the EAL are saved in the rte_eal directory.

Drivers and libraries for Linux and the BSD system are saved in this directory. It also contains a set of header files for various processor architectures: ARM, x86, TILE64, and PPC64.

We access software in the EAL when we compile the DPDK from the source code:

make config T=x86_64-native-linuxapp-gcc

One can guess that this command will compile DPDK for Linux in an x86_64 architecture.

The EAL is what binds the DPDK to applications. All of the applications that use the DPDK (see here for examples) must include the EAL’s header files.

The most commonly used of these include:

More details on this structure and EAL functions can be found in the official documentation.

Managing Queues: rte_ring | 队列管理

As we’ve already said, packets received by the network card are sent to a ring buffer, which acts as a receiving queue. Packets received in the DPDK are also sent to a queue, implemented with the rte_ring library. The library’s description below comes from the developer’s guide and comments in the source code.

The rte_ring was developed from the FreeBSD ring buffer. If you look at the source code, you’ll see the following comment: Derived from FreeBSD’s bufring.c.

The queue is a lockless ring buffer built on the FIFO (First In, First Out) principle. The ring buffer is a table of pointers for objects that can be saved to the memory. Pointers can be divided into four categories: prod_tail, prod_head, cons_tail, cons_head.

Prod is short for producer, and cons for consumer. The producer is the process that writes data to the buffer at a given time, and the consumer is the process that removes data from the buffer.

The tail is where writing takes place on the ring buffer. The place the buffer is read from at a given time is called the head.

The idea behind the process for adding and removing elements from the queue is as follows: when a new object is added to the queue, the ring->prod_tail indicator should end up pointing to the location where ring->prod_head previously pointed to.
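
To make the pointer movement concrete, here is a minimal single-producer, single-consumer sketch. It assumes one possible ordering (advance the head to reserve a slot, write the object, then let the tail catch up to the head) and uses plain Python integers. The class `Ring` is a hypothetical illustration, not the rte_ring implementation, which relies on atomic operations to stay lockless across cores.

```python
class Ring:
    """Fixed-size FIFO of object references with four indices."""

    def __init__(self, size):
        if size < 2 or size & (size - 1):
            raise ValueError("size must be a power of two")
        self.slots = [None] * size
        self.size = size
        self.mask = size - 1  # turns a running index into a slot number
        self.prod_head = self.prod_tail = 0
        self.cons_head = self.cons_tail = 0

    def enqueue(self, obj):
        old_head = self.prod_head
        if old_head - self.cons_tail >= self.size:
            return False                       # ring is full
        self.prod_head = old_head + 1          # 1. reserve a slot
        self.slots[old_head & self.mask] = obj  # 2. store the pointer
        self.prod_tail = self.prod_head        # 3. tail catches up with head
        return True

    def dequeue(self):
        old_head = self.cons_head
        if self.prod_tail - old_head == 0:
            return None                        # ring is empty
        self.cons_head = old_head + 1          # 1. claim a slot
        obj = self.slots[old_head & self.mask]  # 2. read the pointer
        self.cons_tail = self.cons_head        # 3. tail catches up with head
        return obj


ring = Ring(4)
results = [ring.enqueue(x) for x in "abcde"]  # the fifth enqueue must fail
first = ring.dequeue()
refill = ring.enqueue("e")                    # reuses the slot freed by "a"
drained = [ring.dequeue() for _ in range(4)]
print(results, first, refill, drained)
```

Because the table has a fixed, power-of-two size, an index is mapped to a slot with a single bit mask, and a wrapped-around write simply reuses the slot freed by an earlier read.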

This is just a brief description; a more detailed account of how the ring buffer works can be found in the developer’s manual on the DPDK site.

This approach has a number of advantages. Firstly, data is written to the buffer extremely quickly. Secondly, when adding or removing a large number of objects from the queue, cache misses occur much less frequently since pointers are saved in a table.

The drawback to DPDK’s ring buffer is its fixed size, which cannot be increased on the fly. Additionally, much more memory is spent working with the ring structure than in a linked queue since the buffer always uses the maximum number of pointers.

Memory Management: rte_mempool | 内存管理

We mentioned above that DPDK requires hugepages. The installation instructions recommend creating 2MB hugepages.
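
As a quick piece of arithmetic, the number of 2 MB pages to reserve for a given memory budget is a ceiling division. The helper name `hugepages_needed` and the example budgets are assumptions chosen only for illustration.

```python
HUGEPAGE_2MB = 2 * 1024 * 1024  # page size in bytes


def hugepages_needed(bytes_needed, page_size=HUGEPAGE_2MB):
    """Round a memory budget up to a whole number of hugepages."""
    return -(-bytes_needed // page_size)  # ceiling division


one_gib = hugepages_needed(1024 ** 3)             # exactly 512 pages
three_mib = hugepages_needed(3 * 1024 * 1024)     # rounds up to 2 pages
print(one_gib, three_mib)
# On Linux the resulting count is what one would typically write to
# /proc/sys/vm/nr_hugepages (requires root).
```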

These pages are combined in segments, which are then divided into zones. Objects that are created by applications or other libraries, like queues and packet buffers, are placed in these zones.

These objects include memory pools, which are created by the rte_mempool library. These are fixed size object pools that use rte_ring for storing free objects and can be identified by a unique name.

Memory alignment techniques can be implemented to improve performance.

Even though access to free objects is designed on a lockless ring buffer, consumption of system resources may still be very high. As multiple cores have access to the ring, a compare-and-set (CAS) operation usually has to be performed each time it is accessed.

To prevent bottlenecking, every core is given an additional local cache in the memory pool. Using the locking mechanism, cores can fully access the free object cache. When the cache is full or entirely empty, the memory pool exchanges data with the ring buffer. This gives the core access to frequently used objects.
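
The effect of the per-core cache can be shown with a small model that counts how often the shared ring is touched. Everything here is a simplified assumption: `MemPool`, `cache_size`, and the refill and flush policy are hypothetical, and a `deque` stands in for the ring.

```python
from collections import deque


class MemPool:
    """Fixed-size object pool: a shared free ring plus one cache per core."""

    def __init__(self, n_objects, cache_size, n_cores):
        self.ring = deque(range(n_objects))  # shared store of free objects
        self.cache_size = cache_size
        self.caches = [[] for _ in range(n_cores)]
        self.ring_accesses = 0               # how often the ring was touched

    def get(self, core):
        cache = self.caches[core]
        if not cache:
            # Cache is empty: refill it from the ring in one bulk operation.
            self.ring_accesses += 1
            for _ in range(min(self.cache_size, len(self.ring))):
                cache.append(self.ring.popleft())
            if not cache:
                return None                  # pool exhausted
        return cache.pop()

    def put(self, core, obj):
        cache = self.caches[core]
        if len(cache) >= self.cache_size:
            # Cache is full: flush it back to the ring in one bulk operation.
            self.ring_accesses += 1
            self.ring.extend(cache)
            cache.clear()
        cache.append(obj)


pool = MemPool(n_objects=16, cache_size=4, n_cores=2)
objs = [pool.get(0) for _ in range(8)]
for obj in objs:
    pool.put(0, obj)
print(pool.ring_accesses, len(pool.ring), len(pool.caches[0]))
```

In this run, sixteen get and put calls touch the shared ring only three times; the rest are served from the core's own cache, which is where the reduction in contended operations comes from.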

Buffer Management: rte_mbuf | 缓冲区管理

In the Linux network stack, all network packets are represented by the sk_buff data structure. In DPDK, this is done using the rte_mbuf struct, which is described in the rte_mbuf.h header file.

The buffer management approach in DPDK is reminiscent of the approach used in FreeBSD: instead of one big sk_buff struct, there are many smaller rte_mbuf buffers. The buffers are created before the DPDK application is launched and are saved in memory pools (memory is allocated by rte_mempool).

In addition to its own packet data, each buffer contains metadata (message type, length, data segment starting address). The buffer also contains a pointer to the next buffer. This is needed when handling packets with large amounts of data. In cases like these, packets can be combined (as is done in FreeBSD; more detailed information about this can be found here).
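
The chaining idea can be sketched with a small linked structure. The `Mbuf` class below and its helper functions are hypothetical and carry only the payload and the next pointer; the real rte_mbuf struct holds considerably more metadata.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Mbuf:
    """Toy packet buffer: one data segment plus a link to the next one."""
    data: bytes
    next: Optional["Mbuf"] = None


def chain(payload, seg_size):
    """Split a large payload across linked buffers of at most seg_size bytes."""
    head = tail = None
    for off in range(0, len(payload), seg_size):
        seg = Mbuf(payload[off:off + seg_size])
        if head is None:
            head = seg
        else:
            tail.next = seg
        tail = seg
    return head


def segments(m):
    """Walk the chain from the first buffer to the last."""
    while m is not None:
        yield m
        m = m.next


payload = bytes(range(10)) * 500  # 5000 bytes, larger than one segment
pkt = chain(payload, seg_size=2048)
seg_lens = [len(s.data) for s in segments(pkt)]
total_len = sum(seg_lens)
rebuilt = b"".join(s.data for s in segments(pkt))
print(seg_lens, total_len)
```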

Other Libraries: General Overview | 其他库概览

In previous sections, we talked about the most basic DPDK libraries. There are a great many other libraries, but one article isn’t enough to describe them all. Thus, we’ll limit ourselves to just a brief overview.

With the LPM library, DPDK runs the Longest Prefix Match (LPM) algorithm, which can be used to forward packets based on their IPv4 address. The primary function of this library is to add and delete IP addresses as well as to search for new addresses using the LPM algorithm.
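
For readers unfamiliar with longest prefix match, a naive version of the add, delete, and lookup operations looks like this. It is a sketch built on Python's standard `ipaddress` module with a hypothetical `LpmTable` class; the DPDK library uses a different, table-based layout tuned for lookup speed.

```python
import ipaddress


class LpmTable:
    """Naive IPv4 longest-prefix-match table keyed by prefix length."""

    def __init__(self):
        self.by_depth = {}  # prefix length -> {network as int: next hop}

    def add(self, cidr, next_hop):
        net = ipaddress.ip_network(cidr)
        routes = self.by_depth.setdefault(net.prefixlen, {})
        routes[int(net.network_address)] = next_hop

    def delete(self, cidr):
        net = ipaddress.ip_network(cidr)
        self.by_depth.get(net.prefixlen, {}).pop(int(net.network_address), None)

    def lookup(self, addr):
        ip = int(ipaddress.IPv4Address(addr))
        # Try the longest prefixes first so the most specific route wins.
        for depth in sorted(self.by_depth, reverse=True):
            mask = (0xFFFFFFFF << (32 - depth)) & 0xFFFFFFFF
            hop = self.by_depth[depth].get(ip & mask)
            if hop is not None:
                return hop
        return None


table = LpmTable()
table.add("0.0.0.0/0", 0)    # default route
table.add("10.0.0.0/8", 1)
table.add("10.1.0.0/16", 2)
specific = table.lookup("10.1.2.3")    # matches the /16
broader = table.lookup("10.2.3.4")     # falls back to the /8
default = table.lookup("192.168.1.1")  # only the default route matches
table.delete("10.1.0.0/16")
after_delete = table.lookup("10.1.2.3")
print(specific, broader, default, after_delete)
```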

A similar function can be performed for IPv6 addresses using the LPM6 library.

Other libraries offer similar functionality based on hash functions. With rte_hash, you can search through a large record set using a unique key. This library can be used for classifying and distributing packets, for example.

The rte_timer library lets you execute functions asynchronously. The timer can run once or periodically.

Conclusion | 总结

In this article we went over the internal structure and principles of DPDK. This is far from comprehensive, though; the subject is too complex and extensive to fit in one article. So sit tight: we will continue this topic in a future article, where we’ll discuss the practical aspects of using DPDK.

We’d be happy to answer your questions in the comments below. And if you’ve had any experience using DPDK, we’d love to hear your thoughts and impressions.

For anyone interested in learning more, please visit the following links:

Andrej Yemelianov 24 November 2016 Tags: DPDK, linux, network, network stacks, packet processing

参考资料

1. https://blog.selectel.com/introduction-dpdk-architecture-principles/

2. http://it-events.com/system/attachments/files/000/001/102/original/LinuxPiter-DPDK-2015.pdf

原文地址:http://www.cnblogs.com/idorax/p/6931037.html