
Filebeat is used for log collection. It feels similar to Flume, but it is written in Go and performs relatively well.

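In version 2.4, Logstash was deployed on each client machine to collect and match logs and ship them to Kafka; a second Logstash then pulled the logs off the message queue and stored them in Elasticsearch.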

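In version 5.5, Filebeat collects the logs instead, which reduces the performance impact on the client machines. Filebeat ships the logs to port 5044 on Logstash; Logstash receives them and forwards them to ES.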

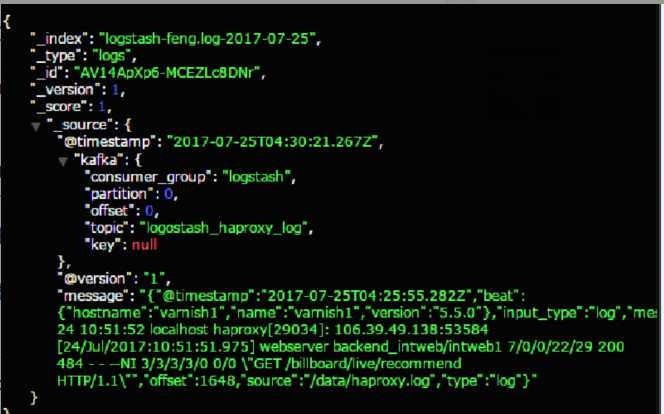

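I also experimented with filebeat ---- kafka ---- logstash ---- es, but in that setup the Logstash `message` field carries Filebeat's own metadata, which makes grok matching somewhat difficult.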
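A likely reason for the grok difficulty: when Filebeat writes to Kafka it serializes each event as a JSON document, so a consumer that does not decode that JSON sees the whole envelope instead of the raw log line. The following is a minimal Python sketch of the unwrapping step, assuming the Filebeat 5.x event layout; the sample values and the helper name `unwrap_filebeat_event` are invented for illustration and are not from the original setup. In Logstash, the corresponding step is usually to set the `json` codec on the kafka input.

```python
import json

# Illustrative sample only: roughly the shape of an event that Filebeat 5.x
# writes to Kafka. All field values here are made up for the example.
sample_kafka_value = json.dumps({
    "@timestamp": "2017-07-26T08:00:00.000Z",
    "beat": {"hostname": "varnish1", "name": "varnish1", "version": "5.5.0"},
    "input_type": "log",
    "message": "raw haproxy log line goes here",
    "offset": 1024,
    "source": "/data/haproxy.log",
    "type": "haproxy_access",
})


def unwrap_filebeat_event(kafka_value):
    """Return (original log line, document type) from a Filebeat JSON event."""
    event = json.loads(kafka_value)
    # The original log line lives in "message"; everything else is metadata.
    return event["message"], event.get("type")


line, doc_type = unwrap_filebeat_event(sample_kafka_value)
print(doc_type, "->", line)
```

Once the envelope is decoded, grok only has to match the haproxy line itself, the same as in the direct filebeat ---- logstash path.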

The image below shows the log format.

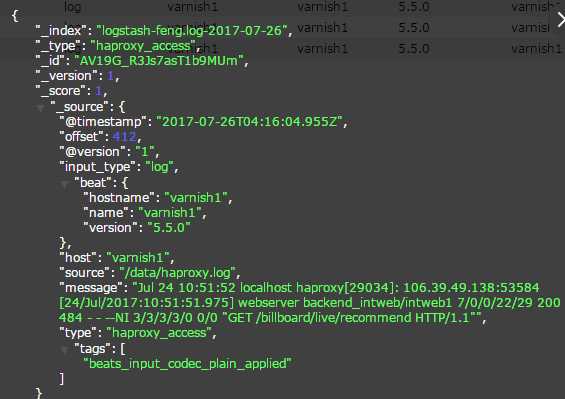

The following are the logs collected with filebeat ---- logstash ---- es.

The volume of logs being analyzed is currently small, so for now I am using the filebeat ---- logstash ---- es architecture.

192.168.20.119 client

192.168.99.13 logstash

192.168.99.6 es

Filebeat configuration file on 192.168.20.119 (192.168.99.13 is the Logstash server)

    filebeat.prospectors:
    - input_type: log
      paths:
        - /data/*.log
      document_type: haproxy_access
    filebeat:
      spool_size: 1024
      idle_timeout: "5s"
      registry_file: /var/lib/filebeat/registry
    output.logstash:
      hosts: ["192.168.99.13:5044"]
    logging:
      files:
        path: /var/log/mybeat
        name: mybeat
        rotateeverybytes: 10485760

Start Filebeat

[root@varnish1 filebeat-5.5.0-linux-x86_64]# nohup ./filebeat -e -c filebeat.yml &

Logstash configuration file on 192.168.99.13

[root@logstashserver etc]# vim /data/logstash/etc/logstash.conf

    input {
      beats {
        host => "0.0.0.0"
        port => 5044
      }
    }
    filter {
      if [type] == "haproxy_access" {
        grok {
          match => ["message", "%{HAPROXYHTTP}"]
        }
        grok {
          match => ["message", "%{HAPROXYDATE:accept_date}"]
        }
        date {
          match => ["accept_date", "dd/MMM/yyyy:HH:mm:ss.SSS"]
        }
        if [host] == "varnish1" {
          mutate {
            add_field => { "SrvName" => "varnish2" }
          }
        }
        geoip {
          source => "client_ip"
        }
      }
    }
    output {
      elasticsearch {
        hosts => ["es1:9200","es2:9200","es3:9200"]
        manage_template => true
        index => "logstash-feng.log-%{+YYYY-MM-dd}"
      }
    }
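To make the `date` filter and the daily index name in this configuration more concrete, here is a rough Python sketch of the same two steps: parsing a haproxy `accept_date` with the equivalent of the Joda pattern `dd/MMM/yyyy:HH:mm:ss.SSS`, then deriving the index name. The sample timestamp and the function names are hypothetical. The sketch also ignores time zones, whereas Logstash stores `@timestamp` in UTC and builds the index name from that, so the date can differ around midnight.

```python
from datetime import datetime

# Python strptime equivalent of the Joda pattern "dd/MMM/yyyy:HH:mm:ss.SSS".
# %b expects English month abbreviations (the default C/English locale).
HAPROXY_ACCEPT_DATE_FORMAT = "%d/%b/%Y:%H:%M:%S.%f"


def parse_accept_date(accept_date):
    """Parse a haproxy accept_date string into a naive datetime."""
    return datetime.strptime(accept_date, HAPROXY_ACCEPT_DATE_FORMAT)


def index_name(ts):
    """Build the daily index name used in the elasticsearch output."""
    return "logstash-feng.log-" + ts.strftime("%Y-%m-%d")


# Hypothetical sample value, not taken from the original logs.
ts = parse_accept_date("26/Jul/2017:10:15:30.123")
print(ts.isoformat())
print(index_name(ts))
```

If the parsed date did not match the pattern, Logstash would tag the event with a date parse failure and keep the ingest time as `@timestamp`; the sketch would raise `ValueError` instead.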

[root@varnish1 filebeat-5.5.0-linux-x86_64]# /data/logstash/bin/logstash -f /data/logstash/etc/logstash.conf

Elasticsearch configuration file

    [root@es1 config]# grep -v "#" elasticsearch.yml
    cluster.name: senyint_elasticsearch
    node.name: es1
    path.data: /data/elasticsearch/data
    path.logs: /data/elasticsearch/logs
    bootstrap.memory_lock: true
    network.host: 192.168.99.8
    http.port: 9200
    discovery.zen.ping.unicast.hosts: ["es1", "es2", "es3"]
    discovery.zen.minimum_master_nodes: 2
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    xpack.security.enabled: false
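The value `discovery.zen.minimum_master_nodes: 2` matches the usual quorum rule for zen discovery, (master-eligible nodes / 2) + 1 with integer division. A small sketch of that arithmetic, assuming all three nodes (es1, es2, es3) are master-eligible, which the configuration does not state explicitly:

```python
def minimum_master_nodes(master_eligible_nodes):
    """Quorum size that avoids split brain: floor(N / 2) + 1."""
    return master_eligible_nodes // 2 + 1


# Assumption: es1, es2 and es3 are all master-eligible.
print(minimum_master_nodes(3))
```

With three master-eligible nodes, the cluster can lose one node and still elect a master, while two isolated halves can never both reach quorum.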

Collecting haproxy logs with filebeat + logstash + elasticsearch


Original article: http://www.cnblogs.com/fengjian2016/p/7239910.html