Tags: line c++ prediction tor ack vma round clu end

http://www.cnblogs.com/YiXiaoZhou/p/6058890.html

Derivation and Implementation of the RNN Solution Process

RNN

LSTM

BPTT

matlab code

opencv code

BPTT stands for Back Propagation Through Time.

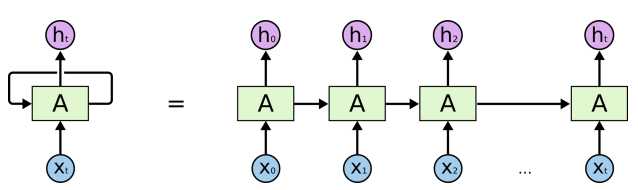

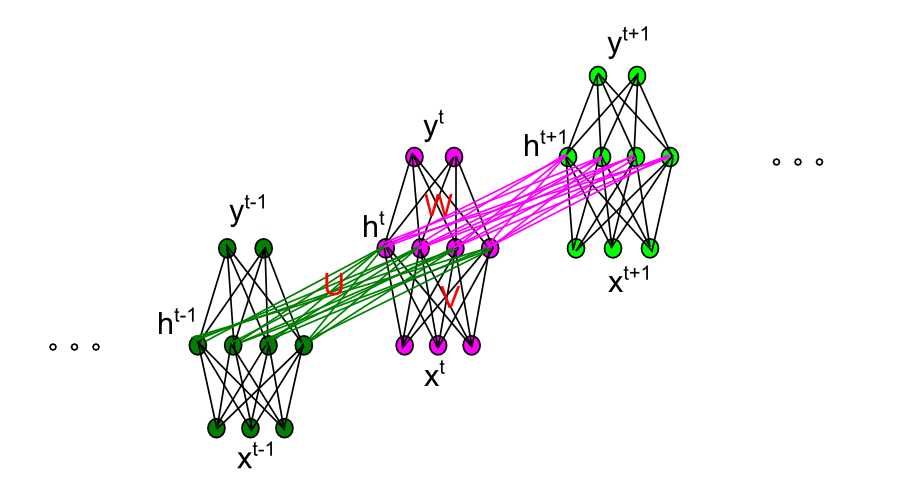

First, let's look at how to handle an RNN.

The RNN unrolled over time is shown in the figure.

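Let the input at time step $t$ be $x_t$, the output of the hidden nodes $h_t$, and the predicted value of the output layer $y_t$. Let the input-to-hidden weight matrix be $V$, the hidden self-recurrent weight matrix $U$, and the hidden-to-output weight matrix $W$, with corresponding bias vectors $b_h$ and $b_y$ (the symbol names follow the code in this article). A single node of the input layer is identified by $i$, as in $x_{t,i}$; similarly, a single node of the hidden layer and of the output layer is written $h_{t,j}$ and $y_{t,k}$. Here we take only a three-layer network as an example.

First, the forward computation, written with row vectors as in the code:

$$a^h_t = x_t V + h_{t-1} U + b_h, \qquad h_t = f(a^h_t)$$

$$a^y_t = h_t W + b_y, \qquad y_t = f(a^y_t)$$

where $a^h_t$ and $a^y_t$ denote the corresponding weighted sums before activation, and $f$ denotes the activation function.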
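The forward computation can be sketched in plain C++ as follows. This is a minimal sketch, not the author's implementation: it assumes a sigmoid activation and the row-vector convention of the code in this article ($h_t = f(x_t V + h_{t-1} U + b_h)$, $y_t = f(h_t W + b_y)$). The names `Rnn`, `addProduct`, and `forwardStep` are hypothetical and chosen only for illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // Mat[r][c]

// Logistic sigmoid, the activation assumed in this sketch.
double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Adds the row-vector/matrix product v * M to acc in place.
// M is expected to have v.size() rows and acc.size() columns.
void addProduct(const Vec& v, const Mat& M, Vec& acc) {
    for (std::size_t r = 0; r < v.size(); ++r)
        for (std::size_t c = 0; c < acc.size(); ++c)
            acc[c] += v[r] * M[r][c];
}

// Hypothetical container for the parameters named in the text.
struct Rnn {
    Mat V;   // input  -> hidden
    Mat U;   // hidden -> hidden
    Mat W;   // hidden -> output
    Vec bh;  // hidden bias
    Vec by;  // output bias
};

// One forward step: h holds h_{t-1} on entry and h_t on return.
// Returns the prediction y_t.
Vec forwardStep(const Rnn& net, const Vec& x, Vec& h) {
    Vec ah = net.bh;            // pre-activation sum starts from the bias
    addProduct(x, net.V, ah);   // + x_t V
    addProduct(h, net.U, ah);   // + h_{t-1} U
    for (double& a : ah) a = sigmoid(a);
    h = ah;                     // h_t = f(a^h_t)

    Vec ay = net.by;
    addProduct(h, net.W, ay);   // a^y_t = h_t W + b_y
    for (double& a : ay) a = sigmoid(a);
    return ay;                  // y_t = f(a^y_t)
}
```

Calling `forwardStep` once per time step, with `h` initialized to zeros before the first step, reproduces the unrolled network: the same `V`, `U`, and `W` are reused at every step.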

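Next, let's see how the error propagates.

Suppose the true output should be $d_t$ and the prediction is $y_t$; then the error can be defined as $E_t = \frac{1}{2}\lVert y_t - d_t \rVert^2$, where $t$ is the index of the training sample. The error of the whole network is $E = \sum_t E_t$.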
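As a small illustration of the total error, here is a sketch for the case of one scalar output per time step, which is the case both programs in this article use. It assumes the squared-error form $\frac{1}{2}(y_t - d_t)^2$ summed over time steps, as in the C++ program's `0.5*cvDotProduct(...)`; the function name `sequenceError` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Total error E = sum over t of 1/2 * (y_t - d_t)^2,
// where y holds the predictions and d the true outputs.
double sequenceError(const std::vector<double>& y,
                     const std::vector<double>& d) {
    double e = 0.0;
    for (std::size_t t = 0; t < y.size() && t < d.size(); ++t) {
        const double diff = y[t] - d[t];
        e += 0.5 * diff * diff;
    }
    return e;
}
```

For example, predictions `{0.5, 0.5}` against targets `{1, 0}` contribute `0.125` per step.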

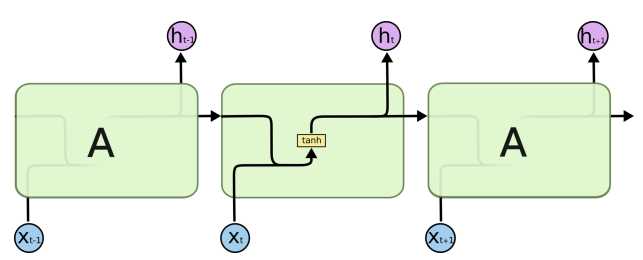

Let's zoom in on the RNN to look at the details.

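Let $\delta^y_{t,k} = \partial E / \partial a^y_{t,k}$ and $\delta^h_{t,j} = \partial E / \partial a^h_{t,j}$, where $a^h_t = x_t V + h_{t-1} U + b_h$ and $a^y_t = h_t W + b_y$ are the pre-activation sums of the hidden and output layers, $y_t$ is the prediction, and $d_t$ is the true output. Then

$$\delta^y_{t,k} = (y_{t,k} - d_{t,k})\, f'(a^y_{t,k})$$

$$\delta^h_{t,j} = \Big( \sum_k \delta^y_{t,k} W_{jk} + \sum_{j'} \delta^h_{t+1,j'} U_{jj'} \Big) f'(a^h_{t,j})$$

In matrix-vector form:

$$\delta^y_t = (y_t - d_t) \odot f'(a^y_t)$$

$$\delta^h_t = \big( \delta^y_t W^\top + \delta^h_{t+1} U^\top \big) \odot f'(a^h_t), \qquad \delta^h_{T+1} = 0$$

So the gradients are:

$$\frac{\partial E}{\partial W} = \sum_t h_t^\top \delta^y_t, \qquad \frac{\partial E}{\partial U} = \sum_t h_{t-1}^\top \delta^h_t, \qquad \frac{\partial E}{\partial V} = \sum_t x_t^\top \delta^h_t$$

$$\frac{\partial E}{\partial b_y} = \sum_t \delta^y_t, \qquad \frac{\partial E}{\partial b_h} = \sum_t \delta^h_t$$

where $\odot$ is the element-wise product symbol, that is, multiplication of corresponding elements.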
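One way to check a BPTT derivation is to compare the analytic gradients with finite differences. The sketch below does this for a deliberately tiny network: one input, one hidden node, one output, sigmoid activations, and no biases (as in the MATLAB code in this article). It is an illustration under those assumptions, not the author's code; `Params`, `Grads`, `forward`, `bptt`, and `numericGrad` are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Params { double v, u, w; };     // input->hidden, hidden->hidden, hidden->output
struct Grads  { double dv, du, dw; };  // dE/dv, dE/du, dE/dw

double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Forward pass over the whole sequence. Stores every h_t and y_t
// (they are needed again in the backward pass) and returns the total error E.
double forward(const Params& p, const std::vector<double>& x,
               const std::vector<double>& d,
               std::vector<double>& h, std::vector<double>& y) {
    const std::size_t T = x.size();
    h.assign(T, 0.0);
    y.assign(T, 0.0);
    double prevH = 0.0, E = 0.0;
    for (std::size_t t = 0; t < T; ++t) {
        h[t] = sigmoid(p.v * x[t] + p.u * prevH);
        y[t] = sigmoid(p.w * h[t]);
        E += 0.5 * (y[t] - d[t]) * (y[t] - d[t]);
        prevH = h[t];
    }
    return E;
}

// Backward pass through time, from the last step to the first.
Grads bptt(const Params& p, const std::vector<double>& x,
           const std::vector<double>& d) {
    std::vector<double> h, y;
    forward(p, x, d, h, y);
    Grads g{0.0, 0.0, 0.0};
    double nextDeltaH = 0.0;  // hidden error term of step t+1; zero past the end
    for (std::size_t t = x.size(); t-- > 0;) {
        // For a sigmoid, f'(a) = f(a) * (1 - f(a)), computed from the stored outputs.
        const double deltaY = (y[t] - d[t]) * y[t] * (1.0 - y[t]);
        const double deltaH = (deltaY * p.w + nextDeltaH * p.u) * h[t] * (1.0 - h[t]);
        g.dw += h[t] * deltaY;
        if (t > 0) g.du += h[t - 1] * deltaH;  // the initial hidden state is zero
        g.dv += x[t] * deltaH;
        nextDeltaH = deltaH;
    }
    return g;
}

// Central finite difference of E with respect to one parameter.
double numericGrad(Params p, double Params::*field,
                   const std::vector<double>& x, const std::vector<double>& d,
                   double eps = 1e-6) {
    std::vector<double> h, y;
    p.*field += eps;
    const double ePlus = forward(p, x, d, h, y);
    p.*field -= 2.0 * eps;
    const double eMinus = forward(p, x, d, h, y);
    return (ePlus - eMinus) / (2.0 * eps);
}
```

If the derivation is right, `bptt(p, x, d).du` and `numericGrad(p, &Params::u, x, d)` should agree to several decimal places for any small test sequence, and likewise for `v` and `w`.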

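Code implementation:

Note that computing the gradients uses quantities that were computed earlier. The quantities that must be stored are the output values of the hidden nodes and of the output nodes from the forward pass, and the hidden-layer error term $\delta^h_{t+1}$ accumulated from time step $t+1$ (`next_delta_h` in the code).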

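The article "人人都能用Python写出LSTM-RNN的代码![你的神经网络学习最佳起步]" (roughly, "Anyone can write LSTM-RNN code in Python! [The best first step for learning neural networks]") implements the basic RNN process in Python. The code simulates the successive carries that occur when adding two binary numbers, and it is easy to follow.

Here it is rewritten as MATLAB code: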

```matlab
function error = binaryRNN( )
% Train a simple RNN to add two binary numbers bit by bit, carries included.
    largestNumber = 256;
    T = 8;                                    % bits per number = time steps
    dic = dec2bin(0:largestNumber-1) - '0';   % row k+1: bits of k, most significant first
    eta = 0.1;                                % learning rate
    inputDim = 2;
    hiddenDim = 16;
    outputDim = 1;

    % Weights drawn uniformly from (-1, 1)
    W = rand(hiddenDim, outputDim)*2 - 1;     % hidden -> output
    U = rand(hiddenDim, hiddenDim)*2 - 1;     % hidden -> hidden
    V = rand(inputDim, hiddenDim)*2 - 1;      % input  -> hidden

    delta_W = zeros(hiddenDim, outputDim);
    delta_U = zeros(hiddenDim, hiddenDim);
    delta_V = zeros(inputDim, hiddenDim);
    error = 0;
    for p = 1:10000
        % Operands in 0..127, so the sum always fits in 8 bits.
        % (randi alone returns 1..128, which can index past the end of dic.)
        aInt = randi(largestNumber/2) - 1;
        bInt = randi(largestNumber/2) - 1;
        a = dic(aInt+1, :);
        b = dic(bInt+1, :);
        cInt = aInt + bInt;
        c = dic(cInt+1, :);
        y = zeros(1, T);

        preh = zeros(1, hiddenDim);
        hDic = zeros(T, hiddenDim);           % hidden outputs saved for BPTT

        % Forward pass: start at the least significant bit (column T)
        for t = T:-1:1
            x = [a(t), b(t)];
            h = sigmoid(x*V + preh*U);
            y(t) = sigmoid(h*W);
            hDic(t, :) = h;
            preh = h;
        end

        err = y - c;
        error = error + sum(err.^2)/2;        % E = 1/2 * ||y - c||^2
        next_delta_h = zeros(1, hiddenDim);

        % Backward pass: reverse time order, i.e. from column 1 to column T
        for t = 1:T
            delta_y = err(t).*sigmoidOutput2d(y(t));
            delta_h = (delta_y*W' + next_delta_h*U').*sigmoidOutput2d(hDic(t, :));

            delta_W = delta_W + hDic(t, :)'*delta_y;
            if t < T
                % hDic(t+1,:) is the hidden output of the previous time step
                delta_U = delta_U + hDic(t+1, :)'*delta_h;
            end
            delta_V = delta_V + [a(t), b(t)]'*delta_h;
            next_delta_h = delta_h;
        end

        % Gradient descent update
        W = W - eta*delta_W;
        U = U - eta*delta_U;
        V = V - eta*delta_V;

        delta_W = zeros(hiddenDim, outputDim);
        delta_U = zeros(hiddenDim, hiddenDim);
        delta_V = zeros(inputDim, hiddenDim);

        if mod(p, 1000) == 0
            fprintf('Samples:%d\n', p);
            fprintf('True:%d\n', cInt);
            fprintf('Predict:%d\n', bin2dec(char(round(y) + '0')));
            fprintf('Error:%f\n', sum(err.^2)/2);
        end
    end
end

function sx = sigmoid(x)
    sx = 1./(1 + exp(-x));
end

% Derivative of the sigmoid, expressed through the sigmoid's output
function dx = sigmoidOutput2d(output)
    dx = output.*(1 - output);
end
```

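To understand the RNN process more deeply, I implement my own RNN here with OpenCV and C++: a simple network with a single hidden layer. Its function is similar: it simulates the carry process when adding several decimal numbers.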

```cpp
#include "highgui.h"
#include "cv.h"
#include <iostream>
#include <iomanip>   // setiosflags
#include <cmath>
#include <cstdlib>
#include <ctime>     // time
using namespace std;

// Random integer in [0, x). Two rand() results are multiplied to reach beyond RAND_MAX;
// the 64-bit cast avoids signed overflow in the product.
#define random(x) ((int)(((long long)rand() * rand()) % (x)))

// Print a matrix in fixed-point notation
void Display(CvMat* mat)
{
    cout << setiosflags(ios::fixed);
    for (int i = 0; i < mat->rows; i++)
    {
        for (int j = 0; j < mat->cols; j++)
            cout << cvmGet(mat, i, j) << " ";
        cout << endl;
    }
}

float sigmoid(float x)
{
    return 1 / (1 + exp(-x));
}

// Element-wise sigmoid; returns a new matrix that the caller must release
CvMat* sigmoidM(CvMat* mat)
{
    CvMat* mat2 = cvCloneMat(mat);
    for (int i = 0; i < mat2->rows; i++)
    {
        for (int j = 0; j < mat2->cols; j++)
            cvmSet(mat2, i, j, sigmoid(cvmGet(mat, i, j)));
    }
    return mat2;
}

// Derivative of the sigmoid, given the sigmoid's output x
float diffSigmoid(float x)
{
    return x * (1 - x);
}

// Element-wise sigmoid derivative; returns a new matrix that the caller must release
CvMat* diffSigmoidM(CvMat* mat)
{
    CvMat* mat2 = cvCloneMat(mat);
    for (int i = 0; i < mat2->rows; i++)
    {
        for (int j = 0; j < mat2->cols; j++)
        {
            float t = cvmGet(mat, i, j);
            cvmSet(mat2, i, j, t * (1 - t));
        }
    }
    return mat2;
}

// Fill Sample (1 x inputdim) with random integers in [0, MAX) and return their sum
int sample(int inputdim, CvMat* Sample, int MAX)
{
    int sum = 0;
    for (int i = 0; i < inputdim; i++)
    {
        int t = random(MAX);
        cvmSet(Sample, 0, i, t);
        sum += cvmGet(Sample, 0, i);
    }
    return sum;
}

// Split each number in Sample into 8 decimal digits, least significant first.
// Row i of the result holds the digits of the i-th number.
CvMat* splitM(CvMat* Sample)
{
    CvMat* mat = cvCreateMat(Sample->cols, 8, CV_32F);
    cvSetZero(mat);
    for (int i = 0; i < mat->rows; i++)
    {
        int x = (int)cvmGet(Sample, 0, i);
        for (int j = 0; j < 8; ++j)
        {
            cvmSet(mat, i, j, x % 10);
            x = x / 10;
        }
    }
    return mat;
}

// Rebuild an integer from network outputs in (0,1): each output is scaled by 10 and rounded
int merge(CvMat* mat)
{
    double d = 0;
    for (int i = mat->cols; i > 0; i--)
    {
        d = 10 * d + round(10 * (cvmGet(mat, 0, i - 1)));
    }
    return int(d);
}

// Split y into 8 decimal digits, least significant first, each scaled to [0, 0.9]
CvMat* split(int y)
{
    CvMat* mat = cvCreateMat(1, 8, CV_32F);
    for (int i = 0; i < 8; i++)
    {
        cvmSet(mat, 0, i, (y % 10) / 10.0);
        y = y / 10;
    }
    return mat;
}

// rows x cols matrix with random entries in [a, b)
CvMat* randM(int rows, int cols, float a, float b)
{
    CvMat* mat = cvCreateMat(rows, cols, CV_32FC1);
    for (int i = 0; i < mat->rows; i++)
    {
        for (int j = 0; j < mat->cols; j++)
        {
            cvmSet(mat, i, j, random(1000) / 1000.0 * (b - a) + a);
        }
    }
    return mat;
}

int main()
{
    srand((unsigned)time(NULL));

    int inputdim = 2;
    int hiddendim = 16;
    int outputdim = 1;
    float eta = 0.1f;
    int MAX = 100000000;   // operands have at most 8 decimal digits

    CvMat* V = randM(inputdim, hiddendim, -1, 1);    // input  -> hidden
    CvMat* U = randM(hiddendim, hiddendim, -1, 1);   // hidden -> hidden
    CvMat* W = randM(hiddendim, outputdim, -1, 1);   // hidden -> output
    CvMat* bh = randM(1, hiddendim, -1, 1);          // hidden bias
    CvMat* by = randM(1, outputdim, -1, 1);          // output bias

    CvMat* Sample = cvCreateMat(1, inputdim, CV_32F);
    cvSetZero(Sample);
    CvMat* delta_V = cvCloneMat(V);
    CvMat* delta_U = cvCloneMat(U);
    CvMat* delta_W = cvCloneMat(W);
    CvMat* delta_by = cvCloneMat(by);
    CvMat* delta_bh = cvCloneMat(bh);

    for (int p = 0; p < 20000; p++)
    {
        int sum = sample(inputdim, Sample, MAX);
        CvMat* sampleM = splitM(Sample);   // inputdim x 8 input digits
        CvMat* d = split(sum);             // 1 x 8 target digits, scaled by 1/10

        CvMat* pre_h = cvCreateMat(1, hiddendim, CV_32F);
        cvSetZero(pre_h);
        CvMat* y = cvCreateMat(1, 8, CV_32F);
        cvSetZero(y);
        CvMat* h = cvCreateMat(8, hiddendim, CV_32F);   // hidden outputs saved for BPTT

        CvMat* temp1 = cvCreateMat(1, hiddendim, CV_32F);
        CvMat* temp2 = cvCreateMat(1, outputdim, CV_32F);
        CvMat xt;     // header for one column of sampleM; owns no data
        CvMat hrow;   // header for one row of h; owns no data

        // Forward pass, least significant digit first
        for (int t = 0; t < 8; t++)
        {
            cvGetCol(sampleM, &xt, t);
            cvGEMM(&xt, V, 1, bh, 1, temp1, CV_GEMM_A_T);   // temp1 = xt' * V + bh
            cvGEMM(pre_h, U, 1, temp1, 1, pre_h);            // pre_h = pre_h * U + temp1
            CvMat* activated = sigmoidM(pre_h);
            cvCopy(activated, pre_h);
            cvReleaseMat(&activated);

            cvGEMM(pre_h, W, 1, by, 1, temp2);               // temp2 = pre_h * W + by
            float yvalue = sigmoid(cvmGet(temp2, 0, 0));
            cvmSet(y, 0, t, yvalue);

            for (int j = 0; j < hiddendim; j++)
            {
                cvmSet(h, t, j, cvmGet(pre_h, 0, j));
            }
        }
        cvReleaseMat(&temp1);
        cvReleaseMat(&temp2);

        int oy = merge(y);
        CvMat* diff = cvCreateMat(1, 8, CV_32F);
        cvSub(y, d, diff);
        double error = 0.5 * cvDotProduct(diff, diff);
        cvReleaseMat(&diff);
        if ((p + 1) % 1000 == 0)
        {
            cout << "************************ Sample " << p + 1 << " ***********" << endl;
            cout << "True value: " << sum % MAX << endl;
            cout << "Predicted value: " << oy << endl;
            cout << "Error: " << error << endl;
        }

        cvSetZero(delta_V);
        cvSetZero(delta_U);
        cvSetZero(delta_W);
        cvSetZero(delta_bh);
        cvSetZero(delta_by);

        CvMat* delta_h = cvCreateMat(1, hiddendim, CV_32F);
        cvSetZero(delta_h);
        CvMat* delta_y = cvCreateMat(1, outputdim, CV_32F);
        cvSetZero(delta_y);
        CvMat* next_delta_h = cvCreateMat(1, hiddendim, CV_32F);
        cvSetZero(next_delta_h);

        // Backward pass through time; the loop must include t = 0
        for (int t = 7; t >= 0; t--)
        {
            cvmSet(delta_y, 0, 0, (cvmGet(y, 0, t) - cvmGet(d, 0, t)) * diffSigmoid(cvmGet(y, 0, t)));
            cvGEMM(delta_y, W, 1, delta_h, 0, delta_h, CV_GEMM_B_T);        // delta_h = delta_y * W'
            cvGEMM(next_delta_h, U, 1, delta_h, 1, delta_h, CV_GEMM_B_T);   // += next_delta_h * U'
            cvGetRow(h, &hrow, t);
            CvMat* dsig = diffSigmoidM(&hrow);
            cvMul(delta_h, dsig, delta_h);
            cvReleaseMat(&dsig);

            cvGEMM(&hrow, delta_y, 1, delta_W, 1, delta_W, CV_GEMM_A_T);    // += h_t' * delta_y
            if (t > 0)
            {
                cvGetRow(h, &hrow, t - 1);
                cvGEMM(&hrow, delta_h, 1, delta_U, 1, delta_U, CV_GEMM_A_T);   // += h_{t-1}' * delta_h
            }
            cvGetCol(sampleM, &xt, t);
            cvGEMM(&xt, delta_h, 1, delta_V, 1, delta_V);                   // += x_t' * delta_h
            cvAddWeighted(delta_by, 1, delta_y, 1, 0, delta_by);
            cvAddWeighted(delta_bh, 1, delta_h, 1, 0, delta_bh);

            cvCopy(delta_h, next_delta_h);
        }

        // Gradient descent update
        cvAddWeighted(W, 1, delta_W, -eta, 0, W);
        cvAddWeighted(V, 1, delta_V, -eta, 0, V);
        cvAddWeighted(U, 1, delta_U, -eta, 0, U);

        cvAddWeighted(by, 1, delta_by, -eta, 0, by);
        cvAddWeighted(bh, 1, delta_bh, -eta, 0, bh);

        cvReleaseMat(&sampleM);
        cvReleaseMat(&d);
        cvReleaseMat(&pre_h);
        cvReleaseMat(&y);
        cvReleaseMat(&h);
        cvReleaseMat(&delta_h);
        cvReleaseMat(&delta_y);
        cvReleaseMat(&next_delta_h);
    }
    cvReleaseMat(&U);
    cvReleaseMat(&V);
    cvReleaseMat(&W);
    cvReleaseMat(&by);
    cvReleaseMat(&bh);
    cvReleaseMat(&Sample);
    cvReleaseMat(&delta_V);
    cvReleaseMat(&delta_U);
    cvReleaseMat(&delta_W);
    cvReleaseMat(&delta_by);
    cvReleaseMat(&delta_bh);
    system("PAUSE");   // Windows only: keeps the console window open
    return 0;
}
```

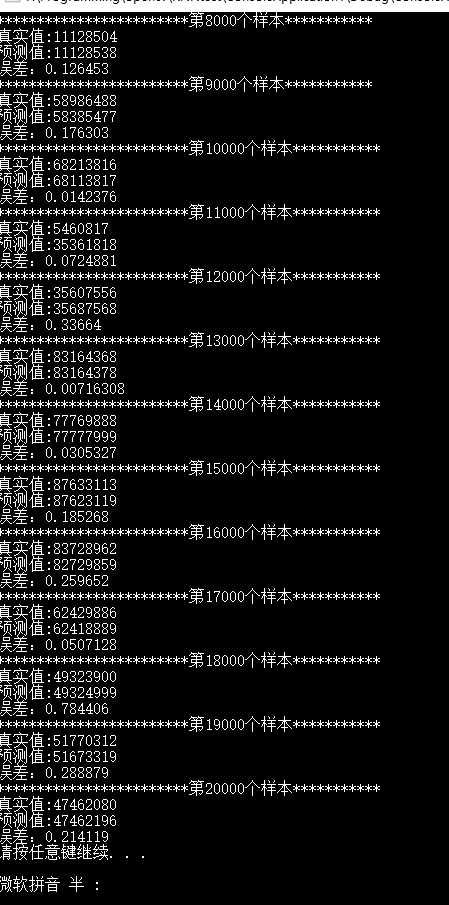

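These are the results of the code; the predictions do not match the true values exactly. The main reason is probably that the output layer produces a decimal in (0, 1), whereas we want an integer digit in [0, 9], and converting with round or a cast always introduces a sizable error. As a result, the predicted value and the true value can differ greatly. In that case, comparing the two numbers as a whole is not very meaningful; one should instead compare the digits position by position.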
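The per-digit comparison suggested above could be sketched as follows. This is only an illustration: it assumes the same decoding as `merge` in the program (scale the output by 10 and round), and it additionally clamps the result to 0..9, which the original program does not do. The names `decodeDigit` and `digitMismatches` are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Decode one network output in (0,1) to a decimal digit.
// Assumption of this sketch: values that round to 10 are clamped to 9.
int decodeDigit(double y) {
    long digit = std::lround(10.0 * y);
    if (digit < 0) digit = 0;
    if (digit > 9) digit = 9;
    return static_cast<int>(digit);
}

// Count the positions where the decoded digit differs from the true digit.
// Both vectors are ordered the same way (for example least significant first).
int digitMismatches(const std::vector<double>& y,
                    const std::vector<int>& trueDigits) {
    int mismatches = 0;
    for (std::size_t i = 0; i < y.size() && i < trueDigits.size(); ++i) {
        if (decodeDigit(y[i]) != trueDigits[i]) ++mismatches;
    }
    return mismatches;
}
```

A mismatch count of this kind stays small when only one or two digits are wrong, whereas the difference between the merged integers can be huge if a high-order digit is off by one.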

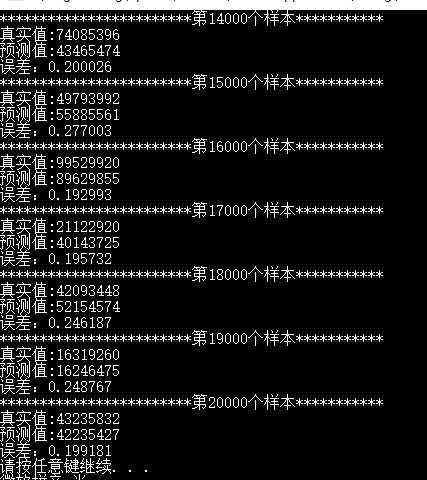

Next are the results with 3 inputs and 32 hidden nodes.

RNN derivation


Original article: http://www.cnblogs.com/xqnq2007/p/7306038.html
