标签:lease ref pom 知识 linu roo mis form mon

为实现全栈,从今天开始研究Hadoop,个人体会是成为某方面的专家需要从三个方面着手

好吧,我们从安装入手。

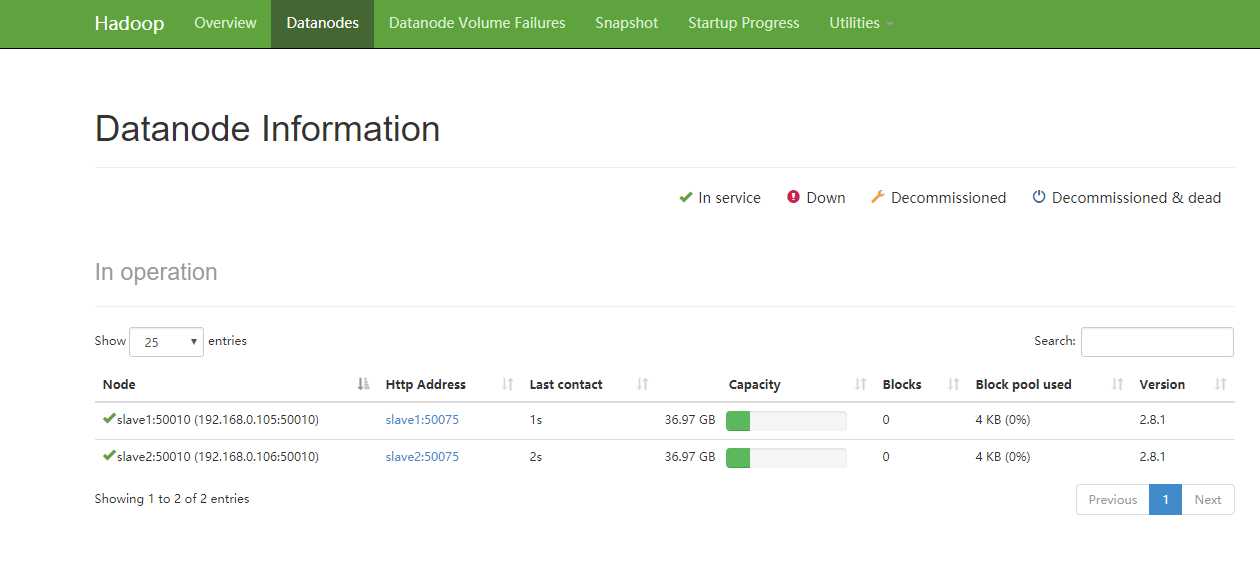

1.找三个CentOS的虚拟环境,我的是centos 7,大概的规划如下,一个master,两个slave

修改三台机器的/etc/hosts文件

192.168.0.104 master 192.168.0.105 slave1 192.168.0.106 slave2

2.配置ssh互信

在三台机器上输入下面的命令,生成ssh key以及authorized key,为了简单,我是在root用户下操作,大家可以在需要启动hadoop的用户下操作更规范一些

ssh-keygen -t rsa

cd .ssh

cp id_rsa.pub authorized_keys

然后将三台机器的authorized_keys合并成一个文件并且复制在三台机器上。比如我的authorized key

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCrtxZC5VB1tyjU4nGy4+Yd//LsT3Zs2gtNtpw6z4bv7VdL6BI0tzFLs8QIHS0Q82BmiXdBIG2fkLZUHZuaAJlkE+GCPHBmQSdlS+ZvUWKFr+vpbzF86RBGwJp1HHs7GtDFtirN3Z/Qh6pKgNLFuFCxIF/Ee4sL50RUAh6wFOY/TRU4XxQissNXd9rhVFrZnOkctfA3Wek4FgNRyT+xUezSW1Vl2GliGc0siI5RCQezDhKwZNHyzY4yyiifeQYL14S4D0RrlCvv+5PIUZUrKznKc1BMYIljxMVOrAs0DsvQ0fkna/Q/pA53cuPhkD4P8ehA/fJuMCTZ+1q/Z2o1WW4j root@master ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDBmuwzWdWI1oEwA8BC2RutAWWeCvFkkH7qYR4pWyMK8Ubkpc5HxB+mqCr24Bgug17bvFdrTdUyABY7GSJpGx3xBIcyh96bBgG9Thnc0k/XT6oO3cTai0jDr74CCTkkXymBwpVkAIlYY/MrdxQAym4gOMnU2celMMpkq7GhFJ7zOZqfI3cdQ6Q9x9LyNP6DcDFp7QQePcGylNpHeZITgABZzozWFyqg1nHi9qfGy3NtXM2lnGF+W+6JR/OtShTWeaxAOwQXt0rDEjHyUZ8JAv95J4sawGrwgWX89oWr4xorR8rMYl0FZz84OtvvNSFm5KR2NRxj8yPZZQKjaJ8nuDGN root@slave1 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDCrRbwk8Xc2EHLNRL25ve3IlLLkshByTXwwWslP61ASNeKhYk2HObGAjL09mOpOmzdbVXJJ6YLDWIKczLSnSt4o5W7bjWQpCh136O9vCupibxCr1q4uJa+qpW69mUhrvREa4hOLvRXCXmz16p0/dOtCnPudF8AgzhezrqI/4yQkLubGZamQauHB8LEd+1VMdjRHWx0j6mQHrcDnqlaIEq8XW4UM2TcmSS7Ztp6q0zzcC39dz/xopwq/WixwQi2z4Ywc++YufXHmyDp/gkqyXG1tHwH9TMQ/kkmD3piEcnrFKDlU8Kk/B1YCnNIKTG5BT9k1JI1qenJ8NxHJ06gtM3J root@slave2

3.安装JDK,我们用jdk-7u79-linux-x64.gz版本。

tar xzvf jdk-7u79-linux-x64.gz

修改.bashrc

JAVA_HOME=/hadoop/jdk1.7.0_79 CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar PATH=$JAVA_HOME/bin:$PATH export JAVA_HOME CLASSPATH PATH

4.安装hadoop并配置

在下面链接下载2.8.1版本,然后解压

http://hadoop.apache.org/releases.html

我把jdk和hadoop都放在/hadoop目录下,然后建立目录

mkdir tmp mkdir -p hdfs/data mkdir -p hdfs/name

然后修改核心的几个配置文件。/hadoop/hadoop-2.8.1/etc/hadoop

core-site.xml

[root@master hadoop]# cat core-site.xml <?xml version="1.0" encoding="UTF-8"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>fs.defaultFS</name> <value>hdfs://192.168.0.104:9000</value> </property> <property> <name>hadoop.tmp.dir</name> <value>file:/hadoop/tmp</value> </property> <property> <name>io.file.buffer.size</name> <value>131702</value> </property> </configuration>

hdfs-site.xml

[root@master hadoop]# cat hdfs-site.xml <?xml version="1.0" encoding="UTF-8"?> <?xml-stylesheet type="text/xsl" href="configuration.xsl"?> <!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. --> <!-- Put site-specific property overrides in this file. --> <configuration> <property> <name>dfs.namenode.name.dir</name> <value>file:/hadoop/hdfs/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/hadoop/hdfs/data</value> </property> <property> <name>dfs.replication</name> <value>2</value> </property> <property> <name>dfs.namenode.secondary.http-address</name> <value>192.168.0.104:9001</value> </property> <property> <name>dfs.webhdfs.enabled</name> <value>true</value> </property> </configuration>

mapred-site.xml

[root@master hadoop]# cat mapred-site.xml <configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> <property> <name>mapreduce.jobhistory.address</name> <value>192.168.0.104:10020</value> </property> <property> <name>mapreduce.jobhistory.webapp.address</name> <value>192.168.0.104:19888</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.nodemanager.auxservices.mapreduce.shuffle.class</name> <value>org.apache.hadoop.mapred.ShuffleHandler</value> </property> <property> <name>yarn.resourcemanager.address</name> <value>192.168.0.104:8032</value> </property> <property> <name>yarn.resourcemanager.scheduler.address</name> <value>192.168.0.104:8030</value> </property> <property> <name>yarn.resourcemanager.resource-tracker.address</name> <value>192.168.0.104:8031</value> </property> <property> <name>yarn.resourcemanager.admin.address</name> <value>192.168.0.104:8033</value> </property> <property> <name>yarn.resourcemanager.webapp.address</name> <value>192.168.0.104:8088</value> </property> <property> <name>yarn.nodemanager.resource.memory-mb</name> <value>768</value> </property> </configuration>

配置hadoop-env.sh、yarn-env.sh中JAVA_HOME

配置slave节点

[root@master hadoop]# cat slaves 192.168.0.105 192.168.0.106

将master节点的软件复制到slave上。

scp -r /hadoop 192.168.0.105:/ scp -r /hadoop 192.168.0.106:/

5.格式化

在master节点上进入/hadoop/hadoop-2.8.1/bin目录运行,格式化hdfs系统

./hdfs namenode -format

6.启动,停止

全部启动sbin/start-all.sh,也可以分开sbin/start-dfs.sh、sbin/start-yarn.sh

[root@master sbin]# ./start-all.sh This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh Starting namenodes on [master] master: starting namenode, logging to /hadoop/hadoop-2.8.1/logs/hadoop-root-namenode-master.out 192.168.0.106: starting datanode, logging to /hadoop/hadoop-2.8.1/logs/hadoop-root-datanode-slave2.out 192.168.0.105: starting datanode, logging to /hadoop/hadoop-2.8.1/logs/hadoop-root-datanode-slave1.out Starting secondary namenodes [master] master: starting secondarynamenode, logging to /hadoop/hadoop-2.8.1/logs/hadoop-root-secondarynamenode-master.out starting yarn daemons starting resourcemanager, logging to /hadoop/hadoop-2.8.1/logs/yarn-root-resourcemanager-master.out 192.168.0.105: starting nodemanager, logging to /hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-slave1.out 192.168.0.106: starting nodemanager, logging to /hadoop/hadoop-2.8.1/logs/yarn-root-nodemanager-slave2.out

停止的话,输入命令,sbin/stop-all.sh

输入命令,jps,可以看到相关信息

master上

[root@master bin]# jps 4018 NameNode 4223 SecondaryNameNode 4383 ResourceManager 4686 Jps

slave上

[root@slave2 ~]# jps 3592 NodeManager 3510 DataNode 7173 Jps

7.访问

要先开放端口或者直接关闭防火墙

(1)输入命令,systemctl stop firewalld.service

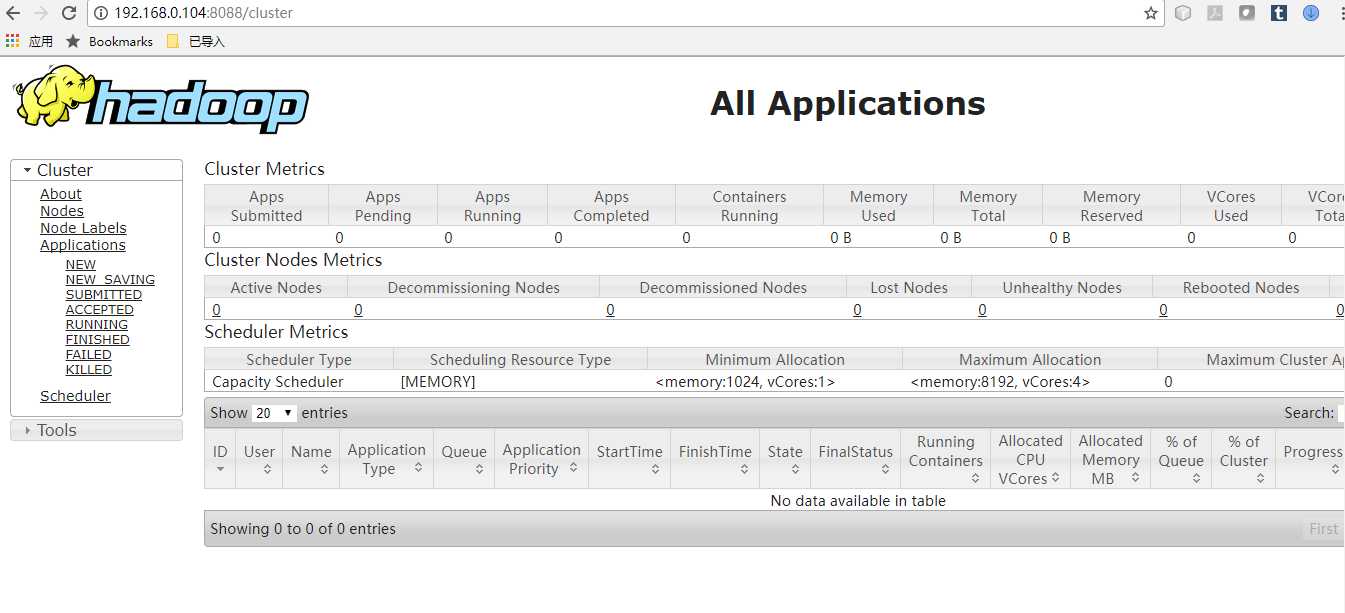

(2)浏览器打开http://192.168.0.104:8088/

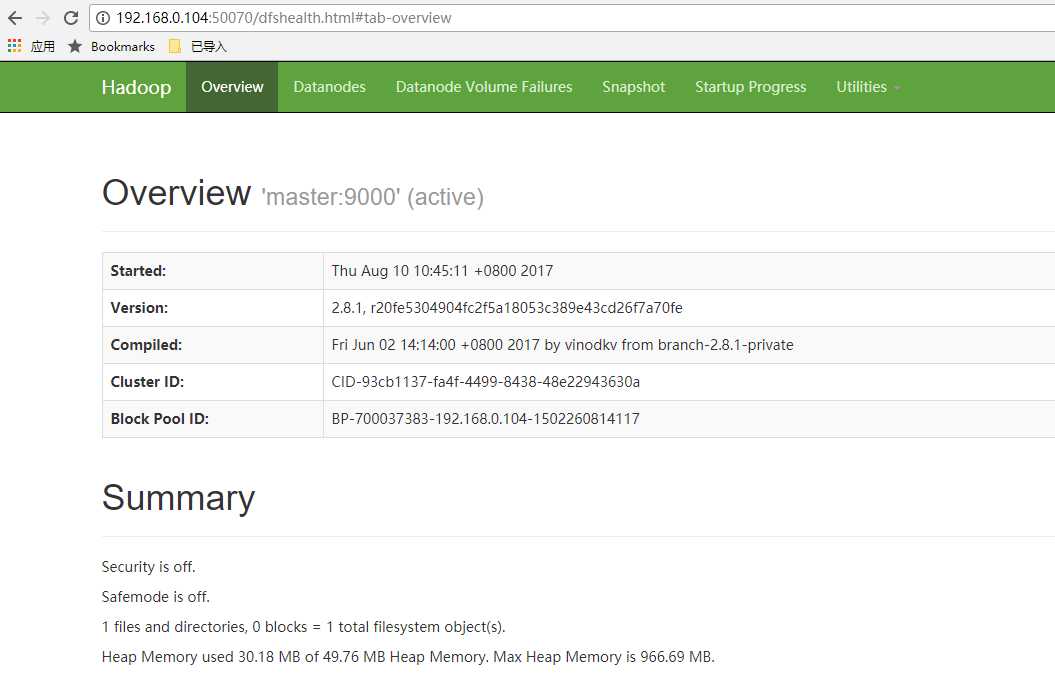

(3)浏览器打开http://192.168.0.104:50070/

标签:lease ref pom 知识 linu roo mis form mon

原文地址:http://www.cnblogs.com/ericnie/p/7337955.html