Tags: own, English materials, ice, set, defaults, simple, des, file upload, change

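I have wanted to build speech recognition for a long time. I tried many times and always failed, for many reasons: poor recognition quality, my own inability to get it working, and so on. As a result I spent a lot of time on it. I have tried iFlytek, Baidu Speech and Microsoft's offerings, and in the end I prefer Microsoft's for being simple and efficient. (No flames please, this is purely my personal impression.)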

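My initial idea was simple. I say a sentence (in a console demo for now), the console program recognizes what I said and displays it, and then it performs an action based on what I said. (A lovely idea; the reality was painful.) As a newcomer to speech recognition I ran into error after error. I kept wavering over which company's API to use and searched Baidu for speech recognition demos to learn from, but very few of them actually ran on .NET. Maybe I was searching in the wrong direction. I fell into many pitfalls and never once succeeded. I got discouraged and nearly gave up several times, but in the end I could not resist wanting to play with speech recognition.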

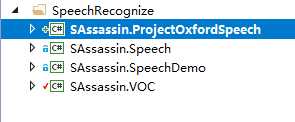

Here are the speech demos in my Visual Studio solution.

The first one is today's star; more on it later.

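The second and third use Microsoft's own libraries: System.Speech.dll, which ships with Windows, and Microsoft.Speech.dll, which I tried after reading an article on a Microsoft blog. The article was well written, but my attempt failed. I also found that the English version of Microsoft's speech recognition (Microsoft.Speech.Recognition) did not work, and the Chinese version of its speech synthesis (Microsoft.Speech.Synthesis) did not work either. So I had to mix the two DLLs to get the result I wanted. It did work in the end, but only for very simple cases: as soon as the vocabulary grew, the recognition rate dropped sharply. I always suspected the sampling rate, but I could never find a sampling-rate property or field. If you know how to set it, please give me a pointer so I can take off too, haha.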

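The fourth is the Baidu speech recognition demo. Its code is much more concise and not hard to implement, but there are many small details to watch out for and plenty of minefields, with very little guidance on getting through them. I am one of the people who stepped on the mines, and it hurt.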

First, let's look at the two mainstream approaches to speech recognition today:

1. Offline speech recognition

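Offline speech recognition is easy to understand. The recognition library lives on the local machine or the LAN, and no remote connection is needed. This was my original idea: build my own recognition vocabulary and design the actions I wanted around it. Using the speech recognition and speech synthesis features of Microsoft's System.Speech.dll, I implemented simple Chinese speech recognition. However, as I enlarged the vocabulary, the recognition rate kept dropping. I don't know whether my computer's microphone was to blame or something else. In the end I was discouraged and gave up. When I later tried Baidu Speech, I also found its offline recognition library, but the official documentation gave no concrete procedure or design guidance. I did not dig into it and will study it properly when I have time.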

```csharp
using System;
using System.Globalization;
//using Microsoft.Speech.Synthesis;   // not used: its Chinese TTS produced no sound
using Microsoft.Speech.Recognition;   // recognition comes from Microsoft.Speech (zh-CN recognizer)
using System.Speech.Synthesis;        // synthesis comes from System.Speech
//using System.Speech.Recognition;

namespace SAssassin.SpeechDemo
{
    /// <summary>
    /// Microsoft speech recognition, Chinese version; seems to work somewhat better.
    /// </summary>
    class Program
    {
        static SpeechSynthesizer sy = new SpeechSynthesizer();

        static void Main(string[] args)
        {
            // Create a Chinese (zh-CN) recognizer.
            using (SpeechRecognitionEngine recognizer = new SpeechRecognitionEngine(new CultureInfo("zh-CN")))
            {
                // List the installed recognizers (a zh-CN recognizer must be installed).
                foreach (var config in SpeechRecognitionEngine.InstalledRecognizers())
                {
                    Console.WriteLine(config.Id);
                }

                // Initialize the command words.
                Choices commands = new Choices();
                string[] command1 = new string[] { "一", "二", "三", "四", "五", "六", "七", "八", "九" };
                string[] command2 = new string[] { "很高兴见到你", "识别率", "assassin", "长沙", "湖南", "实习" };
                string[] command3 = new string[] { "开灯", "关灯", "播放音乐", "关闭音乐", "浇水", "停止浇水", "打开背景灯", "关闭背景灯" };
                commands.Add(command1);
                commands.Add(command2);
                commands.Add(command3);

                // Wrap the command words in a grammar.
                GrammarBuilder gBuilder = new GrammarBuilder();
                gBuilder.Append(commands);
                Grammar grammar = new Grammar(gBuilder);

                // Load the command grammar (a fixed command list is recognized more accurately than free dictation).
                // The synchronous LoadGrammar guarantees the grammar is loaded before recognition starts.
                recognizer.LoadGrammar(grammar);
                // Attach the handler for recognized speech.
                recognizer.SpeechRecognized += Recognizer_SpeechRecognized;
                // Use the default microphone as input.
                recognizer.SetInputToDefaultAudioDevice();
                // Start asynchronous, continuous recognition.
                recognizer.RecognizeAsync(RecognizeMode.Multiple);

                Console.WriteLine("你好");
                sy.Speak("你好");
                // Keep the console window open.
                Console.ReadLine();
            }
        }

        // SpeechRecognized handler: print the result with its confidence, then speak it back.
        static void Recognizer_SpeechRecognized(object sender, SpeechRecognizedEventArgs e)
        {
            Console.WriteLine("Result: " + e.Result.Text + " " + e.Result.Confidence + " " + DateTime.Now);
            sy.Speak(e.Result.Text);
        }
    }
}
```
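The demo above only prints and speaks back what it heard, but the original goal was to act on the command. Below is a minimal sketch of that last step. It assumes the handler passes in `e.Result.Text` and `e.Result.Confidence`, which is a `float` in the recognition result. `CommandDispatcher`, the registered actions and the 0.6 threshold are hypothetical. Ignoring low-confidence matches is one common way to reduce wrong actions as the command list grows, though it does not by itself raise the recognition rate.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical dispatcher: maps recognized command words to actions.
public sealed class CommandDispatcher
{
    private readonly Dictionary<string, Action> commands = new Dictionary<string, Action>();
    private readonly float minConfidence;

    public CommandDispatcher(float minConfidence)
    {
        this.minConfidence = minConfidence;
    }

    // Registers (or replaces) the action for a command phrase.
    public void Register(string phrase, Action action)
    {
        commands[phrase] = action;
    }

    // Runs the action and returns true only if the phrase is known
    // and was recognized with at least the minimum confidence.
    public bool Dispatch(string text, float confidence)
    {
        if (confidence < minConfidence)
        {
            return false; // too uncertain: ignore rather than risk a wrong action
        }

        string phrase = text?.Trim() ?? string.Empty;
        if (commands.TryGetValue(phrase, out Action action))
        {
            action();
            return true;
        }

        return false; // not a registered command
    }
}
```

In `Recognizer_SpeechRecognized`, a hypothetical `dispatcher` field would then be called as `dispatcher.Dispatch(e.Result.Text, e.Result.Confidence)` before the text is spoken back.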

2. Online speech recognition

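In online speech recognition, our program sends an audio file to a remote service, which does the matching and returns the result. Such services generally use a RESTful style, with JSON carrying the data and the recognition results back and forth.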
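Here is a sketch of that JSON round trip using `System.Text.Json` (.NET Core 3.0 or later). The request and response field names (`format`, `rate`, `channel`, `cuid`, `token`, `len`, `speech`; `err_no`, `err_msg`, `result`) are assumptions modelled on Baidu's REST speech API as we understand it. They do not come from this post, so check them against the current documentation. `OnlineAsrJson` is a hypothetical helper, and the actual HTTP POST is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Sketch of the JSON round trip of an online (REST) recognizer.
// Field names are ASSUMPTIONS modelled on Baidu's REST speech API; verify before use.
public static class OnlineAsrJson
{
    // Builds the request body: raw 16-bit mono PCM, Base64-encoded.
    public static string BuildRequest(byte[] pcm, int sampleRate, string token, string cuid)
    {
        var body = new Dictionary<string, object>
        {
            ["format"] = "pcm",
            ["rate"] = sampleRate,
            ["channel"] = 1,
            ["cuid"] = cuid,                         // a client/device identifier
            ["token"] = token,                       // access token obtained with the app keys
            ["len"] = pcm.Length,                    // length of the raw audio in bytes
            ["speech"] = Convert.ToBase64String(pcm)
        };
        return JsonSerializer.Serialize(body);
    }

    // Returns the recognized sentences, or throws if the service reported an error.
    public static List<string> ParseResponse(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;

        int errNo = root.GetProperty("err_no").GetInt32();
        if (errNo != 0)
        {
            string msg = root.TryGetProperty("err_msg", out JsonElement m) ? m.GetString() : "";
            throw new InvalidOperationException($"Recognition failed ({errNo}): {msg}");
        }

        var results = new List<string>();
        foreach (JsonElement item in root.GetProperty("result").EnumerateArray())
        {
            results.Add(item.GetString());
        }
        return results;
    }
}
```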

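I started with iFlytek's speech recognition. At first I knew nothing. A friend recommended it, my own research agreed that iFlytek was very good, and so I gave it a try. However, on Windows there were only C++ demos, and I work in C#. Languages have a lot in common, but I did not want too much hassle. I found a demo online that was said to be the most complete C# version of the iFlytek recognition demo, but its tangled source code made my heart sink: it calls the C++ library's functions directly. I ran the demo and it worked, recording simple audio and recognizing it. But some parts had problems I could not find solutions for, so I had to give up.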

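Later, Baidu Speech caught my attention. In July I went back to Baidu Speech's demo. The official demo is fairly simple, so I tried learning from it. First, create an application on the Baidu Speech open platform to get an App Key and a Secret Key. Then download the demo and put the two keys into the constructor, a field, or a configuration file; the program uses them to make its requests. As mentioned above, this is online recognition in a RESTful style: the audio file is uploaded to Baidu's recognition service, and the result is returned to our program. At first my skills were limited and configuration errors were everywhere. The mines started going off, and it took several explosions, but eventually the demo recognized its test file. That was a small step for me. (If you run into the same mines, feel free to discuss them with me. I may not know the answer either, but I do understand the ones I have stepped on, haha.)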
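As far as we know, in Baidu's REST API the two keys are not sent with each recognition request. Instead, they are first exchanged for an access token in an OAuth 2.0 client-credentials request. Below is a sketch of building that token URL. It assumes the endpoint is `https://openapi.baidu.com/oauth/2.0/token`, which you should check against the platform documentation. `TokenRequest` is a hypothetical helper.

```csharp
using System;

// Hypothetical helper: builds an OAuth 2.0 client-credentials token URL
// from the App (API) Key and Secret Key. The endpoint is an ASSUMPTION.
public static class TokenRequest
{
    public const string DefaultEndpoint = "https://openapi.baidu.com/oauth/2.0/token";

    public static string BuildUrl(string apiKey, string secretKey, string endpoint = DefaultEndpoint)
    {
        if (string.IsNullOrWhiteSpace(apiKey))
            throw new ArgumentException("API key is required.", nameof(apiKey));
        if (string.IsNullOrWhiteSpace(secretKey))
            throw new ArgumentException("Secret key is required.", nameof(secretKey));

        // Escape the keys so characters such as '&' or spaces cannot break the query string.
        return endpoint
            + "?grant_type=client_credentials"
            + "&client_id=" + Uri.EscapeDataString(apiKey)
            + "&client_secret=" + Uri.EscapeDataString(secretKey);
    }
}
```

The response is JSON. Its `access_token` field, the standard OAuth 2.0 name, holds the token to put into recognition requests.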

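Next came the design question. Speech recognition worked, and so did speech synthesis. Note that speech recognition and speech synthesis must be enabled separately, and each has its own App Key and Secret Key. Even though the keys are the same, keep this in mind, or speech synthesis will run into problems. The next issue is that Baidu Speech is designed around recognizing files, whereas what we care about most is speaking directly into the microphone and having that recognized, which was also my goal. My design was to save the microphone input to a file and, once I finished speaking, call the recognition method. Doesn't that work? It does, but here I walked into the minefield again. I used NAudio.dll for recording; the package is available on NuGet.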

```csharp
using NAudio.Wave;
using System;

namespace SAssassin.VOC
{
    /// <summary>
    /// Records from the default microphone to Temp.wav.
    /// </summary>
    public class RecordWaveToFile
    {
        private WaveFileWriter waveFileWriter = null;
        // WaveInEvent rather than WaveIn: WaveIn needs a GUI thread and throws
        // InvalidOperationException when used from a console/background thread.
        private WaveInEvent myWaveIn = null;

        public void StartRecord()
        {
            ConfigWave();
            myWaveIn.StartRecording();
        }

        /// <summary>
        /// Stops recording; the file is finalized in WaveIn_RecordingStopped.
        /// </summary>
        public void StopRecord()
        {
            myWaveIn?.StopRecording();
        }

        private void ConfigWave()
        {
            string filePath = AppDomain.CurrentDomain.BaseDirectory + "Temp.wav";
            myWaveIn = new WaveInEvent()
            {
                WaveFormat = new WaveFormat(16000, 16, 1) // 16 kHz, 16-bit, mono
                //WaveFormat = new WaveFormat() // default 44.1 kHz, 16-bit, stereo: clearer audio
            };
            myWaveIn.DataAvailable += WaveIn_DataAvailable;
            myWaveIn.RecordingStopped += WaveIn_RecordingStopped;
            waveFileWriter = new WaveFileWriter(filePath, myWaveIn.WaveFormat);
        }

        private void WaveIn_DataAvailable(object sender, WaveInEventArgs e)
        {
            if (waveFileWriter != null)
            {
                waveFileWriter.Write(e.Buffer, 0, e.BytesRecorded);
                waveFileWriter.Flush();
            }
        }

        private void WaveIn_RecordingStopped(object sender, StoppedEventArgs e)
        {
            // Recording has already stopped when this fires, so calling StopRecording() here is pointless.
            // Dispose the writer so the WAV header sizes are written and the file is closed.
            waveFileWriter?.Dispose();
            waveFileWriter = null;
            myWaveIn?.Dispose();
            myWaveIn = null;
        }
    }
}
```
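The `WaveFormat(16000, 16, 1)` chosen above fixes the data rate at 16000 samples/s × 2 bytes × 1 channel = 32000 bytes per second. The sketch below builds the canonical 44-byte PCM RIFF/WAVE header by hand to show where those parameters end up in a .wav file. It is for illustration only: `WaveFileWriter` already writes its own header, and its exact layout may differ from this minimal one. `WavHeader` is a hypothetical helper.

```csharp
using System;
using System.IO;
using System.Text;

// Sketch of the canonical 44-byte RIFF/WAVE header for uncompressed PCM (little-endian fields).
public static class WavHeader
{
    public static byte[] Create(int sampleRate, short bitsPerSample, short channels, int dataLength)
    {
        short blockAlign = (short)(channels * bitsPerSample / 8); // bytes per sample frame
        int byteRate = sampleRate * blockAlign;                   // bytes per second

        using var ms = new MemoryStream(44);
        using (var w = new BinaryWriter(ms, Encoding.ASCII, leaveOpen: true))
        {
            w.Write(Encoding.ASCII.GetBytes("RIFF"));
            w.Write(36 + dataLength);          // size of everything after this field
            w.Write(Encoding.ASCII.GetBytes("WAVE"));
            w.Write(Encoding.ASCII.GetBytes("fmt "));
            w.Write(16);                       // size of the PCM fmt chunk
            w.Write((short)1);                 // audio format 1 = PCM
            w.Write(channels);
            w.Write(sampleRate);
            w.Write(byteRate);
            w.Write(blockAlign);
            w.Write(bitsPerSample);
            w.Write(Encoding.ASCII.GetBytes("data"));
            w.Write(dataLength);               // number of sample bytes that follow
        }
        return ms.ToArray();
    }
}
```

For one second of the format above, `WavHeader.Create(16000, 16, 1, 32000)` would describe a 32000-byte data chunk.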

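Using WaveInEvent in this console program works without errors. Before that I used the WaveIn class, which threw immediately:

"System.InvalidOperationException: Use WaveInEvent to record on a background thread"

I found the solution on Stack Overflow: replace the WaveIn class with WaveInEvent. Looking inside, both classes implement the same interface (IWaveIn) and are structured almost identically; the difference is that one is meant for the GUI thread and the other for background threads. With that in place, recording worked. But when I listened to my recording, there was a lot of noise and the sound was unclear, even distorted. It was useless, and Baidu failed to recognize it too. When I raised the sampling rate to 44 kHz the recording sounded very good. Then came the problem: Baidu's speech recognition only accepts 16-bit PCM at 8 kHz or 16 kHz. Frustrating. I wanted to switch to another file format and save it compressed, but as soon as the sampling rate was lowered, the quality became terrible and recognition failed as well. I'll have to keep working on this one.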
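One way around the 44 kHz versus 16 kHz conflict is to record at 44.1 kHz and convert to 16 kHz afterwards. Dropping samples without filtering first causes aliasing, which may be part of why lowering the rate sounded so bad, though that is untested here. The sketch below is a deliberately naive converter for 16-bit mono samples: a moving-average low-pass followed by linear interpolation. `NaiveResampler` is hypothetical. For real use, NAudio's own resamplers such as `WdlResamplingSampleProvider` or `MediaFoundationResampler` are a better choice.

```csharp
using System;

// Naive sample-rate converter for 16-bit mono PCM (e.g. 44100 Hz -> 16000 Hz).
// Illustration only: a moving average is a crude low-pass filter.
public static class NaiveResampler
{
    public static short[] Resample(short[] input, int fromRate, int toRate)
    {
        if (input == null) throw new ArgumentNullException(nameof(input));
        if (fromRate <= 0 || toRate <= 0)
            throw new ArgumentOutOfRangeException(nameof(fromRate), "Rates must be positive.");
        if (input.Length == 0 || fromRate == toRate) return (short[])input.Clone();

        // 1. When downsampling, average over about fromRate/toRate samples to
        //    attenuate content above the new Nyquist frequency (toRate / 2).
        int window = toRate >= fromRate ? 1 : Math.Max(1, (int)Math.Round((double)fromRate / toRate));
        double[] filtered = new double[input.Length];
        for (int i = 0; i < input.Length; i++)
        {
            int start = Math.Max(0, i - window / 2);
            int end = Math.Min(input.Length - 1, start + window - 1);
            double sum = 0;
            for (int j = start; j <= end; j++) sum += input[j];
            filtered[i] = sum / (end - start + 1);
        }

        // 2. Linear interpolation at the positions of the new samples.
        int outLength = (int)((long)input.Length * toRate / fromRate);
        short[] output = new short[outLength];
        double step = (double)fromRate / toRate;
        for (int n = 0; n < outLength; n++)
        {
            double pos = n * step;
            int i0 = (int)pos;
            int i1 = Math.Min(i0 + 1, input.Length - 1);
            double frac = pos - i0;
            double value = filtered[i0] * (1 - frac) + filtered[i1] * frac;
            // Clamp to the 16-bit range before converting back.
            output[n] = (short)Math.Max(short.MinValue, Math.Min(short.MaxValue, Math.Round(value)));
        }
        return output;
    }
}
```

A stereo 44.1 kHz recording would first need to be mixed down to mono, for example by averaging the left and right samples.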

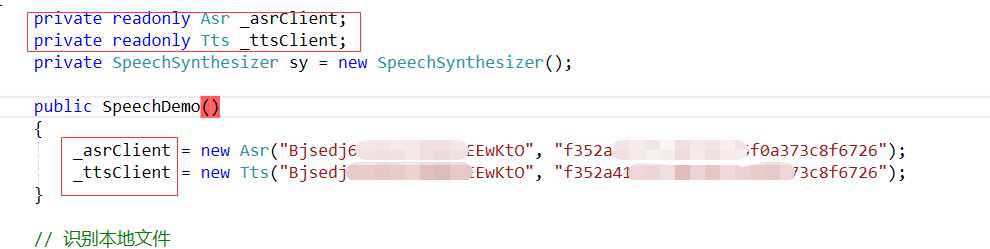

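Now for today's main event. This is my first post on cnblogs, so let's get to the point: Microsoft Cognitive Services. In mid-July I came across Bing's speech recognition API on Microsoft's Bing site, and its recognition quality amazed me. The accuracy was very high. When I went looking for the API, I found that the documentation was all in English, which was frustrating. After reading it all, the usage looked good, again based on remote calls. As for the API itself, I searched the official site for ages and found only documentation. I didn't notice the product and free-trial pages at the time, so I could only look, not use it. Just in the last few days I reread Bing's speech recognition docs and came across the term "Microsoft Cognitive Services". Forgive my ignorance, but I had never heard of it. A quick search showed it really is good; Microsoft is impressive. It contains many APIs, and speech recognition is only a small part of them, alongside face recognition, language understanding and other powerful features. Find the API, find the free trial, sign in to get the app's secret key, and you can start using it. I downloaded a demo, entered the secret key and tested it. Wow, the recognition quality was amazing. I also noticed from Baidu search results that very few people use the speech recognition feature of Microsoft Cognitive Services, which is why I wanted to write something about it.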

I extracted many parts of the demo into a console program. The source is below:

```csharp
using System;
using System.ComponentModel;
using System.Configuration;
using System.IO;
using System.IO.IsolatedStorage;
using System.Runtime.CompilerServices;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.SpeechRecognition;

public class SpeechConfig : INotifyPropertyChanged
{
    #region Fields
    /// <summary>
    /// The isolated storage subscription key file name.
    /// </summary>
    private const string IsolatedStorageSubscriptionKeyFileName = "Subscription.txt";

    /// <summary>
    /// The default subscription key prompt message.
    /// </summary>
    private const string DefaultSubscriptionKeyPromptMessage = "Secret key";

    /// <summary>
    /// The primary key is read from app.config (appSettings key "primaryKey") instead of a UI.
    /// </summary>
    private string subscriptionKey = ConfigurationManager.AppSettings["primaryKey"];

    /// <summary>
    /// Gets or sets the subscription key.
    /// </summary>
    public string SubscriptionKey
    {
        get
        {
            return this.subscriptionKey;
        }

        set
        {
            this.subscriptionKey = value;
            this.OnPropertyChanged<string>();
        }
    }

    /// <summary>
    /// The data recognition client (audio from a file or another data source).
    /// </summary>
    private DataRecognitionClient dataClient;

    /// <summary>
    /// The microphone recognition client.
    /// </summary>
    private MicrophoneRecognitionClient micClient;
    #endregion Fields

    #region Events
    /// <summary>
    /// Implements the INotifyPropertyChanged interface.
    /// </summary>
    public event PropertyChangedEventHandler PropertyChanged;

    /// <summary>
    /// Helper function for the INotifyPropertyChanged interface.
    /// </summary>
    /// <typeparam name="T">Property type</typeparam>
    /// <param name="caller">Property name</param>
    private void OnPropertyChanged<T>([CallerMemberName] string caller = null)
    {
        this.PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(caller));
    }
    #endregion Events

    #region Properties
    /// <summary>
    /// Gets the current speech recognition mode.
    /// </summary>
    private SpeechRecognitionMode Mode
    {
        get
        {
            if (this.IsMicrophoneClientDictation || this.IsDataClientDictation)
            {
                return SpeechRecognitionMode.LongDictation;
            }

            return SpeechRecognitionMode.ShortPhrase;
        }
    }

    /// <summary>
    /// Gets the recognition locale ("en-US" for English, "zh-CN" for Chinese).
    /// </summary>
    private string DefaultLocale
    {
        get { return "zh-CN"; }
    }

    /// <summary>
    /// Gets the Cognitive Service authentication URI from app.config.
    /// Empty if the global default is to be used.
    /// </summary>
    private string AuthenticationUri
    {
        get { return ConfigurationManager.AppSettings["AuthenticationUri"]; }
    }

    /// <summary>
    /// Gets a value indicating whether or not to use the microphone.
    /// </summary>
    private bool UseMicrophone
    {
        get
        {
            return this.IsMicrophoneClientWithIntent ||
                this.IsMicrophoneClientDictation ||
                this.IsMicrophoneClientShortPhrase;
        }
    }

    /// <summary>
    /// Gets the short wave file path from app.config.
    /// </summary>
    private string ShortWaveFile
    {
        get { return ConfigurationManager.AppSettings["ShortWaveFile"]; }
    }

    /// <summary>
    /// Gets the long wave file path from app.config.
    /// </summary>
    private string LongWaveFile
    {
        get { return ConfigurationManager.AppSettings["LongWaveFile"]; }
    }
    #endregion Properties

    #region Mode selection
    /// <summary>Microphone input, short phrase mode.</summary>
    public bool IsMicrophoneClientShortPhrase { get; set; }

    /// <summary>Microphone input, long dictation mode.</summary>
    public bool IsMicrophoneClientDictation { get; set; }

    /// <summary>Microphone input with intent (LUIS).</summary>
    public bool IsMicrophoneClientWithIntent { get; set; }

    /// <summary>Data (file) input, short phrase mode.</summary>
    public bool IsDataClientShortPhrase { get; set; }

    /// <summary>Data (file) input with intent (LUIS).</summary>
    public bool IsDataClientWithIntent { get; set; }

    /// <summary>Data (file) input, long dictation mode.</summary>
    public bool IsDataClientDictation { get; set; }
    #endregion Mode selection

    #region Event handlers
    /// <summary>
    /// Called when the microphone status has changed.
    /// </summary>
    private void OnMicrophoneStatus(object sender, MicrophoneEventArgs e)
    {
        Task.Run(() =>
        {
            Console.WriteLine("--- Microphone status change received by OnMicrophoneStatus() ---");
            Console.WriteLine("********* Microphone status: {0} *********", e.Recording);
            if (e.Recording)
            {
                Console.WriteLine("Please start speaking.");
            }

            Console.WriteLine();
        });
    }

    /// <summary>
    /// Called when a partial response is received.
    /// </summary>
    private void OnPartialResponseReceivedHandler(object sender, PartialSpeechResponseEventArgs e)
    {
        Console.WriteLine("--- Partial result received by OnPartialResponseReceivedHandler() ---");
        Console.WriteLine("{0}", e.PartialResult);
        Console.WriteLine();
    }

    /// <summary>
    /// Called when an error is received.
    /// </summary>
    private void OnConversationErrorHandler(object sender, SpeechErrorEventArgs e)
    {
        Console.WriteLine("--- Error received by OnConversationErrorHandler() ---");
        Console.WriteLine("Error code: {0}", e.SpeechErrorCode.ToString());
        Console.WriteLine("Error text: {0}", e.SpeechErrorText);
        Console.WriteLine();
    }

    /// <summary>
    /// Called when a final response is received (microphone, short phrase).
    /// </summary>
    private void OnMicShortPhraseResponseReceivedHandler(object sender, SpeechResponseEventArgs e)
    {
        Task.Run(() =>
        {
            Console.WriteLine("--- OnMicShortPhraseResponseReceivedHandler ---");

            // We got the final result, so we can end the mic recognition.
            this.micClient.EndMicAndRecognition();

            this.WriteResponseResult(e);
        });
    }

    /// <summary>
    /// Called when a final response is received (data client, short phrase).
    /// </summary>
    private void OnDataShortPhraseResponseReceivedHandler(object sender, SpeechResponseEventArgs e)
    {
        Task.Run(() =>
        {
            Console.WriteLine("--- OnDataShortPhraseResponseReceivedHandler ---");

            // Nothing to end here: SendAudioHelper already called EndAudio()
            // as soon as all the data was sent.
            this.WriteResponseResult(e);
        });
    }

    /// <summary>
    /// Called when a final response is received (microphone, long dictation).
    /// </summary>
    private void OnMicDictationResponseReceivedHandler(object sender, SpeechResponseEventArgs e)
    {
        Console.WriteLine("--- OnMicDictationResponseReceivedHandler ---");
        if (e.PhraseResponse.RecognitionStatus == RecognitionStatus.EndOfDictation ||
            e.PhraseResponse.RecognitionStatus == RecognitionStatus.DictationEndSilenceTimeout)
        {
            // Dictation is over, so we can end the mic recognition.
            Task.Run(() => this.micClient.EndMicAndRecognition());
        }

        this.WriteResponseResult(e);
    }

    /// <summary>
    /// Called when a final response is received (data client, long dictation).
    /// Nothing to end here: EndAudio() was already called after all the data was sent.
    /// </summary>
    private void OnDataDictationResponseReceivedHandler(object sender, SpeechResponseEventArgs e)
    {
        Console.WriteLine("--- OnDataDictationResponseReceivedHandler ---");
        this.WriteResponseResult(e);
    }

    /// <summary>
    /// Streams a wave file to the data client in 1 KB chunks.
    /// </summary>
    /// <param name="wavFileName">Name of the wav file.</param>
    private void SendAudioHelper(string wavFileName)
    {
        using (FileStream fileStream = new FileStream(wavFileName, FileMode.Open, FileAccess.Read))
        {
            // A wave file can be sent to the server as-is. If you have raw audio data instead
            // (for example audio coming over Bluetooth), first send a SpeechAudioFormat descriptor
            // describing its layout and format via DataRecognitionClient's SendAudioFormat() method.
            int bytesRead = 0;
            byte[] buffer = new byte[1024];

            try
            {
                do
                {
                    // Read the next chunk of audio data into the buffer.
                    bytesRead = fileStream.Read(buffer, 0, buffer.Length);

                    // Send the chunk to the service.
                    this.dataClient.SendAudio(buffer, bytesRead);
                }
                while (bytesRead > 0);
            }
            finally
            {
                // Done sending audio; the final result arrives via the OnResponseReceived event.
                this.dataClient.EndAudio();
            }
        }
    }
    #endregion Event handlers

    #region Helper methods
    /// <summary>
    /// Gets the subscription key from isolated storage.
    /// </summary>
    /// <returns>The subscription key, or the prompt message if none is stored.</returns>
    private string GetSubscriptionKeyFromIsolatedStorage()
    {
        string subscriptionKey = null;

        using (IsolatedStorageFile isoStore = IsolatedStorageFile.GetStore(IsolatedStorageScope.User | IsolatedStorageScope.Assembly, null, null))
        {
            try
            {
                using (var iStream = new IsolatedStorageFileStream(IsolatedStorageSubscriptionKeyFileName, FileMode.Open, isoStore))
                using (var reader = new StreamReader(iStream))
                {
                    subscriptionKey = reader.ReadLine();
                }
            }
            catch (FileNotFoundException)
            {
                subscriptionKey = null;
            }
        }

        if (string.IsNullOrEmpty(subscriptionKey))
        {
            subscriptionKey = DefaultSubscriptionKeyPromptMessage;
        }

        return subscriptionKey;
    }

    /// <summary>
    /// Creates a new microphone recognition client without LUIS intent support.
    /// </summary>
    private void CreateMicrophoneRecoClient()
    {
        this.micClient = SpeechRecognitionServiceFactory.CreateMicrophoneClient(
            this.Mode, this.DefaultLocale, this.SubscriptionKey);

        this.micClient.AuthenticationUri = this.AuthenticationUri;

        // Event handlers for speech recognition results
        this.micClient.OnMicrophoneStatus += this.OnMicrophoneStatus;
        this.micClient.OnPartialResponseReceived += this.OnPartialResponseReceivedHandler;
        if (this.Mode == SpeechRecognitionMode.ShortPhrase)
        {
            this.micClient.OnResponseReceived += this.OnMicShortPhraseResponseReceivedHandler;
        }
        else if (this.Mode == SpeechRecognitionMode.LongDictation)
        {
            this.micClient.OnResponseReceived += this.OnMicDictationResponseReceivedHandler;
        }

        this.micClient.OnConversationError += this.OnConversationErrorHandler;
    }

    /// <summary>
    /// Creates a data client without LUIS intent support, for audio from a file or other source.
    /// The data is split into buffers and each buffer is sent to the Speech Recognition Service
    /// unmodified, so you can apply your own silence detection if desired.
    /// </summary>
    private void CreateDataRecoClient()
    {
        this.dataClient = SpeechRecognitionServiceFactory.CreateDataClient(
            this.Mode,
            this.DefaultLocale,
            this.SubscriptionKey);
        this.dataClient.AuthenticationUri = this.AuthenticationUri;

        // Event handlers for speech recognition results
        if (this.Mode == SpeechRecognitionMode.ShortPhrase)
        {
            this.dataClient.OnResponseReceived += this.OnDataShortPhraseResponseReceivedHandler;
        }
        else
        {
            this.dataClient.OnResponseReceived += this.OnDataDictationResponseReceivedHandler;
        }

        this.dataClient.OnPartialResponseReceived += this.OnPartialResponseReceivedHandler;
        this.dataClient.OnConversationError += this.OnConversationErrorHandler;
    }

    /// <summary>
    /// Writes the n-best results and reacts to a sample command.
    /// </summary>
    private void WriteResponseResult(SpeechResponseEventArgs e)
    {
        if (e.PhraseResponse.Results.Length == 0)
        {
            Console.WriteLine("No phrase response is available.");
        }
        else
        {
            Console.WriteLine("********* Final n-BEST Results *********");
            for (int i = 0; i < e.PhraseResponse.Results.Length; i++)
            {
                Console.WriteLine(
                    "[{0}] Confidence={1}, Text=\"{2}\"",
                    i,
                    e.PhraseResponse.Results[i].Confidence,
                    e.PhraseResponse.Results[i].DisplayText);

                // Sample command check; the display text includes punctuation ("关闭。" = "close.").
                if (e.PhraseResponse.Results[i].DisplayText == "关闭。")
                {
                    Console.WriteLine("Command received, closing now.");
                }
            }

            Console.WriteLine();
        }
    }
    #endregion Helper methods

    #region Init
    public SpeechConfig()
    {
        this.IsMicrophoneClientShortPhrase = true;
        this.IsMicrophoneClientWithIntent = false;
        this.IsMicrophoneClientDictation = false;
        this.IsDataClientShortPhrase = false;
        this.IsDataClientWithIntent = false;
        this.IsDataClientDictation = false;

        // Prefer the key from app.config; fall back to isolated storage only if it is missing,
        // so a configured key is not overwritten by the "Secret key" placeholder.
        if (string.IsNullOrEmpty(this.SubscriptionKey))
        {
            this.SubscriptionKey = this.GetSubscriptionKeyFromIsolatedStorage();
        }
    }

    /// <summary>
    /// Starts speech recognition (microphone or file, depending on the selected mode).
    /// </summary>
    public void SpeechRecognize()
    {
        if (this.UseMicrophone)
        {
            if (this.micClient == null)
            {
                this.CreateMicrophoneRecoClient();
            }

            this.micClient.StartMicAndRecognition();
        }
        else
        {
            if (this.dataClient == null)
            {
                this.CreateDataRecoClient();
            }

            this.SendAudioHelper((this.Mode == SpeechRecognitionMode.ShortPhrase) ? this.ShortWaveFile : this.LongWaveFile);
        }
    }
    #endregion Init
}
```
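`WriteResponseResult` compares `DisplayText` against `"关闭。"`, including the full stop that the service adds to its display text. The sketch below shows a more forgiving match: it strips trailing punctuation and checks each n-best candidate against a set of plain command words. `CommandMatcher` and its punctuation list are hypothetical, and it assumes the n-best list is ordered best first.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: matches n-best display texts against plain command words.
public static class CommandMatcher
{
    // Full-width Chinese and ASCII sentence punctuation, plus spaces.
    private static readonly char[] Punctuation = { '。', '，', '！', '？', '.', ',', '!', '?', ' ' };

    // "关闭。" -> "关闭"
    public static string Normalize(string displayText)
    {
        return (displayText ?? string.Empty).Trim().TrimEnd(Punctuation);
    }

    // Returns the first candidate that is a known command, or null if none matches.
    public static string FindCommand(IEnumerable<string> nBest, ISet<string> commands)
    {
        return nBest.Select(Normalize).FirstOrDefault(text => commands.Contains(text));
    }
}
```

In `WriteResponseResult`, the candidates would be `e.PhraseResponse.Results.Select(r => r.DisplayText)`, which requires `using System.Linq;`.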

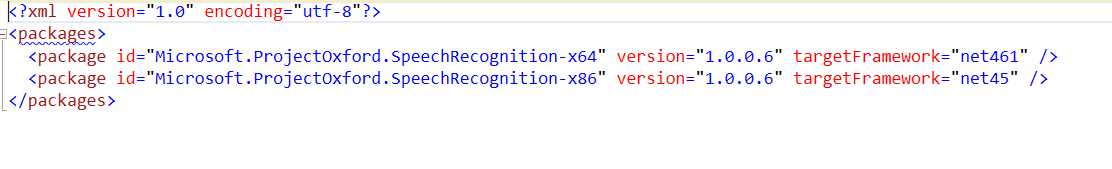

Several of the referenced libraries can be installed as NuGet packages, which mostly went without problems.

One thing to watch out for: when installing Microsoft.Speech, you must install both packages, otherwise you will get errors. The version must also be 4.5 or later.

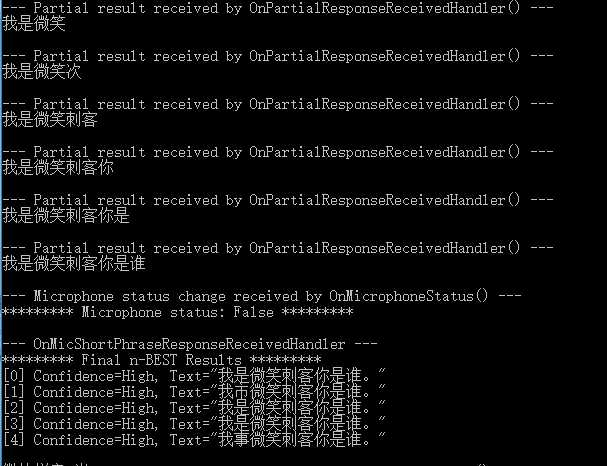

Just replace the default key and the program runs. The results are really impressive.

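The recognition accuracy is excellent and I'm very satisfied. However, Microsoft's free trial only lasts one month, so I'll have to make the most of it this month, haha.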

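In this first post I have recommended the Microsoft Cognitive Services speech recognition API. Having experienced its power firsthand, I wanted to make it the subject of my first post.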

2017-08-20. I hope to come back once my skills have matured and see the footsteps I left here.


Original post: http://www.cnblogs.com/CKExp/p/7400969.html