
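SaltStack has three run modes:

local, master/minion (the minion works like an agent), and Salt SSH

SaltStack has three main functions:

remote execution, configuration management, and cloud management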

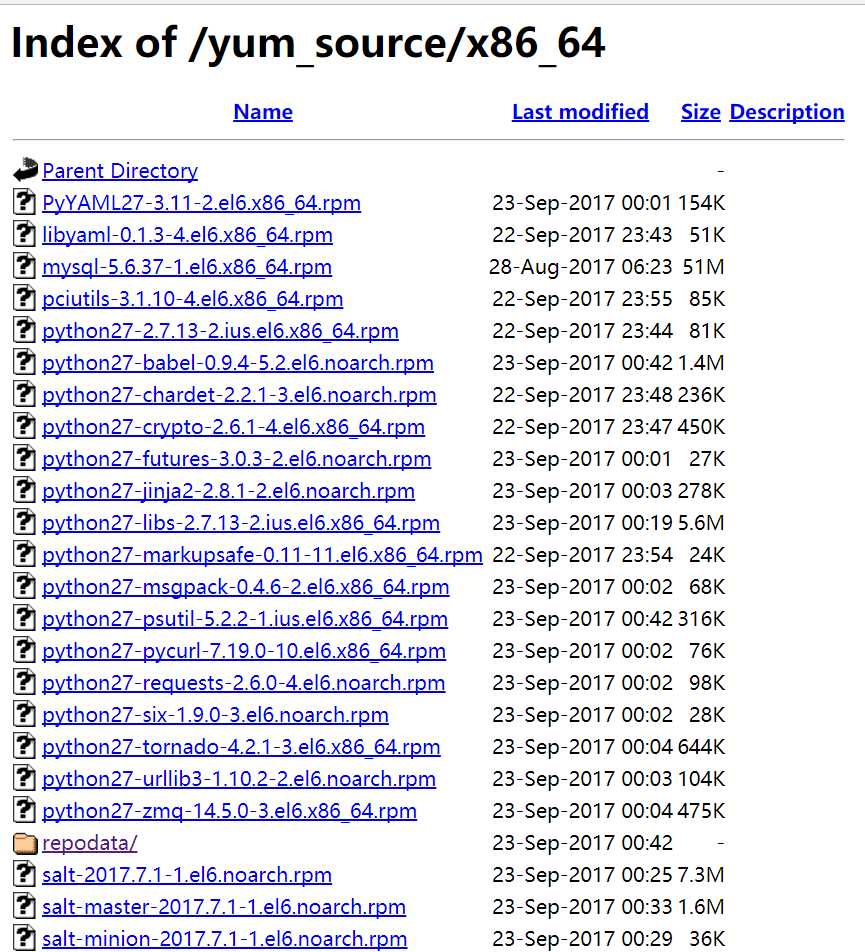

There are two ways to install SaltStack: 1. install with yum from the official repository; 2. install from a self-hosted yum repository.

node1:

wget https://repo.saltstack.com/yum/redhat/salt-repo-2017.7-1.el6.noarch.rpm
yum install salt-repo-2017.7-1.el6.noarch.rpm
yum clean expire-cache
yum install salt-master salt-minion

Edit the minion configuration in /etc/salt/minion:

master: <IP address of the master node>

node2:

wget https://repo.saltstack.com/yum/redhat/salt-repo-2017.7-1.el6.noarch.rpm
yum install salt-repo-2017.7-1.el6.noarch.rpm
yum clean expire-cache
yum install salt-minion

Edit the minion configuration in /etc/salt/minion in the same way.

Check the active (non-comment) settings in the configuration files:

[root@node1 ~]# egrep -v "^#|^$" /etc/salt/master
[root@node1 ~]# egrep -v "^#|^$" /etc/salt/minion
master: 192.168.44.134
[root@node2 ~]# egrep -v "^#|^$" /etc/salt/minion
master: 192.168.44.134
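The manual edit of /etc/salt/minion can also be scripted. The following is a minimal sketch, not part of the original walkthrough: `set_minion_master` is a hypothetical helper name, and it assumes the IP address contains no `/` characters, since the value is passed to `sed` unescaped.

```shell
# Hypothetical helper (an assumption, not a Salt command): set the active
# "master:" line in a minion configuration file.
set_minion_master() {
    conf="$1"
    master_ip="$2"
    if [ -f "$conf" ] && grep -q '^master:' "$conf"; then
        # An uncommented setting already exists: rewrite it via a temp file.
        sed "s/^master:.*/master: ${master_ip}/" "$conf" > "$conf.tmp" &&
            mv "$conf.tmp" "$conf"
    else
        # Only the commented default (or nothing) is present: append the setting.
        echo "master: ${master_ip}" >> "$conf"
    fi
}
```

For example, `set_minion_master /etc/salt/minion 192.168.44.134` would produce the configuration shown above; the minion still has to be restarted afterwards.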

Start the services on both nodes:

[root@node1 ~]# /etc/init.d/salt-master start
Starting salt-master daemon: [OK]
[root@node1 ~]# /etc/init.d/salt-minion start
Starting salt-minion:root:node1 daemon: OK
[root@node1 ~]# netstat -tunlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1265/sshd
tcp 0 0 0.0.0.0:4505 0.0.0.0:* LISTEN 2797/python2.7
tcp 0 0 0.0.0.0:4506 0.0.0.0:* LISTEN 2803/python2.7
[root@node2 ~]# /etc/init.d/salt-minion start
Starting salt-minion:root:node2 daemon: OK
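The netstat output shows the master listening on ports 4505 (publish) and 4506 (return), which are Salt's defaults. A small check like the following could confirm that both are up. This is a sketch under assumptions: `has_salt_ports` is a hypothetical name, and it expects `netstat -tnl`-style output on stdin with the local address in column 4 and the state in column 6.

```shell
# Hypothetical helper: succeed only if both default Salt master ports
# appear in LISTEN state in netstat-style output read from stdin.
has_salt_ports() {
    awk '
        $6 == "LISTEN" {
            n = split($4, parts, ":")   # port is the last ":"-separated field
            seen[parts[n]] = 1
        }
        END { exit !(seen["4505"] && seen["4506"]) }
    '
}
```

Usage on the master would be something like `netstat -tnl | has_salt_ports && echo "master ports are listening"`.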

List the minions whose keys the master has not yet accepted. The master's key directory looks like this:

[root@node1 master]# tree
.
├── master.pem
├── master.pub
├── minions
├── minions_autosign
├── minions_denied
├── minions_pre    (minions not yet accepted by the master)
│   ├── node1
│   └── node2
└── minions_rejected

[root@node1 master]# salt-key
Accepted Keys:
Denied Keys:
Unaccepted Keys:    (two keys are pending: the minions on node1 and node2)
node1
node2
Rejected Keys:

Accept the keys:

[root@node1 master]# salt-key -a node*
The following keys are going to be accepted:
Unaccepted Keys:
node1
node2
Proceed? [n/Y] Y
Key for minion node1 accepted.
Key for minion node2 accepted.

After acceptance, the keys move from minions_pre to minions:

[root@node1 master]# tree
.
├── master.pem
├── master.pub
├── minions
│   ├── node1
│   └── node2
├── minions_autosign
├── minions_denied
├── minions_pre
└── minions_rejected

List all keys with salt-key -L:

[root@node1 ~]# salt-key -L
Accepted Keys:
node1
node2
Denied Keys:
Unaccepted Keys:
Rejected Keys:
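The tree listings show that a pending key is simply a file under minions_pre, and an accepted key a file under minions. As an illustration only, the pending set can be read straight from that directory. `pending_keys` is a hypothetical helper, and /etc/salt/pki/master is assumed as the default key directory; `salt-key -l unaccepted` remains the supported way to get this list.

```shell
# Hypothetical helper: print the minion IDs whose keys are still pending,
# based on the directory layout shown by `tree` above.
pending_keys() {
    pki_dir="${1:-/etc/salt/pki/master}"    # assumed default location
    [ -d "$pki_dir/minions_pre" ] || return 0
    ls -1 "$pki_dir/minions_pre"
}
```

Before `salt-key -a` this would print node1 and node2; afterwards it prints nothing.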

1. test.ping: similar to the ping of a Zabbix agent. test is a module and ping is a function in that module.

[root@node1 ~]# salt '*' test.ping

node2:

True

node1:

True
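With many minions it helps to filter this output for the ones that did not answer True. The sketch below assumes Salt's default text output (a `minion:` line followed by an indented result); `unresponsive_minions` is a hypothetical helper name.

```shell
# Hypothetical helper: read `salt '*' test.ping` output on stdin and print
# every minion whose result is anything other than True.
unresponsive_minions() {
    awk '
        /^[^ ]+:$/ { minion = substr($0, 1, length($0) - 1); next }
        NF && minion != "" {
            if ($1 != "True") print minion
            minion = ""
        }
    '
}
```

Usage would be `salt '*' test.ping | unresponsive_minions`, which prints nothing when every minion responds.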

2. cmd.run: runs an arbitrary shell command on the targeted minions

[root@node1 ~]# salt "*" cmd.run "uptime"

node1:

12:04:22 up 2:32, 2 users, load average: 0.00, 0.00, 0.00

node2:

12:04:22 up 23:28, 1 user, load average: 0.07, 0.02, 0.00

[root@node1 ~]# salt "*" cmd.run "df -h"

node2:

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_node2-lv_root

16G 3.5G 12G 24% /

tmpfs 932M 12K 932M 1% /dev/shm

/dev/sda1 485M 32M 429M 7% /boot

node1:

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_node1-lv_root

16G 2.9G 12G 20% /

tmpfs 932M 28K 932M 1% /dev/shm

/dev/sda1 485M 32M 429M 7% /boot

3. grains: list the names of all grains on a minion

[root@node1 salt]# salt 'node1' grains.ls

node1:

- SSDs

- biosreleasedate

- biosversion

- cpu_flags

- cpu_model

- cpuarch

- disks

- dns

Get a single grain item:

[root@node1 salt]# salt 'node1' grains.item fqdn

node1:

----------

fqdn:

node1

Or use grains.get, which returns only the value of the grain:

[root@node1 salt]# salt 'node1' grains.get fqdn

node1:

node1

Show the minion's operating system:

[root@node1 salt]# salt 'node1' grains.get os

node1:

CentOS

Run a command on every minion whose operating system is CentOS (-G targets minions by grain):

[root@node1 salt]# salt -G os:CentOS cmd.run 'w'

node1:

13:04:59 up 3:32, 2 users, load average: 0.00, 0.00, 0.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

root pts/0 192.168.44.1 11:42 0.00s 0.54s 0.35s /usr/bin/python

root pts/1 192.168.44.1 11:49 28:30 0.04s 0.04s -bash

node2:

13:04:59 up 1 day, 29 min, 1 user, load average: 0.00, 0.00, 0.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

root pts/0 192.168.44.1 11:42 28:36 0.08s 0.08s -bash

Get the roles grain from all minions:

[root@node1 salt]# salt "*" grains.item roles

node1:

----------

roles:

- webserver

- memcache

node2:

----------

roles:

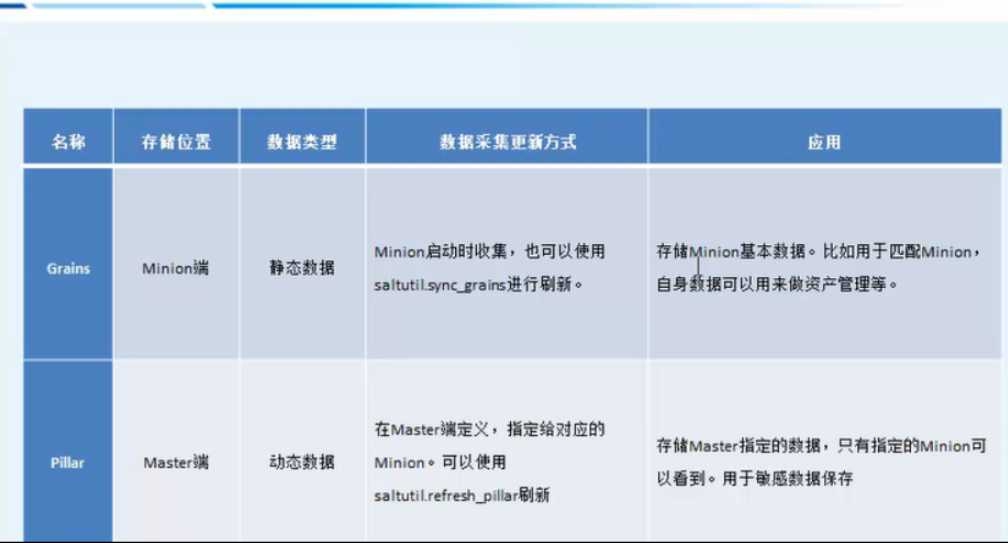

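Custom grains can be defined in the minion's configuration file. They act as labels on the minion, which you can then match to run an operation on just those minions:

[root@node1 salt]# egrep -v "^$|^#" /etc/salt/minion

master: 192.168.44.134
grains:
  roles:
    - webserver
    - memcache

After changing the configuration file, restart the minion for the change to take effect: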

[root@node1 salt]# /etc/init.d/salt-minion restart

Stopping salt-minion:root:node1 daemon: OK

Starting salt-minion:root:node1 daemon: OK

Run the operation:

[root@node1 salt]# salt -G "roles:memcache" cmd.run 'echo "hello node1"'

node1:

hello node1

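-G "roles:memcache" matches the roles grain just defined in the minion's configuration file.

If you prefer not to put grains in the minion configuration file, you can define them separately in /etc/salt/grains and target minions by them in the same way:

[root@node1 salt]# ll /etc/salt/grains    (this file must be created beforehand)

For example:

[root@node1 salt]# cat /etc/salt/grains
roles: nginx
[root@node1 salt]# /etc/init.d/salt-minion restart
Stopping salt-minion:root:node1 daemon: OK
Starting salt-minion:root:node1 daemon: OK
[root@node1 salt]# salt -G "roles:nginx" cmd.run 'echo "node1 nginx"'
No minions matched the target. No command was sent, no jid was assigned.
ERROR: No return received

Cause of the error: the key roles in /etc/salt/grains is the same as the roles key already defined under grains in the minion configuration file. The two conflict, and the value from the minion configuration wins, so roles:nginx never matches. Renaming the key in /etc/salt/grains solves the problem: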
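A conflict like this can be spotted before restarting the minion by comparing the key names in both files. The following is a rough sketch, not a YAML parser: `grain_conflicts` is a hypothetical helper that assumes the two-space indentation used in the configuration shown earlier and simple `key: value` lines in the grains file.

```shell
# Hypothetical helper: print grain keys defined both in a standalone grains
# file ($1) and under "grains:" in a minion configuration file ($2).
grain_conflicts() {
    grains_file="$1"
    minion_conf="$2"
    # Keys nested directly under the top-level "grains:" block.
    conf_keys=$(awk '
        /^grains:/ { in_block = 1; next }
        /^[^ #]/   { in_block = 0 }
        in_block && /^  [A-Za-z_][A-Za-z0-9_]*:/ {
            sub(/^  /, ""); sub(/:.*/, ""); print
        }
    ' "$minion_conf")
    # Top-level keys of the standalone grains file.
    awk '/^[A-Za-z_][A-Za-z0-9_]*:/ { sub(/:.*/, ""); print }' "$grains_file" |
    while read -r key; do
        if printf '%s\n' "$conf_keys" | grep -qx "$key"; then
            echo "$key"
        fi
    done
}
```

With the files from this walkthrough, `grain_conflicts /etc/salt/grains /etc/salt/minion` would print roles for the failing setup and nothing once the key is renamed.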

[root@node1 salt]# cat /etc/salt/grains

web: nginx

[root@node1 salt]# /etc/init.d/salt-minion restart

Stopping salt-minion:root:node1 daemon: OK

Starting salt-minion:root:node1 daemon: OK

[root@node1 salt]# salt -G "web:nginx" cmd.run 'echo "node1 nginx"'

node1:

node1 nginx

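Pillar: pillar is configured only on the master, in the master's configuration file.

By default no pillar data is exposed, so pillar.items returns nothing: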

[root@node1 salt]# salt "*" pillar.items

node1:

----------

node2:

----------

Enable pillar_opts in the master configuration file so that the master's options are exposed as pillar data:

[root@node1 ~]# egrep -v "^#|^$" /etc/salt/master

file_roots:
  base:
    - /srv/salt
pillar_opts: True

[root@node1 ~]# /etc/init.d/salt-master restart

Stopping salt-master daemon: [OK]

Starting salt-master daemon: [OK]

[root@node1 ~]# salt "*" pillar.items

node2:

----------

master:

----------

__role:

master

allow_minion_key_revoke:

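Pillar supports environments (base or others). Like states, pillar has a root directory and an entry file, the top file, which must be placed in the base environment. Enable the base environment in the master configuration:

[root@node1 ~]# egrep -v "^#|^$" /etc/salt/master

pillar_roots:
  base:
    - /srv/pillar

Create the directory:

[root@node1 ~]# mkdir /srv/pillar

Restart the master service:

[root@node1 ~]# /etc/init.d/salt-master restart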

[root@node1 pillar]# cat apache.sls

{% if grains['os'] == 'CentOS' %}
apache: httpd
{% elif grains['os'] == 'Debian' %}
apache: apache2
{% endif %}

[root@node1 pillar]# cat top.sls

base:
  '*':
    - apache
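The two files can also be created in one step. The sketch below writes the same apache.sls and top.sls into a pillar root. `init_pillar_root` is a hypothetical helper name, and the default path /srv/pillar is taken from the pillar_roots setting in this walkthrough. Note the plain ASCII quotes and the YAML indentation in top.sls, both of which Salt needs to parse the files.

```shell
# Hypothetical helper: create the pillar root with the two files used here.
init_pillar_root() {
    root="${1:-/srv/pillar}"
    mkdir -p "$root"
    # Pillar data that depends on the minion's os grain (rendered by Jinja).
    cat > "$root/apache.sls" <<'EOF'
{% if grains['os'] == 'CentOS' %}
apache: httpd
{% elif grains['os'] == 'Debian' %}
apache: apache2
{% endif %}
EOF
    # Top file: assign the apache pillar to every minion in the base environment.
    cat > "$root/top.sls" <<'EOF'
base:
  '*':
    - apache
EOF
}
```

After running `init_pillar_root` on the master, the pillar still has to be refreshed on the minions before it can be used for targeting.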

Set pillar_opts back from True to False, restart the master, and check whether the sls files written above have taken effect:

[root@node1 pillar]# salt '*' pillar.items

node2:

----------

apache:

httpd

node1:

----------

apache:

httpd

After defining pillar data, refresh it on the minions before using it:

[root@node1 pillar]# salt '*' saltutil.refresh_pillar

node2:

True

node1:

True

Pillar values can be used for targeting only after the refresh. -I targets minions by pillar:

[root@node1 pillar]# salt -I 'apache:httpd' test.ping

node2:

True

node1:

True

Original article: http://www.cnblogs.com/jsonhc/p/7591118.html