
From:https://developer.nvidia.com/content/life-triangle-nvidias-logical-pipeline

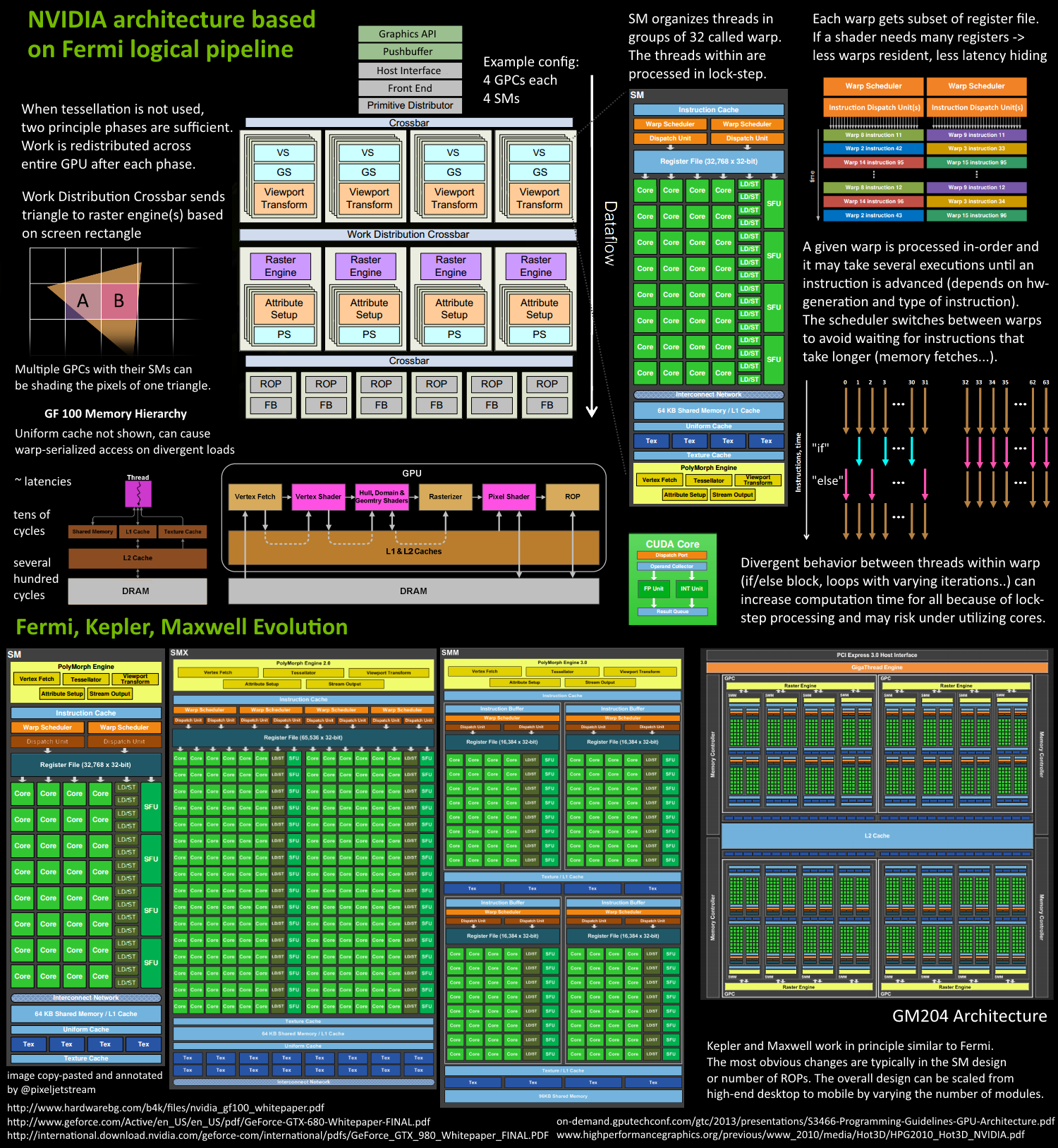

Almost five years have passed since the release of the groundbreaking Fermi architecture, so it might be time to refresh our picture of the principal graphics architecture beneath it. Fermi was the first NVIDIA GPU to implement a fully scalable graphics engine, and its core architecture can be found in Kepler as well as Maxwell. The following article, and especially the “compressed pipeline knowledge” image below, should serve as a primer based on various public materials about the GPU architecture, such as whitepapers or GTC tutorials. This article focuses on the graphics viewpoint of how the GPU works, although some principles, such as how shader program code gets executed, are the same for compute.

Why all this complexity? In graphics we have to deal with data amplification, which creates highly variable workloads. Each drawcall may generate a different number of triangles. The number of vertices after clipping differs from what the triangles were originally made of. After back-face and depth culling, not all triangles may need pixels on the screen. Depending on its screen size, a triangle can require millions of pixels or none at all.

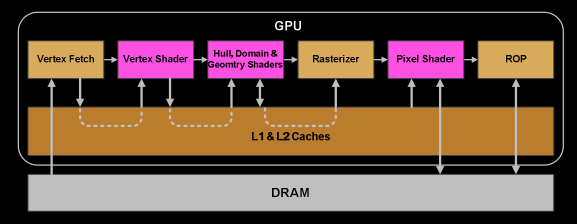

As a consequence, modern GPUs let their primitives (triangles, lines, points) follow a logical pipeline, not a physical pipeline. In the old days before G80's unified architecture (think DX9 hardware, PS3, Xbox 360), the pipeline was laid out on the chip as distinct stages, and work ran through them one after another. G80 essentially reused some units for both vertex and fragment shader computations, depending on the load, but it still had a serial process for primitive processing, rasterization and so on. With Fermi the pipeline became fully parallel, which means the chip implements a logical pipeline (the steps a triangle goes through) by reusing multiple engines on the chip.

Let's say we have two triangles, A and B. Parts of their work could be in different logical pipeline steps. A has already been transformed and needs to be rasterized. Some of its pixels could already be running pixel-shader instructions, while others are being rejected by the depth buffer (Z-cull), others are already being written to the framebuffer, and some may still be waiting. Alongside all that, we could be fetching the vertices of triangle B. So while each triangle has to go through the logical steps, many triangles can be actively processed at different steps of their lifetime. The job (getting a drawcall's triangles on screen) is split into many smaller tasks and even subtasks that can run in parallel. Each task is scheduled onto whatever resources are available, and these are not limited to tasks of one type (vertex shading can run in parallel with pixel shading).
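The A/B example can be played through with a toy scheduler. This is only a sketch under simplifying assumptions, not how the hardware schedules work: the stage names, the `simulate` function and the `units` parameter are made up for illustration, and a whole triangle moves through each stage at once, whereas on the GPU the individual pixels of one triangle can sit in different stages.

```python
# Logical steps a triangle passes through (names are illustrative).
STAGES = ["vertex_fetch", "vertex_shade", "rasterize", "pixel_shade", "rop_write"]


def simulate(arrivals, units=2):
    """Toy model: each tick, up to `units` triangles advance by one stage.

    arrivals maps a triangle name to the tick at which its work is submitted.
    Returns one list per tick with the (triangle, stage) pairs in flight.
    """
    pending = dict(arrivals)   # triangles not yet submitted
    stage_of = {}              # triangle -> index of the next stage to run
    timeline = []
    tick = 0
    while pending or stage_of:
        # Accept newly submitted triangles.
        for tri in [t for t, at in pending.items() if at <= tick]:
            stage_of[tri] = 0
            del pending[tri]
        # Any free unit takes any ready task, whatever its stage is.
        busy = []
        for tri in list(stage_of)[:units]:
            busy.append((tri, STAGES[stage_of[tri]]))
            stage_of[tri] += 1
            if stage_of[tri] == len(STAGES):
                del stage_of[tri]  # triangle has left the pipeline
        timeline.append(busy)
        tick += 1
    return timeline


timeline = simulate({"A": 0, "B": 2})
for tick, busy in enumerate(timeline):
    print(tick, busy)
```

At tick 2 the printout shows A being rasterized while the vertices of B are being fetched, which is the overlap described above.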

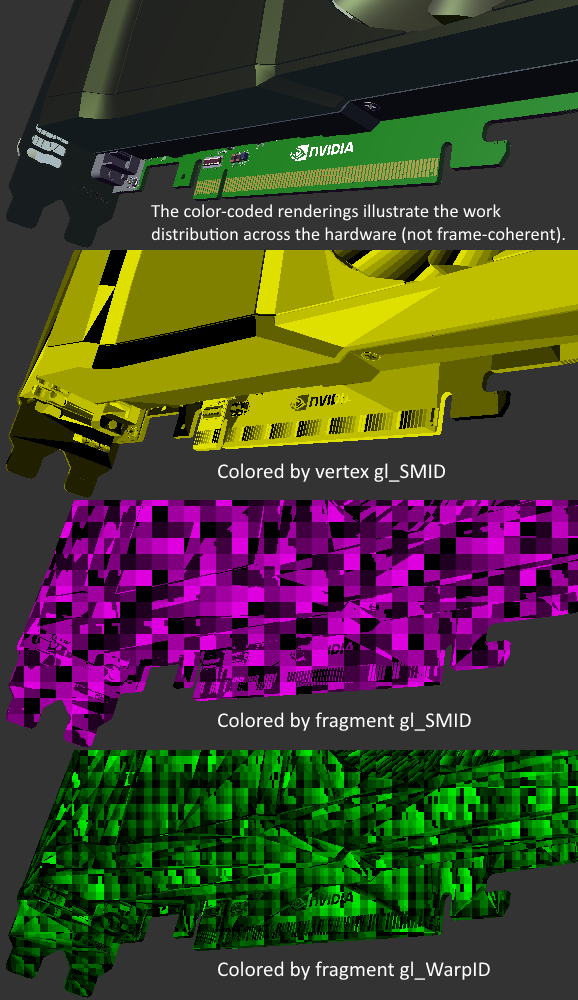

Think of a river that fans out: parallel pipeline streams that are independent of each other, each on its own timeline, some branching more than others. If we color-coded the units of a GPU by the triangle or drawcall they are currently working on, we would get multi-color blinkenlights :)

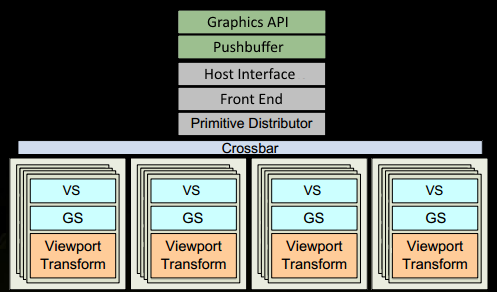

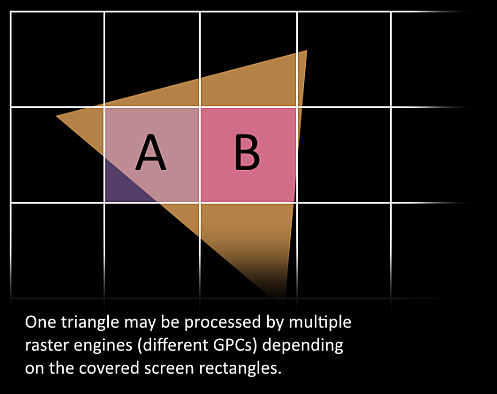

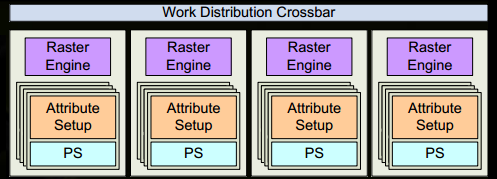

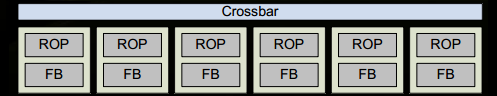

Since Fermi, NVIDIA GPUs have shared a similar principal architecture. There is a Giga Thread Engine, which manages all the work that is going on. The GPU is partitioned into multiple GPCs (Graphics Processing Clusters), each of which has multiple SMs (Streaming Multiprocessors) and one Raster Engine. There are many interconnects in this design, most notably a Crossbar that allows work to migrate across GPCs or to other functional units such as the ROP (render output unit) subsystems.

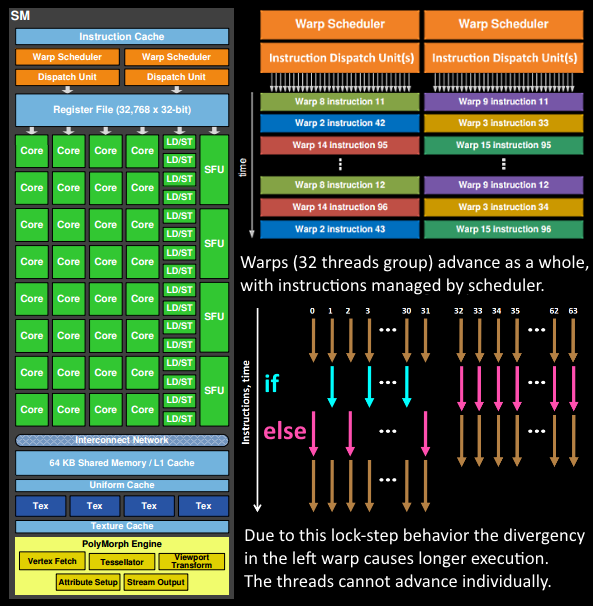

The work that a programmer thinks of (shader program execution) is done on the SMs. Each SM contains many Cores, which do the math operations for the threads. One thread could be a vertex- or pixel-shader invocation, for example. Those cores and other units are driven by Warp Schedulers, which manage a group of 32 threads as a warp and hand the instructions to be performed over to Dispatch Units. The code logic is handled by the scheduler and not inside a core itself, which just sees something like "sum register 4234 with register 4235 and store in 4230" from the dispatcher. A core itself is rather dumb compared to a CPU, where a core is pretty smart. The GPU puts the smartness into higher levels; it conducts the work of an entire ensemble (or multiple, if you will).
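To make the "dumb core, smart scheduler" split concrete, here is a minimal sketch of one instruction being dispatched to a warp. Only the warp size of 32 comes from the text; the tuple instruction format, the tiny register files and the names `make_warp` and `dispatch` are assumptions for illustration and do not reflect the real instruction set.

```python
WARP_SIZE = 32  # threads managed together as one warp (from the text)


def make_warp(num_registers=8):
    """Give every thread (lane) of the warp its own small register file."""
    return [[0] * num_registers for _ in range(WARP_SIZE)]


def dispatch(warp, instruction):
    """Issue ONE already-decoded instruction to all lanes of the warp.

    All program logic lives outside this function; the per-lane 'core'
    is just the arithmetic line that combines two registers.
    """
    op, dst, a, b = instruction
    for regs in warp:
        if op == "add":
            regs[dst] = regs[a] + regs[b]
        elif op == "mul":
            regs[dst] = regs[a] * regs[b]
        else:
            raise ValueError(f"unknown op {op!r}")


warp = make_warp()
for lane, regs in enumerate(warp):
    regs[1] = lane   # per-thread input, e.g. a vertex index
    regs[2] = 100    # value shared by all threads

# "sum register 1 with register 2 and store in 0", for all 32 threads at once
dispatch(warp, ("add", 0, 1, 2))
results = [regs[0] for regs in warp]
print(results[:4], "...", results[-1])
```

Every lane runs the same instruction on its own data, so the 32 results differ only because the inputs in register 1 differ.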

How many of these units are actually on the GPU (how many SMs per GPC, how many GPCs, and so on) depends on the chip configuration itself. As you can see above, GM204 has 4 GPCs with 4 SMs each, but Tegra X1, for example, has 1 GPC and 2 SMs, both with the Maxwell design. The SM design itself (number of cores, instruction units, schedulers...) has also changed from generation to generation (see first image) and has helped make the chips so efficient that they can be scaled from high-end desktop to notebook to mobile.
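The scaling arithmetic behind those two configurations is small enough to write down. The sketch below uses only the GPC and SM counts quoted above, plus the earlier statement that each GPC has one Raster Engine; the `ChipConfig` class is a hypothetical helper, not an NVIDIA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipConfig:
    """Hypothetical description of a chip built from identical GPCs."""
    name: str
    gpcs: int
    sms_per_gpc: int

    @property
    def total_sms(self) -> int:
        return self.gpcs * self.sms_per_gpc

    @property
    def raster_engines(self) -> int:
        # One Raster Engine per GPC, as described in the text.
        return self.gpcs


GM204 = ChipConfig("GM204", gpcs=4, sms_per_gpc=4)
TEGRA_X1 = ChipConfig("Tegra X1", gpcs=1, sms_per_gpc=2)

for chip in (GM204, TEGRA_X1):
    print(f"{chip.name}: {chip.total_sms} SMs, {chip.raster_engines} raster engine(s)")
```

The same building blocks give 16 SMs on the desktop part and 2 on the mobile part, which is the scalability the paragraph refers to.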

For the sake of simplicity, several details are omitted. We assume the drawcall references an index buffer and a vertex buffer that are already filled with data and live in the DRAM of the GPU, and that it uses only a vertex shader and a pixel shader (GL: fragment shader).

Phew! We are done: we have written some pixels into a render target. I hope this information was helpful for understanding some of the work and data flow within a GPU. It may also help explain why synchronization with the CPU really hurts. One has to wait until everything is finished and no new work is submitted (all units become idle), which means that when new work is sent, it takes a while until everything is fully under load again, especially on the big GPUs.

In the image below you can see how we rendered a CAD model and colored it by the different SM or warp IDs that contributed to the image (NV_shader_thread_group). The result is not frame-coherent, as the work distribution varies from frame to frame. The scene was rendered using many drawcalls, several of which may also be processed in parallel (using NSIGHT you can see some of that drawcall parallelism as well).
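The coloring itself is a simple id-to-color mapping. In the real shader the SM id would come from the extension (as far as I know it is exposed as `gl_SMIDNV`); the sketch below only shows one plausible CPU-side version of the mapping, and the function name `sm_color` and the choice of evenly spaced hues are assumptions, not the code used for the image.

```python
import colorsys


def sm_color(sm_id, sm_count):
    """Map an SM id to a distinct, fully saturated RGB color.

    Hues are spread evenly around the color wheel, so every SM gets
    its own color. Returns floats in the range [0, 1].
    """
    if not 0 <= sm_id < sm_count:
        raise ValueError("sm_id must be in [0, sm_count)")
    return colorsys.hsv_to_rgb(sm_id / sm_count, 1.0, 1.0)


# 16 SMs, as on the GM204 configuration mentioned earlier
palette = [sm_color(i, 16) for i in range(16)]
print(palette[0])
```

Writing such a color per pixel instead of the shaded result makes the work distribution visible, one color per SM.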

[Repost] Life of a triangle - NVIDIA's logical pipeline


Original post: http://www.cnblogs.com/hustztz/p/7592924.html