
1. Overview

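In the previous series we studied techniques for license plate recognition, which is a comparatively simple problem. Next, I plan to study face detection, face recognition, head pose estimation and eye detection. Face detection is the simplest of these. Dlib, for example, ships a ready-made detector, frontal_face_detector, that can be used directly, although it is not particularly fast. Professor Shiqi Yu's libfacedetection library offers a high detection rate and runs considerably faster; it is available on GitHub at https://github.com/ShiqiYu/libfacedetection . However, at the time of writing that library only shipped precompiled lib files, with no source code to study.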

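For learning and research purposes we would rather study the source code directly, so here we analyze face detection by reading the Dlib source. Dlib is a C++ machine learning library that implements many commonly used machine learning algorithms and comes with very detailed documentation and examples. The Dlib website is http://www.dlib.net . Let us start with a simple example that shows face detection in action: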

```cpp
#include <dlib/image_processing/frontal_face_detector.h>
#include <dlib/gui_widgets.h>
#include <dlib/image_io.h>
#include <iostream>
#include <string>
#include <vector>

using namespace dlib;
using namespace std;

int main()
{
    try
    {
        frontal_face_detector detector = get_frontal_face_detector();
        image_window win;

        string filePath = "E:\\dlib-18.16\\dlib-18.16\\examples\\faces\\2008_002079.jpg";
        cout << "processing image " << filePath << endl;
        array2d<unsigned char> img;
        load_image(img, filePath);
        // Make the image bigger by a factor of two.  This is useful since
        // the face detector looks for faces that are about 80 by 80 pixels
        // or larger.  Therefore, if you want to find faces that are smaller
        // than that then you need to upsample the image as we do here by
        // calling pyramid_up().  So this will allow it to detect faces that
        // are at least 40 by 40 pixels in size.  We could call pyramid_up()
        // again to find even smaller faces, but note that every time we
        // upsample the image we make the detector run slower since it must
        // process a larger image.
        pyramid_up(img);

        // Now tell the face detector to give us a list of bounding boxes
        // around all the faces it can find in the image.
        std::vector<rectangle> dets = detector(img);
        cout << "Number of faces detected: " << dets.size() << endl;

        // Now we show the image on the screen and the face detections as
        // red overlay boxes.
        win.clear_overlay();
        win.set_image(img);
        win.add_overlay(dets, rgb_pixel(255, 0, 0));

        cout << "Hit enter to process the next image..." << endl;
        cin.get();
    }
    catch (exception& e)
    {
        cout << "\nexception thrown!" << endl;
        cout << e.what() << endl;
    }
}
```

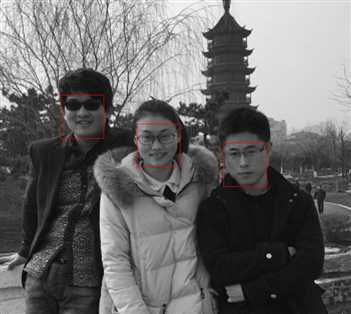

As the figure shows, frontal_face_detector finds every face in the image. The code also shows how simple the detector is to use, even though its internal logic is quite complex.

2. Code Analysis

Let us now trace through the code step by step to see how Dlib's face detector actually works.

object_detector

```cpp
typedef object_detector<scan_fhog_pyramid<pyramid_down<6> > > frontal_face_detector;
```

frontal_face_detector is declared in "frontal_face_detector.h". It is not a class of its own but a typedef: a particular instantiation of the object_detector class template. We will look at object_detector in more detail later.

Defining the scanner, which scans the image and extracts features

The class template scan_fhog_pyramid is declared in "scan_fhog_pyramid.h":

```cpp
template <typename Pyramid_type, typename Feature_extractor_type = default_fhog_feature_extractor>
class scan_fhog_pyramid : noncopyable { ... };
```

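The template parameter Pyramid_type selects the type of image pyramid. Here it is pyramid_down<6>, which downsamples at a ratio of 5/6 (in general, pyramid_down<N> scales each level by (N-1)/N): each pyramid level is 5/6 the size of the previous one, and repeatedly shrinking the image this way builds a multi-level pyramid. Downsampling stops once a level becomes smaller than the scanning window.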

The parameter Feature_extractor_type selects the feature extractor. By default, features are extracted with extract_fhog_features() from "fhog.h", whose prototype is:

```cpp
template <typename image_type, typename T, typename mm>
void extract_fhog_features(
    const image_type& img,
    array2d<matrix<T,31,1>,mm>& hog,
    int cell_size = 8,
    int filter_rows_padding = 1,
    int filter_cols_padding = 1
)
{
    impl_fhog::impl_extract_fhog_features(img, hog, cell_size, filter_rows_padding, filter_cols_padding);
}
```

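The HOG features computed by this function are Felzenszwalb's variant of HOG [1] (fhog for short): for every cell of 8×8 pixels (the default cell_size) it computes a 31-dimensional fhog descriptor and stores it in the hog array above for use in later stages.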

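HOG was introduced by Navneet Dalal. His 2005 CVPR paper with Bill Triggs, "Histograms of Oriented Gradients for Human Detection", made HOG famous almost overnight. Dalal also created the widely used INRIA person dataset, and his PhD thesis, "Finding People in Images and Videos", remains a primary source for anyone studying HOG.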

The idea behind HOG:

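In computer vision and digital image processing, the histogram of oriented gradients (HOG) is a feature descriptor based on shape and edge information, used for object detection. Its basic idea is that gradients capture the edges of objects in an image well, so the local appearance and shape of an image region can be characterized by the distribution of local gradient magnitudes and directions.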

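HOG feature extraction proceeds as follows. First, the color space is normalized to reduce the influence of illumination and background. The detection window is then divided into cells of equal size, and gradient information is extracted from each cell. Adjacent cells are grouped into larger, overlapping blocks, so that the overlapping edge information is used effectively when computing each block's histogram, and the gradient histograms within each block are normalized to further reduce the influence of background color and noise. Finally, the HOG features of all blocks in the window are collected into a single feature vector that represents the window.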

Color-space normalization:

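In practice, objects appear in different environments and under different lighting. Color-space normalization normalizes the color information of the whole image to reduce the influence of varying illumination and background; to make detection more robust, gamma and color-space normalization are applied as preprocessing before feature extraction. Dalal et al. evaluated several pixel representations, including grayscale, and found that the RGB and LAB color spaces give roughly the same, favorable results. They also tried two kinds of gamma normalization on each color channel, square-root and logarithmic compression, and found that this preprocessing had only a small effect on detection results. The image should not, however, be Gaussian-smoothed beforehand, because smoothing blurs object edges and degrades detection.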

Gradient computation:

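Edges are caused by abrupt changes in local image properties such as intensity, color and texture. Where neighboring pixels change little and the region is flat, the gradient magnitude is small; where they change sharply, it is large. In an image, the gradient corresponds to the first derivative. For a continuous image $f(x,y)$, the gradient at any point $(x,y)$ is a vector:

$$\nabla f(x,y) = \begin{bmatrix} G_x \\ G_y \end{bmatrix} = \begin{bmatrix} \partial f/\partial x \\ \partial f/\partial y \end{bmatrix}$$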

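where $G_x$ is the gradient along the x direction and $G_y$ is the gradient along the y direction. The gradient magnitude and direction angle are:

$$|\nabla f(x,y)| = \sqrt{G_x^2 + G_y^2}, \qquad \theta(x,y) = \arctan\frac{G_y}{G_x}$$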

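In a digital image, the gradient at a pixel is computed with finite differences. The one-dimensional centered difference mask $[-1, 0, 1]$ computes the gradient simply, quickly and effectively:

$$G_x(x,y) = H(x+1,y) - H(x-1,y), \qquad G_y(x,y) = H(x,y+1) - H(x,y-1)$$

where $G_x(x,y)$, $G_y(x,y)$ and $H(x,y)$ are the horizontal gradient, the vertical gradient and the gray value at pixel $(x,y)$. The gradient magnitude and direction at the pixel are then:

$$G(x,y) = \sqrt{G_x(x,y)^2 + G_y(x,y)^2}, \qquad \alpha(x,y) = \arctan\frac{G_y(x,y)}{G_x(x,y)}$$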

Computing the gradient histogram of each cell:

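The detection window is divided into non-overlapping cells of equal size, and the gradient of every pixel in each cell (magnitude and direction) is computed. Dalal et al. found experimentally that dividing the gradient direction range 0° to 180° evenly into 9 bins works well: more than 9 bins brings no significant improvement in detection performance but increases computation. Each pixel in a cell casts a weighted vote into the bin of the cell's orientation histogram that matches its gradient direction. The weight can be the gradient magnitude itself or a function of it, such as its square or square root. Dalal et al. found that the square or square root slightly lowers detection performance and that the plain gradient magnitude gives the most reliable results.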

Normalizing the gradient histograms over blocks:

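The gradient formulas show that the absolute gradient magnitude is easily affected by foreground/background contrast and by local illumination. To reduce this effect and detect more accurately, the cell histograms must be normalized locally. There are many normalization schemes, but they share the same idea: several cells are grouped into a larger block, and the block is slid over the cell grid from left to right and top to bottom like a sliding window. Neighboring block positions overlap, so the same cell contributes its gradient information to several different blocks, and each block is normalized separately. The choice of cell size and block size affects the final detection performance.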

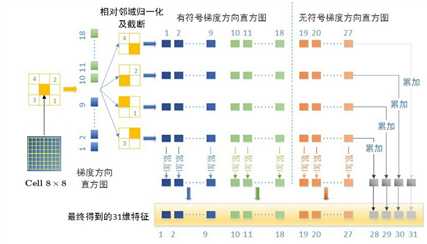

Having covered the basic concepts of the HOG descriptor, let us look at where the 31 dimensions of fhog come from.

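They break down as 31D fhog = 18D + 9D + 4D. In the code below, the 18 dimensions are contrast-sensitive orientation features (gradient directions over 0° to 360° quantized into 18 bins of 20°), the 9 dimensions are contrast-insensitive orientation features (opposite directions o and o+9 merged, i.e. directions over 0° to 180°), and the 4 dimensions are texture features, one per normalization block around the cell, each summing the cell's clipped, normalized contrast-sensitive values under that block's normalization.

The final 31-dimensional fhog feature of each cell is the concatenation of these three parts.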

The implementation in Dlib is as follows:

```cpp
template <
    typename image_type,
    typename out_type
    >
void impl_extract_fhog_features(
    const image_type& img_,
    out_type& hog,
    int cell_size,
    int filter_rows_padding,
    int filter_cols_padding
)
{
    const_image_view<image_type> img(img_);
    // make sure requires clause is not broken
    DLIB_ASSERT( cell_size > 0 &&
                 filter_rows_padding > 0 &&
                 filter_cols_padding > 0 ,
        "\t void extract_fhog_features()"
        << "\n\t Invalid inputs were given to this function. "
        << "\n\t cell_size: " << cell_size
        << "\n\t filter_rows_padding: " << filter_rows_padding
        << "\n\t filter_cols_padding: " << filter_cols_padding
        );

    /*
        This function implements the HOG feature extraction method described in
        the paper:
            P. Felzenszwalb, R. Girshick, D. McAllester, D. Ramanan
            Object Detection with Discriminatively Trained Part Based Models
            IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 32, No. 9, Sep. 2010

        Moreover, this function is derived from the HOG feature extraction code
        from the features.cc file in the voc-releaseX code (see
        http://people.cs.uchicago.edu/~rbg/latent/) which has the following
        license (note that the code has been modified to work with grayscale and
        color as well as planar and interlaced input and output formats):

        Copyright (C) 2011, 2012 Ross Girshick, Pedro Felzenszwalb
        Copyright (C) 2008, 2009, 2010 Pedro Felzenszwalb, Ross Girshick
        Copyright (C) 2007 Pedro Felzenszwalb, Deva Ramanan

        Permission is hereby granted, free of charge, to any person obtaining
        a copy of this software and associated documentation files (the
        "Software"), to deal in the Software without restriction, including
        without limitation the rights to use, copy, modify, merge, publish,
        distribute, sublicense, and/or sell copies of the Software, and to
        permit persons to whom the Software is furnished to do so, subject to
        the following conditions:

        The above copyright notice and this permission notice shall be
        included in all copies or substantial portions of the Software.

        THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,
        EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
        MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND
        NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE
        LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION
        OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION
        WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.
    */

    if (cell_size == 1)
    {
        impl_extract_fhog_features_cell_size_1(img_,hog,filter_rows_padding,filter_cols_padding);
        return;
    }

    // unit vectors used to compute gradient orientation
    matrix<double,2,1> directions[9];
    directions[0] =  1.0000, 0.0000;
    directions[1] =  0.9397, 0.3420;
    directions[2] =  0.7660, 0.6428;
    directions[3] =  0.500,  0.8660;
    directions[4] =  0.1736, 0.9848;
    directions[5] = -0.1736, 0.9848;
    directions[6] = -0.5000, 0.8660;
    directions[7] = -0.7660, 0.6428;
    directions[8] = -0.9397, 0.3420;

    // First we allocate memory for caching orientation histograms & their norms.
    const int cells_nr = (int)((double)img.nr()/(double)cell_size + 0.5);
    const int cells_nc = (int)((double)img.nc()/(double)cell_size + 0.5);

    if (cells_nr == 0 || cells_nc == 0)
    {
        hog.clear();
        return;
    }

    // We give hist extra padding around the edges (1 cell all the way around the
    // edge) so we can avoid needing to do boundary checks when indexing into it
    // later on.  So some statements assign to the boundary but those values are
    // never used.
    array2d<matrix<float,18,1> > hist(cells_nr+2, cells_nc+2);
    for (long r = 0; r < hist.nr(); ++r)
    {
        for (long c = 0; c < hist.nc(); ++c)
        {
            hist[r][c] = 0;
        }
    }

    array2d<float> norm(cells_nr, cells_nc);
    assign_all_pixels(norm, 0);

    // memory for HOG features
    const int hog_nr = std::max(cells_nr-2, 0);
    const int hog_nc = std::max(cells_nc-2, 0);
    if (hog_nr == 0 || hog_nc == 0)
    {
        hog.clear();
        return;
    }
    const int padding_rows_offset = (filter_rows_padding-1)/2;
    const int padding_cols_offset = (filter_cols_padding-1)/2;
    init_hog(hog, hog_nr, hog_nc, filter_rows_padding, filter_cols_padding);

    const int visible_nr = std::min((long)cells_nr*cell_size,img.nr())-1;
    const int visible_nc = std::min((long)cells_nc*cell_size,img.nc())-1;

    // First populate the gradient histograms
    for (int y = 1; y < visible_nr; y++)
    {
        const double yp = ((double)y+0.5)/(double)cell_size - 0.5;
        const int iyp = (int)std::floor(yp);
        const double vy0 = yp-iyp;
        const double vy1 = 1.0-vy0;
        int x;
        for (x = 1; x < visible_nc-3; x+=4)
        {
            simd4f xx(x,x+1,x+2,x+3);
            // v will be the length of the gradient vectors.
            simd4f grad_x, grad_y, v;
            get_gradient(y,x,img,grad_x,grad_y,v);

            // We will use bilinear interpolation to add into the histogram bins.
            // So first we precompute the values needed to determine how much each
            // pixel votes into each bin.
            simd4f xp = (xx+0.5)/(float)cell_size + 0.5;
            simd4i ixp = simd4i(xp);
            simd4f vx0 = xp-ixp;
            simd4f vx1 = 1.0f-vx0;

            v = sqrt(v);

            // Now snap the gradient to one of 18 orientations
            simd4f best_dot = 0;
            simd4f best_o = 0;
            for (int o = 0; o < 9; o++)
            {
                simd4f dot = grad_x*directions[o](0) + grad_y*directions[o](1);
                simd4f_bool cmp = dot>best_dot;
                best_dot = select(cmp,dot,best_dot);
                dot *= -1;
                best_o = select(cmp,o,best_o);

                cmp = dot>best_dot;
                best_dot = select(cmp,dot,best_dot);
                best_o = select(cmp,o+9,best_o);
            }

            // Add the gradient magnitude, v, to 4 histograms around pixel using
            // bilinear interpolation.
            vx1 *= v;
            vx0 *= v;
            // The amounts for each bin
            simd4f v11 = vy1*vx1;
            simd4f v01 = vy0*vx1;
            simd4f v10 = vy1*vx0;
            simd4f v00 = vy0*vx0;

            int32 _best_o[4]; simd4i(best_o).store(_best_o);
            int32 _ixp[4];    ixp.store(_ixp);
            float _v11[4];    v11.store(_v11);
            float _v01[4];    v01.store(_v01);
            float _v10[4];    v10.store(_v10);
            float _v00[4];    v00.store(_v00);

            hist[iyp+1]  [_ixp[0]  ](_best_o[0]) += _v11[0];
            hist[iyp+1+1][_ixp[0]  ](_best_o[0]) += _v01[0];
            hist[iyp+1]  [_ixp[0]+1](_best_o[0]) += _v10[0];
            hist[iyp+1+1][_ixp[0]+1](_best_o[0]) += _v00[0];

            hist[iyp+1]  [_ixp[1]  ](_best_o[1]) += _v11[1];
            hist[iyp+1+1][_ixp[1]  ](_best_o[1]) += _v01[1];
            hist[iyp+1]  [_ixp[1]+1](_best_o[1]) += _v10[1];
            hist[iyp+1+1][_ixp[1]+1](_best_o[1]) += _v00[1];

            hist[iyp+1]  [_ixp[2]  ](_best_o[2]) += _v11[2];
            hist[iyp+1+1][_ixp[2]  ](_best_o[2]) += _v01[2];
            hist[iyp+1]  [_ixp[2]+1](_best_o[2]) += _v10[2];
            hist[iyp+1+1][_ixp[2]+1](_best_o[2]) += _v00[2];

            hist[iyp+1]  [_ixp[3]  ](_best_o[3]) += _v11[3];
            hist[iyp+1+1][_ixp[3]  ](_best_o[3]) += _v01[3];
            hist[iyp+1]  [_ixp[3]+1](_best_o[3]) += _v10[3];
            hist[iyp+1+1][_ixp[3]+1](_best_o[3]) += _v00[3];
        }
        // Now process the right columns that don't fit into simd registers.
        for (; x < visible_nc; x++)
        {
            matrix<double,2,1> grad;
            double v;
            get_gradient(y,x,img,grad,v);

            // snap to one of 18 orientations
            double best_dot = 0;
            int best_o = 0;
            for (int o = 0; o < 9; o++)
            {
                const double dot = dlib::dot(directions[o], grad);
                if (dot > best_dot)
                {
                    best_dot = dot;
                    best_o = o;
                }
                else if (-dot > best_dot)
                {
                    best_dot = -dot;
                    best_o = o+9;
                }
            }

            v = std::sqrt(v);
            // add to 4 histograms around pixel using bilinear interpolation
            const double xp = ((double)x+0.5)/(double)cell_size - 0.5;
            const int ixp = (int)std::floor(xp);
            const double vx0 = xp-ixp;
            const double vx1 = 1.0-vx0;

            hist[iyp+1][ixp+1](best_o) += vy1*vx1*v;
            hist[iyp+1+1][ixp+1](best_o) += vy0*vx1*v;
            hist[iyp+1][ixp+1+1](best_o) += vy1*vx0*v;
            hist[iyp+1+1][ixp+1+1](best_o) += vy0*vx0*v;
        }
    }

    // compute energy in each block by summing over orientations
    for (int r = 0; r < cells_nr; ++r)
    {
        for (int c = 0; c < cells_nc; ++c)
        {
            for (int o = 0; o < 9; o++)
            {
                norm[r][c] += (hist[r+1][c+1](o) + hist[r+1][c+1](o+9)) * (hist[r+1][c+1](o) + hist[r+1][c+1](o+9));
            }
        }
    }

    const double eps = 0.0001;
    // compute features
    for (int y = 0; y < hog_nr; y++)
    {
        const int yy = y+padding_rows_offset;
        for (int x = 0; x < hog_nc; x++)
        {
            const simd4f z1(norm[y+1][x+1],
                            norm[y][x+1],
                            norm[y+1][x],
                            norm[y][x]);

            const simd4f z2(norm[y+1][x+2],
                            norm[y][x+2],
                            norm[y+1][x+1],
                            norm[y][x+1]);

            const simd4f z3(norm[y+2][x+1],
                            norm[y+1][x+1],
                            norm[y+2][x],
                            norm[y+1][x]);

            const simd4f z4(norm[y+2][x+2],
                            norm[y+1][x+2],
                            norm[y+2][x+1],
                            norm[y+1][x+1]);

            const simd4f nn = 0.2*sqrt(z1+z2+z3+z4+eps);
            const simd4f n = 0.1/nn;

            simd4f t = 0;

            const int xx = x+padding_cols_offset;

            // contrast-sensitive features
            for (int o = 0; o < 18; o+=3)
            {
                simd4f temp0(hist[y+1+1][x+1+1](o));
                simd4f temp1(hist[y+1+1][x+1+1](o+1));
                simd4f temp2(hist[y+1+1][x+1+1](o+2));
                simd4f h0 = min(temp0,nn)*n;
                simd4f h1 = min(temp1,nn)*n;
                simd4f h2 = min(temp2,nn)*n;
                set_hog(hog,o,xx,yy,   sum(h0));
                set_hog(hog,o+1,xx,yy, sum(h1));
                set_hog(hog,o+2,xx,yy, sum(h2));
                t += h0+h1+h2;
            }

            t *= 2*0.2357;

            // contrast-insensitive features
            for (int o = 0; o < 9; o+=3)
            {
                simd4f temp0 = hist[y+1+1][x+1+1](o)   + hist[y+1+1][x+1+1](o+9);
                simd4f temp1 = hist[y+1+1][x+1+1](o+1) + hist[y+1+1][x+1+1](o+9+1);
                simd4f temp2 = hist[y+1+1][x+1+1](o+2) + hist[y+1+1][x+1+1](o+9+2);
                simd4f h0 = min(temp0,nn)*n;
                simd4f h1 = min(temp1,nn)*n;
                simd4f h2 = min(temp2,nn)*n;
                set_hog(hog,o+18,xx,yy,   sum(h0));
                set_hog(hog,o+18+1,xx,yy, sum(h1));
                set_hog(hog,o+18+2,xx,yy, sum(h2));
            }

            float temp[4];
            t.store(temp);

            // texture features
            set_hog(hog,27,xx,yy, temp[0]);
            set_hog(hog,28,xx,yy, temp[1]);
            set_hog(hog,29,xx,yy, temp[2]);
            set_hog(hog,30,xx,yy, temp[3]);
        }
    }
}
```


Original article: http://www.cnblogs.com/freedomker/p/7428522.html