With HDFS already running (see the earlier post, Setting up Hadoop), building HBase on top of it is straightforward.

HBase depends on both HDFS and ZooKeeper, so we also run a ZooKeeper server of our own.

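Environment:

- master: the hostname and IP are both referred to as master
- d1-d3: hostnames/IPs d1, d2 and d3, collectively called "ds"
- ZooKeeper runs on d1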

All of the following steps are done on master.

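First, download HBase and extract it to /opt/hbase:

```shell
wget http://www-eu.apache.org/dist/hbase/stable/hbase-1.2.6-bin.tar.gz
# the tarball unpacks into hbase-1.2.6/, so rename it to /opt/hbase
tar -xzf hbase-1.2.6-bin.tar.gz -C /opt
mv /opt/hbase-1.2.6 /opt/hbase
```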

Then go into the conf directory (/opt/hbase/conf) and edit the configuration files.

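hbase-env.sh:

```shell
# The java implementation to use. Java 1.7+ required.
export JAVA_HOME=/usr/lib/jvm/java-8-oracle
# ZooKeeper runs as a separate server on d1, so HBase must not start or manage its own
export HBASE_MANAGES_ZK=false
```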

hbase-site.xml:

```xml
<configuration>
  <!-- HDFS directory for HBase data; host:port must match the NameNode (fs.defaultFS) -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <name>hbase.master</name>
    <value>master</value>
  </property>
  <!-- run in fully distributed mode -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- client port and quorum of the standalone ZooKeeper server on d1 -->
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>d1</value>
  </property>
  <!-- ZooKeeper session timeout, in milliseconds -->
  <property>
    <name>zookeeper.session.timeout</name>
    <value>60000000</value>
  </property>
  <property>
    <name>dfs.support.append</name>
    <value>true</value>
  </property>
</configuration>
```

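The main job of this file is to tell HBase where the Hadoop NameNode is (hbase.rootdir) and where ZooKeeper is (hbase.zookeeper.quorum plus the client port).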
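The short Python sketch below makes this concrete. It reads an hbase-site.xml like the one above and derives the NameNode address and the ZooKeeper connect string from it. It uses only the standard library, and the function names are hypothetical. It assumes the usual Hadoop-style `<property><name/><value/></property>` layout and a comma-separated quorum.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def read_site_xml(text):
    """Parse Hadoop-style <configuration><property>... XML into a {name: value} dict."""
    root = ET.fromstring(text)
    props = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def cluster_endpoints(props):
    """Derive where HBase expects the NameNode and ZooKeeper to be (hypothetical helper)."""
    rootdir = urlparse(props["hbase.rootdir"])  # e.g. hdfs://master:9000/hbase
    zk_port = props.get("hbase.zookeeper.property.clientPort", "2181")
    quorum = [h.strip() for h in props["hbase.zookeeper.quorum"].split(",") if h.strip()]
    return {
        "namenode": f"{rootdir.hostname}:{rootdir.port}",
        "hbase_dir": rootdir.path,
        "zookeeper": ",".join(f"{h}:{zk_port}" for h in quorum),
    }


# Trimmed-down copy of the config above, with only the properties this sketch reads
SAMPLE = """<configuration>
  <property><name>hbase.rootdir</name><value>hdfs://master:9000/hbase</value></property>
  <property><name>hbase.zookeeper.property.clientPort</name><value>2181</value></property>
  <property><name>hbase.zookeeper.quorum</name><value>d1</value></property>
</configuration>"""

if __name__ == "__main__":
    print(cluster_endpoints(read_site_xml(SAMPLE)))
    # {'namenode': 'master:9000', 'hbase_dir': '/hbase', 'zookeeper': 'd1:2181'}
```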

regionservers: list the HBase nodes (RegionServers), one per line:

```
d1
d2
d3
```
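The file format is one hostname per line, and start-hbase.sh uses it to decide which hosts get a RegionServer. Below is a small Python sketch that writes the file. The helper names are hypothetical, and dropping blanks and duplicates is this sketch's own choice.

```python
from pathlib import Path


def render_regionservers(hosts):
    """Return regionservers file content: one hostname per line, blanks and duplicates dropped."""
    unique = []
    for host in hosts:
        host = host.strip()
        if host and host not in unique:
            unique.append(host)
    return "".join(f"{host}\n" for host in unique)


def write_regionservers(conf_dir, hosts):
    """Write conf_dir/regionservers (e.g. /opt/hbase/conf/regionservers) and return its path."""
    path = Path(conf_dir) / "regionservers"
    path.write_text(render_regionservers(hosts))
    return path


if __name__ == "__main__":
    print(render_regionservers(["d1", "d2", "d3"]), end="")
```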

Then copy /opt/hbase to each of the ds nodes with scp.
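Here is a Python sketch of that copy step, assuming passwordless SSH from master to d1-d3 and a writable /opt on each node. It builds one `scp -r /opt/hbase host:/opt/` command per host. By default it only prints the commands; with `dry_run=False` it runs them and stops at the first failure. The function names are hypothetical.

```python
import shlex
import subprocess


def scp_commands(src, hosts, dest_dir="/opt"):
    """Build one `scp -r src host:dest_dir/` argv per host; /opt/hbase lands at host:/opt/hbase."""
    return [["scp", "-r", src, f"{host}:{dest_dir}/"] for host in hosts]


def distribute(src, hosts, dest_dir="/opt", dry_run=True):
    """Print the commands (dry run) or execute them, raising on the first failed copy."""
    for cmd in scp_commands(src, hosts, dest_dir):
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    distribute("/opt/hbase", ["d1", "d2", "d3"])  # dry run: only prints the scp commands
```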

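Back on master, run start-hbase.sh to start the whole cluster: the HMaster on master and a RegionServer on every host listed in regionservers.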

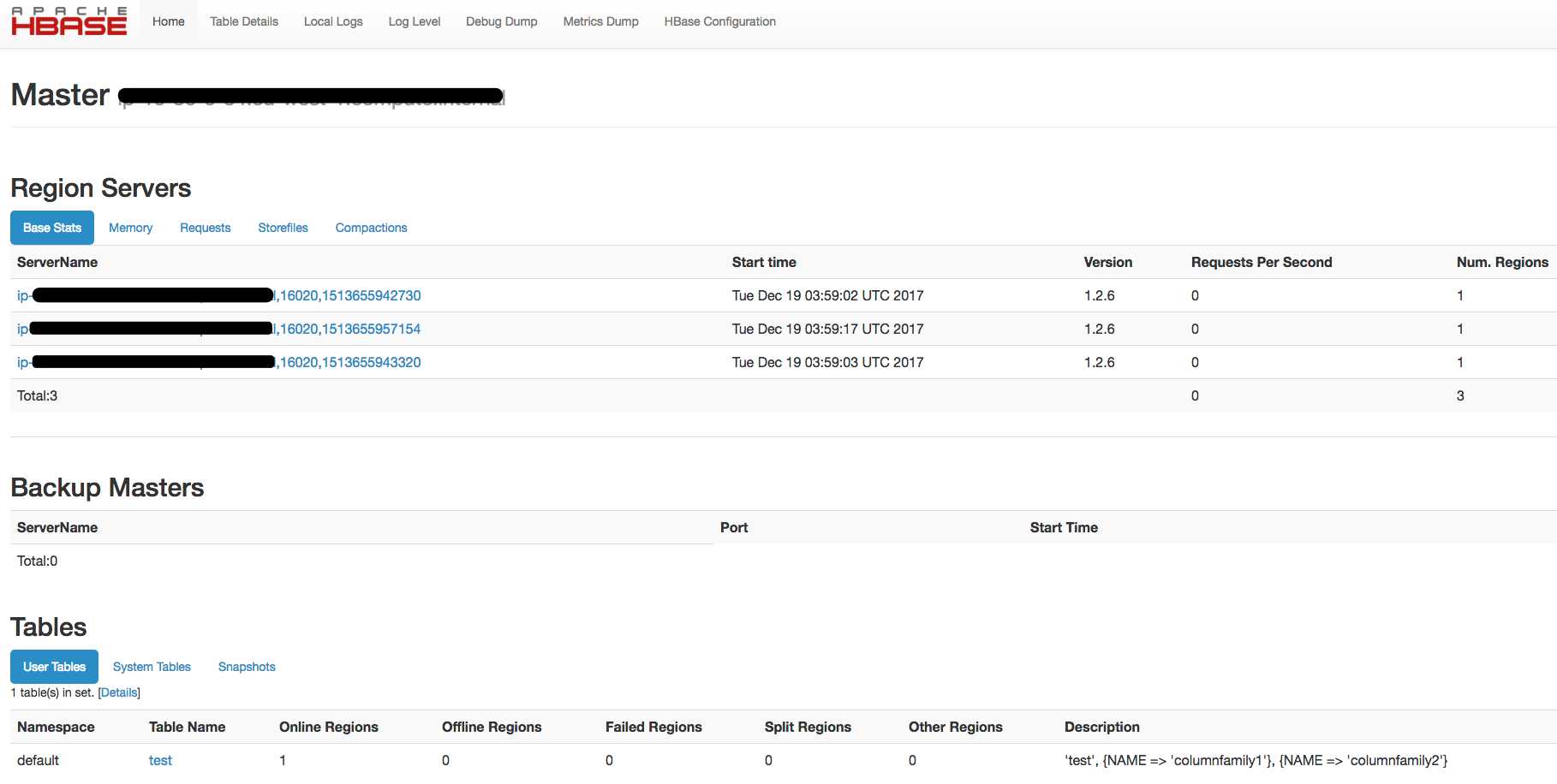

The built-in web UI is at http://master:16010/master-status
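Besides the web UI, a quick way to confirm that everything came up is to probe the TCP ports. The Python sketch below does this. Port 16010 comes from the URL above, and 9000 and 2181 come from hbase-site.xml. Port 16030 is assumed to be the HBase 1.x default RegionServer web UI port, so adjust it if your setup differs. The endpoint list and function names are hypothetical.

```python
import socket

# (label, host, port) for this cluster layout; see the lead-in for where each port comes from
ENDPOINTS = [
    ("HMaster web UI", "master", 16010),
    ("HDFS NameNode", "master", 9000),
    ("ZooKeeper", "d1", 2181),
] + [("RegionServer web UI", host, 16030) for host in ("d1", "d2", "d3")]


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, or hostname not resolvable
        return False


def check_cluster(endpoints=ENDPOINTS):
    """Map "label host:port" to whether the port accepted a connection."""
    return {f"{label} {host}:{port}": is_port_open(host, port) for label, host, port in endpoints}


if __name__ == "__main__":
    for name, ok in check_cluster().items():
        print(("OK   " if ok else "DOWN ") + name)
```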

Now let's try some common data operations in the HBase shell:

```
xxx$ hbase shell
...
Type "exit<RETURN>" to leave the HBase Shell
Version 1.2.6, rUnknown, Mon May 29 02:25:32 CDT 2017

hbase(main):001:0> list
TABLE
test
1 row(s) in 0.1550 seconds

=> ["test"]

hbase(main):002:0> create 'table1','cf1','cf2'
0 row(s) in 2.3260 seconds

=> Hbase::Table - table1

hbase(main):003:0> put 'table1','row1','cf1:c1','i am cf1.c1 value'
0 row(s) in 0.1480 seconds

hbase(main):004:0> put 'table1','row1','cf1:c2','i am cf1.c2 value'
0 row(s) in 0.0090 seconds

hbase(main):005:0> put 'table1','row1','cf2:c1','i am cf2.c1 value'
0 row(s) in 0.0070 seconds

hbase(main):007:0> put 'table1','row2','cf2:c4','i am cf2.c4 value'
0 row(s) in 0.0050 seconds

hbase(main):008:0> scan 'table1'
ROW COLUMN+CELL
row1 column=cf1:c1, timestamp=1513669511645, value=i am cf1.c1 value
row1 column=cf1:c2, timestamp=1513669522464, value=i am cf1.c2 value
row1 column=cf2:c1, timestamp=1513669539419, value=i am cf2.c1 value
row2 column=cf2:c4, timestamp=1513669561824, value=i am cf2.c4 value
2 row(s) in 0.0140 seconds
```
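The scan output reflects HBase's data model. Each row key maps to cells named family:qualifier, and each cell can hold several timestamped versions. The column families (cf1, cf2) are fixed when the table is created, but qualifiers such as c4 can be anything. Rows come back sorted by key, and cells within a row are sorted by family and then qualifier. The toy Python model below is hypothetical and only meant to illustrate that model. It compares keys as Python strings, whereas HBase compares raw bytes, but the two give the same order for ASCII keys like these.

```python
from collections import defaultdict


class ToyTable:
    """Hypothetical in-memory model of an HBase table, for illustration only:
    row key -> "family:qualifier" -> list of (timestamp, value) versions."""

    def __init__(self, *families):
        self.families = set(families)
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row, column, value, ts):
        family = column.split(":", 1)[0]
        # Families are fixed at create time; qualifiers (c1, c4, ...) are free-form.
        if family not in self.families:
            raise KeyError(f"unknown column family {family!r}")
        self.rows[row][column].append((ts, value))

    def scan(self):
        """Yield (row, column, ts, value): rows sorted, cells by (family, qualifier), newest version."""
        for row in sorted(self.rows):
            for column in sorted(self.rows[row], key=lambda c: tuple(c.split(":", 1))):
                ts, value = max(self.rows[row][column])  # highest timestamp wins
                yield row, column, ts, value


def load_table1():
    """Replay the four puts from the session above (timestamps copied from its scan output)."""
    t = ToyTable("cf1", "cf2")
    t.put("row1", "cf1:c1", "i am cf1.c1 value", 1513669511645)
    t.put("row1", "cf1:c2", "i am cf1.c2 value", 1513669522464)
    t.put("row1", "cf2:c1", "i am cf2.c1 value", 1513669539419)
    t.put("row2", "cf2:c4", "i am cf2.c4 value", 1513669561824)
    return t


if __name__ == "__main__":
    for row, col, ts, val in load_table1().scan():
        print(f"{row} column={col}, timestamp={ts}, value={val}")
```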

```
hbase(main):011:0> scan 'table1', {ROWPREFIXFILTER => 'row2'}
ROW COLUMN+CELL
row2 column=cf2:c4, timestamp=1513669561824, value=i am cf2.c4 value
1 row(s) in 0.0100 seconds
```
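As far as I know, ROWPREFIXFILTER is shorthand for the Java client's Scan.setRowPrefixFilter. That method does not test every row. It turns the prefix into a start row (the prefix itself) and a stop row (the smallest key that sorts after every key with that prefix), so the scan only reads that key range. The Python sketch below mirrors that conversion under this assumption. The function names are hypothetical.

```python
import bisect


def prefix_to_range(prefix):
    """Return (start_row, stop_row) for a row-key prefix; b"" as stop_row means 'end of table'.

    stop_row is the smallest key sorting after every key that starts with prefix:
    drop trailing 0xFF bytes, then increment the last remaining byte.
    """
    end = len(prefix)
    while end > 0 and prefix[end - 1] == 0xFF:
        end -= 1
    if end == 0:
        return prefix, b""  # empty or all-0xFF prefix: no finite stop row, scan to the end
    return prefix, prefix[:end - 1] + bytes([prefix[end - 1] + 1])


def prefix_scan(sorted_keys, prefix):
    """Select keys in [start_row, stop_row) from an already-sorted list, as a range scan would."""
    start, stop = prefix_to_range(prefix)
    lo = bisect.bisect_left(sorted_keys, start)
    hi = len(sorted_keys) if stop == b"" else bisect.bisect_left(sorted_keys, stop)
    return sorted_keys[lo:hi]


if __name__ == "__main__":
    print(prefix_to_range(b"row2"))  # (b'row2', b'row3')
```

Because only the [start_row, stop_row) range is read, a prefix scan stays cheap even on a large table, as long as the prefix is a leading part of the row key.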

```
hbase(main):013:0> create 'table2','xx'
0 row(s) in 1.2270 seconds

hbase(main):014:0> list
TABLE
table1
table2
test
3 row(s) in 0.0040 seconds

hbase(main):016:0> disable 'table2'
0 row(s) in 2.2380 seconds

hbase(main):017:0> drop 'table2'
0 row(s) in 1.2390 seconds

hbase(main):018:0> list
TABLE
table1
test
2 row(s) in 0.0060 seconds
```

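The session above covers creating and dropping tables, inserting data, and filtered queries. A table must be disabled before drop will remove it, which is why disable comes first.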
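You can also replay a session like this non-interactively by writing the commands to a file and passing it to the shell (hbase shell <file>). The Python sketch below renders such a file. The helper names are hypothetical. The quoting assumes the shell, which is JRuby, treats arguments as Ruby single-quoted strings. The trailing exit is also an assumption: it is there so the shell quits after the script instead of staying at the prompt.

```python
def rb_str(s):
    """Quote s as a Ruby single-quoted literal: escape backslashes and single quotes."""
    return "'" + s.replace("\\", "\\\\").replace("'", "\\'") + "'"


def call(cmd, *args, opts=None):
    """Render one shell command, e.g. call("put", "t", "r", "cf:c", "v") -> put 't', 'r', 'cf:c', 'v'."""
    parts = [rb_str(a) for a in args]
    if opts:
        parts.append("{" + ", ".join(f"{k} => {rb_str(v)}" for k, v in opts.items()) + "}")
    return cmd + (" " + ", ".join(parts) if parts else "")


def demo_script():
    """The session above as a script file body."""
    lines = [
        call("create", "table1", "cf1", "cf2"),
        call("put", "table1", "row1", "cf1:c1", "i am cf1.c1 value"),
        call("put", "table1", "row2", "cf2:c4", "i am cf2.c4 value"),
        call("scan", "table1", opts={"ROWPREFIXFILTER": "row2"}),
        call("create", "table2", "xx"),
        call("disable", "table2"),  # drop refuses a table that is still enabled
        call("drop", "table2"),
        call("list"),
        "exit",  # assumption: without it the shell stays interactive after the script
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(demo_script(), end="")
```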

As with Hadoop, the data nodes only need SSH set up; everything else is done on master.